Archived release notes

Summary

Azure HDInsight is one of the most popular services among enterprise customers for open-source analytics on Azure. Subscribe to the HDInsight Release Notes for up-to-date information on HDInsight and all HDInsight versions.

To subscribe, click the “watch” button in the banner and watch out for HDInsight Releases.

Release Information

Release date: Aug 30, 2024

Note

This is a Hotfix / maintenance release for Resource Provider. For more information see, Resource Provider.

Azure HDInsight periodically releases maintenance updates for delivering bug fixes, performance enhancements, and security patches ensuring you stay up to date with these updates guarantees optimal performance and reliability.

This release note applies to

HDInsight 5.1 version.

HDInsight 5.0 version.

HDInsight 4.0 version.

HDInsight release will be available to all regions over several days. This release note is applicable for image number 2407260448. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see HDInsight 5.x component versions.

Issue fixed

- Default DB bug fix.

Coming soon

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

- Retirement Notifications for HDInsight 4.0 and HDInsight 5.0.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A.

We're listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community.

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: Aug 09, 2024

This release note applies to

HDInsight 5.1 version.

HDInsight 5.0 version.

HDInsight 4.0 version.

HDInsight release will be available to all regions over several days. This release note is applicable for image number 2407260448. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see HDInsight 5.x component versions.

Updates

Addition of Azure Monitor Agent for Log Analytics in HDInsight

Addition of SystemMSI and Automated DCR for Log analytics, given the deprecation of New Azure Monitor experience (preview).

Note

Effective Image number 2407260448, customers using portal for log analytics will have default Azure Monitor Agent experience. In case you wish to switch to Azure Monitor experience (preview), you can pin your clusters to old images by creating a support request.

Release date: Jul 05, 2024

Note

This is a Hotfix / maintenance release for Resource Provider. For more information see, Resource Provider

Fixed issues

HOBO tags overwrite user tags.

- HOBO tags overwrite user tags on sub-resources in HDInsight cluster creation.

Release date: Jun 19, 2024

This release note applies to

HDInsight 5.1 version.

HDInsight 5.0 version.

HDInsight 4.0 version.

HDInsight release will be available to all regions over several days. This release note is applicable for image number 2406180258. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see HDInsight 5.x component versions.

Fixed issues

Security enhancements

Improvements in the HDInsight Log Analytics with System Managed Identity support for HDInsight Resource Provider.

Addition of new activity to upgrade the

mdsdagent version for old image (created before 2024).Enabling MISE in gateway as part of the continued improvements for MSAL Migration.

Incorporate Spark Thrift Server

Httpheader hiveConfto the Jetty HTTP ConnectionFactory.Revert RANGER-3753 and RANGER-3593.

The

setOwnerUserimplementation given in Ranger 2.3.0 release has a critical regression issue when being used by Hive. In Ranger 2.3.0, when HiveServer2 tries to evaluate the policies, Ranger Client tries to get the owner of the hive table by calling the Metastore in the setOwnerUser function which essentially makes call to storage to check access for that table. This issue causes the queries to run slow when Hive runs on 2.3.0 Ranger.

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

- Retirement Notifications for HDInsight 4.0 and HDInsight 5.0.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A.

We're listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community.

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: May 16, 2024

This release note applies to

HDInsight 5.0 version.

HDInsight 4.0 version.

HDInsight release will be available to all regions over several days. This release note is applicable for image number 2405081840. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see HDInsight 5.x component versions.

Fixed issues

- Added API in gateway to get token for Keyvault, as part of the SFI initiative.

- In the new Log monitor

HDInsightSparkLogstable, for log typeSparkDriverLog, some of the fields were missing. For example,LogLevel & Message. This release adds the missing fields to schemas and fixed formatting forSparkDriverLog. - Livy logs not available in Log Analytics monitoring

SparkDriverLogtable, which was due to an issue with Livy log source path and log parsing regex inSparkLivyLogconfigs. - Any HDInsight cluster, using ADLS Gen2 as a primary storage account can leverage MSI based access to any of the Azure resources (for example, SQL, Keyvaults) which is used within the application code.

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

- Retirement Notifications for HDInsight 4.0 and HDInsight 5.0.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A.

We're listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community.

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: April 15, 2024

This release note applies to

HDInsight 5.1 version.

HDInsight release will be available to all regions over several days. This release note is applicable for image number 2403290825. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see HDInsight 5.x component versions.

Fixed issues

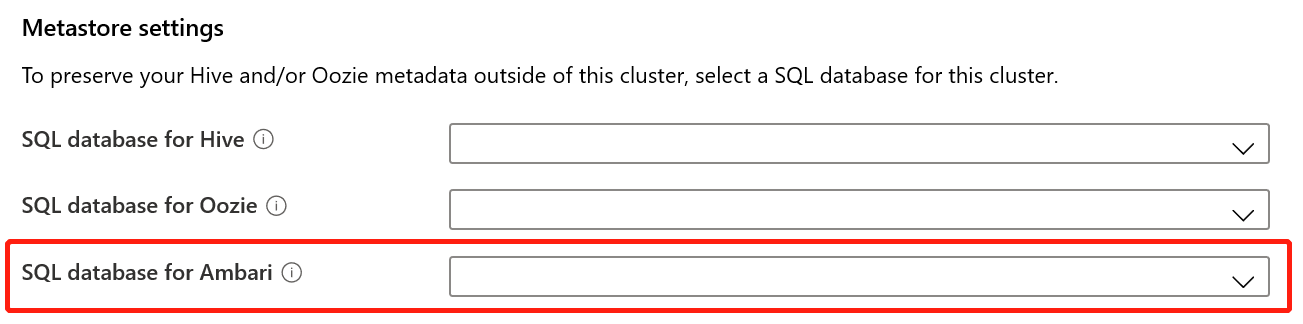

- Bug fixes for Ambari DB, Hive Warehouse Controller (HWC), Spark, HDFS

- Bug fixes for Log analytics module for HDInsightSparkLogs

- CVE Fixes for HDInsight Resource Provider.

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

- Retirement Notifications for HDInsight 4.0 and HDInsight 5.0.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A.

We're listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community.

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: February 15, 2024

This release applies to HDInsight 4.x and 5.x versions. HDInsight release will be available to all regions over several days. This release is applicable for image number 2401250802. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see

New features

- Apache Ranger support for Spark SQL in Spark 3.3.0 (HDInsight version 5.1) with Enterprise security package. Learn more about it here.

Fixed issues

- Security fixes from Ambari and Oozie components

Coming soon

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A

We are listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Next steps

- Azure HDInsight: Frequently asked questions

- Configure the OS patching schedule for Linux-based HDInsight clusters

- Previous release note

Azure HDInsight is one of the most popular services among enterprise customers for open-source analytics on Azure. If you would like to subscribe on release notes, watch releases on this GitHub repository.

Release date: January 10, 2024

This hotfix release applies to HDInsight 4.x and 5.x versions. HDInsight release will be available to all regions over several days. This release is applicable for image number 2401030422. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

Note

Ubuntu 18.04 is supported under Extended Security Maintenance(ESM) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

For workload specific versions, see

Fixed issues

- Security fixes from Ambari and Oozie components

Coming soon

Coming soon

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A

We are listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: October 26, 2023

This release applies to HDInsight 4.x and 5.x HDInsight release will be available to all regions over several days. This release is applicable for image number 2310140056. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

For workload specific versions, see

What's new

HDInsight announces the General availability of HDInsight 5.1 starting November 1, 2023. This release brings in a full stack refresh to the open source components and the integrations from Microsoft.

- Latest Open Source Versions – HDInsight 5.1 comes with the latest stable open-source version available. Customers can benefit from all latest open source features, Microsoft performance improvements, and Bug fixes.

- Secure – The latest versions come with the most recent security fixes, both open-source security fixes and security improvements by Microsoft.

- Lower TCO – With performance enhancements customers can lower the operating cost, along with enhanced autoscale.

Cluster permissions for secure storage

- Customers can specify (during cluster creation) whether a secure channel should be used for HDInsight cluster nodes to connect the storage account.

HDInsight Cluster Creation with Custom VNets.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

Microsoft Network/virtualNetworks/subnets/join/actionto perform create operations. Customer might face creation failures if this check is not enabled.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

Non-ESP ABFS clusters [Cluster Permissions for Word Readable]

- Non-ESP ABFS clusters restrict non-Hadoop group users from executing Hadoop commands for storage operations. This change improves cluster security posture.

In-line quota update.

- Now you can request quota increase directly from the My Quota page, with the direct API call it is much faster. In case the API call fails, you can create a new support request for quota increase.

Coming soon

Coming soon

The max length of cluster name will be changed to 45 from 59 characters, to improve the security posture of clusters. This change will be rolled out to all regions starting upcoming release.

Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we will retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs).

- To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A

We are listening: You’re welcome to add more ideas and other topics here and vote for them - HDInsight Ideas and follow us for more updates on AzureHDInsight Community

Note

This release addresses the following CVEs released by MSRC on September 12, 2023. The action is to update to the latest image 2308221128 or 2310140056. Customers are advised to plan accordingly.

| CVE | Severity | CVE Title | Remark |

|---|---|---|---|

| CVE-2023-38156 | Important | Azure HDInsight Apache Ambari Elevation of Privilege Vulnerability | Included on image 2308221128 or 2310140056 |

| CVE-2023-36419 | Important | Azure HDInsight Apache Oozie Workflow Scheduler Elevation of Privilege Vulnerability | Apply Script action on your clusters, or update to 2310140056 image |

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: September 7, 2023

This release applies to HDInsight 4.x and 5.x HDInsight release will be available to all regions over several days. This release is applicable for image number 2308221128. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

For workload specific versions, see

Important

This release addresses the following CVEs released by MSRC on September 12, 2023. The action is to update to the latest image 2308221128. Customers are advised to plan accordingly.

| CVE | Severity | CVE Title | Remark |

|---|---|---|---|

| CVE-2023-38156 | Important | Azure HDInsight Apache Ambari Elevation of Privilege Vulnerability | Included on 2308221128 image |

| CVE-2023-36419 | Important | Azure HDInsight Apache Oozie Workflow Scheduler Elevation of Privilege Vulnerability | Apply Script action on your clusters |

Coming soon

- The max length of cluster name will be changed to 45 from 59 characters, to improve the security posture of clusters. This change will be implemented by September 30, 2023.

- Cluster permissions for secure storage

- Customers can specify (during cluster creation) whether a secure channel should be used for HDInsight cluster nodes to contact the storage account.

- In-line quota update.

- Request quotas increase directly from the My Quota page, which will be a direct API call, which is faster. If the APdI call fails, then customers need to create a new support request for quota increase.

- HDInsight Cluster Creation with Custom VNets.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

Microsoft Network/virtualNetworks/subnets/join/actionto perform create operations. Customers would need to plan accordingly as this change would be a mandatory check to avoid cluster creation failures before September 30, 2023.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

- Basic and Standard A-series VMs Retirement.

- On August 31, 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs). To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before August 31, 2024.

- Non-ESP ABFS clusters [Cluster Permissions for Word Readable]

- Plan to introduce a change in non-ESP ABFS clusters, which restricts non-Hadoop group users from executing Hadoop commands for storage operations. This change to improve cluster security posture. Customers need to plan for the updates before September 30, 2023.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A

You’re welcome to add more proposals and ideas and other topics here and vote for them - HDInsight Community (azure.com).

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: July 25, 2023

This release applies to HDInsight 4.x and 5.x HDInsight release will be available to all regions over several days. This release is applicable for image number 2307201242. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.1: Ubuntu 18.04.5 LTS Linux Kernel 5.4

For workload specific versions, see

What's new

What's new

- HDInsight 5.1 is now supported with ESP cluster.

- Upgraded version of Ranger 2.3.0 and Oozie 5.2.1 are now part of HDInsight 5.1

- The Spark 3.3.1 (HDInsight 5.1) cluster comes with Hive Warehouse Connector (HWC) 2.1, which works together with the Interactive Query (HDInsight 5.1) cluster.

- Ubuntu 18.04 is supported under ESM(Extended Security Maintenance) by the Azure Linux team for Azure HDInsight July 2023, release onwards.

Important

This release addresses the following CVEs released by MSRC on August 8, 2023. The action is to update to the latest image 2307201242. Customers are advised to plan accordingly.

| CVE | Severity | CVE Title |

|---|---|---|

| CVE-2023-35393 | Important | Azure Apache Hive Spoofing Vulnerability |

| CVE-2023-35394 | Important | Azure HDInsight Jupyter Notebook Spoofing Vulnerability |

| CVE-2023-36877 | Important | Azure Apache Oozie Spoofing Vulnerability |

| CVE-2023-36881 | Important | Azure Apache Ambari Spoofing Vulnerability |

| CVE-2023-38188 | Important | Azure Apache Hadoop Spoofing Vulnerability |

Coming soon

Coming soon

- The max length of cluster name will be changed to 45 from 59 characters, to improve the security posture of clusters. Customers need to plan for the updates before 30, September 2023.

- Cluster permissions for secure storage

- Customers can specify (during cluster creation) whether a secure channel should be used for HDInsight cluster nodes to contact the storage account.

- In-line quota update.

- Request quotas increase directly from the My Quota page, which will be a direct API call, which is faster. If the API call fails, then customers need to create a new support request for quota increase.

- HDInsight Cluster Creation with Custom VNets.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

Microsoft Network/virtualNetworks/subnets/join/actionto perform create operations. Customers would need to plan accordingly as this change would be a mandatory check to avoid cluster creation failures before 30, September 2023.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

- Basic and Standard A-series VMs Retirement.

- On 31 August 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs). To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before 31, August 2024.

- Non-ESP ABFS clusters [Cluster Permissions for Word Readable]

- Plan to introduce a change in non-ESP ABFS clusters, which restricts non-Hadoop group users from executing Hadoop commands for storage operations. This change to improve cluster security posture. Customers need to plan for the updates before 30 September 2023.

If you have any more questions, contact Azure Support.

You can always ask us about HDInsight on Azure HDInsight - Microsoft Q&A

You’re welcome to add more proposals and ideas and other topics here and vote for them - HDInsight Community (azure.com) and follow us for more updates on X

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: May 08, 2023

This release applies to HDInsight 4.x and 5.x HDInsight release is available to all regions over several days. This release is applicable for image number 2304280205. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

For workload specific versions, see

![]()

Azure HDInsight 5.1 updated with

- Apache HBase 2.4.11

- Apache Phoenix 5.1.2

- Apache Hive 3.1.2

- Apache Spark 3.3.1

- Apache Tez 0.9.1

- Apache Zeppelin 0.10.1

- Apache Livy 0.5

- Apache Kafka 3.2.0

Note

- All components are integrated with Hadoop 3.3.4 & ZK 3.6.3

- All above upgraded components are now available in non-ESP clusters for public preview.

![]()

Enhanced Autoscale for HDInsight

Azure HDInsight has made notable improvements stability and latency on Autoscale, The essential changes include improved feedback loop for scaling decisions, significant improvement on latency for scaling and support for recommissioning the decommissioned nodes, Learn more about the enhancements, how to custom configure and migrate your cluster to enhanced autoscale. The enhanced Autoscale capability is available effective 17 May 2023 across all supported regions.

Azure HDInsight ESP for Apache Kafka 2.4.1 is now Generally Available.

Azure HDInsight ESP for Apache Kafka 2.4.1 has been in public preview since April 2022. After notable improvements in CVE fixes and stability, Azure HDInsight ESP Kafka 2.4.1 now becomes generally available and ready for production workloads, learn the detail about the how to configure and migrate.

Quota Management for HDInsight

HDInsight currently allocates quota to customer subscriptions at a regional level. The cores allocated to customers are generic and not classified at a VM family level (For example,

Dv2,Ev3,Eav4, etc.).HDInsight introduced an improved view, which provides a detail and classification of quotas for family-level VMs, this feature allows customers to view current and remaining quotas for a region at the VM family level. With the enhanced view, customers have richer visibility, for planning quotas, and a better user experience. This feature is currently available on HDInsight 4.x and 5.x for East US EUAP region. Other regions to follow later.

For more information, see Cluster capacity planning in Azure HDInsight | Microsoft Learn

![]()

- Poland Central

- The max length of cluster name changes to 45 from 59 characters, to improve the security posture of clusters.

- Cluster permissions for secure storage

- Customers can specify (during cluster creation) whether a secure channel should be used for HDInsight cluster nodes to contact the storage account.

- In-line quota update.

- Request quotas increase directly from the My Quota page, which is a direct API call, which is faster. If the API call fails, then customers need to create a new support request for quota increase.

- HDInsight Cluster Creation with Custom VNets.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

Microsoft Network/virtualNetworks/subnets/join/actionto perform create operations. Customers would need to plan accordingly as this would be a mandatory check to avoid cluster creation failures.

- To improve the overall security posture of the HDInsight clusters, HDInsight clusters using custom VNETs need to ensure that the user needs to have permission for

- Basic and Standard A-series VMs Retirement.

- On 31 August 2024, we'll retire Basic and Standard A-series VMs. Before that date, you need to migrate your workloads to Av2-series VMs, which provide more memory per vCPU and faster storage on solid-state drives (SSDs). To avoid service disruptions, migrate your workloads from Basic and Standard A-series VMs to Av2-series VMs before 31 August 2024.

- Non-ESP ABFS clusters [Cluster Permissions for World Readable]

- Plan to introduce a change in non-ESP ABFS clusters, which restricts non-Hadoop group users from executing Hadoop commands for storage operations. This change to improve cluster security posture. Customers need to plan for the updates.

Release date: February 28, 2023

This release applies to HDInsight 4.0. and 5.0, 5.1. HDInsight release is available to all regions over several days. This release is applicable for image number 2302250400. How to check the image number?

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

For workload specific versions, see

Important

Microsoft has issued CVE-2023-23408, which is fixed on the current release and customers are advised to upgrade their clusters to latest image.

![]()

HDInsight 5.1

We have started rolling out a new version of HDInsight 5.1. All new open-source releases added as incremental releases on HDInsight 5.1.

For more information, see HDInsight 5.1.0 version

![]()

Kafka 3.2.0 Upgrade (Preview)

- Kafka 3.2.0 includes several significant new features/improvements.

- Upgraded Zookeeper to 3.6.3

- Kafka Streams support

- Stronger delivery guarantees for the Kafka producer enabled by default.

log4j1.x replaced withreload4j.- Send a hint to the partition leader to recover the partition.

JoinGroupRequestandLeaveGroupRequesthave a reason attached.- Added Broker count metrics8.

- Mirror

Maker2improvements.

HBase 2.4.11 Upgrade (Preview)

- This version has new features such as the addition of new caching mechanism types for block cache, the ability to alter

hbase:meta tableand view thehbase:metatable from the HBase WEB UI.

Phoenix 5.1.2 Upgrade (Preview)

- Phoenix version upgraded to 5.1.2 in this release. This upgrade includes the Phoenix Query Server. The Phoenix Query Server proxies the standard Phoenix JDBC driver and provides a backwards-compatible wire protocol to invoke that JDBC driver.

Ambari CVEs

- Multiple Ambari CVEs are fixed.

Note

ESP isn't supported for Kafka and HBase in this release.

![]()

What's next

- Autoscale

- Autoscale with improved latency and several improvements

- Cluster name change limitation

- The max length of cluster name changes to 45 from 59 in Public, Azure China, and Azure Government.

- Cluster permissions for secure storage

- Customers can specify (during cluster creation) whether a secure channel should be used for HDInsight cluster nodes to contact the storage account.

- Non-ESP ABFS clusters [Cluster Permissions for World Readable]

- Plan to introduce a change in non-ESP ABFS clusters, which restricts non-Hadoop group users from executing Hadoop commands for storage operations. This change to improve cluster security posture. Customers need to plan for the updates.

- Open-source upgrades

- Apache Spark 3.3.0 and Hadoop 3.3.4 are under development on HDInsight 5.1 and includes several significant new features, performance and other improvements.

Note

We advise customers to use to latest versions of HDInsight Images as they bring in the best of open source updates, Azure updates and security fixes. For more information, see Best practices.

Release date: December 12, 2022

This release applies to HDInsight 4.0. and 5.0 HDInsight release is made available to all regions over several days.

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

OS versions

- HDInsight 4.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

- HDInsight 5.0: Ubuntu 18.04.5 LTS Linux Kernel 5.4

![]()

- Log Analytics - Customers can enable classic monitoring to get the latest OMS version 14.19. To remove old versions, disable and enable classic monitoring.

- Ambari user auto UI sign out due to inactivity. For more information, see here

- Spark - A new and optimized version of Spark 3.1.3 is included in this release. We tested Apache Spark 3.1.2(previous version) and Apache Spark 3.1.3(current version) using the TPC-DS benchmark. The test was carried out using E8 V3 SKU, for Apache Spark on 1-TB workload. Apache Spark 3.1.3 (current version) outperformed Apache Spark 3.1.2 (previous version) by over 40% in total query runtime for TPC-DS queries using the same hardware specs. The Microsoft Spark team added optimizations available in Azure Synapse with Azure HDInsight. For more information, please refer to Speed up your data workloads with performance updates to Apache Spark 3.1.2 in Azure Synapse

![]()

- Qatar Central

- Germany North

![]()

HDInsight has moved away from Azul Zulu Java JDK 8 to

Adoptium Temurin JDK 8, which supports high-quality TCK certified runtimes, and associated technology for use across the Java ecosystem.HDInsight has migrated to

reload4j. Thelog4jchanges are applicable to- Apache Hadoop

- Apache Zookeeper

- Apache Oozie

- Apache Ranger

- Apache Sqoop

- Apache Pig

- Apache Ambari

- Apache Kafka

- Apache Spark

- Apache Zeppelin

- Apache Livy

- Apache Rubix

- Apache Hive

- Apache Tez

- Apache HBase

- OMI

- Apache Pheonix

![]()

HDInsight to implement TLS1.2 going forward, and earlier versions are updated on the platform. If you're running any applications on top of HDInsight and they use TLS 1.0 and 1.1, upgrade to TLS 1.2 to avoid any disruption in services.

For more information, see How to enable Transport Layer Security (TLS)

![]()

End of support for Azure HDInsight clusters on Ubuntu 16.04 LTS from 30 November 2022. HDInsight begins release of cluster images using Ubuntu 18.04 from June 27, 2021. We recommend our customers who are running clusters using Ubuntu 16.04 is to rebuild their clusters with the latest HDInsight images by 30 November 2022.

For more information on how to check Ubuntu version of cluster, see here

Execute the command “lsb_release -a” in the terminal.

If the value for “Description” property in output is “Ubuntu 16.04 LTS”, then this update is applicable to the cluster.

![]()

- Support for Availability Zones selection for Kafka and HBase (write access) clusters.

Open source bug fixes

Hive bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| HIVE-26127 | INSERT OVERWRITE error - File Not Found |

| HIVE-24957 | Wrong results when subquery has COALESCE in correlation predicate |

| HIVE-24999 | HiveSubQueryRemoveRule generates invalid plan for IN subquery with multiple correlations |

| HIVE-24322 | If there is direct insert, the attempt ID has to be checked when reading the manifest fails |

| HIVE-23363 | Upgrade DataNucleus dependency to 5.2 |

| HIVE-26412 | Create interface to fetch available slots and add the default |

| HIVE-26173 | Upgrade derby to 10.14.2.0 |

| HIVE-25920 | Bump Xerce2 to 2.12.2. |

| HIVE-26300 | Upgrade Jackson data bind version to 2.12.6.1+ to avoid CVE-2020-36518 |

Release date: 08/10/2022

This release applies to HDInsight 4.0. HDInsight release is made available to all regions over several days.

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

![]()

New Feature

1. Attach external disks in HDI Hadoop/Spark clusters

HDInsight cluster comes with predefined disk space based on SKU. This space might not be sufficient in large job scenarios.

This new feature allows you to add more disks in cluster, which used as node manager local directory. Add number of disks to worker nodes during HIVE and Spark cluster creation, while the selected disks are part of node manager’s local directories.

Note

The added disks are only configured for node manager local directories.

For more information, see here

2. Selective logging analysis

Selective logging analysis is now available on all regions for public preview. You can connect your cluster to a log analytics workspace. Once enabled, you can see the logs and metrics like HDInsight Security Logs, Yarn Resource Manager, System Metrics etc. You can monitor workloads and see how they're affecting cluster stability. Selective logging allows you to enable/disable all the tables or enable selective tables in log analytics workspace. You can adjust the source type for each table, since in new version of Geneva monitoring one table has multiple sources.

- The Geneva monitoring system uses mdsd(MDS daemon) which is a monitoring agent and fluentd for collecting logs using unified logging layer.

- Selective Logging uses script action to disable/enable tables and their log types. Since it doesn't open any new ports or change any existing security setting hence, there are no security changes.

- Script Action runs in parallel on all specified nodes and changes the configuration files for disabling/enabling tables and their log types.

For more information, see here

![]()

Fixed

Log analytics

Log Analytics integrated with Azure HDInsight running OMS version 13 requires an upgrade to OMS version 14 to apply the latest security updates. Customers using older version of cluster with OMS version 13 need to install OMS version 14 to meet the security requirements. (How to check current version & Install 14)

How to check your current OMS version

- Sign in to the cluster using SSH.

- Run the following command in your SSH Client.

sudo /opt/omi/bin/ominiserver/ --version

How to upgrade your OMS version from 13 to 14

- Sign in to the Azure portal

- From the resource group, select the HDInsight cluster resource

- Select Script actions

- From Submit script action panel, choose Script type as custom

- Paste the following link in the Bash script URL box https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/OMSUPGRADE14.1/omsagent-vulnerability-fix-1.14.12-0.sh

- Select Node type(s)

- Select Create

Verify the successful installation of the patch using the following steps:

Sign in to the cluster using SSH.

Run the following command in your SSH Client.

sudo /opt/omi/bin/ominiserver/ --version

Other bug fixes

- Yarn log’s CLI failed to retrieve the logs if any

TFileis corrupt or empty. - Resolved invalid service principal details error while getting the OAuth token from Azure Active Directory.

- Improved cluster creation reliability when 100+ worked nodes are configured.

Open source bug fixes

TEZ bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| Tez Build Failure: FileSaver.js not found | TEZ-4411 |

Wrong FS Exception when warehouse and scratchdir are on different FS |

TEZ-4406 |

| TezUtils.createConfFromByteString on Configuration larger than 32 MB throws com.google.protobuf.CodedInputStream exception | TEZ-4142 |

| TezUtils::createByteStringFromConf should use snappy instead of DeflaterOutputStream | TEZ-4113 |

| Update protobuf dependency to 3.x | TEZ-4363 |

Hive bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| Perf optimizations in ORC split-generation | HIVE-21457 |

| Avoid reading table as ACID when table name is starting with "delta", but table isn't transactional and BI Split Strategy is used | HIVE-22582 |

| Remove an FS#exists call from AcidUtils#getLogicalLength | HIVE-23533 |

| Vectorized OrcAcidRowBatchReader.computeOffset and bucket optimization | HIVE-17917 |

Known issues

HDInsight is compatible with Apache HIVE 3.1.2. Due to a bug in this release, the Hive version is shown as 3.1.0 in hive interfaces. However, there's no impact on the functionality.

Release date: 08/10/2022

This release applies to HDInsight 4.0. HDInsight release is made available to all regions over several days.

HDInsight uses safe deployment practices, which involve gradual region deployment. It might take up to 10 business days for a new release or a new version to be available in all regions.

![]()

New Feature

1. Attach external disks in HDI Hadoop/Spark clusters

HDInsight cluster comes with predefined disk space based on SKU. This space might not be sufficient in large job scenarios.

This new feature allows you to add more disks in cluster, which will be used as node manager local directory. Add number of disks to worker nodes during HIVE and Spark cluster creation, while the selected disks are part of node manager’s local directories.

Note

The added disks are only configured for node manager local directories.

For more information, see here

2. Selective logging analysis

Selective logging analysis is now available on all regions for public preview. You can connect your cluster to a log analytics workspace. Once enabled, you can see the logs and metrics like HDInsight Security Logs, Yarn Resource Manager, System Metrics etc. You can monitor workloads and see how they're affecting cluster stability. Selective logging allows you to enable/disable all the tables or enable selective tables in log analytics workspace. You can adjust the source type for each table, since in new version of Geneva monitoring one table has multiple sources.

- The Geneva monitoring system uses mdsd(MDS daemon) which is a monitoring agent and fluentd for collecting logs using unified logging layer.

- Selective Logging uses script action to disable/enable tables and their log types. Since it doesn't open any new ports or change any existing security setting hence, there are no security changes.

- Script Action runs in parallel on all specified nodes and changes the configuration files for disabling/enabling tables and their log types.

For more information, see here

![]()

Fixed

Log analytics

Log Analytics integrated with Azure HDInsight running OMS version 13 requires an upgrade to OMS version 14 to apply the latest security updates. Customers using older version of cluster with OMS version 13 need to install OMS version 14 to meet the security requirements. (How to check current version & Install 14)

How to check your current OMS version

- Log in to the cluster using SSH.

- Run the following command in your SSH Client.

sudo /opt/omi/bin/ominiserver/ --version

How to upgrade your OMS version from 13 to 14

- Sign in to the Azure portal

- From the resource group, select the HDInsight cluster resource

- Select Script actions

- From Submit script action panel, choose Script type as custom

- Paste the following link in the Bash script URL box https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/OMSUPGRADE14.1/omsagent-vulnerability-fix-1.14.12-0.sh

- Select Node type(s)

- Select Create

Verify the successful installation of the patch using the following steps:

Sign in to the cluster using SSH.

Run the following command in your SSH Client.

sudo /opt/omi/bin/ominiserver/ --version

Other bug fixes

- Yarn log’s CLI failed to retrieve the logs if any

TFileis corrupt or empty. - Resolved invalid service principal details error while getting the OAuth token from Azure Active Directory.

- Improved cluster creation reliability when 100+ worked nodes are configured.

Open source bug fixes

TEZ bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| Tez Build Failure: FileSaver.js not found | TEZ-4411 |

Wrong FS Exception when warehouse and scratchdir are on different FS |

TEZ-4406 |

| TezUtils.createConfFromByteString on Configuration larger than 32 MB throws com.google.protobuf.CodedInputStream exception | TEZ-4142 |

| TezUtils::createByteStringFromConf should use snappy instead of DeflaterOutputStream | TEZ-4113 |

| Update protobuf dependency to 3.x | TEZ-4363 |

Hive bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| Perf optimizations in ORC split-generation | HIVE-21457 |

| Avoid reading table as ACID when table name is starting with "delta", but table isn't transactional and BI Split Strategy is used | HIVE-22582 |

| Remove an FS#exists call from AcidUtils#getLogicalLength | HIVE-23533 |

| Vectorized OrcAcidRowBatchReader.computeOffset and bucket optimization | HIVE-17917 |

Known issues

HDInsight is compatible with Apache HIVE 3.1.2. Due to a bug in this release, the Hive version is shown as 3.1.0 in hive interfaces. However, there's no impact on the functionality.

Release date: 06/03/2022

This release applies for HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region over several days.

Release highlights

The Hive Warehouse Connector (HWC) on Spark v3.1.2

The Hive Warehouse Connector (HWC) allows you to take advantage of the unique features of Hive and Spark to build powerful big-data applications. HWC is currently supported for Spark v2.4 only. This feature adds business value by allowing ACID transactions on Hive Tables using Spark. This feature is useful for customers who use both Hive and Spark in their data estate. For more information, see Apache Spark & Hive - Hive Warehouse Connector - Azure HDInsight | Microsoft Docs

Ambari

- Scaling and provisioning improvement changes

- HDI hive is now compatible with OSS version 3.1.2

HDI Hive 3.1 version is upgraded to OSS Hive 3.1.2. This version has all fixes and features available in open source Hive 3.1.2 version.

Note

Spark

- If you are using Azure User Interface to create Spark Cluster for HDInsight, you will see from the dropdown list an other version Spark 3.1.(HDI 5.0) along with the older versions. This version is a renamed version of Spark 3.1.(HDI 4.0). This is only an UI level change, which doesn’t impact anything for the existing users and users who are already using the ARM template.

Note

Interactive Query

- If you are creating an Interactive Query Cluster, you will see from the dropdown list an other version as Interactive Query 3.1 (HDI 5.0).

- If you are going to use Spark 3.1 version along with Hive which require ACID support, you need to select this version Interactive Query 3.1 (HDI 5.0).

TEZ bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| TezUtils.createConfFromByteString on Configuration larger than 32 MB throws com.google.protobuf.CodedInputStream exception | TEZ-4142 |

| TezUtils createByteStringFromConf should use snappy instead of DeflaterOutputStream | TEZ-4113 |

HBase bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

TableSnapshotInputFormat should use ReadType.STREAM for scanning HFiles |

HBASE-26273 |

| Add option to disable scanMetrics in TableSnapshotInputFormat | HBASE-26330 |

| Fix for ArrayIndexOutOfBoundsException when balancer is executed | HBASE-22739 |

Hive bug fixes

| Bug Fixes | Apache JIRA |

|---|---|

| NPE when inserting data with 'distribute by' clause with dynpart sort optimization | HIVE-18284 |

| MSCK REPAIR Command with Partition Filtering Fails While Dropping Partitions | HIVE-23851 |

| Wrong exception thrown if capacity<=0 | HIVE-25446 |

| Support parallel load for HastTables - Interfaces | HIVE-25583 |

| Include MultiDelimitSerDe in HiveServer2 By Default | HIVE-20619 |

| Remove glassfish.jersey and mssql-jdbc classes from jdbc-standalone jar | HIVE-22134 |

| Null pointer exception on running compaction against an MM table. | HIVE-21280 |

Hive query with large size via knox fails with Broken pipe Write failed |

HIVE-22231 |

| Adding ability for user to set bind user | HIVE-21009 |

| Implement UDF to interpret date/timestamp using its internal representation and Gregorian-Julian hybrid calendar | HIVE-22241 |

| Beeline option to show/not show execution report | HIVE-22204 |

| Tez: SplitGenerator tries to look for plan files, which doesn't exist for Tez | HIVE-22169 |

Remove expensive logging from the LLAP cache hotpath |

HIVE-22168 |

| UDF: FunctionRegistry synchronizes on org.apache.hadoop.hive.ql.udf.UDFType class | HIVE-22161 |

| Prevent the creation of query routing appender if property is set to false | HIVE-22115 |

| Remove cross-query synchronization for the partition-eval | HIVE-22106 |

| Skip setting up hive scratch dir during planning | HIVE-21182 |

| Skip creating scratch dirs for tez if RPC is on | HIVE-21171 |

switch Hive UDFs to use Re2J regex engine |

HIVE-19661 |

| Migrated clustered tables using bucketing_version 1 on hive 3 uses bucketing_version 2 for inserts | HIVE-22429 |

| Bucketing: Bucketing version 1 is incorrectly partitioning data | HIVE-21167 |

| Adding ASF License header to the newly added file | HIVE-22498 |

| Schema tool enhancements to support mergeCatalog | HIVE-22498 |

| Hive with TEZ UNION ALL and UDTF results in data loss | HIVE-21915 |

| Split text files even if header/footer exists | HIVE-21924 |

| MultiDelimitSerDe returns wrong results in last column when the loaded file has more columns than the one is present in table schema | HIVE-22360 |

| LLAP external client - Need to reduce LlapBaseInputFormat#getSplits() footprint | HIVE-22221 |

| Column name with reserved keyword is unescaped when query including join on table with mask column is rewritten (Zoltan Matyus via Zoltan Haindrich) | HIVE-22208 |

Prevent LLAP shutdown on AMReporter related RuntimeException |

HIVE-22113 |

| LLAP status service driver might get stuck with wrong Yarn app ID | HIVE-21866 |

| OperationManager.queryIdOperation doesn't properly clean up multiple queryIds | HIVE-22275 |

| Bringing a node manager down blocks restart of LLAP service | HIVE-22219 |

| StackOverflowError when drop lots of partitions | HIVE-15956 |

| Access check is failed when a temporary directory is removed | HIVE-22273 |

| Fix wrong results/ArrayOutOfBound exception in left outer map joins on specific boundary conditions | HIVE-22120 |

| Remove distribution management tag from pom.xml | HIVE-19667 |

| Parsing time can be high if there's deeply nested subqueries | HIVE-21980 |

For ALTER TABLE t SET TBLPROPERTIES ('EXTERNAL'='TRUE'); TBL_TYPE attribute changes not reflecting for non-CAPS |

HIVE-20057 |

JDBC: HiveConnection shades log4j interfaces |

HIVE-18874 |

Update repo URLs in poms - branch 3.1 version |

HIVE-21786 |

DBInstall tests broken on master and branch-3.1 |

HIVE-21758 |

| Load data into a bucketed table is ignoring partitions specs and loads data into default partition | HIVE-21564 |

| Queries with join condition having timestamp or timestamp with local time zone literal throw SemanticException | HIVE-21613 |

| Analyze compute stats for column leave behind staging dir on HDFS | HIVE-21342 |

| Incompatible change in Hive bucket computation | HIVE-21376 |

| Provide a fallback authorizer when no other authorizer is in use | HIVE-20420 |

| Some alterPartitions invocations throw 'NumberFormatException: null' | HIVE-18767 |

| HiveServer2: Preauthenticated subject for http transport isn't retained for entire duration of http communication in some cases | HIVE-20555 |

Release date: 03/10/2022

This release applies for HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region over several days.

The OS versions for this release are:

- HDInsight 4.0: Ubuntu 18.04.5

Spark 3.1 is now generally available

Spark 3.1 is now Generally Available on HDInsight 4.0 release. This release includes

- Adaptive Query Execution,

- Convert Sort Merge Join to Broadcast Hash Join,

- Spark Catalyst Optimizer,

- Dynamic Partition Pruning,

- Customers will be able to create new Spark 3.1 clusters and not Spark 3.0 (preview) clusters.

For more information, see the Apache Spark 3.1 is now Generally Available on HDInsight - Microsoft Tech Community.

For a complete list of improvements, see the Apache Spark 3.1 release notes.

For more information on migration, see the migration guide.

Kafka 2.4 is now generally available

Kafka 2.4.1 is now Generally Available. For more information, please see Kafka 2.4.1 Release Notes.

Other features include MirrorMaker 2 availability, new metric category AtMinIsr topic partition, Improved broker start-up time by lazy on demand mmap of index files, More consumer metrics to observe user poll behavior.

Map Datatype in HWC is now supported in HDInsight 4.0

This release includes Map Datatype Support for HWC 1.0 (Spark 2.4) Via the spark-shell application, and all other all spark clients that HWC supports. Following improvements are included like any other data types:

A user can

- Create a Hive table with any column(s) containing Map datatype, insert data into it and read the results from it.

- Create an Apache Spark dataframe with Map Type and do batch/stream reads and writes.

New regions

HDInsight has now expanded its geographical presence to two new regions: China East 3 and China North 3.

OSS backport changes

OSS backports that are included in Hive including HWC 1.0 (Spark 2.4) which supports Map data type.

Here are the OSS backported Apache JIRAs for this release:

| Impacted Feature | Apache JIRA |

|---|---|

| Metastore direct sql queries with IN/(NOT IN) should be split based on max parameters allowed by SQL DB | HIVE-25659 |

Upgrade log4j 2.16.0 to 2.17.0 |

HIVE-25825 |

Update Flatbuffer version |

HIVE-22827 |

| Support Map data-type natively in Arrow format | HIVE-25553 |

| LLAP external client - Handle nested values when the parent struct is null | HIVE-25243 |

| Upgrade arrow version to 0.11.0 | HIVE-23987 |

Deprecation notices

Azure Virtual Machine Scale Sets on HDInsight

HDInsight will no longer use Azure Virtual Machine Scale Sets to provision the clusters, no breaking change is expected. Existing HDInsight clusters on virtual machine scale sets have no impact, any new clusters on latest images will no longer use Virtual Machine Scale Sets.

Scaling of Azure HDInsight HBase workloads will now be supported only using manual scale

Starting from March 01, 2022, HDInsight will only support manual scale for HBase, there's no impact on running clusters. New HBase clusters won't be able to enable schedule based Autoscaling. For more information on how to manually scale your HBase cluster, refer our documentation on Manually scaling Azure HDInsight clusters

Release date: 12/27/2021

This release applies for HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region over several days.

The OS versions for this release are:

- HDInsight 4.0: Ubuntu 18.04.5 LTS

HDInsight 4.0 image has been updated to mitigate Log4j vulnerability as described in Microsoft’s Response to CVE-2021-44228 Apache Log4j 2.

Note

- Any HDI 4.0 clusters created post 27 Dec 2021 00:00 UTC are created with an updated version of the image which mitigates the

log4jvulnerabilities. Hence, customers need not patch/reboot these clusters. - For new HDInsight 4.0 clusters created between 16 Dec 2021 at 01:15 UTC and 27 Dec 2021 00:00 UTC, HDInsight 3.6 or in pinned subscriptions after 16 Dec 2021 the patch is auto applied within the hour in which the cluster is created, however customers must then reboot their nodes for the patching to complete (except for Kafka Management nodes, which are automatically rebooted).

Release date: 07/27/2021

This release applies for both HDInsight 3.6 and HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region in several days.

The OS versions for this release are:

- HDInsight 3.6: Ubuntu 16.04.7 LTS

- HDInsight 4.0: Ubuntu 18.04.5 LTS

New features

Azure HDInsight support for Restricted Public Connectivity is generally available on Oct 15, 2021

Azure HDInsight now supports restricted public connectivity in all regions. Below are some of the key highlights of this capability:

- Ability to reverse resource provider to cluster communication such that it's outbound from the cluster to the resource provider

- Support for bringing your own Private Link enabled resources (For example, storage, SQL, key vault) for HDInsight cluster to access the resources over private network only

- No public IP addresses are resource provisioned

By using this new capability, you can also skip the inbound network security group (NSG) service tag rules for HDInsight management IPs. Learn more about restricting public connectivity

Azure HDInsight support for Azure Private Link is generally available on Oct 15 2021

You can now use private endpoints to connect to your HDInsight clusters over private link. Private link can be used in cross VNET scenarios where VNET peering isn't available or enabled.

Azure Private Link enables you to access Azure PaaS Services (for example, Azure Storage and SQL Database) and Azure hosted customer-owned/partner services over a private endpoint in your virtual network.

Traffic between your virtual network and the service travels the Microsoft backbone network. Exposing your service to the public internet is no longer necessary.

Let more at enable private link.

New Azure Monitor integration experience (Preview)

The new Azure monitor integration experience will be Preview in East US and West Europe with this release. Learn more details about the new Azure monitor experience here.

Deprecation

HDInsight 3.6 version is deprecated effective Oct 01, 2022.

Behavior changes

HDInsight Interactive Query only supports schedule-based Autoscale

As customer scenarios grow more mature and diverse, we've identified some limitations with Interactive Query (LLAP) load-based Autoscale. These limitations are caused by the nature of LLAP query dynamics, future load prediction accuracy issues, and issues in the LLAP scheduler's task redistribution. Due to these limitations, users might see their queries run slower on LLAP clusters when Autoscale is enabled. The effect on performance can outweigh the cost benefits of Autoscale.

Starting from July 2021, the Interactive Query workload in HDInsight only supports schedule-based Autoscale. You can no longer enable load-based autoscale on new Interactive Query clusters. Existing running clusters can continue to run with the known limitations described above.

Microsoft recommends that you move to a schedule-based Autoscale for LLAP. You can analyze your cluster's current usage pattern through the Grafana Hive dashboard. For more information, see Automatically scale Azure HDInsight clusters.

Upcoming changes

The following changes happen in upcoming releases.

Built-in LLAP component in ESP Spark cluster will be removed

HDInsight 4.0 ESP Spark cluster has built-in LLAP components running on both head nodes. The LLAP components in ESP Spark cluster were originally added for HDInsight 3.6 ESP Spark, but has no real user case for HDInsight 4.0 ESP Spark. In the next release scheduled in Sep 2021, HDInsight will remove the built-in LLAP component from HDInsight 4.0 ESP Spark cluster. This change helps to offload head node workload and avoid confusion between ESP Spark and ESP Interactive Hive cluster type.

New region

- West US 3

JioIndia West- Australia Central

Component version change

The following component version has been changed with this release:

- ORC version from 1.5.1 to 1.5.9

You can find the current component versions for HDInsight 4.0 and HDInsight 3.6 in this doc.

Back ported JIRAs

Here are the back ported Apache JIRAs for this release:

| Impacted Feature | Apache JIRA |

|---|---|

| Date / Timestamp | HIVE-25104 |

| HIVE-24074 | |

| HIVE-22840 | |

| HIVE-22589 | |

| HIVE-22405 | |

| HIVE-21729 | |

| HIVE-21291 | |

| HIVE-21290 | |

| UDF | HIVE-25268 |

| HIVE-25093 | |

| HIVE-22099 | |

| HIVE-24113 | |

| HIVE-22170 | |

| HIVE-22331 | |

| ORC | HIVE-21991 |

| HIVE-21815 | |

| HIVE-21862 | |

| Table Schema | HIVE-20437 |

| HIVE-22941 | |

| HIVE-21784 | |

| HIVE-21714 | |

| HIVE-18702 | |

| HIVE-21799 | |

| HIVE-21296 | |

| Workload Management | HIVE-24201 |

| Compaction | HIVE-24882 |

| HIVE-23058 | |

| HIVE-23046 | |

| Materialized view | HIVE-22566 |

Price Correction for HDInsight Dv2 Virtual Machines

A pricing error was corrected on April 25, 2021, for the Dv2 VM series on HDInsight. The pricing error resulted in a reduced charge on some customer's bills prior to April 25, and with the correction, prices now match what had been advertised on the HDInsight pricing page and the HDInsight pricing calculator. The pricing error impacted customers in the following regions who used Dv2 VMs:

- Canada Central

- Canada East

- East Asia

- South Africa North

- Southeast Asia

- UAE Central

Starting on April 25, 2021, the corrected amount for the Dv2 VMs will be on your account. Customer notifications were sent to subscription owners prior to the change. You can use the Pricing calculator, HDInsight pricing page, or the Create HDInsight cluster blade in the Azure portal to see the corrected costs for Dv2 VMs in your region.

No other action is needed from you. The price correction will only apply for usage on or after April 25, 2021 in the specified regions, and not to any usage prior to this date. To ensure you have the most performant and cost-effective solution, we recommended that you review the pricing, VCPU, and RAM for your Dv2 clusters and compare the Dv2 specifications to the Ev3 VMs to see if your solution would benefit from utilizing one of the newer VM series.

Release date: 06/02/2021

This release applies for both HDInsight 3.6 and HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region in several days.

The OS versions for this release are:

- HDInsight 3.6: Ubuntu 16.04.7 LTS

- HDInsight 4.0: Ubuntu 18.04.5 LTS

New features

OS version upgrade

As referenced in Ubuntu's release cycle, the Ubuntu 16.04 kernel reaches End of Life (EOL) in April 2021. We started rolling out the new HDInsight 4.0 cluster image running on Ubuntu 18.04 with this release. Newly created HDInsight 4.0 clusters run on Ubuntu 18.04 by default once available. Existing clusters on Ubuntu 16.04 runs as is with full support.

HDInsight 3.6 will continue to run on Ubuntu 16.04. It will change to Basic support (from Standard support) beginning 1 July 2021. For more information about dates and support options, see Azure HDInsight versions. Ubuntu 18.04 won't be supported for HDInsight 3.6. If you'd like to use Ubuntu 18.04, you'll need to migrate your clusters to HDInsight 4.0.

You need to drop and recreate your clusters if you'd like to move existing HDInsight 4.0 clusters to Ubuntu 18.04. Plan to create or recreate your clusters after Ubuntu 18.04 support becomes available.

After creating the new cluster, you can SSH to your cluster and run sudo lsb_release -a to verify that it runs on Ubuntu 18.04. We recommend that you test your applications in your test subscriptions first before moving to production.

Scaling optimizations on HBase accelerated writes clusters

HDInsight made some improvements and optimizations on scaling for HBase accelerated write enabled clusters. Learn more about HBase accelerated write.

Deprecation

No deprecation in this release.

Behavior changes

Disable Stardard_A5 VM size as Head Node for HDInsight 4.0

HDInsight cluster Head Node is responsible for initializing and managing the cluster. Standard_A5 VM size has reliability issues as Head Node for HDInsight 4.0. Starting from this release, customers won't be able to create new clusters with Standard_A5 VM size as Head Node. You can use other two-core VMs like E2_v3 or E2s_v3. Existing clusters will run as is. A four-core VM is highly recommended for Head Node to ensure the high availability and reliability of your production HDInsight clusters.

Network interface resource not visible for clusters running on Azure virtual machine scale sets

HDInsight is gradually migrating to Azure virtual machine scale sets. Network interfaces for virtual machines are no longer visible to customers for clusters that use Azure virtual machine scale sets.

Upcoming changes

The following changes will happen in upcoming releases.

HDInsight Interactive Query only supports schedule-based Autoscale

As customer scenarios grow more mature and diverse, we've identified some limitations with Interactive Query (LLAP) load-based Autoscale. These limitations are caused by the nature of LLAP query dynamics, future load prediction accuracy issues, and issues in the LLAP scheduler's task redistribution. Due to these limitations, users might see their queries run slower on LLAP clusters when Autoscale is enabled. The effect on performance can outweigh the cost benefits of Autoscale.

Starting from July 2021, the Interactive Query workload in HDInsight only supports schedule-based Autoscale. You can no longer enable Autoscale on new Interactive Query clusters. Existing running clusters can continue to run with the known limitations described above.

Microsoft recommends that you move to a schedule-based Autoscale for LLAP. You can analyze your cluster's current usage pattern through the Grafana Hive dashboard. For more information, see Automatically scale Azure HDInsight clusters.

VM host naming will be changed on July 1, 2021

HDInsight now uses Azure virtual machines to provision the cluster. The service is gradually migrating to Azure virtual machine scale sets. This migration will change the cluster host name FQDN name format, and the numbers in the host name won't be guarantee in sequence. If you want to get the FQDN names for each node, refer to Find the Host names of Cluster Nodes.

Move to Azure virtual machine scale sets

HDInsight now uses Azure virtual machines to provision the cluster. The service will gradually migrate to Azure virtual machine scale sets. The entire process might take months. After your regions and subscriptions are migrated, newly created HDInsight clusters will run on virtual machine scale sets without customer actions. No breaking change is expected.

Release date: 03/24/2021

New features

Spark 3.0 preview

HDInsight added Spark 3.0.0 support to HDInsight 4.0 as a Preview feature.

Kafka 2.4 preview

HDInsight added Kafka 2.4.1 support to HDInsight 4.0 as a Preview feature.

Eav4-series support

HDInsight added Eav4-series support in this release.

Moving to Azure virtual machine scale sets

HDInsight now uses Azure virtual machines to provision the cluster. The service is gradually migrating to Azure virtual machine scale sets. The entire process might take months. After your regions and subscriptions are migrated, newly created HDInsight clusters will run on virtual machine scale sets without customer actions. No breaking change is expected.

Deprecation

No deprecation in this release.

Behavior changes

Default cluster version is changed to 4.0

The default version of HDInsight cluster is changed from 3.6 to 4.0. For more information about available versions, see available versions. Learn more about what is new in HDInsight 4.0.

Default cluster VM sizes are changed to Ev3-series

Default cluster VM sizes are changed from D-series to Ev3-series. This change applies to head nodes and worker nodes. To avoid this change impacting your tested workflows, specify the VM sizes that you want to use in the ARM template.

Network interface resource not visible for clusters running on Azure virtual machine scale sets

HDInsight is gradually migrating to Azure virtual machine scale sets. Network interfaces for virtual machines are no longer visible to customers for clusters that use Azure virtual machine scale sets.

Upcoming changes

The following changes will happen in upcoming releases.

HDInsight Interactive Query only supports schedule-based Autoscale

As customer scenarios grow more mature and diverse, we've identified some limitations with Interactive Query (LLAP) load-based Autoscale. These limitations are caused by the nature of LLAP query dynamics, future load prediction accuracy issues, and issues in the LLAP scheduler's task redistribution. Due to these limitations, users might see their queries run slower on LLAP clusters when Autoscale is enabled. The impact on performance can outweigh the cost benefits of Autoscale.

Starting from July 2021, the Interactive Query workload in HDInsight only supports schedule-based Autoscale. You can no longer enable Autoscale on new Interactive Query clusters. Existing running clusters can continue to run with the known limitations described above.

Microsoft recommends that you move to a schedule-based Autoscale for LLAP. You can analyze your cluster's current usage pattern through the Grafana Hive dashboard. For more information, see Automatically scale Azure HDInsight clusters.

OS version upgrade

HDInsight clusters are currently running on Ubuntu 16.04 LTS. As referenced in Ubuntu’s release cycle, the Ubuntu 16.04 kernel will reach End of Life (EOL) in April 2021. We’ll start rolling out the new HDInsight 4.0 cluster image running on Ubuntu 18.04 in May 2021. Newly created HDInsight 4.0 clusters will run on Ubuntu 18.04 by default once available. Existing clusters on Ubuntu 16.04 will run as is with full support.

HDInsight 3.6 will continue to run on Ubuntu 16.04. It will reach the end of standard support by 30 June 2021, and will change to Basic support starting on 1 July 2021. For more information about dates and support options, see Azure HDInsight versions. Ubuntu 18.04 won't be supported for HDInsight 3.6. If you’d like to use Ubuntu 18.04, you’ll need to migrate your clusters to HDInsight 4.0.

You need to drop and recreate your clusters if you’d like to move existing clusters to Ubuntu 18.04. Plan to create or recreate your cluster after Ubuntu 18.04 support becomes available. We’ll send another notification after the new image becomes available in all regions.

It’s highly recommended that you test your script actions and custom applications deployed on edge nodes on an Ubuntu 18.04 virtual machine (VM) in advance. You can create Ubuntu Linux VM on 18.04-LTS, then create and use a secure shell (SSH) key pair on your VM to run and test your script actions and custom applications deployed on edge nodes.

Disable Stardard_A5 VM size as Head Node for HDInsight 4.0

HDInsight cluster Head Node is responsible for initializing and managing the cluster. Standard_A5 VM size has reliability issues as Head Node for HDInsight 4.0. Starting from the next release in May 2021, customers won't be able to create new clusters with Standard_A5 VM size as Head Node. You can use other 2-core VMs like E2_v3 or E2s_v3. Existing clusters will run as is. A 4-core VM is highly recommended for Head Node to ensure the high availability and reliability of your production HDInsight clusters.

Bug fixes

HDInsight continues to make cluster reliability and performance improvements.

Component version change

Added support for Spark 3.0.0 and Kafka 2.4.1 as Preview. You can find the current component versions for HDInsight 4.0 and HDInsight 3.6 in this doc.

Release date: 02/05/2021

This release applies for both HDInsight 3.6 and HDInsight 4.0. HDInsight release is made available to all regions over several days. The release date here indicates the first region release date. If you don't see following changes, wait for the release being live in your region in several days.

New features

Dav4-series support

HDInsight added Dav4-series support in this release. Learn more about Dav4-series here.

Kafka REST Proxy GA

Kafka REST Proxy enables you to interact with your Kafka cluster via a REST API over HTTPS. Kafka REST Proxy is general available starting from this release. Learn more about Kafka REST Proxy here.