I'm running the following Python code for a fine-tuning task on Azure OpenAI:

```python
import openai
from openai import cli
import time
import shutil
import json

openai.api_key = "*********************"
openai.api_base = "https://*********************"
openai.api_type = 'azure'
openai.api_version = '2023-05-15'

deployment_name = '*********************'

training_file_name = 'training.jsonl'
validation_file_name = 'validation.jsonl'

# The sample data is fake.
sample_data = [
    {"prompt": "Questa parte del testo e’ invece in italiano, perche’ Giuseppe Coco vive a Milano, codice postale 09576.", "completion": "[type: LOCATION, start: 36, end: 44, score: 0.85, type: PERSON, start: 54, end: 72, score: 0.85, type: LOCATION, start: 75, end: 81, score: 0.85]"},
    {"prompt": "In this fake document, we describe the ambarabacicicoco, of Alfred Johnson, who lives in Paris (France), the zip code is 21076, and his phone number is +32 475348723.", "completion": "[type: AU_TFN, start: 157, end: 166, score: 1.0, type: PERSON, start: 60, end: 74, score: 0.85, type: LOCATION, start: 89, end: 94, score: 0.85, type: LOCATION, start: 97, end: 103, score: 0.85, type: PHONE_NUMBER, start: 153, end: 166, score: 0.75]"},
    {"prompt": "This document is a fac simile", "completion": "[]"},
    {"prompt": "Here there are no PIIs", "completion": "[]"},
    {"prompt": "Questa parte del testo e’ invece in italiano, perche’ Giuseppe Coco vive a Milano, codice postale 09576.", "completion": "[type: LOCATION, start: 36, end: 44, score: 0.85, type: PERSON, start: 54, end: 72, score: 0.85, type: LOCATION, start: 75, end: 81, score: 0.85]"},
    {"prompt": "In this fake document, we describe the ambarabacicicoco, of Alfred Johnson, who lives in Paris (France), the zip code is 21076, and his phone number is +32 475348723.", "completion": "[type: AU_TFN, start: 157, end: 166, score: 1.0, type: PERSON, start: 60, end: 74, score: 0.85, type: LOCATION, start: 89, end: 94, score: 0.85, type: LOCATION, start: 97, end: 103, score: 0.85, type: PHONE_NUMBER, start: 153, end: 166, score: 0.75]"},
    {"prompt": "This document is a fac simile", "completion": "[]"},
    {"prompt": "Here there are no PIIs", "completion": "[]"},
    {"prompt": "10 August 2023", "completion": "[type: DATE_TIME, start: 0, end: 14, score: 0.85]"},
    {"prompt": "Marijn De Belie, Manu Brehmen (Deloitte Belastingconsulenten)", "completion": "[type: PERSON, start: 0, end: 15, score: 0.85, type: PERSON, start: 17, end: 29, score: 0.85]"},
    {"prompt": "The content expressed herein is based on the facts and assumptions you have provided us. We have assumed that these facts and assumptions are correct, complete and accurate.", "completion": "[]"},
    {"prompt": "This letter is solely for your benefit and may not be relied upon by anyone other than you.", "completion": "[]"},
    {"prompt": "Dear Mr. Mahieu,", "completion": "[type: PERSON, start: 9, end: 15, score: 0.85]"},
    {"prompt": "Since 1 January 2018, a capital reduction carried out in accordance with company law rules is partly imputed on the taxable reserves of the SPV", "completion": "[type: DATE_TIME, start: 6, end: 20, score: 0.85]"},
]

# Generate the training dataset file (one JSON object per line).
print(f'Generating the training file: {training_file_name}')
with open(training_file_name, 'w') as training_file:
    for entry in sample_data:
        json.dump(entry, training_file)
        training_file.write('\n')

# Copy the validation dataset file from the training dataset file.
# Typically, your training data and validation data should be mutually exclusive.
# For the purposes of this example, you use the same data.
print('Copying the training file to the validation file')
shutil.copy(training_file_name, validation_file_name)


def check_status(training_id, validation_id):
    train_status = openai.File.retrieve(training_id)["status"]
    valid_status = openai.File.retrieve(validation_id)["status"]
    print(f'Status (training_file | validation_file): {train_status} | {valid_status}')
    return (train_status, valid_status)


# Upload the training and validation dataset files to Azure OpenAI.
training_id = cli.FineTune._get_or_upload(training_file_name, True)
validation_id = cli.FineTune._get_or_upload(validation_file_name, True)

# Check the upload status of the training and validation dataset files.
(train_status, valid_status) = check_status(training_id, validation_id)

# Poll and display the upload status once per second until both files succeed or fail to upload.
while train_status not in ["succeeded", "failed"] or valid_status not in ["succeeded", "failed"]:
    time.sleep(1)
    (train_status, valid_status) = check_status(training_id, validation_id)

# This example defines a fine-tune job that creates a customized model based on curie,
# with just a single pass through the training data. The job also provides
# classification-specific metrics by using our validation data, at the end of that epoch.
create_args = {
    "training_file": training_id,
    "validation_file": validation_id,
    "model": "curie",
    "n_epochs": 1,
    "compute_classification_metrics": True,
    "classification_n_classes": 3
}

# Create the fine-tune job and retrieve the job ID and status from the response.
resp = openai.FineTune.create(**create_args)
job_id = resp["id"]
status = resp["status"]

# You can use the job ID to monitor the status of the fine-tune job.
# The fine-tune job might take some time to start and complete.
print(f'Fine-tuning model with job ID: {job_id}.')

# Get the status of our fine-tune job.
status = openai.FineTune.retrieve(id=job_id)["status"]

# If the job isn't yet done, poll it every 2 seconds.
if status not in ["succeeded", "failed"]:
    print(f'Job not in terminal status: {status}. Waiting.')
    while status not in ["succeeded", "failed"]:
        time.sleep(2)
        status = openai.FineTune.retrieve(id=job_id)["status"]
        print(f'Status: {status}')
else:
    print(f'Fine-tune job {job_id} finished with status: {status}')

# Check if there are other fine-tune jobs in the subscription.
# Your fine-tune job might be queued, so this is helpful information to have
# if your fine-tune job hasn't yet started.
print('Checking other fine-tune jobs in the subscription.')
result = openai.FineTune.list()
# The list response wraps the jobs in its "data" field; len(result) would count the response's keys.
print(f'Found {len(result["data"])} fine-tune jobs.')

# Retrieve the name of the customized model from the fine-tune job.
result = openai.FineTune.retrieve(id=job_id)
if result["status"] == 'succeeded':
    model = result["fine_tuned_model"]

    # Create the deployment for the customized model by using the standard scale type
    # without specifying a scale capacity.
    print(f'Creating a new deployment with model: {model}')
    result = openai.Deployment.create(model=model, scale_settings={"scale_type": "standard", "capacity": None})

    # Retrieve the deployment job ID from the results.
    deployment_id = result["id"]
```
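Both polling loops in the script stop only on `succeeded` or `failed`, so a job that ends in any other state, or never finishes, keeps the script waiting indefinitely. A minimal sketch of a polling helper with a timeout and a configurable set of terminal statuses is shown below. The function name `wait_for_terminal_status` is hypothetical, and treating `cancelled`/`canceled` as terminal is an assumption about which statuses the service may return, not something confirmed by the documentation linked in this post.

```python
import time
from typing import Callable, Iterable


def wait_for_terminal_status(
    get_status: Callable[[], str],
    # Assumption: these are the statuses that mean "the job will not change any more".
    terminal: Iterable[str] = ("succeeded", "failed", "cancelled", "canceled"),
    interval: float = 2.0,
    timeout: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Call get_status() until it returns a terminal status; raise TimeoutError after `timeout` seconds."""
    terminal_set = set(terminal)
    deadline = clock() + timeout
    status = get_status()
    while status not in terminal_set:
        # Give up instead of polling forever.
        if clock() >= deadline:
            raise TimeoutError(f"Still in status {status!r} after {timeout} s")
        sleep(interval)
        status = get_status()
        print(f"Status: {status}")
    return status
```

With the pre-1.0 `openai` package configured as in the script, the fine-tune wait could become `status = wait_for_terminal_status(lambda: openai.FineTune.retrieve(id=job_id)["status"])`. The `sleep` and `clock` parameters are injected only so the helper can be exercised without real waiting.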

The code is based on the official Microsoft documentation: https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/fine-tuning?pivots=programming-language-python

When I run this script, I get the following error: `openai.error.InvalidRequestError: The specified base model does not support fine-tuning.`

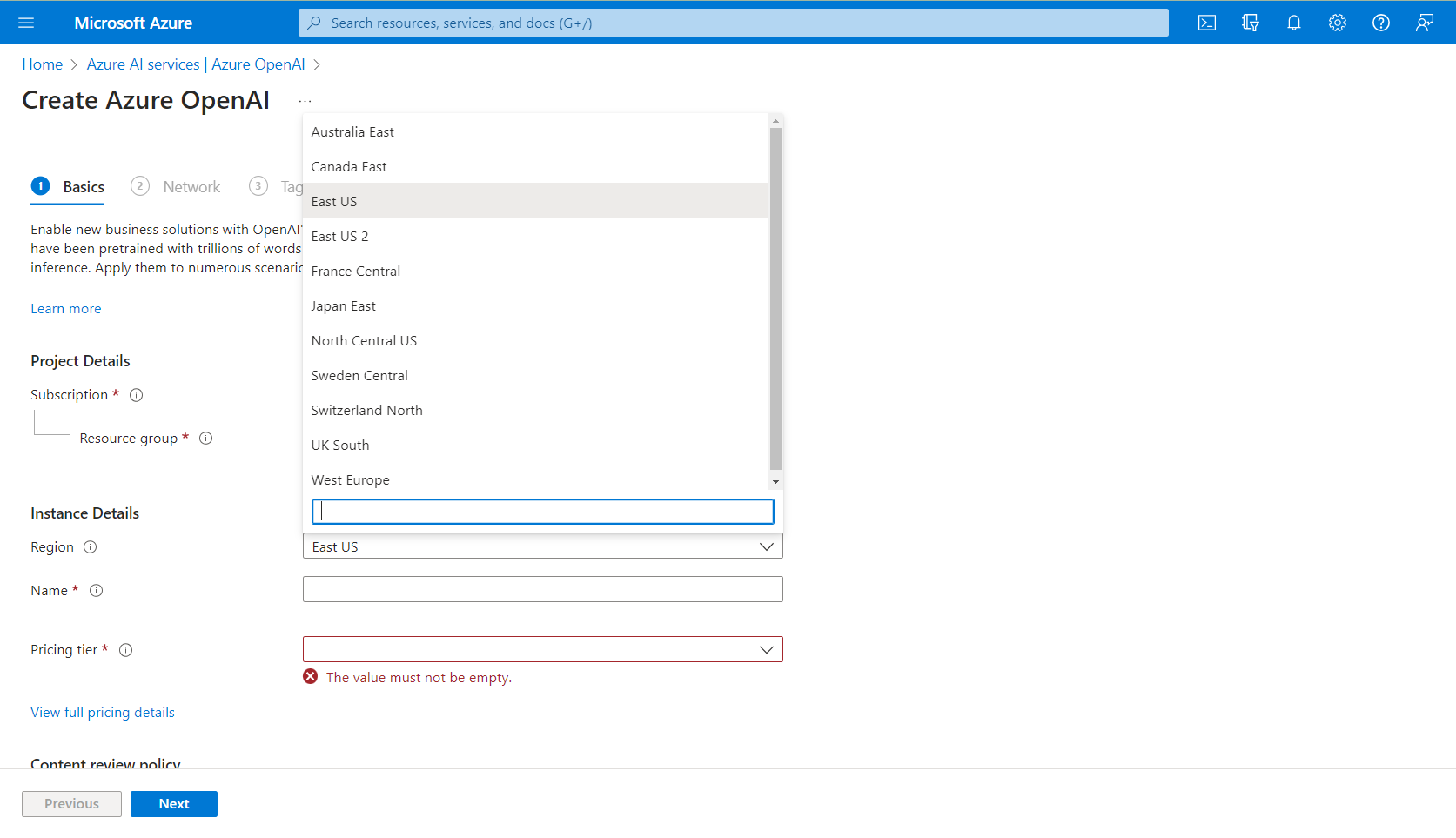
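One way to narrow this error down, independently of the region question, is to check which models the resource itself reports as fine-tunable. The sketch below is a minimal, offline-testable helper. It assumes, without confirmation from this post, that each entry in the models listing returned by the pre-1.0 `openai` package (`openai.Model.list()["data"]`) carries a `capabilities` dictionary with a boolean `fine_tune` key; the helper names `fine_tunable_models` and `supports_fine_tuning` are hypothetical.

```python
from typing import Any, Dict, List


def fine_tunable_models(models: List[Dict[str, Any]]) -> List[str]:
    """Return the IDs of models whose (assumed) capabilities.fine_tune flag is true."""
    return [
        m["id"]
        for m in models
        # Entries without a capabilities block are treated as not fine-tunable.
        if m.get("capabilities", {}).get("fine_tune", False)
    ]


def supports_fine_tuning(models: List[Dict[str, Any]], model_id: str) -> bool:
    """True if model_id appears among the fine-tunable models in the listing."""
    return model_id in fine_tunable_models(models)


# Hypothetical live usage with the script's Azure configuration (not executed here):
#   listing = openai.Model.list()
#   print(fine_tunable_models(listing["data"]))
#   print(supports_fine_tuning(listing["data"], "curie"))
```

If `curie` is missing from the returned list, the error comes from the base model's availability for this resource rather than from the script itself.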

Based on a similar question (https://learn.microsoft.com/en-us/answers/questions/1190892/getting-error-while-finetuning-gpt-3-model-using-a), the problem seems to be related to the region where the OpenAI service is deployed. My Azure OpenAI service is deployed in East US and, as far as I understand, the only region available for fine-tuning is Central US. However, Central US does not appear among the available regions when I try to deploy an OpenAI service.

I also tried "North Central US" and got the same error.

Do you know what could be causing this error?