Monitor Azure Kubernetes Service (AKS)

When you have critical applications and business processes relying on Azure resources, you want to monitor those resources for their availability, performance, and operation. This article describes the monitoring data generated by AKS and analyzed with Azure Monitor. If you're unfamiliar with the features of Azure Monitor common to all Azure services that use it, read Monitoring Azure resources with Azure Monitor.

Important

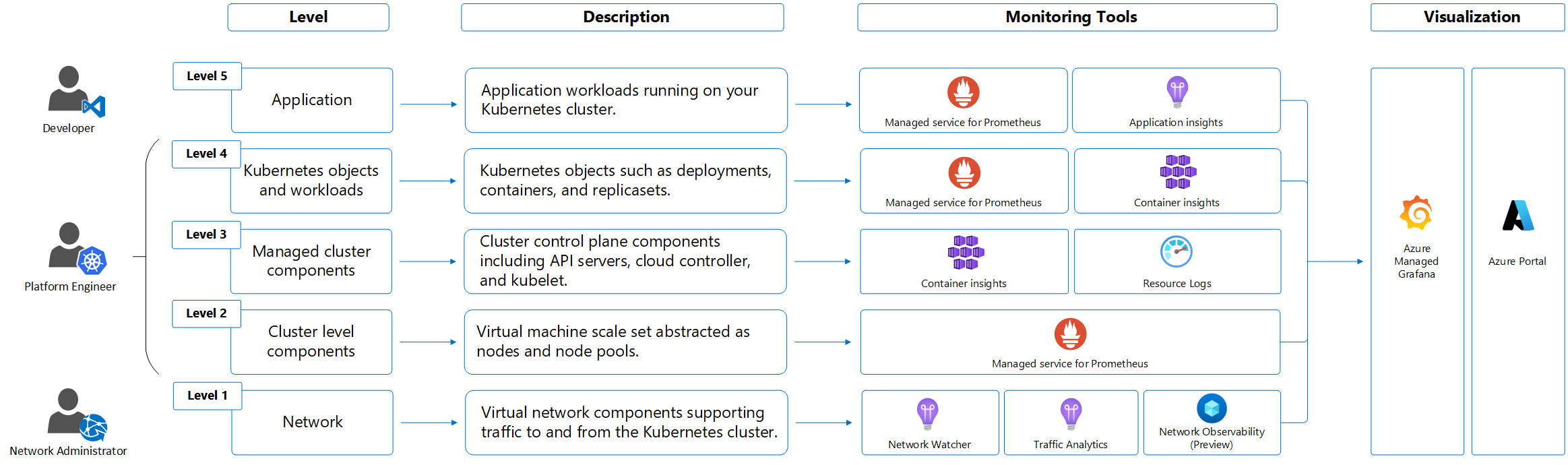

Kubernetes is a complex distributed system with many moving parts, so monitoring at multiple levels is required. Although AKS is a managed Kubernetes service, it needs the same rigor around multi-level monitoring. This article provides high-level information and best practices for monitoring an AKS cluster. See the following articles for more details.

- For detailed monitoring of the complete Kubernetes stack, see Monitor Azure Kubernetes Service (AKS) with Azure Monitor.

- For collecting metric data from Kubernetes clusters, see Azure Monitor managed service for Prometheus.

- For collecting logs in Kubernetes clusters, see Container insights.

- For data visualization, see Azure Workbooks and Azure Managed Grafana.

Monitoring data

AKS generates the same kinds of monitoring data as other Azure resources that are described in Monitoring data from Azure resources. See Monitoring AKS data reference for detailed information on the metrics and logs created by AKS. Other Azure services and features collect other data and enable other analysis options as shown in the following diagram and table.

| Source | Description |

|---|---|

| Platform metrics | Platform metrics are automatically collected for AKS clusters at no cost. You can analyze these metrics with metrics explorer or use them for metric alerts. |

| Prometheus metrics | When you enable metric scraping for your cluster, Prometheus metrics are collected by Azure Monitor managed service for Prometheus and stored in an Azure Monitor workspace. Analyze them with prebuilt dashboards in Azure Managed Grafana and with Prometheus alerts. |

| Activity logs | Activity log is collected automatically for AKS clusters at no cost. These logs track information such as when a cluster is created or has a configuration change. Send the Activity log to a Log Analytics workspace to analyze it with your other log data. |

| Resource logs | Control plane logs for AKS are implemented as resource logs. Create a diagnostic setting to send them to Log Analytics workspace where you can analyze and alert on them with log queries in Log Analytics. |

| Container insights | Container insights collects various logs and performance data from a cluster including stdout/stderr streams and stores them in a Log Analytics workspace and Azure Monitor Metrics. Analyze this data with views and workbooks included with Container insights or with Log Analytics and metrics explorer. |

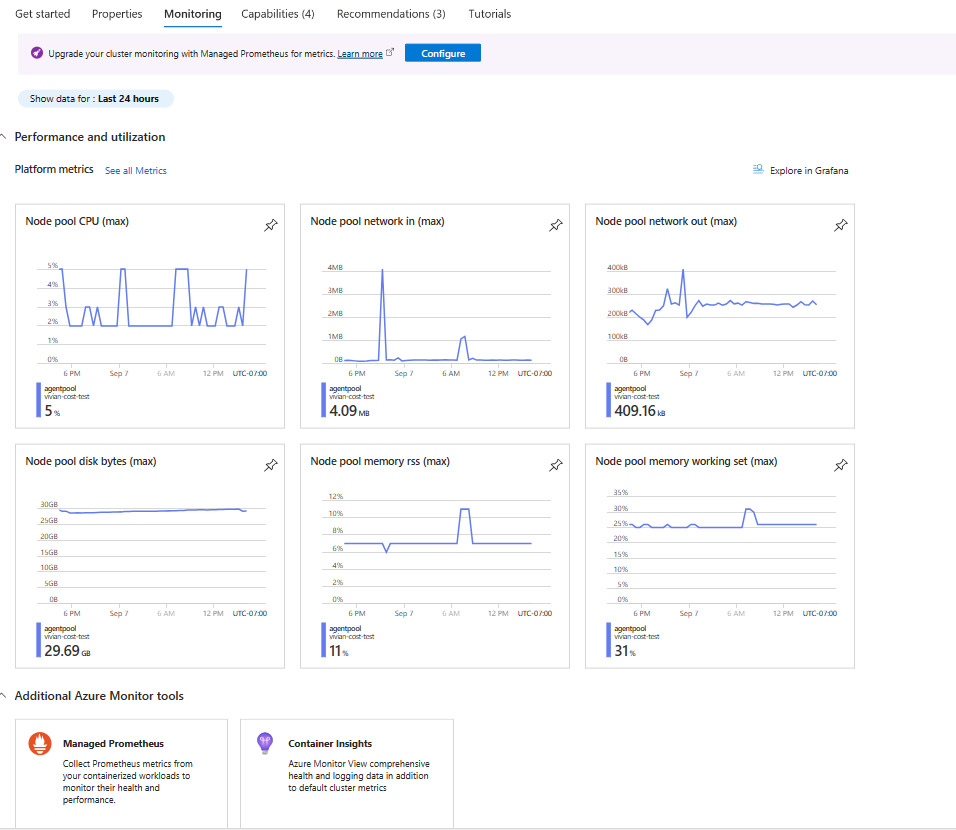

Monitoring overview page in Azure portal

The Monitoring tab on the Overview page offers a quick way to get started viewing monitoring data in the Azure portal for each AKS cluster. This includes graphs with common metrics for the cluster separated by node pool. Click on any of these graphs to further analyze the data in metrics explorer.

The Overview page also includes links to Managed Prometheus and Container insights for the current cluster. If you haven't already enabled these tools, you are prompted to do so. You may also see a banner at the top of the screen recommending that you enable other features to improve monitoring of your cluster.

Tip

Access monitoring features for all AKS clusters in your subscription from the Monitoring menu in the Azure portal, or for a single AKS cluster from the Monitor section of the Kubernetes services menu.

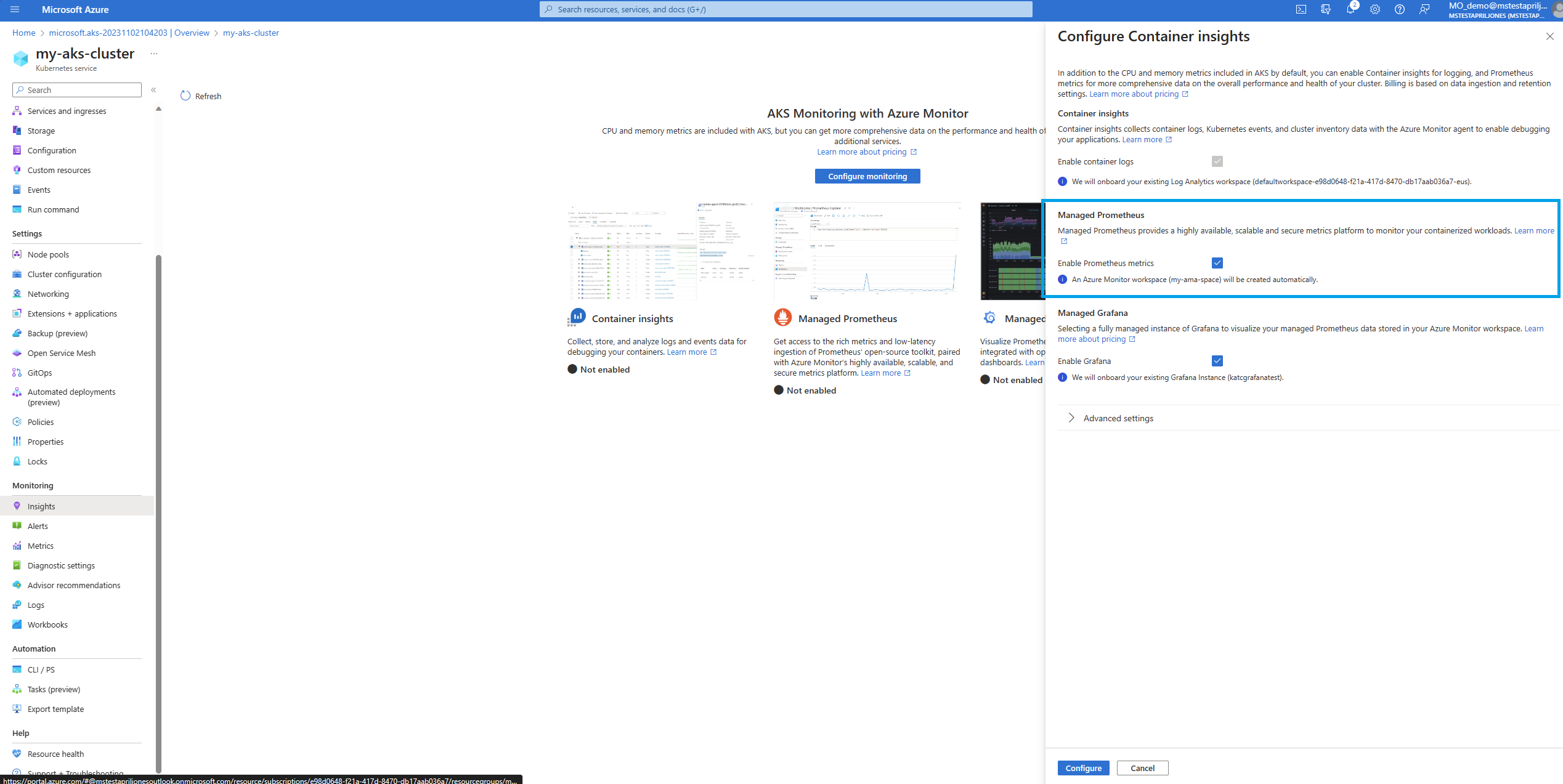

Integrations

The following Azure services and features of Azure Monitor can be used for extra monitoring of your Kubernetes clusters. You can enable these features when you create an AKS cluster, from the Integrations tab in the Azure portal or by using Azure CLI, Terraform, or Azure Policy, or you can onboard your cluster to them later. Each of these features may incur cost, so refer to the pricing information for each before you enable them.

| Service / Feature | Description |

|---|---|

| Container insights | Uses a containerized version of the Azure Monitor agent to collect stdout/stderr logs and Kubernetes events from each node in your cluster, supporting a variety of monitoring scenarios for AKS clusters. You can enable monitoring for an AKS cluster when it's created by using Azure CLI, Azure Policy, Azure portal, or Terraform. If you don't enable Container insights when you create your cluster, see Enable Container insights for Azure Kubernetes Service (AKS) cluster for other options to enable it. Container insights stores most of its data in a Log Analytics workspace, and you'll typically use the same Log Analytics workspace as the resource logs for your cluster. See Design a Log Analytics workspace architecture for guidance on how many workspaces you should use and where to locate them. |

| Azure Monitor managed service for Prometheus | Prometheus is a cloud-native metrics solution from the Cloud Native Computing Foundation and the most common tool used for collecting and analyzing metric data from Kubernetes clusters. Azure Monitor managed service for Prometheus is a fully managed Prometheus-compatible monitoring solution in Azure. If you don't enable managed Prometheus when you create your cluster, see Collect Prometheus metrics from an AKS cluster for other options to enable it. Azure Monitor managed service for Prometheus stores its data in an Azure Monitor workspace, which is linked to a Grafana workspace so that you can analyze the data with Azure Managed Grafana. |

| Azure Managed Grafana | Fully managed implementation of Grafana, which is an open-source data visualization platform commonly used to present Prometheus data. Multiple predefined Grafana dashboards are available for monitoring Kubernetes and full-stack troubleshooting. If you don't enable managed Grafana when you create your cluster, see Link a Grafana workspace for details on linking it to your Azure Monitor workspace so it can access Prometheus metrics for your cluster. |

Metrics

Metrics play an important role in monitoring clusters, identifying issues, and optimizing performance in AKS clusters. Platform metrics are captured using the out-of-the-box metrics server installed in the kube-system namespace, which periodically scrapes metrics from all Kubernetes nodes served by kubelet. You should also enable Azure Managed Prometheus metrics to collect container metrics and Kubernetes object metrics, such as the object state of Deployments. See Collect Prometheus metrics from an AKS cluster to send data to Azure Monitor managed service for Prometheus.

AKS also exposes metrics from critical control plane components, such as the API server, etcd, and the scheduler, through Azure Managed Prometheus. This feature is currently in preview.

Logs

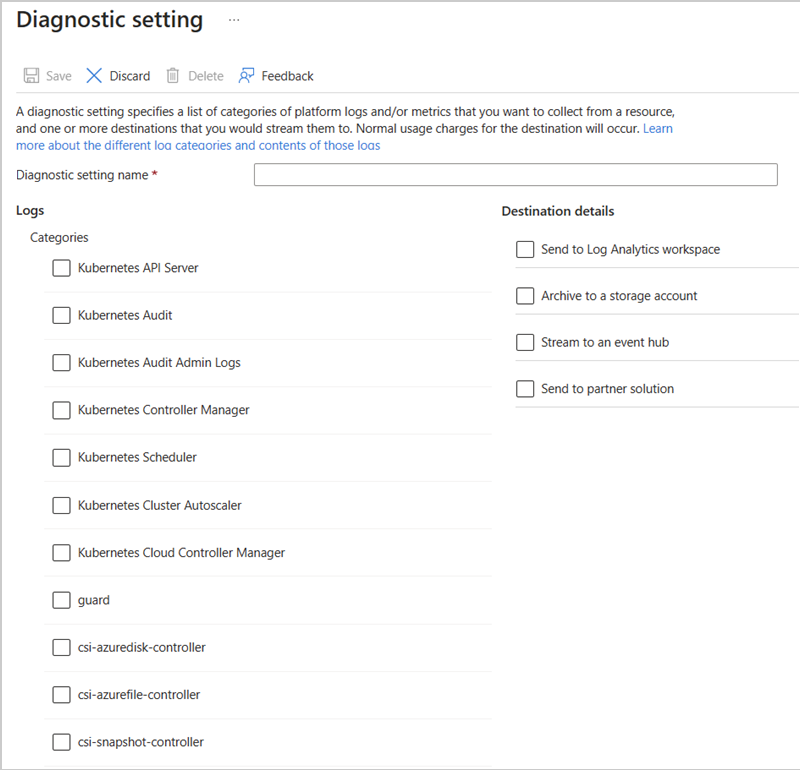

AKS control plane/resource logs

Control plane logs for AKS clusters are implemented as resource logs in Azure Monitor. Resource logs aren't collected and stored until you create a diagnostic setting to route them to one or more locations. You'll typically send them to a Log Analytics workspace, which is where most of the data for Container insights is stored.

See Create diagnostic settings for the detailed process for creating a diagnostic setting using the Azure portal, CLI, or PowerShell. When you create a diagnostic setting, you specify which categories of logs to collect. The categories for AKS are listed in AKS monitoring data reference.

Important

There can be substantial cost when collecting resource logs for AKS, particularly for kube-audit logs. Consider the following recommendations to reduce the amount of data collected:

- Disable kube-audit logging when not required.

- Enable collection from kube-audit-admin, which excludes the get and list audit events.

- Enable resource-specific logs as described below and configure the AKSAudit table as basic logs.

See Monitor Kubernetes clusters using Azure services and cloud native tools for further recommendations and Cost optimization and Azure Monitor for further strategies to reduce your monitoring costs.
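To make the second recommendation concrete, the following Python sketch mimics the effect described above: dropping the get and list audit events and keeping everything else. It is only an illustration of the filtering idea, not how AKS implements kube-audit-admin. The sample events and the `admin_subset` helper are hypothetical.

```python
# Verbs that, per the recommendation above, kube-audit-admin leaves out.
EXCLUDED_VERBS = {"get", "list"}


def admin_subset(events):
    """Keep only the audit events whose verb is not a read (get or list)."""
    return [e for e in events if e.get("verb") not in EXCLUDED_VERBS]


# Hypothetical audit events; real records carry many more fields.
sample_events = [
    {"verb": "get", "user": {"username": "alice"}},
    {"verb": "list", "user": {"username": "bob"}},
    {"verb": "create", "user": {"username": "carol"}},
    {"verb": "delete", "user": {"username": "alice"}},
]

kept = admin_subset(sample_events)
print(f"{len(sample_events)} events in, {len(kept)} events kept")
```

In read-heavy clusters most audit traffic tends to be get and list calls, which is why this category is usually much smaller than the full audit log.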

AKS supports either Azure diagnostics mode or resource-specific mode for resource logs. The mode determines the tables in the Log Analytics workspace where the data is sent. Azure diagnostics mode sends all data to the AzureDiagnostics table, while resource-specific mode sends data to the AKSAudit, AKSAuditAdmin, and AKSControlPlane tables, as shown in the table at Resource logs.

Resource-specific mode is recommended for AKS for the following reasons:

- Data is easier to query because it's in individual tables dedicated to AKS.

- Supports configuration as basic logs for significant cost savings.

For more information on the difference between collection modes including how to change an existing setting, see Select the collection mode.
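As a rough mental model of the two modes, the following Python sketch maps a log category to its destination table. The table names come from this article. The rule that every non-audit category lands in AKSControlPlane is an assumption based on the sample queries in this article, so confirm it against the Resource logs table. The `destination_table` helper is hypothetical.

```python
# Audit categories that have dedicated tables in resource-specific mode.
AUDIT_TABLES = {
    "kube-audit": "AKSAudit",
    "kube-audit-admin": "AKSAuditAdmin",
}


def destination_table(category, resource_specific):
    """Return the Log Analytics table a category is expected to land in."""
    if not resource_specific:
        # Azure diagnostics mode: one shared table for all categories.
        return "AzureDiagnostics"
    # Assumption: all remaining categories go to AKSControlPlane.
    return AUDIT_TABLES.get(category, "AKSControlPlane")


for cat in ("kube-audit", "kube-audit-admin", "kube-apiserver"):
    print(cat, "->", destination_table(cat, resource_specific=True))
```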

Note

The ability to select the collection mode isn't available in the Azure portal in all regions yet. For those regions where it's not yet available, use CLI to create the diagnostic setting with a command such as the following:

az monitor diagnostic-settings create --name AKS-Diagnostics --resource /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/myresourcegroup/providers/Microsoft.ContainerService/managedClusters/my-cluster --logs '[{"category": "kube-audit","enabled": true}, {"category": "kube-audit-admin", "enabled": true}, {"category": "kube-apiserver", "enabled": true}, {"category": "kube-controller-manager", "enabled": true}, {"category": "kube-scheduler", "enabled": true}, {"category": "cluster-autoscaler", "enabled": true}, {"category": "cloud-controller-manager", "enabled": true}, {"category": "guard", "enabled": true}, {"category": "csi-azuredisk-controller", "enabled": true}, {"category": "csi-azurefile-controller", "enabled": true}, {"category": "csi-snapshot-controller", "enabled": true}]' --workspace /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourcegroups/myresourcegroup/providers/microsoft.operationalinsights/workspaces/myworkspace --export-to-resource-specific true
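The JSON passed to `--logs` is easy to mistype by hand. As a minimal sketch, the following Python snippet generates that argument from a list of categories and optionally skips some of them, for example kube-audit when following the cost recommendations above. The category names are taken from the command above. The `logs_argument` helper is hypothetical and is not part of Azure CLI.

```python
import json

# Categories as they appear in the CLI command above.
ALL_CATEGORIES = [
    "kube-audit",
    "kube-audit-admin",
    "kube-apiserver",
    "kube-controller-manager",
    "kube-scheduler",
    "cluster-autoscaler",
    "cloud-controller-manager",
    "guard",
    "csi-azuredisk-controller",
    "csi-azurefile-controller",
    "csi-snapshot-controller",
]


def logs_argument(categories, skip=()):
    """Build the JSON string for --logs, leaving out any skipped categories."""
    settings = [
        {"category": c, "enabled": True} for c in categories if c not in skip
    ]
    return json.dumps(settings)


# Example: collect everything except the full (and costly) kube-audit log.
arg = logs_argument(ALL_CATEGORIES, skip={"kube-audit"})
print(arg)
```

You can paste the printed string, wrapped in single quotes, as the value of `--logs`.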

Sample log queries

Important

When you select Logs from the menu for an AKS cluster, Log Analytics is opened with the query scope set to the current cluster. This means that log queries will only include data from that resource. If you want to run a query that includes data from other clusters or data from other Azure services, select Logs from the Azure Monitor menu. See Log query scope and time range in Azure Monitor Log Analytics for details.

If the diagnostic setting for your cluster uses Azure diagnostics mode, the resource logs for AKS are stored in the AzureDiagnostics table. You can distinguish different logs with the Category column. For a description of each category, see AKS reference resource logs.

| Description | Log query |

|---|---|

| Count logs for each category (Azure diagnostics mode) | `AzureDiagnostics \| where ResourceType == "MANAGEDCLUSTERS" \| summarize count() by Category` |

| All API server logs (Azure diagnostics mode) | `AzureDiagnostics \| where Category == "kube-apiserver"` |

| All kube-audit logs in a time range (Azure diagnostics mode) | `let starttime = datetime("2023-02-23"); let endtime = datetime("2023-02-24"); AzureDiagnostics \| where TimeGenerated between(starttime..endtime) \| where Category == "kube-audit" \| extend event = parse_json(log_s) \| extend HttpMethod = tostring(event.verb) \| extend User = tostring(event.user.username) \| extend Apiserver = pod_s \| extend SourceIP = tostring(event.sourceIPs[0]) \| project TimeGenerated, Category, HttpMethod, User, Apiserver, SourceIP, OperationName, event` |

| All audit logs (resource-specific mode) | `AKSAudit` |

| All audit logs excluding the get and list audit events (resource-specific mode) | `AKSAuditAdmin` |

| All API server logs (resource-specific mode) | `AKSControlPlane \| where Category == "kube-apiserver"` |

To access a set of prebuilt queries in the Log Analytics workspace, see the Log Analytics queries interface and select resource type Kubernetes Services. For a list of common queries for Container insights, see Container insights queries.
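If you export kube-audit records and process them outside Log Analytics, the field extraction in the kube-audit query above can be mirrored in a few lines of Python. This is a sketch under assumptions: the sample record is made up, and only the fields that the query references (verb, user.username, sourceIPs) are assumed to exist in log_s. The `summarize_audit` helper is hypothetical.

```python
import json


def summarize_audit(log_s):
    """Extract the same fields the kube-audit query projects from log_s."""
    event = json.loads(log_s)
    source_ips = event.get("sourceIPs") or [None]
    return {
        "HttpMethod": event.get("verb"),
        "User": (event.get("user") or {}).get("username"),
        "SourceIP": source_ips[0],  # first entry, like event.sourceIPs[0]
    }


# Hypothetical log_s payload; real audit events contain many more fields.
sample_log = json.dumps(
    {
        "verb": "create",
        "user": {"username": "example-user"},
        "sourceIPs": ["10.0.0.4", "10.0.0.5"],
    }
)

row = summarize_audit(sample_log)
print(row)
```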

AKS data plane/Container Insights logs

Container insights collects various types of telemetry data from containers and Kubernetes clusters to help you monitor, troubleshoot, and gain insights into the containerized applications running in your AKS clusters. For a list of the tables used by Container insights and their detailed descriptions, see the Azure Monitor table reference. All these tables are available for log queries.

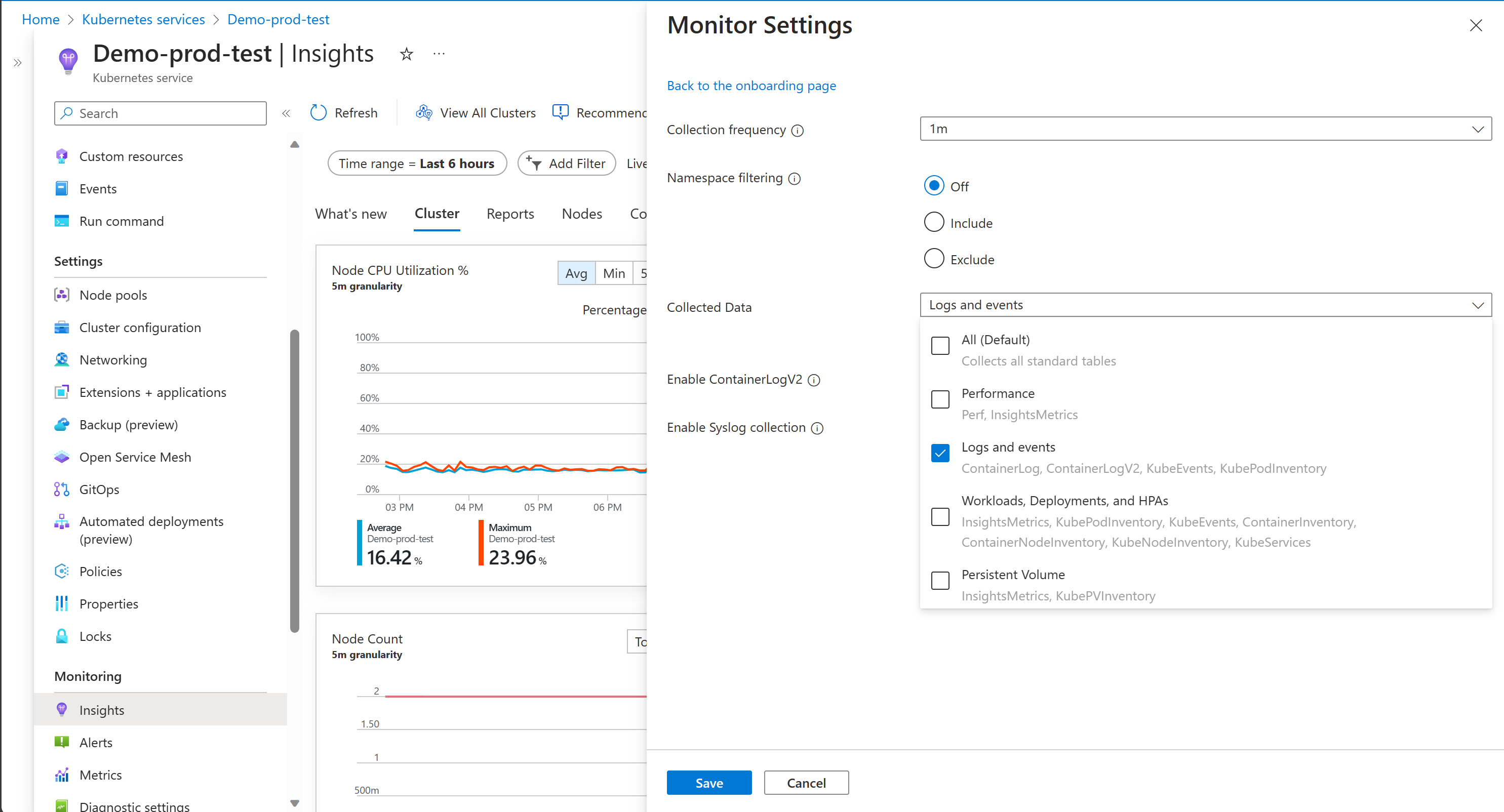

Cost optimization settings allow you to customize and control the metrics data collected through the Container insights agent. This feature supports data collection settings for individual table selection, data collection intervals, and namespaces to exclude from data collection, configured through Azure Monitor data collection rules (DCRs). These settings control the volume of ingestion and reduce the monitoring costs of Container insights. You can customize the data that Container insights collects in the Azure portal by using the following options. Selecting any option other than All (Default) makes the Container insights experience unavailable.

| Grouping | Tables | Notes |

|---|---|---|

| All (Default) | All standard container insights tables | Required for enabling the default container insights visualizations |

| Performance | Perf, InsightsMetrics | |

| Logs and events | ContainerLog or ContainerLogV2, KubeEvents, KubePodInventory | Recommended if you enabled managed Prometheus metrics |

| Workloads, Deployments, and HPAs | InsightsMetrics, KubePodInventory, KubeEvents, ContainerInventory, ContainerNodeInventory, KubeNodeInventory, KubeServices | |

| Persistent Volumes | InsightsMetrics, KubePVInventory | |

The Logs and events grouping captures the logs from the ContainerLog or ContainerLogV2, KubeEvents, and KubePodInventory tables, but not the metrics. The recommended path to collect metrics is to enable Azure Monitor managed service for Prometheus from your AKS cluster and to use Azure Managed Grafana for data visualization. For more information, see Manage an Azure Monitor workspace.
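When choosing a grouping, it can help to check which groupings still populate a table that your queries depend on. The following Python sketch encodes the table above as a dictionary. The All (Default) grouping is left out because the article doesn't enumerate its tables, and the `groupings_with` helper is hypothetical.

```python
# Groupings and tables copied from the table above.
# ContainerLog and ContainerLogV2 are alternatives; both are listed here.
GROUPINGS = {
    "Performance": {"Perf", "InsightsMetrics"},
    "Logs and events": {
        "ContainerLog", "ContainerLogV2", "KubeEvents", "KubePodInventory",
    },
    "Workloads, Deployments, and HPAs": {
        "InsightsMetrics", "KubePodInventory", "KubeEvents",
        "ContainerInventory", "ContainerNodeInventory",
        "KubeNodeInventory", "KubeServices",
    },
    "Persistent Volumes": {"InsightsMetrics", "KubePVInventory"},
}


def groupings_with(table):
    """Return the names of the groupings that collect the given table."""
    return sorted(name for name, tables in GROUPINGS.items() if table in tables)


print(groupings_with("KubePodInventory"))
print(groupings_with("Perf"))
```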

ContainerLogV2 schema

Azure Monitor Container Insights provides a schema for container logs known as ContainerLogV2, which is the recommended option. This format includes the following fields to facilitate common queries for viewing data related to AKS and Azure Arc-enabled Kubernetes clusters:

- ContainerName

- PodName

- PodNamespace

In addition, this schema is compatible with the Basic Logs data plan, which offers a low-cost alternative to standard analytics logs. The Basic Logs data plan lets you save on the cost of ingesting and storing high-volume verbose logs in your Log Analytics workspace for debugging, troubleshooting, and auditing, but not for analytics and alerts. For more information, see Manage tables in a Log Analytics workspace. ContainerLogV2 is the default schema for customers onboarding Container insights with managed identity authentication using ARM, Bicep, Terraform, Policy, or the Azure portal. For more information about how to enable ContainerLogV2 through either the cluster's data collection rule (DCR) or ConfigMap, see Enable the ContainerLogV2 schema.

Visualization

Data visualization is an essential concept that makes it easier for system administrators and operational engineers to consume the collected information. Instead of looking at raw data, they can use visual representations, which quickly display the data and reveal trends that might be hidden when looking at raw data. You can use Grafana Dashboards or native Azure workbooks for data visualization.

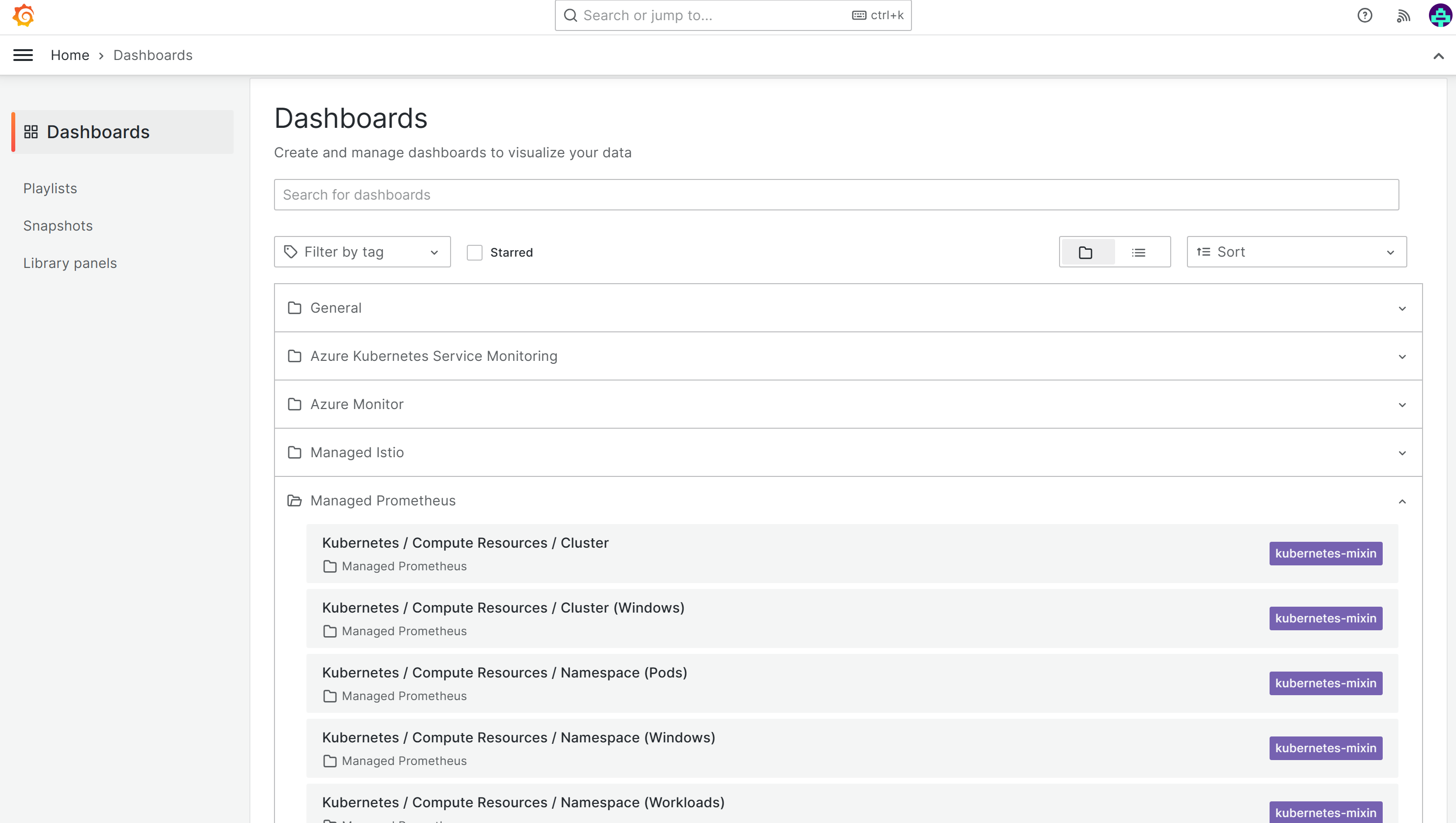

Azure Managed Grafana

The most common way to analyze and present Prometheus data is with a Grafana dashboard. Azure Managed Grafana includes prebuilt dashboards for monitoring Kubernetes clusters, including several that present information similar to the Container insights views. There are also various community-created dashboards to visualize multiple aspects of a Kubernetes cluster from the metrics collected by Prometheus.

Workbooks

Azure Monitor Workbooks is a feature in Azure Monitor that provides a flexible canvas for data analysis and the creation of rich visual reports. Reports in Container insights are the recommended out-of-the-box Azure workbooks. Azure provides built-in workbooks for each service, including Azure Kubernetes Service (AKS), which you can access from the Azure portal. On the Azure Monitor menu in the Azure portal, select Containers. In the Monitoring section, select Insights, choose a particular cluster, and then select the Reports tab. You can also view them from the workbook gallery in Azure Monitor.

For instance, the Cluster Optimization Workbook provides multiple analyzers that each give you a quick view of a different aspect of the health and performance of your Kubernetes cluster. The workbook requires no configuration once Container insights is enabled on the cluster. Its main capabilities include detecting liveness probe failures and their frequencies, identifying and grouping event anomalies that indicate recent increases in event volume, and identifying containers with high or low CPU and memory limits and requests, along with suggested limit and request values for these containers running in your AKS clusters. For more information about these workbooks, see Reports in Container insights.

Alerts

Azure Monitor alerts help you detect and address issues before users notice them by proactively notifying you when data collected by Azure Monitor indicates there might be a problem with your cloud infrastructure or application. You can set alerts on metrics, logs, and the activity log. Different types of alerts have benefits and drawbacks.

There are two types of metric rules used by Container insights based on either Prometheus metrics or platform metrics.

Prometheus metrics based alerts

When you enable collection of Prometheus metrics for your cluster, you can download a collection of recommended Prometheus alert rules. This collection includes the following rules:

| Level | Alerts |

|---|---|

| Pod level | KubePodCrashLooping, Job didn't complete in time, Pod container restarted in last 1 hour, Ready state of pods is less than 80%, Number of pods in failed state are greater than 0, KubePodNotReadyByController, KubeStatefulSetGenerationMismatch, KubeJobNotCompleted, KubeJobFailed, Average CPU usage per container is greater than 95%, Average Memory usage per container is greater than 95%, KubeletPodStartUpLatencyHigh |

| Cluster level | Average PV usage is greater than 80%, KubeDeploymentReplicasMismatch, KubeStatefulSetReplicasMismatch, KubeHpaReplicasMismatch, KubeHpaMaxedOut, KubeCPUQuotaOvercommit, KubeMemoryQuotaOvercommit, KubeVersionMismatch, KubeClientErrors, CPUThrottlingHigh, KubePersistentVolumeFillingUp, KubePersistentVolumeInodesFillingUp, KubePersistentVolumeErrors |

| Node level | Average node CPU utilization is greater than 80%, Working set memory for a node is greater than 80%, Number of OOM killed containers is greater than 0, KubeNodeUnreachable, KubeNodeNotReady, KubeNodeReadinessFlapping, KubeContainerWaiting, KubeDaemonSetNotScheduled, KubeDaemonSetMisScheduled, KubeletPlegDurationHigh, KubeletServerCertificateExpiration, KubeletClientCertificateRenewalErrors, KubeletServerCertificateRenewalErrors, KubeQuotaAlmostFull, KubeQuotaFullyUsed, KubeQuotaExceeded |

Platform metric based alerts

The following table lists the recommended metric alert rules for AKS clusters. These alerts are based on platform metrics for the cluster.

| Condition | Description |

|---|---|

| CPU Usage Percentage > 95 | Fires when the average CPU usage across all nodes exceeds the threshold. |

| Memory Working Set Percentage > 100 | Fires when the average working set across all nodes exceeds the threshold. |
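The conditions in the table above compare an average across all nodes with a fixed threshold. The following Python sketch shows that comparison for illustration only. It doesn't call Azure Monitor, the per-node values are made up, and the `alert_fires` helper is hypothetical.

```python
def alert_fires(per_node_values, threshold):
    """Return True when the average across all nodes exceeds the threshold."""
    if not per_node_values:
        raise ValueError("at least one node value is required")
    average = sum(per_node_values) / len(per_node_values)
    return average > threshold


# Hypothetical CPU usage percentages for a three-node cluster.
busy_cluster = [99.0, 97.0, 92.0]   # average is 96.0
mixed_cluster = [99.0, 80.0, 90.0]  # average is below 95

print(alert_fires(busy_cluster, threshold=95))
print(alert_fires(mixed_cluster, threshold=95))
```

Because the condition uses the average, a single saturated node doesn't trigger the alert if the other nodes are idle. The node-level Prometheus rules listed earlier cover that case.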

Log based alerts

Log alerts allow you to alert on your data plane and control plane logs. Run queries at predefined intervals and create an alert based on the results. You may check for the count of certain records or perform calculations based on numeric columns.

See How to create log alerts from Container Insights and How to query logs from Container Insights. Log alerts can measure two different things, which suit different monitoring scenarios:

- Result count: Counts the number of rows returned by the query and can be used to work with events such as Windows event logs, Syslog, and application exceptions.

- Calculation of a value: Makes a calculation based on a numeric column and can be used to include any number of resources. An example is CPU percentage.

Depending on the alerting scenario, log queries need to compare a DateTime value to the present time, for example by using the now() function and going back one hour. To learn how to build log-based alerts, see Create log alerts from Container insights.
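The two measurement types and the one-hour lookback can be sketched in plain Python as follows. This only illustrates the logic and is not how Azure Monitor evaluates alert rules. The rows, the `CpuPercent` column name, and the helper functions are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def recent(rows, now, window=timedelta(hours=1)):
    """Keep rows whose TimeGenerated falls within the lookback window."""
    return [r for r in rows if now - window <= r["TimeGenerated"] <= now]


def result_count(rows):
    """Result count measurement: the number of rows returned."""
    return len(rows)


def average_value(rows, column):
    """Calculation of a value: the average of a numeric column."""
    values = [r[column] for r in rows]
    return sum(values) / len(values) if values else None


# A fixed "now" keeps the example reproducible.
now = datetime(2023, 2, 24, 12, 0, tzinfo=timezone.utc)
rows = [
    {"TimeGenerated": now - timedelta(minutes=5), "CpuPercent": 90.0},
    {"TimeGenerated": now - timedelta(minutes=30), "CpuPercent": 70.0},
    {"TimeGenerated": now - timedelta(hours=2), "CpuPercent": 10.0},
]

window_rows = recent(rows, now)
print(result_count(window_rows))
print(average_value(window_rows, "CpuPercent"))
```

The row from two hours ago falls outside the window, so it affects neither measurement.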

Network Observability

Network observability is an important part of maintaining a healthy and performant Kubernetes cluster. By collecting and analyzing data about network traffic, you can gain insights into how your cluster is operating and identify potential problems before they cause outages or performance degradation.

When the Network Observability add-on is enabled, it collects useful metrics and converts them into Prometheus format, and the collected metrics are automatically ingested into Azure Monitor managed service for Prometheus. A Grafana dashboard is available in the Grafana public dashboard repo to visualize the network observability metrics collected by Prometheus. For detailed instructions, see Network Observability setup.

Next steps

- See Monitoring AKS data reference for a reference of the metrics, logs, and other important values created by AKS.
