Vector storage in Azure AI Search

Azure AI Search provides vector storage and configurations for vector search and hybrid search. Support is implemented at the field level, which means you can combine vector and nonvector fields in the same search corpus.

Vectors are stored in a search index. Use the Create Index REST API or an equivalent Azure SDK method to create the vector store.

Considerations for vector storage include the following points:

- Design a schema to fit your use case based on the intended vector retrieval pattern.

- Estimate index size and check search service capacity.

- Manage a vector store.

- Secure a vector store.

Vector retrieval patterns

In Azure AI Search, there are two patterns for working with search results.

Generative search. Language models formulate a response to the user's query using data from Azure AI Search. This pattern includes an orchestration layer to coordinate prompts and maintain context. Search results are fed into prompt flows, which pass them to chat models like GPT and Text-Davinci. This approach is based on retrieval-augmented generation (RAG) architecture, where the search index provides the grounding data.
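A minimal sketch of the grounding step in this pattern, assuming search results arrive as a list of dictionaries with `title` and `chunk` keys. The function name `build_grounded_prompt` and the prompt wording are hypothetical, and the call to the chat model itself is left out.

```python
def build_grounded_prompt(question, results, max_sources=3):
    """Format the top search results as numbered sources ahead of the question."""
    sources = []
    for i, doc in enumerate(results[:max_sources], start=1):
        sources.append(f"[{i}] {doc['title']}: {doc['chunk']}")
    context = "\n".join(sources)
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical rows, shaped like results returned from a search index.
results = [
    {"title": "Benefits guide", "chunk": "Employees accrue 15 vacation days per year."},
    {"title": "HR FAQ", "chunk": "Unused vacation days roll over once."},
]
prompt = build_grounded_prompt("How many vacation days do I get?", results)
```

An orchestration layer would send `prompt` to the chat model and keep the conversation history alongside it.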

Classic search using a search bar, query input string, and rendered results. The search engine accepts and executes the vector query, formulates a response, and you render those results in a client app. In Azure AI Search, results are returned in a flattened row set, and you can choose which fields to include in search results. Because there's no chat model, you're expected to populate the vector store (search index) with human-readable nonvector content for the response. Although the search engine matches on vectors, you should use nonvector values to populate the search results. Vector queries and hybrid queries cover the types of query requests you can formulate for classic search scenarios.
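As an illustration of a hybrid request for classic search, the sketch below builds a query body that matches on a vector field but returns only human-readable fields. The field names `id`, `content`, and `content_vector` are hypothetical, and the property names (`search`, `vectorQueries`, `select`, `top`) reflect the Search Documents REST API as we understand it, so check them against the API version you target.

```python
import json


def build_hybrid_query(text, query_vector, k=5):
    """Build a request body that combines a keyword query with a vector query."""
    return {
        "search": text,                      # keyword part of the hybrid query
        "vectorQueries": [
            {
                "kind": "vector",
                "vector": query_vector,      # embedding of the same query text
                "fields": "content_vector",  # vector field to match against
                "k": k,
            }
        ],
        "select": "id,content",              # return human-readable fields only
        "top": k,
    }


# A 3-dimension vector keeps the example short; real embeddings are much longer.
body = build_hybrid_query("historic hotels", [0.01, -0.02, 0.03])
payload = json.dumps(body)
```

Omitting the `search` property would turn the same body into a pure vector query.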

Your index schema should reflect your primary use case. The following section highlights the differences in field composition for solutions built for generative AI or classic search.

Schema of a vector store

An index schema for a vector store requires a name, a key field (string), one or more vector fields, and a vector configuration. Nonvector fields are recommended for hybrid queries, or for returning verbatim human readable content that doesn't have to go through a language model. For instructions about vector configuration, see Create a vector store.
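The requirements above can be sketched as a simple check over an index definition held as a Python dictionary. The helper `missing_requirements` is hypothetical, the names `my-hnsw` and `my-vector-profile` are placeholders, and the shape of the `vectorSearch` section is an assumption based on the Create Index REST API.

```python
def missing_requirements(index):
    """Return the vector store requirements that the index definition lacks."""
    problems = []
    fields = index.get("fields", [])
    if not index.get("name"):
        problems.append("index name")
    if not any(f.get("key") and f.get("type") == "Edm.String" for f in fields):
        problems.append("string key field")
    if not any("dimensions" in f for f in fields):
        problems.append("vector field")
    if not index.get("vectorSearch"):
        problems.append("vector configuration")
    return problems


index = {
    "name": "example-basic-vector-idx",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True},
        {"name": "content_vector", "type": "Collection(Edm.Single)",
         "searchable": True, "retrievable": True, "dimensions": 1536,
         "vectorSearchProfile": "my-vector-profile"},
    ],
    # Assumed shape: a profile points at a named algorithm configuration.
    "vectorSearch": {
        "algorithms": [{"name": "my-hnsw", "kind": "hnsw"}],
        "profiles": [{"name": "my-vector-profile", "algorithm": "my-hnsw"}],
    },
}
```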

Basic vector field configuration

Vector fields are distinguished by their data type and vector-specific properties. Here's what a vector field looks like in a fields collection:

{

"name": "content_vector",

"type": "Collection(Edm.Single)",

"searchable": true,

"retrievable": true,

"dimensions": 1536,

"vectorSearchProfile": "my-vector-profile"

}

Vector fields have specific data types. Currently, Collection(Edm.Single) is the most common, but using narrow data types can save on storage.

Vector fields must be searchable and retrievable, but they can't be filterable, facetable, or sortable, or have analyzers, normalizers, or synonym map assignments.

Vector fields must have dimensions set to the number of dimensions (the length of the embedding vector) produced by the embedding model. For example, text-embedding-ada-002 generates a 1,536-dimension embedding for each chunk of text.

Vector fields are indexed using algorithms indicated by a vector search profile, which is defined elsewhere in the index and thus not shown in the example. For more information, see vector search configuration.
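The rules above lend themselves to a quick pre-flight check before you submit a schema. This is a sketch only: `check_vector_field` and `check_embedding` are hypothetical helpers, not part of any SDK, and they encode just the constraints stated in this section.

```python
NOT_ALLOWED = ("filterable", "facetable", "sortable")
NOT_SUPPORTED = ("analyzer", "normalizer", "synonymMaps")


def check_vector_field(field, profile_names):
    """Return a list of problems found in a vector field definition."""
    problems = []
    for attr in ("searchable", "retrievable"):
        if field.get(attr) is not True:
            problems.append(f"{attr} must be true")
    for attr in NOT_ALLOWED + NOT_SUPPORTED:
        if field.get(attr):
            problems.append(f"{attr} must not be set")
    dims = field.get("dimensions")
    if not isinstance(dims, int) or dims <= 0:
        problems.append("dimensions must be a positive integer")
    if field.get("vectorSearchProfile") not in profile_names:
        problems.append("vectorSearchProfile must name a defined profile")
    return problems


def check_embedding(field, embedding):
    """True when the embedding length matches the field's dimensions."""
    return len(embedding) == field["dimensions"]


field = {
    "name": "content_vector",
    "type": "Collection(Edm.Single)",
    "searchable": True,
    "retrievable": True,
    "dimensions": 1536,
    "vectorSearchProfile": "my-vector-profile",
}
issues = check_vector_field(field, {"my-vector-profile"})
```

Checking the embedding length at indexing time catches a mismatch between the embedding model and the `dimensions` value before the service rejects the document.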

Fields collection for basic vector workloads

Vector stores require other fields in addition to vector fields. For example, a key field ("id" in this example) is an index requirement.

"name": "example-basic-vector-idx",

"fields": [

{ "name": "id", "type": "Edm.String", "searchable": false, "filterable": true, "retrievable": true, "key": true },

{ "name": "content_vector", "type": "Collection(Edm.Single)", "searchable": true, "retrievable": true, "dimensions": 1536, "vectorSearchProfile": null },

{ "name": "content", "type": "Edm.String", "searchable": true, "retrievable": true, "analyzer": null },

{ "name": "metadata", "type": "Edm.String", "searchable": true, "filterable": true, "retrievable": true, "sortable": true, "facetable": true }

]

Other fields, such as the "content" field, provide the human readable equivalent of the "content_vector" field. If you're using language models exclusively for response formulation, you can omit nonvector content fields, but solutions that push search results directly to client apps should have nonvector content.

Metadata fields are useful for filters, especially if metadata includes origin information about the source document. You can't filter on a vector field directly, but you can set prefilter or postfilter modes to filter before or after vector query execution.
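To illustrate filtering around a vector query, the following sketch builds a request body with an OData filter on a nonvector field and an explicit filter mode. The field names are hypothetical, and the `vectorFilterMode` values `preFilter` and `postFilter` are taken from the Search Documents REST API as we understand it.

```python
def build_filtered_vector_query(query_vector, odata_filter, mode="preFilter", k=5):
    """Build a vector query body that filters on a nonvector field."""
    if mode not in ("preFilter", "postFilter"):
        raise ValueError("mode must be 'preFilter' or 'postFilter'")
    return {
        "vectorQueries": [
            {"kind": "vector", "vector": query_vector,
             "fields": "content_vector", "k": k}
        ],
        "filter": odata_filter,       # applies to filterable nonvector fields
        "vectorFilterMode": mode,     # filter before or after vector matching
        "select": "id,content,metadata",
    }


# Hypothetical filter: restrict matches to chunks from one source document.
body = build_filtered_vector_query(
    [0.1, 0.2, 0.3], "metadata eq 'annual-report.pdf'"
)
```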

Schema generated by the Import and vectorize data wizard

We recommend the Import and vectorize data wizard for evaluation and proof-of-concept testing. The wizard generates the example schema in this section.

The bias of this schema is that search documents are built around data chunks. If a language model formulates the response, as is typical for RAG apps, you want a schema designed around data chunks.

Data chunking is necessary for staying within the input limits of language models, but it also improves precision in similarity search when queries can be matched against smaller chunks of content pulled from multiple parent documents. Finally, if you're using semantic ranker, the semantic ranker also has token limits, which are more easily met if data chunking is part of your approach.
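A minimal sketch of chunking, assuming fixed-size character windows with overlap. This is not the wizard's algorithm; production pipelines often split on sentence or token boundaries instead, and the sizes here are arbitrary.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into fixed-size character chunks that overlap their neighbors."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# Synthetic 500-character input so the chunk boundaries are easy to inspect.
text = "".join(chr(97 + i % 26) for i in range(500))
chunks = chunk_text(text, chunk_size=200, overlap=50)
```

The overlap repeats the tail of one chunk at the head of the next, which helps preserve context that would otherwise be cut at a boundary.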

In the following example, each search document has one chunk ID, parent ID, chunk, title, and vector field. The chunk ID and parent ID are populated by the wizard, using base64 encoding of blob metadata (path). Chunk and title are derived from blob content and blob name. Only the vector field is fully generated. It's the vectorized version of the chunk field. Embeddings are generated by calling an Azure OpenAI embedding model that you provide.
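To show why base64 encoding suits document keys, the sketch below encodes a blob path with the URL-safe alphabet and derives a chunk ID from it. The ID layout in `make_chunk_id` is hypothetical and does not reproduce the exact format the wizard generates.

```python
import base64


def encode_key(path):
    """Encode a blob path as URL-safe base64 so it contains only key-safe characters."""
    return base64.urlsafe_b64encode(path.encode("utf-8")).decode("ascii")


def decode_key(key):
    """Recover the original blob path from an encoded key."""
    return base64.urlsafe_b64decode(key.encode("ascii")).decode("utf-8")


def make_chunk_id(parent_id, chunk_number):
    """Hypothetical layout: parent key plus the chunk's position in the document."""
    return f"{parent_id}_chunk_{chunk_number}"


# Hypothetical blob path.
path = "https://example.blob.core.windows.net/docs/report.pdf"
parent_id = encode_key(path)
chunk_id = make_chunk_id(parent_id, 0)
```

Because the encoding is reversible, the parent ID can be decoded back to the source path when you need to cite the original document.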

"name": "example-index-from-import-wizard",

"fields": [

{"name": "chunk_id", "type": "Edm.String", "key": true, "searchable": true, "filterable": true, "retrievable": true, "sortable": true, "facetable": true, "analyzer": "keyword"},

{ "name": "parent_id", "type": "Edm.String", "searchable": true, "filterable": true, "retrievable": true, "sortable": true},

{ "name": "chunk", "type": "Edm.String", "searchable": true, "filterable": false, "retrievable": true, "sortable": false},

{ "name": "title", "type": "Edm.String", "searchable": true, "filterable": true, "retrievable": true, "sortable": false},

{ "name": "vector", "type": "Collection(Edm.Single)", "searchable": true, "retrievable": true, "dimensions": 1536, "vectorSearchProfile": "vector-1707768500058-profile"}

]

Schema for RAG and chat-style apps

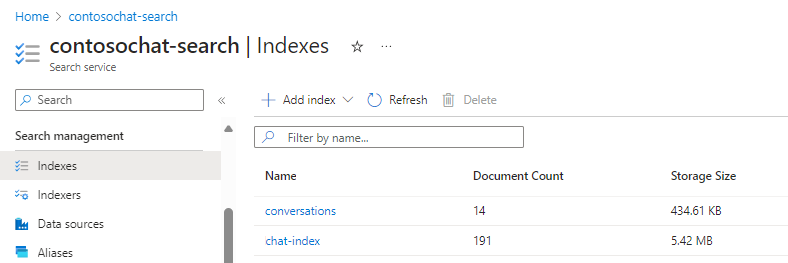

If you're designing storage for generative search, you can create separate indexes: one for the static content that you indexed and vectorized, and a second for conversations that can be used in prompt flows. The following indexes are created from the chat-with-your-data-solution-accelerator.

Fields from the chat index that support the generative search experience:

"name": "example-index-from-accelerator",

"fields": [

{ "name": "id", "type": "Edm.String", "searchable": false, "filterable": true, "retrievable": true },

{ "name": "content", "type": "Edm.String", "searchable": true, "filterable": false, "retrievable": true },

{ "name": "content_vector", "type": "Collection(Edm.Single)", "searchable": true, "retrievable": true, "dimensions": 1536, "vectorSearchProfile": "my-vector-profile"},

{ "name": "metadata", "type": "Edm.String", "searchable": true, "filterable": false, "retrievable": true },

{ "name": "title", "type": "Edm.String", "searchable": true, "filterable": true, "retrievable": true, "facetable": true },

{ "name": "source", "type": "Edm.String", "searchable": true, "filterable": true, "retrievable": true },

{ "name": "chunk", "type": "Edm.Int32", "searchable": false, "filterable": true, "retrievable": true },

{ "name": "offset", "type": "Edm.Int32", "searchable": false, "filterable": true, "retrievable": true }

]

Fields from the conversations index that support orchestration and chat history:

"fields": [

{ "name": "id", "type": "Edm.String", "key": true, "searchable": false, "filterable": true, "retrievable": true, "sortable": false, "facetable": false },

{ "name": "conversation_id", "type": "Edm.String", "searchable": false, "filterable": true, "retrievable": true, "sortable": false, "facetable": true },

{ "name": "content", "type": "Edm.String", "searchable": true, "filterable": false, "retrievable": true },

{ "name": "content_vector", "type": "Collection(Edm.Single)", "searchable": true, "retrievable": true, "dimensions": 1536, "vectorSearchProfile": "default-profile" },

{ "name": "metadata", "type": "Edm.String", "searchable": true, "filterable": false, "retrievable": true },

{ "name": "type", "type": "Edm.String", "searchable": false, "filterable": true, "retrievable": true, "sortable": false, "facetable": true },

{ "name": "user_id", "type": "Edm.String", "searchable": false, "filterable": true, "retrievable": true, "sortable": false, "facetable": true },

{ "name": "sources", "type": "Collection(Edm.String)", "searchable": false, "filterable": true, "retrievable": true, "sortable": false, "facetable": true },

{ "name": "created_at", "type": "Edm.DateTimeOffset", "searchable": false, "filterable": true, "retrievable": true },

{ "name": "updated_at", "type": "Edm.DateTimeOffset", "searchable": false, "filterable": true, "retrievable": true }

]

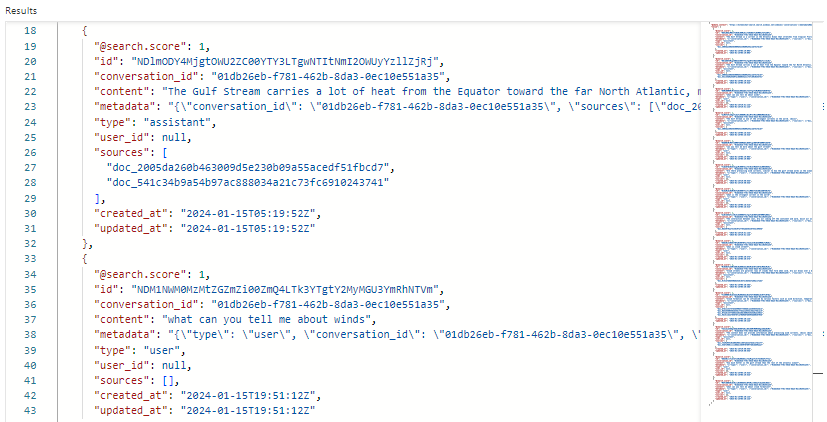

Here's a screenshot showing search results in Search Explorer for the conversations index. The search score is 1.00 because the search was unqualified. Notice the fields that exist to support orchestration and prompt flows. A conversation ID identifies a specific chat. "type" indicates whether the content is from the user or the assistant. Dates are used to age out chats from the history.
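As a sketch of how these fields might be used together, the following helper builds an OData filter that selects recent messages from one conversation. The function name and the 30-day window are hypothetical; the field names come from the conversations index above.

```python
from datetime import datetime, timedelta, timezone


def conversation_filter(conversation_id, max_age_days, now=None):
    """Build an OData filter for one conversation, limited to recent messages."""
    now = now or datetime.now(timezone.utc)
    cutoff = (now - timedelta(days=max_age_days)).strftime("%Y-%m-%dT%H:%M:%SZ")
    safe_id = conversation_id.replace("'", "''")  # escape single quotes for OData
    return f"conversation_id eq '{safe_id}' and created_at ge {cutoff}"


# A fixed "now" keeps the example deterministic.
fixed_now = datetime(2024, 5, 31, 12, 0, 0, tzinfo=timezone.utc)
odata = conversation_filter("abc-123", max_age_days=30, now=fixed_now)
```

Inverting the comparison (`lt` instead of `ge`) would select the older messages that are candidates for removal from the history.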

Physical structure and size

In Azure AI Search, the physical structure of an index is largely an internal implementation. You can access its schema, load and query its content, monitor its size, and manage capacity, but the clusters themselves (inverted and vector indexes), and other files and folders are managed internally by Microsoft.

The size and substance of an index are determined by:

- Quantity and composition of your documents

- Attributes on individual fields. For example, more storage is required for filterable fields.

- Index configuration, including vector configuration that specifies how the internal navigation structures are created based on whether you choose HNSW or exhaustive KNN for similarity search.

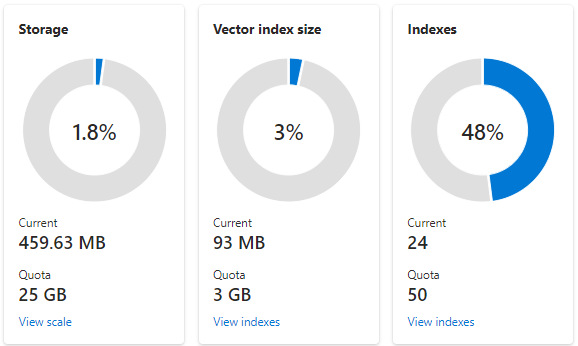

Azure AI Search imposes limits on vector storage, which helps maintain a balanced and stable system for all workloads. To help you stay under the limits, vector usage is tracked and reported separately in the Azure portal, and programmatically through service and index statistics.
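For programmatic tracking, a sketch like the following builds the statistics URLs and computes how much of an index's storage is taken by vector indexes. The API version is an assumption, the sample numbers are made up, and the response property names (`storageSize`, `vectorIndexSize`) reflect the Get Index Statistics response as we understand it. No request is sent here.

```python
from urllib.parse import quote

API_VERSION = "2024-07-01"  # assumption: substitute the version you target


def service_stats_url(service):
    """URL for service-level statistics, including quotas and usage."""
    return f"https://{service}.search.windows.net/servicestats?api-version={API_VERSION}"


def index_stats_url(service, index):
    """URL for statistics on a single index."""
    return (f"https://{service}.search.windows.net/indexes/"
            f"{quote(index)}/stats?api-version={API_VERSION}")


def vector_share(index_stats):
    """Fraction of the index's storage consumed by vector indexes."""
    return index_stats["vectorIndexSize"] / index_stats["storageSize"]


# Hypothetical response body, sizes in bytes.
sample = {"documentCount": 1000, "storageSize": 20_000_000, "vectorIndexSize": 5_000_000}
share = vector_share(sample)
```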

The following screenshot shows an S1 service configured with one partition and one replica. This particular service has 24 small indexes, with one vector field on average, each field holding 1,536-dimension embeddings. The second tile shows the quota and usage for vector indexes. A vector index is an internal data structure created for each vector field. As such, storage for vector indexes is always a fraction of the storage used by the index overall. Other nonvector fields and data structures consume the rest.
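A rough estimate of raw vector data follows from document count, dimensions, and bytes per dimension. The sketch below assumes the per-type byte widths shown and deliberately excludes algorithm overhead (for example, the HNSW graph), so treat the result as a lower bound rather than a quota calculation.

```python
# Bytes per dimension for each vector field type (narrow types use fewer bytes).
BYTES_PER_DIMENSION = {
    "Collection(Edm.Single)": 4,
    "Collection(Edm.Half)": 2,
    "Collection(Edm.Int16)": 2,
    "Collection(Edm.SByte)": 1,
}


def raw_vector_bytes(doc_count, dimensions, field_type="Collection(Edm.Single)"):
    """Raw size of the vector data for one field, excluding index overhead."""
    return doc_count * dimensions * BYTES_PER_DIMENSION[field_type]


# One million chunks embedded at 1,536 dimensions.
single = raw_vector_bytes(1_000_000, 1536)
half = raw_vector_bytes(1_000_000, 1536, "Collection(Edm.Half)")
```

In this example, the same one million vectors need about 6.1 GB as `Collection(Edm.Single)` and about 3.1 GB as `Collection(Edm.Half)`.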

Vector index limits and estimations are covered in another article, but two points to emphasize up front are that maximum storage varies by service tier, and also by when the search service was created. Newer same-tier services have significantly more capacity for vector indexes. For these reasons, take the following actions:

- Check the deployment date of your search service. If it was created before April 3, 2024, consider creating a new search service for greater capacity.

- Choose a scalable tier if you anticipate fluctuations in vector storage requirements. The Basic tier is fixed at one partition on older search services. Consider Standard 1 (S1) and above for more flexibility and faster performance, or create a new search service that uses higher limits and more partitions at every billable tier.

Basic operations and interaction

This section introduces vector runtime operations, including connecting to and securing a single index.

Note

When managing an index, be aware that there is no portal or API support for moving or copying an index. Instead, customers typically point their application deployment solution at a different search service (if using the same index name), or revise the name to create a copy on the current search service, and then build it.

Continuously available

An index is immediately available for queries as soon as the first document is indexed, but won't be fully operational until all documents are indexed. Internally, an index is distributed across partitions and executes on replicas. The physical index is managed internally. The logical index is managed by you.

An index is continuously available, with no ability to pause or take it offline. Because it's designed for continuous operation, any updates to its content, or additions to the index itself, happen in real time. As a result, queries might temporarily return incomplete results if a request coincides with a document update.

Notice that query continuity exists for document operations (refreshing or deleting) and for modifications that don't affect the existing structure and integrity of the current index (such as adding new fields). If you need to make structural updates (changing existing fields), those are typically managed using a drop-and-rebuild workflow in a development environment, or by creating a new version of the index on a production service.

To avoid an index rebuild, some customers who are making small changes choose to "version" a field by creating a new one that coexists alongside a previous version. Over time, this leads to orphaned content in the form of obsolete fields or obsolete custom analyzer definitions, especially in a production index that is expensive to replicate. You can address these issues on planned updates to the index as part of index lifecycle management.
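The distinction between additive changes and structural changes can be sketched as a comparison of two field collections. `classify_change` is a hypothetical helper; treating removed fields as requiring a rebuild is our assumption, because the text above only names changes to existing fields.

```python
def classify_change(current_fields, proposed_fields):
    """Separate additive schema changes from ones that call for a rebuild."""
    current = {f["name"]: f for f in current_fields}
    proposed = {f["name"]: f for f in proposed_fields}
    added = sorted(set(proposed) - set(current))
    removed = sorted(set(current) - set(proposed))  # assumption: needs a rebuild
    changed = sorted(n for n in current
                     if n in proposed and current[n] != proposed[n])
    return {"added": added, "needs_rebuild": removed + changed}


current = [
    {"name": "id", "type": "Edm.String", "key": True},
    {"name": "content_vector", "type": "Collection(Edm.Single)", "dimensions": 1536},
]

# "Versioning" a field: the new field coexists with the old one.
versioned = current + [
    {"name": "content_vector_v2", "type": "Collection(Edm.Single)", "dimensions": 3072}
]

# Changing an existing field in place.
in_place = [current[0], dict(current[1], dimensions=3072)]
```

Here `classify_change(current, versioned)` reports only an added field, while `classify_change(current, in_place)` flags `content_vector` as needing a rebuild.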

Endpoint connection

All vector indexing and query requests target an index. Endpoints are usually one of the following:

| Endpoint | Connection and access control |
|---|---|
| <your-service>.search.windows.net/indexes | Targets the indexes collection. Used when creating, listing, or deleting an index. Admin rights are required for these operations, available through admin API keys or a Search Contributor role. |
| <your-service>.search.windows.net/indexes/<your-index>/docs | Targets the documents collection of a single index. Used when querying an index or data refresh. For queries, read rights are sufficient, and available through query API keys or a data reader role. For data refresh, admin rights are required. |
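The two endpoints in the table can be sketched with the standard library as follows. The service name, index name, key placeholders, and API version are assumptions, and the requests are only constructed, not sent.

```python
import json
import urllib.request

API_VERSION = "2024-07-01"  # assumption: substitute the version you target


def build_request(service, path, api_key, body=None, method="GET"):
    """Construct (but don't send) a request against a search service endpoint."""
    url = f"https://{service}.search.windows.net/{path}?api-version={API_VERSION}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=data, headers=headers, method=method)


# Indexes collection: listing indexes requires an admin key.
list_indexes = build_request("my-service", "indexes", "<admin-key>")

# Documents collection: a query key is sufficient for searching.
query = build_request(
    "my-service", "indexes/my-index/docs/search", "<query-key>",
    body={"search": "*", "top": 3}, method="POST",
)

# urllib.request.urlopen(query) would send the request; it's omitted here.
```

If you use role assignments instead of keys, the `api-key` header would be replaced by an `Authorization: Bearer <token>` header obtained through Microsoft Entra ID.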

How to connect to Azure AI Search

Make sure you have permissions or an API access key. Unless you're querying an existing index, you need admin rights or a contributor role assignment to manage and view content on a search service.

Start with the Azure portal. The person who created the search service can view and manage the search service, including granting access to others through the Access control (IAM) page.

Move on to other clients for programmatic access. We recommend the quickstarts and samples for first steps.

Secure access to vector data

Azure AI Search implements data encryption, private connections for no-internet scenarios, and role assignments for secure access through Microsoft Entra ID. The full range of enterprise security features is outlined in Security in Azure AI Search.

Manage vector stores

Azure provides a monitoring platform that includes diagnostic logging and alerting. We recommend the following best practices: