Azure Mixed Reality cloud services overview

Unlock what every human is an expert at—the three-dimensional, physical world around us—with Azure mixed reality services. Help people create, learn, and collaborate more effectively by capturing and surfacing digital information within the context of their work and world. Bring 3D to mobile devices, headsets, and other untethered devices. Using Azure, help ensure that your most sensitive information is protected.

Mixed Reality services

Mixed Reality cloud services like Azure Remote Rendering and Azure Spatial Anchors help developers build compelling immersive experiences on a variety of platforms. These services allow you to integrate spatial awareness into your projects when you're making applications for 3D training, predictive equipment maintenance, and design review, all in the context of your users’ environments.

Note

- Azure Spatial Anchors (ASA) will be retired on November 20, 2024. Learn more.

- Azure Remote Rendering (ARR) will be retired on September 30, 2025. Learn more.

Azure Remote Rendering

Azure Remote Rendering, or ARR, is a service that lets you render highly complex 3D models in real time and stream them to a device. ARR is now generally available and can be added to your Unity or Native C++ projects targeting HoloLens 2 or Windows desktop PC.

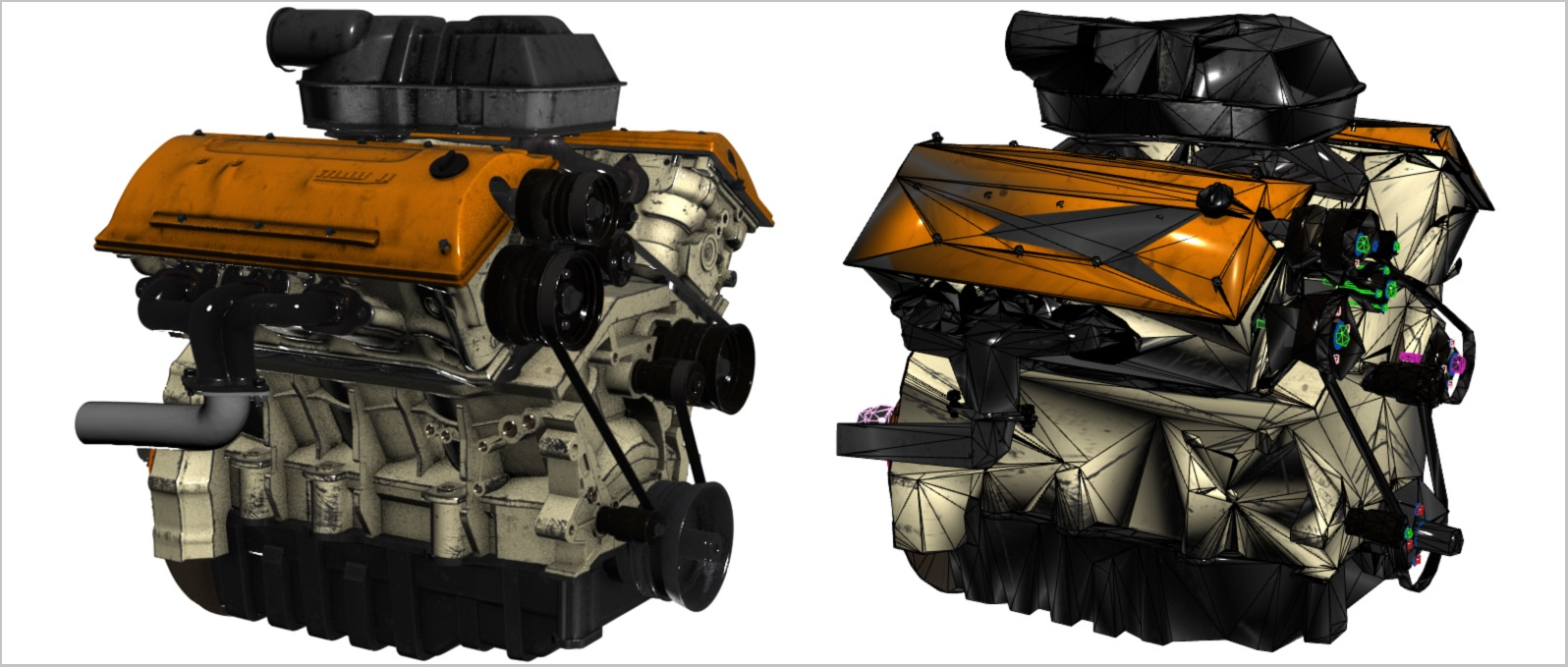

ARR is an essential component of any Mixed Reality application that runs on an untethered device, because these devices have limited rendering power. In scenarios where every detail matters, such as industrial plant management, design review for assets like truck engines, and pre-operative surgery planning, 3D visualization brings that detail to life. It's what helps designers, engineers, doctors, and students better understand complex information and make the right call. To run on an untethered device, a complex model usually has to be simplified first. Take the side-by-side engine model comparison as an example: the high-fidelity model on the left has over 18 million triangles, while the reduced model on the right has only around 200,000. This simplification can result in a loss of important detail that's needed in key business and design decisions.
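To put the two triangle counts in perspective, the short calculation below works out how large the reduction is. It is only arithmetic on the figures quoted above (18 million and roughly 200,000 triangles), so the result is approximate; the function name `reduction_stats` is made up for this illustration.

```python
def reduction_stats(full_triangles: int, reduced_triangles: int) -> tuple[float, float]:
    """Return (reduction factor, percentage of triangles removed)."""
    factor = full_triangles / reduced_triangles
    removed_pct = 100.0 * (1 - reduced_triangles / full_triangles)
    return factor, removed_pct


# Figures quoted in the comparison above (both counts are approximate).
factor, removed_pct = reduction_stats(18_000_000, 200_000)
print(f"about {factor:.0f}x fewer triangles, {removed_pct:.1f}% removed")
# prints "about 90x fewer triangles, 98.9% removed"
```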

ARR solves this problem by moving the rendering workload to high-end GPUs in the cloud. A cloud-hosted graphics engine renders the image, encodes it as a video stream, and streams it directly to the target device.

- For complex models that are too much for one high-end GPU to handle, ARR distributes the workload to multiple GPUs and merges the result into a single image, making the process entirely transparent to the user.

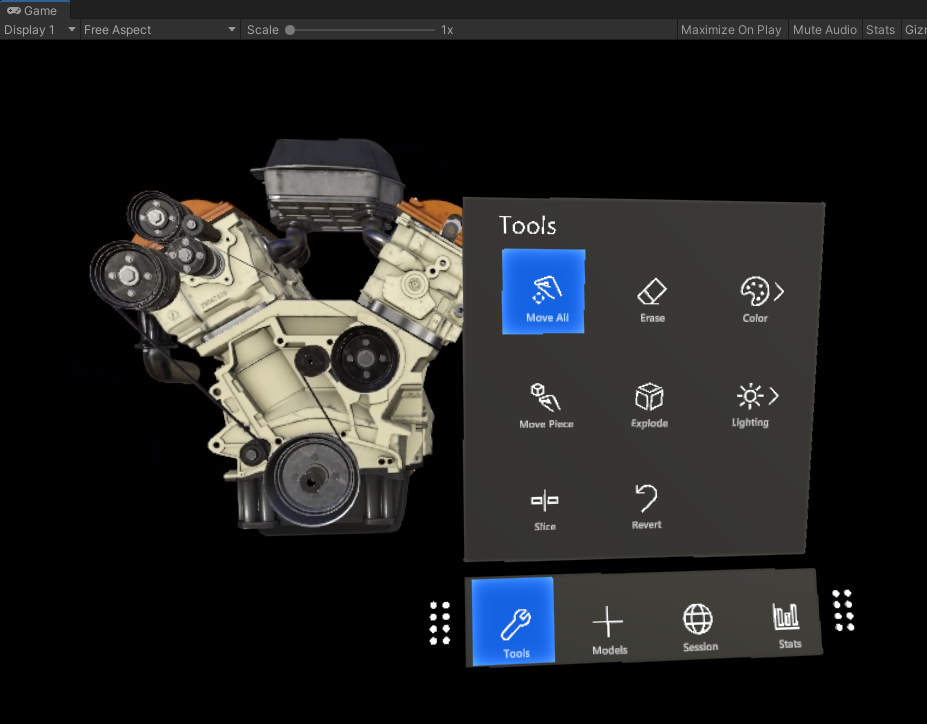

As a bonus, ARR doesn't restrict what kind of user interface you can use in your app. At the end of a frame, your locally rendered content is automatically combined with the remote image.
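One common way to combine two rendered images is a per-pixel depth test: at each pixel, keep whichever source is closer to the camera. The sketch below illustrates that idea in plain Python. It is an assumption about the general technique, not a description of how ARR is implemented internally, and the `Pixel` type and `composite` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pixel:
    color: str    # placeholder for an RGB value
    depth: float  # distance from the camera; smaller means closer


def composite(local: list[list[Pixel]], remote: list[list[Pixel]]) -> list[list[str]]:
    """Merge two same-sized frames, keeping the closer pixel at each position."""
    if len(local) != len(remote) or any(len(a) != len(b) for a, b in zip(local, remote)):
        raise ValueError("frames must have identical dimensions")
    return [
        [lp.color if lp.depth <= rp.depth else rp.color for lp, rp in zip(lrow, rrow)]
        for lrow, rrow in zip(local, remote)
    ]


FAR = float("inf")  # depth used where a frame has nothing to draw

# A 1x3 frame. Local content is in front of the model at pixel 0,
# absent at pixel 1, and behind the model at pixel 2.
local_frame = [[Pixel("ui", 1.0), Pixel("none", FAR), Pixel("ui", 5.0)]]
remote_frame = [[Pixel("model", 2.0), Pixel("model", 2.0), Pixel("model", 2.0)]]

print(composite(local_frame, remote_frame))  # [['ui', 'model', 'model']]
```

Because the test runs per pixel, a local menu can sit in front of a remotely rendered model in one part of the frame and be hidden behind it in another.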

Azure Spatial Anchors

Azure Spatial Anchors, or ASA, is a cross-platform service that allows you to build spatially aware mixed reality applications. With Azure Spatial Anchors, you can map, persist, and share holographic content across multiple devices at real-world scale. ASA is now in public preview for you to try out in your apps.

Azure Spatial Anchors is a uniquely tailored solution for common use cases in Mixed Reality, including:

- Way-finding: Where two or more spatial anchors could be connected to create a task list or points of interest a user must interact with.

- Multi-user experiences: Where users could pass moves back and forth by interacting with objects in the same virtual space.

- Persisting virtual content in the real world: Where users could place virtual objects in the real world that are viewable from other supported devices.
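The way-finding case can be pictured as a graph in which each spatial anchor is a node and each connection between anchors is an edge. The sketch below finds a route between two anchors with a breadth-first search. It does not call the Azure Spatial Anchors SDK; the anchor names, the `connections` dictionary, and the `find_route` function are all hypothetical.

```python
from collections import deque
from typing import Optional


def find_route(connections: dict[str, list[str]], start: str, goal: str) -> Optional[list[str]]:
    """Return the shortest chain of connected anchors from start to goal, or None."""
    previous: dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk back through the recorded predecessors to rebuild the route.
            route = []
            step: Optional[str] = current
            while step is not None:
                route.append(step)
                step = previous[step]
            return route[::-1]
        for neighbor in connections.get(current, []):
            if neighbor not in previous:
                previous[neighbor] = current
                queue.append(neighbor)
    return None


# Hypothetical anchors placed around a building and how they are linked.
connections = {
    "lobby": ["hallway"],
    "hallway": ["lobby", "lab", "storage"],
    "lab": ["hallway", "machine"],
    "storage": ["hallway"],
    "machine": ["lab"],
}

print(find_route(connections, "lobby", "machine"))
# ['lobby', 'hallway', 'lab', 'machine']
```

An app could then guide the user from one anchor on the route to the next, which matches the "points of interest" idea described in the way-finding item.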

You can develop with the service in a range of environments and deploy to a wide variety of devices and platforms. The supported combinations are:

- Unity for HoloLens

- Unity for iOS

- Unity for Android

- Native iOS

- Native Android

- C++/WinRT and DirectX for HoloLens

- Xamarin for iOS

- Xamarin for Android

Cognitive Services

Speech

Discover how Speech enables the integration of speech processing capabilities into any app or service. Convert spoken language into text or produce natural-sounding speech from text using standard (or customizable) voice fonts. Try any service free and quickly build speech-enabled apps and services.

Vision

Recognize, identify, caption, index, and moderate your pictures, videos, and digital ink content. Learn how Vision makes it possible for apps and services to accurately identify and analyze content within images, videos, and digital ink.

Standalone Unity services

The standalone services listed below aren't specific to Mixed Reality, but can be helpful in a wide range of development contexts. If you're developing in Unity, each of these services can be integrated into your new or existing projects.

Device support

| Azure Cloud Service | HoloLens 1st Gen | Immersive headsets |
| --- | --- | --- |
| Language translation | ✔️ | ✔️ |
| Computer vision | ✔️ | ✔️ |
| Custom vision | ✔️ | ✔️ |
| Cross-device notifications | ✔️ | ✔️ |
| Face recognition | ✔️ | ✔️ |
| Functions and storage | ✔️ | ✔️ |
| Streaming video | ❌ | ✔️ |
| Machine learning | ✔️ | ✔️ |
| Application insights | ✔️ | ✔️ |
| Object detection | ✔️ | ✔️ |
| Microsoft Graph | ✔️ | ✔️ |
| Bot integration | ✔️ | ✔️ |

See also

- Azure Spatial Anchor tutorials for HoloLens 2 - 1 of 3 Getting started with Azure Spatial Anchors

- Azure Speech Services tutorials for HoloLens 2 - 1 of 4 Integrating and using speech recognition and transcription