Hi @Clifford Gentiles ,

Welcome to Microsoft Q&A platform and thanks for posting your question here.

Thank you for responding on additional details required. In the requirement , there are few things which we need to modify in order to make it feasible to achieve the same.

1. The input data is combination of two JSONs , however the two jsons are not enclosed within a JSON. In order to treat this as a JSON file, we need to convert the two JSON into nested JSON :

{"row1":{"array1":["value1a","value1b"],"object2":"value2","object3":"value3","object4":"value4","object5":"value5"}, "row2":{"array6":["value6a","value6b"],"object7":"value7","object8":"value8","object9":"value9","object10":"value10"}}

2. The expected output is again a combination of 4 JSONs . But if you check in any JSON validator it will throw error as it is not enclosed in a key value pair structure. So, we should try to transform the data to a valid JSON format. Please let me know if this output format is good to meet the requirement or not:

{"json":{"json1":{"update":{"_id":"value2_value3","_index":1},"docJSON":{"doc":{"array1":["value1a","value1b"],"object2":"value2","object3":"value3","object4":"value4","object5":"value5"},"doc_as_upsert":"true"}},"json2":{"json2":{"update":{"_id":"value7_value8","_index":1},"docJSON":{"doc":{"array6":["value6a","value6b"],"object7":"value7","object8":"value8","object9":"value9","object10":"value10"},"doc_as_upsert":"true"}}}}}

Kindly follow the below steps in mapping dataflow:

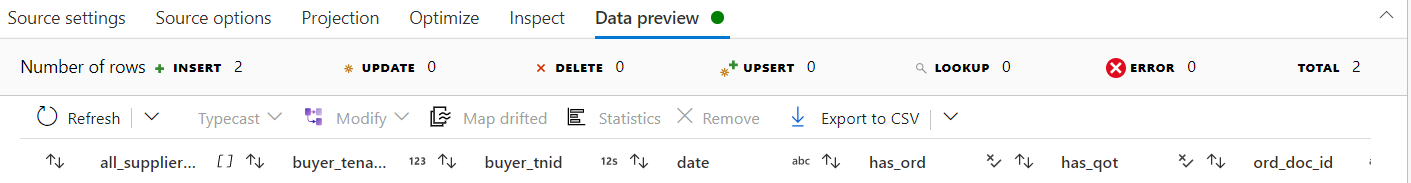

1. Add source transformation pointing the data to the input JSON datasource. Select 'Array of document' in the document form in Source options tab.

2. Add Derived column transformation to create a column called JSON with following expression: @(json1=@(update=@({_id}=row1.object2 + '_' + row1.object3, {_index}=1), docJSON=@(doc=row1, doc_as_upsert='true')), json2=@(json2=@(update=@({_id}=row2.object7 + '_' + row2.object8, {_index}=1), docJSON=@(doc=row2, doc_as_upsert='true'))))

3. Add select transformation to deselect row1 and row2 and only keep JSON column

4. Add sink transformation and create JSON dataset and provide 'Output to single file' in 'File name option' . Provide file name in 'Output to single file' textbox. Set single partition in optimize tab.

5. Create a new ADF pipeline and call the dataflow and execute it.

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators