Containers – The Next Small Thing

While application systems are always getting larger, the trend is for them to be made from smaller and smaller components. To deploy and operate microservices and lightweight application components, development and DevOps teams are increasingly considering using containers, an operating system virtualisation technology.

The trend of creating applications from a larger number of smaller components has meant some of them have become so small they no longer need a whole server to themselves – even a very small one. However, it can be time-consuming to code services so that multiple instances of themselves can co-exist on a shared server, especially when a large number of instances may only be required for a short period of time.

Container technology has emerged as a solution to this challenge. It allows a single operating system to run multiple instances of an application service in a way that makes each of them think it has a server dedicated to itself. By having multiple instances of a service share a single server and operating system, the cost of running them decreases, as does the effort to deploy them. If this concept sounds similar to server virtualisation and how virtual machines work, that’s because it is. This raises the question: how do containers compare to virtual machines, and when would you use them?

The Goal of Virtual Machines

Server virtualisation allows a single physical server to host multiple virtual machines. Each virtual machine has its own instance of an operating system, can have a unique combination of software installed and at an infrastructure level is unaware of the other virtual machines running around it. The rationale behind server virtualisation is that no individual virtual machine needs to use all the processor and memory resources in a physical server so rather than leave resources unused, hypervisor software allows multiple virtual machines to run concurrently and collectively use all of a physical host server’s resources.

The cost savings from server virtualisation can be significant so it’s no surprise that it became popular when organisations wanted to replace large numbers of small, old and minimally used servers with a smaller number of more heavily used servers. As virtual machine technology evolved, it also began making it easier for system administrators to provide high availability and deploy new virtual machines.

The Changing Nature of Software Development

Reduced costs and increased flexibility have made virtual machines so popular that every cloud service now uses them and so do most on-premises workloads. It’s very rare today for anyone to deploy a physical server to do anything but host more virtual machines.

Yet while virtual machines were becoming the new norm, approaches to software development were changing. Development patterns no longer only advocate deploying servers that host a collection of large application services; they increasingly promote deploying a larger number of smaller, independent services, such as microservices. Each of these services can be tiny, perhaps just a few megabytes of code that uses only a few hundred megabytes of memory.

This microservices-based approach to software development brings a new challenge: efficiently managing scalability. While server virtualisation hypervisors allow virtual machines to be programmatically deployed and destroyed, using one virtual machine to host one small instance of a microservice is an expensive model even if it’s an ideal one. Just as one physical server was no longer the right way to host a small server workload, using dedicated virtual machines is becoming an inefficient way to scale out app-tiers to cope with ad-hoc increases in workloads.

Using Containers to Virtualise the Operating System

Conceptually, using ten virtual machines to host ten instances of an identical application service can be the right approach. It makes expanding an application tier easy, as all that needs to happen is for a new virtual machine to be deployed and placed in a network load balancer’s pool. By letting the infrastructure handle scalability, developers can focus on creating application functionality rather than on how their services manage the coexistence of multiple instances of themselves. The most significant problem with this approach, though, is cost.

To run, each virtual machine must have its own locally installed operating system, such as Windows or Linux. The operating system uses several gigabytes of storage and memory, and a few processor cycles, just to present itself as being available for applications to use. Deploying 100 instances of a microservice may easily mean 100GB of memory and 1.5TB of storage are required before any application software even starts!
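The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration: the per-operating-system overhead of 1GB of memory and 15GB of storage is an assumption obtained by dividing the totals above by 100, the function name `os_overhead` is hypothetical, and the sketch ignores any overhead added by the container platform itself.

```python
# Assumed per-OS overhead, derived from the article's figures
# (100 GB memory and 1.5 TB storage across 100 virtual machines).
OS_MEMORY_GB = 1.0
OS_STORAGE_GB = 15.0


def os_overhead(os_copies: int) -> tuple[float, float]:
    """Return (memory_gb, storage_gb) consumed by operating systems alone."""
    return os_copies * OS_MEMORY_GB, os_copies * OS_STORAGE_GB


instances = 100

# One virtual machine per instance: every instance carries its own OS.
vm_memory, vm_storage = os_overhead(instances)

# Containers on a single shared host: one OS regardless of instance count.
ct_memory, ct_storage = os_overhead(1)

print(f"VMs:        {vm_memory:.0f} GB memory, {vm_storage / 1000:.1f} TB storage")
print(f"Containers: {ct_memory:.0f} GB memory, {ct_storage / 1000:.3f} TB storage")
```

In this simplified model the operating system overhead grows linearly with the number of virtual machines, while for containers it stays fixed at the cost of the one shared operating system.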

To solve this problem of having many minimally used operating systems, container platform technologies allow a single server to share its operating system with multiple isolated instances of an application service.

The Role of a Container Platform

A container platform is a software management service that runs on top of a server’s operating system, regardless of whether that server is virtual or physical. Popular container platforms today include Docker, Kubernetes and Windows Server, and there is also the Azure Container Service provided in Microsoft’s public cloud.

These platforms share an operating system’s kernel with multiple isolated user spaces, where application services run. Each isolated user space sees the server’s resources as though they were its own and is unaware of anything else running on the server. To allow access to the outside world, each container is usually given its own network address.

Each container that runs on these platforms is a bundle of just enough software components to run a service. In the Windows world, this might be some Windows and custom libraries, and a JSON configuration file. Once created, a container is stored as an image in an image library, often known as a repository. A more familiar word for a container image to some might be a template.

After a container image has been created, the platform can be instructed to deploy any number of isolated and independent instances of it, either through manual commands or automated API calls. System administrators can also configure these platforms to ensure at least one instance of a container is always running or that additional instances are automatically deployed during busy periods.
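The scaling behaviour described above can be illustrated with a small, platform-independent sketch. It is not the API of Docker, Kubernetes or any other product: the function names, the requests-per-second load measure, and the default minimum and maximum values are all assumptions made for illustration.

```python
import math


def desired_instances(requests_per_second: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Return how many container instances should be running.

    The result never drops below min_instances (so at least one
    instance is always available) and never exceeds max_instances.
    """
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(needed, max_instances))


def reconcile(running: int, desired: int) -> str:
    """Describe the action a platform would take to reach the desired count."""
    if running < desired:
        return f"start {desired - running}"
    if running > desired:
        return f"stop {running - desired}"
    return "no change"


# Hypothetical busy period: 120 requests/s, each instance handles 50.
target = desired_instances(120, 50)
print(target, reconcile(running=1, desired=target))
```

A real platform would run a comparison like this continuously, measuring load and then starting or stopping container instances to close the gap between the running and desired counts.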

Differences Between Virtual Machines and Containers

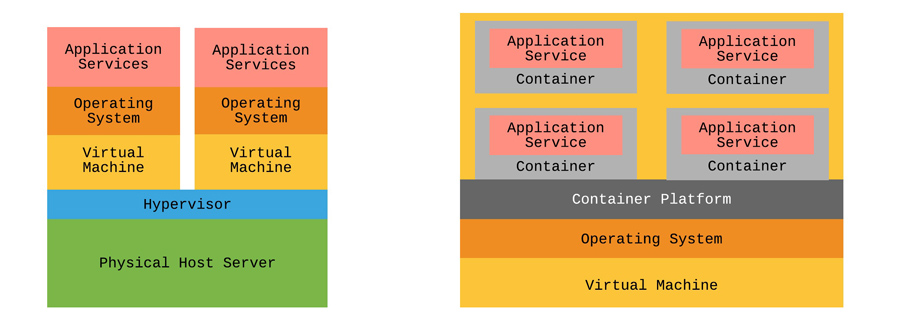

While virtual machines and containers were originally created to solve different problems, they’re both possible solutions to the challenge of efficiently scaling application platforms. The diagram above, which shows conceptually how they each work, introduces some of their fundamental differences and should start generating thoughts about which is the most suitable for different requirements.

Containers may reduce the number of operating systems deployed, but they significantly increase the impact of a single operating system becoming unavailable, whether intentionally or unintentionally. Conversely, using more virtual machines may increase resiliency, but an operating system’s footprint today can often far exceed that of the application software installed on it.
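The resiliency side of that trade-off can be put into rough numbers. The sketch below is a simplification under stated assumptions: instances are spread evenly across operating systems, exactly one operating system fails, and the function name `instances_lost` is hypothetical.

```python
import math


def instances_lost(total_instances: int, os_count: int) -> int:
    """Worst-case instances lost when one OS fails, with instances spread evenly."""
    return math.ceil(total_instances / os_count)


total = 100
print("One VM per instance:  ", instances_lost(total, os_count=100))
print("Four container hosts: ", instances_lost(total, os_count=4))
print("One container host:   ", instances_lost(total, os_count=1))
```

Spreading containers across several hosts sits between the two extremes, trading some of the footprint saving for a smaller impact when any one operating system becomes unavailable.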

Other Considerations

This article has introduced the concept of containers and compared them to virtual machines. It gives an opinion on the strengths and limitations of each, but there are other significant matters to consider when deciding which is the most appropriate. Container technologies like Docker provide very strong command line interfaces that help them integrate far more tightly into a DevOps workflow than most server virtualisation platforms could ever dream of. There are also public repositories of free container images available, which mean the time to deploy a new working software capability can be not much more than the time to download it. If we had to generalise, then it may be fair to say that containers are part of the developer’s domain whereas virtual machines are part of the system administrator’s.

Conclusions

Containers and their operating system virtualisation technology bring a new tool to the modern technology toolbox. They work well when multiple identical instances of lightweight software components need deploying, whereas virtual machines are a tested solution for deploying a single resource-intensive service or deploying several smaller services together. The next few years will show which option is most needed – or perhaps most preferred.

Gavin Payne is an independent consultant who helps businesses grow faster by evolving their analytics, data and cloud capabilities. He is a Microsoft Certified Architect and Microsoft Certified Master, and a regular speaker at community and industry events. https://www.gavinpayne.co.uk

Comments

- Anonymous

September 28, 2017

Thanks for that, a nice clear article that suggests you know what you're talking about, which isn't always the case with technical articles.

Oh and I had one passing thought, is something like Sandboxie on Windows (which BTW seems to be a somewhat of a shadow of its former self) a bit like a half-way house to a container system and even more lightweight? How much do containers add on top of that and how many extra limitations appear? (If you don't know it Sandboxie redirects file and registry writes to a local repository, so isolating programs that think they are just running under the local o/s.)

- Anonymous

September 29, 2017

You should also include Azure Service Fabric as the container platform built and used by Microsoft. It is now generally available on both Windows and Linux and as Mark Russinovich showed in his recent Ignite talk https://myignite.microsoft.com/sessions/54962?source=sessions - @ 42 mins can deploy millions of containers in less than 2 minutes.