Hello @Niels Deckers and welcome to Microsoft Q&A.

While Data Factory is good at moving large amounts of data, it is not necessarily the best tool for making many very small calls, as you have found.

Out of curiosity, why have you chosen to run this in Data Factory, when you had a Python script to do the same?

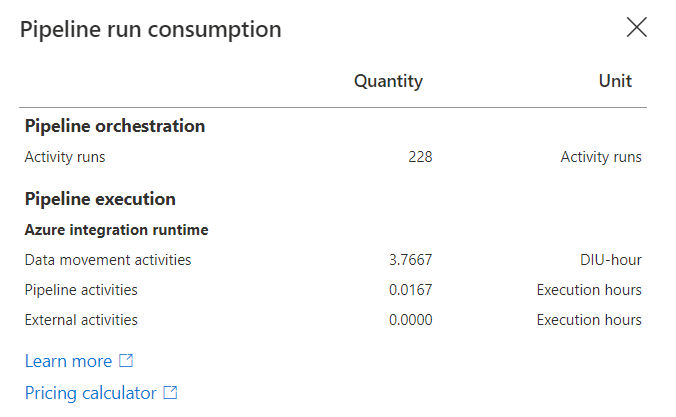

I think we can build a hybrid solution, part Data Factory and part Python. The Custom Activity runs your own code on Azure Batch compute. It can run Python, among other things, and can be given Datasets, or perhaps the output of the Lookup Activity. I'm not 100% certain, but I think this single activity run would have less overhead than spinning up 228 separate activity runs.
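To illustrate the idea, here is a rough sketch of the Python side, not a tested solution. It assumes the pipeline passes the Lookup Activity output to the Custom Activity through an extended property, for example a hypothetical property named `items` set to `@activity('Lookup1').output.value`. Data Factory writes an `activity.json` file into the Custom Activity's working directory, and the extended properties should appear under `typeProperties.extendedProperties` there. `process_item` is a hypothetical placeholder for whatever your existing Python script does per item.

```python
import json
from pathlib import Path


def load_items(work_dir: str = ".", key: str = "items") -> list:
    """Read the list passed to the Custom Activity via extendedProperties.

    Assumption: the pipeline sets extendedProperties[key] (hypothetical name
    "items") to the Lookup Activity output.
    """
    path = Path(work_dir, "activity.json")
    if not path.exists():
        # Outside Azure Batch (e.g. local testing) there is no activity.json.
        return []
    activity = json.loads(path.read_text())
    props = activity.get("typeProperties", {}).get("extendedProperties", {})
    items = props.get(key, [])
    # Assumption: if the value arrives as a JSON string, decode it to a list.
    if isinstance(items, str):
        items = json.loads(items)
    return items


def process_item(item: dict) -> str:
    # Hypothetical placeholder for the per-item work your original script did.
    return f"processed {item.get('id')}"


def main(work_dir: str = ".") -> list:
    # One process on one Batch node loops over every item,
    # instead of one Data Factory activity run per item.
    return [process_item(item) for item in load_items(work_dir)]


if __name__ == "__main__":
    for line in main():
        print(line)
```

The Custom Activity's command would then be something like `python script.py`, so all items are handled in one activity run rather than one run per item.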

Does this appeal to you?
