Azure Data Factory - Data Wrangling with Data Flow - Array bug.

I have a tricky firewall log file to wrangle using Azure data factory. The file consists of 4 tab-separated columns. Date and Time, Source, IP and Data.

The Data column consists of key-value pairs separated with equal signs and text delimited by double-quotes. The challenge is that the data column is inconsistent and contains any number of key-value pair combinations.

Three lines from the source file.

2022-02-13 00:59:59 Local7.Notice 192.168.40.1 date=2022-02-13 time=00:59:59 devname="NoHouse" devid="FG100ETK18006624" eventtime=1644706798637882880 tz="+0200" logid="0000000013" type="traffic" subtype="forward" level="notice" vd="root" srcip=192.168.41.200 srcport=58492 srcintf="port1" srcintfrole="undefined" dstip=216.239.36.55 dstport=443 dstintf="wan1" dstintfrole="undefined" srccountry="Reserved" dstcountry="United States" sessionid=137088638 proto=6 action="client-rst" policyid=5 policytype="policy" poluuid="c2a960c4-ac1b-51e6-8011-6f00cb1fddf2" policyname="All LAN over WAN1" service="HTTPS" trandisp="snat" transip=196.213.203.122 transport=58492 appcat="unknown" applist="block-p2p" duration=6 sentbyte=3222 rcvdbyte=1635 sentpkt=14 rcvdpkt=8 srchwvendor="Microsoft" devtype="Computer" osname="Debian" mastersrcmac="00:15:5d:29:b4:06" srcmac="00:15:5d:29:b4:06" srcserver=0

2022-02-13 00:59:59 Local7.Notice 192.168.40.1 date=2022-02-13 time=00:59:59 devname="NoHouse" devid="FG100ETK18006624" eventtime=1644706798657887422 tz="+0200" logid="0000000013" type="traffic" subtype="forward" level="notice" vd="root" srcip=192.168.41.200 srcport=58496 srcintf="port1" srcintfrole="undefined" dstip=216.239.36.55 dstport=443 dstintf="wan1" dstintfrole="undefined" srccountry="Reserved" dstcountry="United States" sessionid=137088640 proto=6 action="client-rst" policyid=5 policytype="policy" poluuid="c2a960c4-ac1b-51e6-8011-6f00cb1fddf2" policyname="All LAN over WAN1" service="HTTPS" trandisp="snat" transip=196.213.203.122 transport=58496 appcat="unknown" applist="block-p2p" duration=6 sentbyte=3410 rcvdbyte=1791 sentpkt=19 rcvdpkt=11 srchwvendor="Microsoft" devtype="Computer" osname="Debian" mastersrcmac="00:15:5d:29:b4:06" srcmac="00:15:5d:29:b4:06" srcserver=0

2022-02-13 00:59:59 Local7.Notice 192.168.40.1 date=2022-02-13 time=00:59:59 devname="NoHouse" devid="FG100ETK18006624" eventtime=1644706798670487613 tz="+0200" logid="0001000014" type="traffic" subtype="local" level="notice" vd="root" srcip=192.168.41.180 srcname="GKHYPERV01" srcport=138 srcintf="port1" srcintfrole="undefined" dstip=192.168.41.255 dstport=138 dstintf="root" dstintfrole="undefined" srccountry="Reserved" dstcountry="Reserved" sessionid=137088708 proto=17 action="deny" policyid=0 policytype="local-in-policy" service="udp/138" trandisp="noop" app="netbios forward" duration=0 sentbyte=0 rcvdbyte=0 sentpkt=0 rcvdpkt=0 appcat="unscanned" srchwvendor="Intel" osname="Windows" srcswversion="10 / 2016" mastersrcmac="a0:36:9f:9b:de:b6" srcmac="a0:36:9f:9b:de:b6" srcserver=0

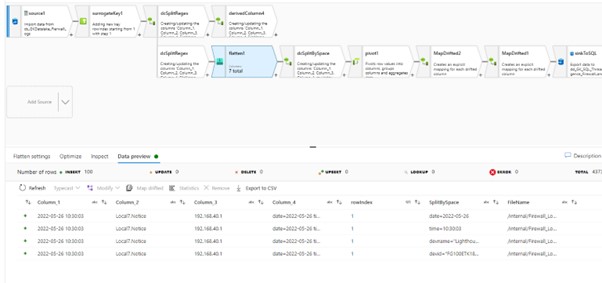

My strategy for wrangling this data set is as follows.

- Source the data file from azure data Lake Using a tab-delimited csv data set. This successfully delivers the source data in four columns to my data flow.

- Add a surrogate key transformation to add an incrementing key value to each row of data.

- Add a derived column, with the following function.

regexSplit(Column_4, '\s(?=(?:[^"](["])[^"]\1)[^"]$)')

This splits the data by spaces ignoring the spaces between semicolons.

- Then the unfold creates a new record for each item in the array while preserving the other column values.

unfold(SplitBySpace)

- Then split the key-value pairs into their represented value and key by the Delimiter equals.

- The final step would then be to unpivot the data back into columns with the respected values grouped by the surrogate key added in step 2.

This all sounds good but unfortunately step 5 fails with the following error. “Indexing is only allowed on the array and map types”.

The output after step 4.

The unfold function returns an array according to the inspect tab, see below. I would expect a string here!!

Now in step 5, I split by “=” with the expression split(unfoldSplitBySpace, '=') but this errors in the expression builder with the message “Split expect string type of argument”

Changing the expression to split(unfoldSplitBySpace1, '=') remove the error from the expression Builder.

BUT THEN the spark execution engines errors with “Indexing is only allowed on the array and map types”

The problem.

According to the Azure Data Factory UI, the output of the Unfold() function is an array type but when accessing the array elements or any other function the spark engine does not recognise the object as in array type.

Is this a bug in the execution or do I have a problem in my understanding of how the data factory and a spark engine understand arrays?

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is