Hello @Ashwin Chithran ,

Welcome to the Microsoft Q&A platform, and thanks for posting your query.

This can be achieved by using lookup, foreach, and copy activities.

Steps:

We need 3 datasets here.

1) Create Synapse analytics dataset for lookup activity (source server)

2) Create Synapse analytics dataset for copy source (source server)

3) Create Azure SQL dataset for copy sink (destination server)

Leave table as empty for Synapse dataset (for lookup activity)

Screenshot for analytics dataset for lookup activity:

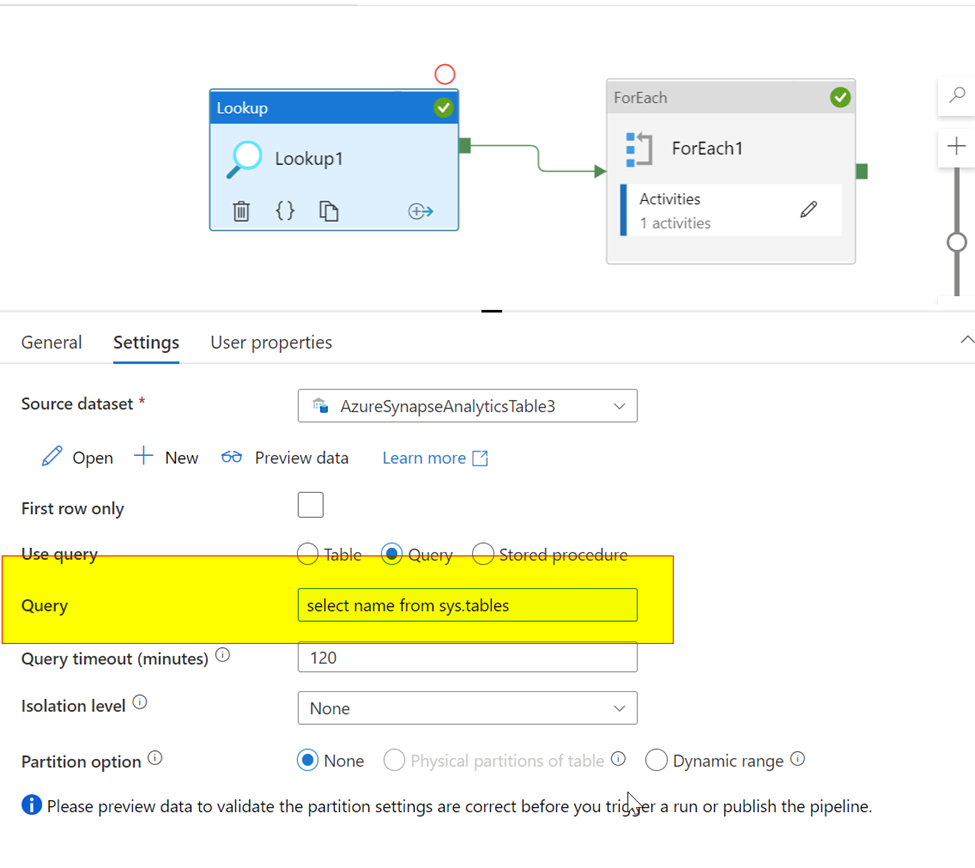

Lookup:

On the canvas drag the lookup activity.

Select query option and enter: select name from sys.tables

This query will give all the list of tables from your synapse database.

Foreach:

Drag the foreach and connect to lookup.

On the settings tab enter: @activity('Lookup1').output.value

Click the pencil icon on the activities and drag the copy activity. Connect the source dataset (synapse)

Copy source dataset:

On the source dataset, add a string parameter and dynamically map that parameter in the connection settings tab

Parametername: Inputtable_name

Table: @dataset().Inputtable_name

Copy data activity:

On the source, You will see the Parameter.

Then Add, @item().name

in the data set properties(we are taking the name from the lookup activity, which is our source table names)

On the sink:( In the copy activity)

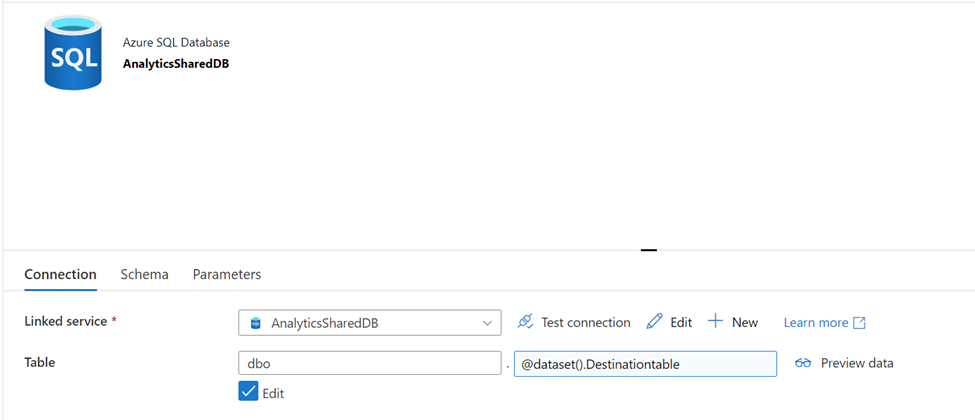

Sink dataset:

Similarly, add a string parameter and dynamically map that parameter in the connection settings tab on the sink dataset

Parametername: Destinationtable

Table: @dataset().Destinationtable

On the Sink, You will see the Parameter.

Add- @item().name

And select autocreate table option.

Run the pipeline.

I have only two tables in the source, that’s the reason you are seeing two copy activities in the monitor section.

I hope this helps. Please let me know if you have any further questions.

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators