Hello @Boris Akselrud,

Thanks for the additional details.

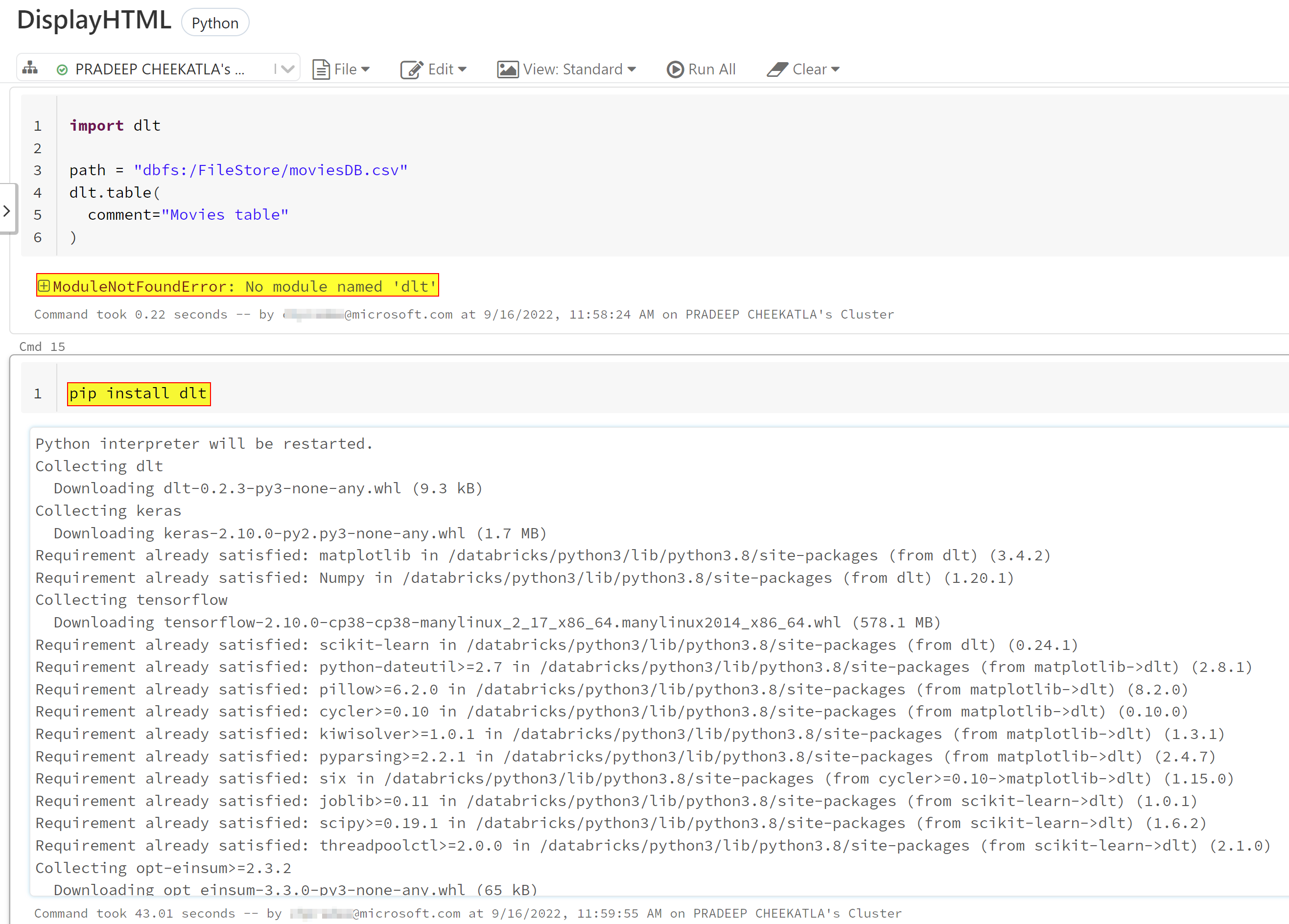

This is expected when you are running interactive notebooks.

Make sure to install `dlt` using the command `pip install dlt`.

For different methods to install packages in Azure Databricks, refer to How to install a library on a databricks cluster using some command in the notebook?
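If it helps, here is a minimal sketch for checking from a notebook cell whether the `dlt` module can be found before you try to import it. The helper name `module_available` is only illustrative and not part of any Databricks API; it uses just the Python standard library. It only tells you whether a module with that name is visible to the current Python environment, nothing more.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if a top-level module called `name` can be found for import."""
    return importlib.util.find_spec(name) is not None


# Illustrative check for the `dlt` module mentioned above.
if module_available("dlt"):
    print("dlt is importable in this environment.")
else:
    # In a Databricks notebook, the install usually goes in its own cell:
    #   %pip install dlt
    print("dlt not found; install it first, e.g. with: pip install dlt")
```

If the check reports that the module is missing, run the install command in a separate cell and then re-run the check.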

Hope this helps. Please let us know if you have any further queries.
