Hello @Ricardo Cauduro,

Welcome to the MS Q&A platform.

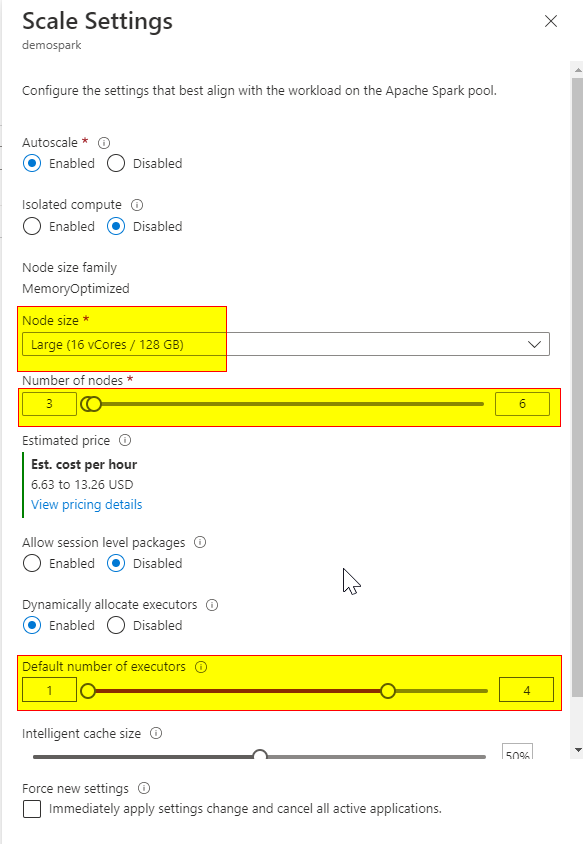

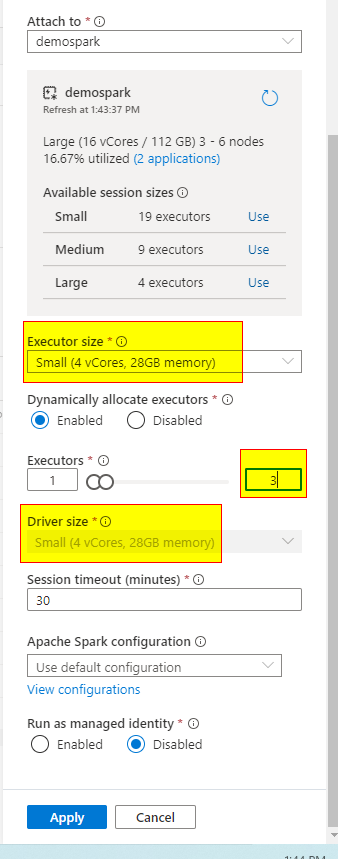

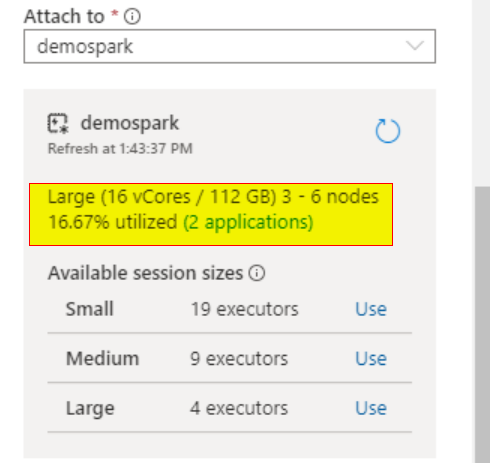

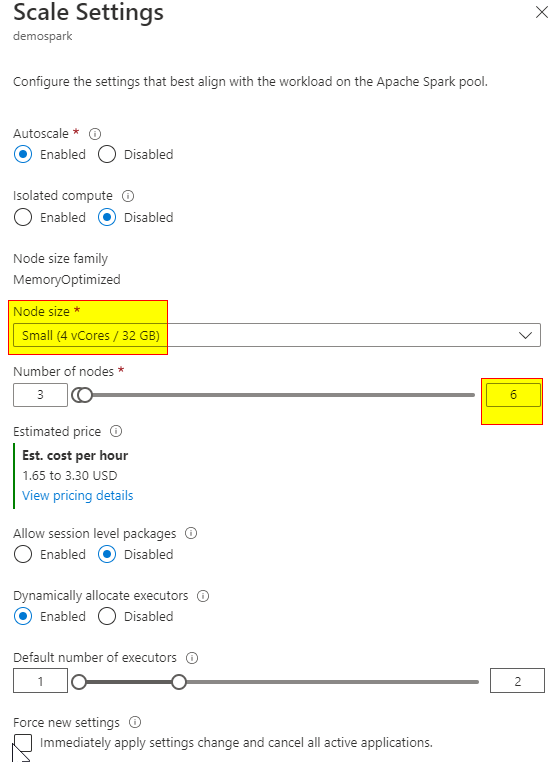

Sorry, but that understanding is not quite right. The number of vCores in a Spark pool is not a fixed default; it depends on the pool's node size and its number of nodes.

For example, if you choose the Small node size (4 vCores / 32 GB) with 6 nodes, the pool has 4 * 6 = 24 vCores in total.
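As a minimal sketch of that arithmetic (the function name `total_vcores` is purely illustrative and not part of any Synapse API), the pool total is just the per-node vCores multiplied by the node count:

```python
def total_vcores(vcores_per_node: int, node_count: int) -> int:
    """Total vCores in a pool = vCores per node * number of nodes."""
    return vcores_per_node * node_count


# Small node size (4 vCores) with 6 nodes, as in the example above.
print(total_vcores(4, 6))  # 24

# Small node size (4 vCores) with 5 nodes.
print(total_vcores(4, 5))  # 20
```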

Regarding your statement: "I thought that each application needed 12 vcores, so for 4 applications I´ll needed 48 vcores, then 50 vcores of quota should be enough"

The number of vCores a single notebook (application) needs depends on its code. Some notebooks may use 12 vCores and others 20; it depends on the workload.

When I had a Small pool (4 vCores per node) with 5 nodes, one of my notebooks took all 20 vCores. When I tried to run a second notebook, I got the error below because the first notebook session had already exhausted the vCores. I had to close that session before I could run the second notebook.

AVAILABLE_COMPUTE_CAPACITY_EXCEEDED: Livy session has failed. Session state: Error. Error code: AVAILABLE_COMPUTE_CAPACITY_EXCEEDED. Your job requested 20 vcores. However, the pool only has 0 vcores available out of quota of 20 vcores. Try ending the running job(s) in the pool, reducing the numbers of vcores requested, increasing the pool maximum size or using another pool. Source: User.
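The check behind this error can be approximated as follows. This is only a rough sketch of the arithmetic, not how Synapse actually implements it; the helper names `available_vcores` and `can_start` are hypothetical:

```python
def available_vcores(pool_quota: int, running_requests: list[int]) -> int:
    """vCores left in the pool after subtracting what running sessions hold."""
    return pool_quota - sum(running_requests)


def can_start(requested: int, pool_quota: int, running_requests: list[int]) -> bool:
    """A new session fits only if its request does not exceed what is left."""
    return requested <= available_vcores(pool_quota, running_requests)


pool_quota = 4 * 5   # Small nodes (4 vCores each) * 5 nodes = 20 vCores
running = [20]       # the first notebook session holds all 20 vCores

print(available_vcores(pool_quota, running))  # 0
print(can_start(20, pool_quota, running))     # False
print(can_start(20, pool_quota, []))          # True, once the first session is closed
```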

Here is one more example from the documentation (expressed in nodes rather than vCores):

- You create a Spark pool called SP1; it has a fixed cluster size of 20 nodes.

- You submit a notebook job, J1, that uses 10 nodes; a Spark instance, SI1, is created to process the job.

- You now submit another job, J2, that uses 10 nodes. Because there is still capacity in the pool and the instance, J2 is processed by SI1.

- If J2 had asked for 11 nodes, there would not have been capacity in SP1 or SI1. In this case, if J2 comes from a notebook, then the job will be rejected; if J2 comes from a batch job, then it will be queued.
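The scenario above can be modelled with a small sketch. The `submit` function and its return strings are illustrative assumptions, not a Synapse API; it only mirrors the accept / reject / queue outcomes described in the list:

```python
def submit(pool_nodes: int, nodes_in_use: int, requested_nodes: int, source: str) -> str:
    """Return the outcome of submitting a job to a fixed-size pool.

    source is "notebook" or "batch" (hypothetical labels for this sketch).
    """
    if nodes_in_use + requested_nodes <= pool_nodes:
        return "accepted"
    # Not enough capacity: notebook jobs are rejected, batch jobs are queued.
    return "rejected" if source == "notebook" else "queued"


SP1_NODES = 20  # fixed cluster size of the pool SP1

print(submit(SP1_NODES, 0, 10, "notebook"))   # J1: accepted
print(submit(SP1_NODES, 10, 10, "notebook"))  # J2 with 10 nodes: accepted
print(submit(SP1_NODES, 10, 11, "notebook"))  # J2 with 11 nodes from a notebook: rejected
print(submit(SP1_NODES, 10, 11, "batch"))     # J2 with 11 nodes from a batch job: queued
```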

Reference document: https://learn.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-concepts

I hope this helps. Please let me know if you have any further questions.
