External table issue

Hello Team,

I am using the df.write command, and the table is created successfully.

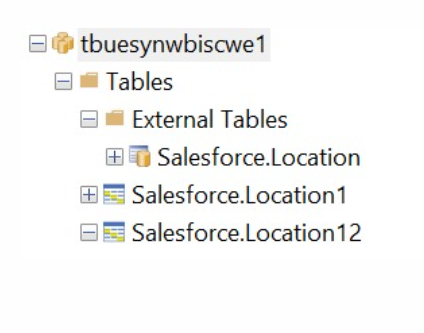

As the screenshot below shows, the table was created in the Tables folder of the dedicated SQL pool, but I need it in the External Tables folder.

The script used is:

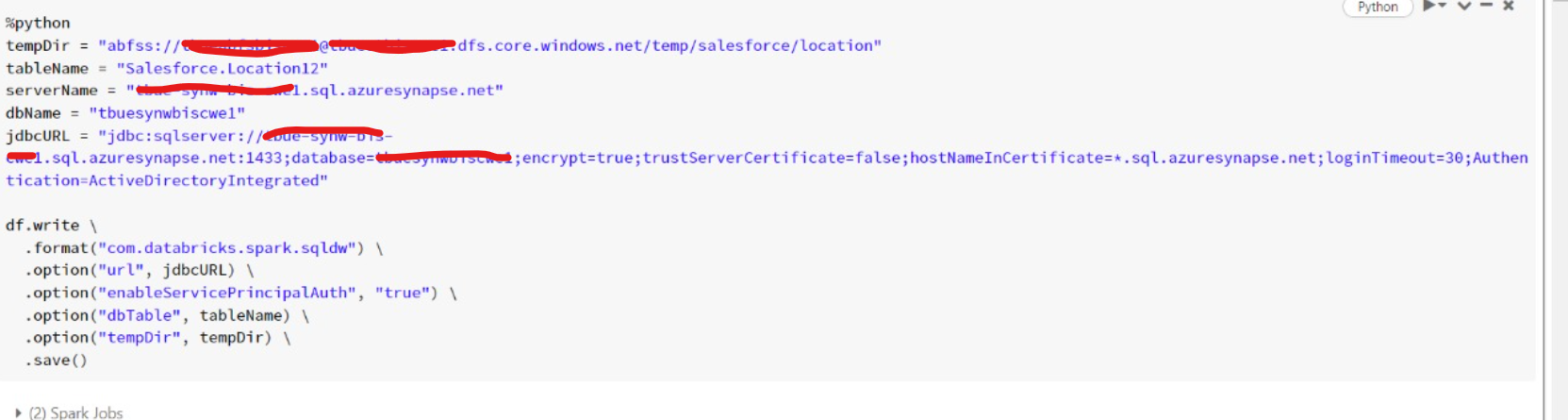

%python

tempDir = "abfss://*****@Storagename.dfs.core.windows.net/temp/salesforce/location"

tableName = "Salesforce.Location120"

serverName = "xyz.sql.azuresynapse.net"

dbName = "tbuesynwbiscwe1"

jdbcURL = "jdbc:sqlserver://xyz.sql.azuresynapse.net:1433;database=abc;encrypt=true;trustServerCertificate=true;hostNameInCertificate=.sql.azuresynapse.net;loginTimeout=30;Authentication=ActiveDirectoryIntegrated;external.table.purge=true;DataSource=tbueabfsbiscwe1_tbuestbiscwe1_dfs_core_windows_net;FileFormat=SynapseParquetFormat"

df.write \
    .format("com.databricks.spark.sqldw") \
    .option("url", jdbcURL) \
    .option("enableServicePrincipalAuth", "true") \
    .mode("overwrite") \
    .option("dbTable", tableName) \
    .option("tempDir", tempDir) \
    .save()
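To make the goal concrete, the result I am after is roughly what a `CREATE EXTERNAL TABLE` statement would produce in the dedicated SQL pool. The snippet below is only a sketch that builds such a statement as a string. The helper `external_table_ddl`, the column list, and the `LOCATION` path are hypothetical and used for illustration only; the data source and file format names are taken from the JDBC URL above, and I am assuming both already exist in the pool.

```python
def external_table_ddl(table_name, columns, location, data_source, file_format):
    """Build a CREATE EXTERNAL TABLE statement as a string (hypothetical helper)."""
    # Split "Schema.Table" into its two parts.
    schema, table = table_name.split(".", 1)
    # Render each (name, type) pair as a bracketed column definition.
    column_sql = ",\n    ".join(f"[{name}] {sql_type}" for name, sql_type in columns)
    return (
        f"CREATE EXTERNAL TABLE [{schema}].[{table}] (\n"
        f"    {column_sql}\n"
        ")\n"
        "WITH (\n"
        f"    LOCATION = '{location}',\n"
        f"    DATA_SOURCE = [{data_source}],\n"
        f"    FILE_FORMAT = [{file_format}]\n"
        ");"
    )


ddl = external_table_ddl(
    table_name="Salesforce.Location120",
    # Hypothetical columns; the real schema comes from the DataFrame.
    columns=[("Id", "NVARCHAR(18)"), ("Name", "NVARCHAR(255)")],
    # Hypothetical folder, relative to the external data source root.
    location="/salesforce/location/",
    # Names copied from the JDBC URL; assumed to exist in the pool.
    data_source="tbueabfsbiscwe1_tbuestbiscwe1_dfs_core_windows_net",
    file_format="SynapseParquetFormat",
)
print(ddl)
```

The snippet only prints the statement and does not run anything against the pool. A table created this way would point at files in the storage account instead of holding a copy of the data, which is the behaviour I expect from the External Tables folder.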

Regards

Rk
