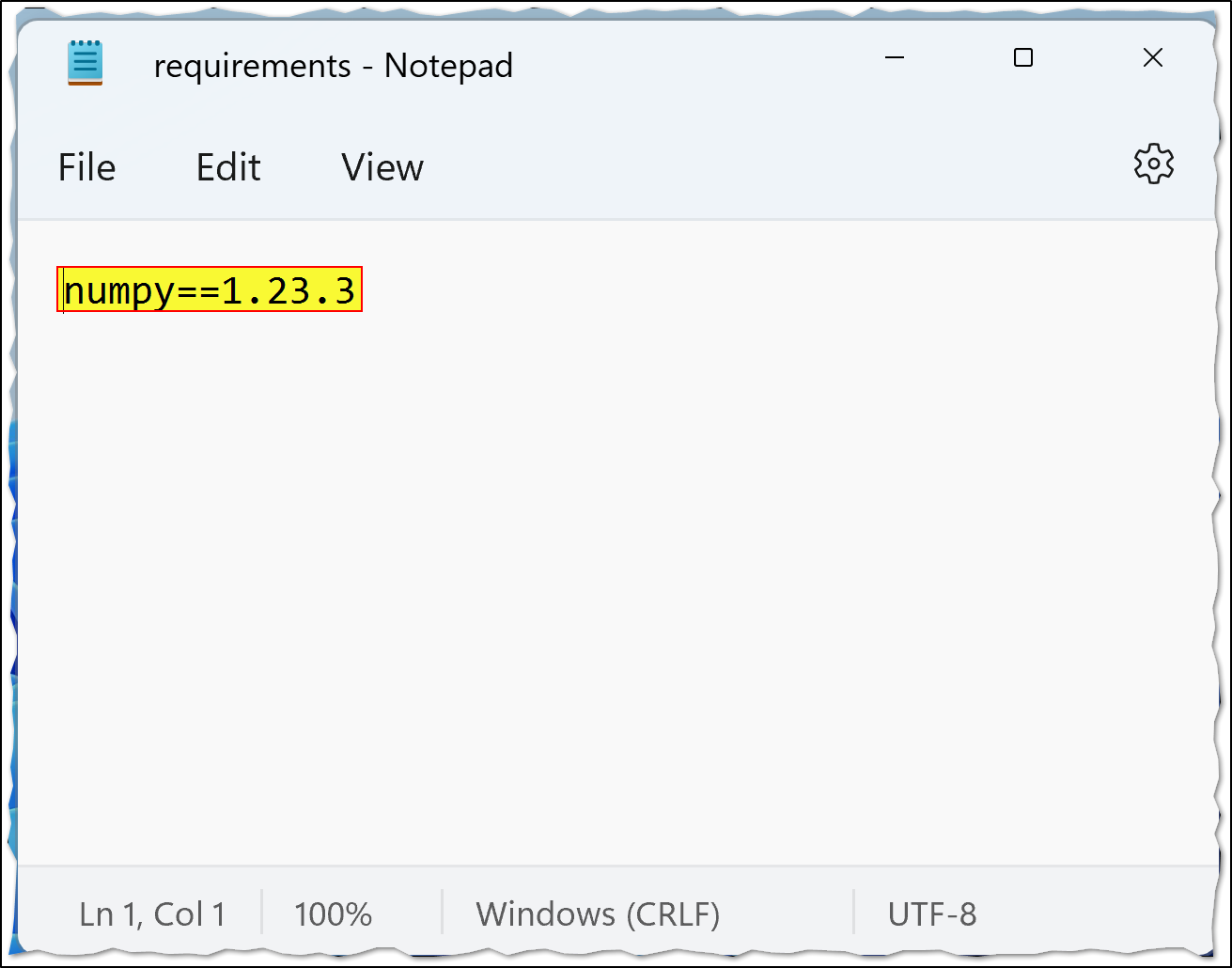

In addition to the above, I have also tried adding a package via the 'Requirements files' option using a Requirements.txt file. That failed too, with the following message:

```
ProxyLivyApiAsyncError

LibraryManagement - Spark Job for sparkpoolcustom in workspace **** in subscription **** failed with status:
```

```json
{
  "id": 9,
  "appId": "application_****",
  "appInfo": {
    "driverLogUrl": "http://vm-****/node/containerlogs/container_****/trusted-service-user",
    "sparkUiUrl": "http://vm-****/proxy/application_****/",
    "isSessionTimedOut": null,
    "isStreamingQueryExists": "false",
    "impulseErrorCode": null,
    "impulseTsg": null,
    "impulseClassification": null
  },
  "state": "dead",
  "log": [
    "Elapsed: -",
    "",
    "An HTTP error occurred when trying to retrieve this URL.",
    "HTTP errors are often intermittent, and a simple retry will get you on your way.",
    "'https://conda.anaconda.org/conda-forge/linux-64'",
    "",
    "",
    "22/10/07 13:35:00 ERROR b\"Warning: you have pip-installed dependencies in your environment file, but you do not list pip itself as one of your conda dependencies. Conda may not use the correct pip to install your packages, and they may end up in the wrong place. Please add an explicit pip dependency. I'm adding one for you, but still nagging you.\\nCollecting package metadata (repodata.json): ...working... failed\\n\"",
    "22/10/07 13:35:00 INFO Cleanup following folders and files from staging directory:",
    "22/10/07 13:35:04 INFO Staging directory cleaned up successfully"
  ],
  "registeredSources": null
}
```
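The useful part of that status payload is buried in the `log` array: the job state is `dead`, the only ERROR line combines conda's pip warning with `Collecting package metadata (repodata.json): ...working... failed`, and the URL it could not retrieve is the conda-forge channel. When comparing attempts, a small helper can pull those pieces out. This is a minimal Python sketch; `summarize_status` is a hypothetical helper that relies only on the `state` and `log` fields visible in the message above.

```python
import json


def summarize_status(payload: str) -> dict:
    """Extract the job state, quoted URLs and ERROR lines from a status payload.

    Hypothetical helper: it only relies on the "state" and "log" fields that
    appear in the error message above.
    """
    status = json.loads(payload)
    log = status.get("log") or []  # treat a missing or null log as empty
    return {
        "state": status.get("state"),
        # A bare quoted URL on its own line, e.g. the conda channel that failed.
        "urls": [line.strip("'") for line in log if "://" in line and " " not in line],
        # Lines the job itself tagged with the ERROR level.
        "errors": [line for line in log if " ERROR " in line],
    }


if __name__ == "__main__":
    sample = json.dumps({
        "state": "dead",
        "log": [
            "An HTTP error occurred when trying to retrieve this URL.",
            "'https://conda.anaconda.org/conda-forge/linux-64'",
            "22/10/07 13:35:00 ERROR b\"Collecting package metadata (repodata.json): ...working... failed\\n\"",
        ],
    })
    print(summarize_status(sample)["urls"])  # ['https://conda.anaconda.org/conda-forge/linux-64']
```

Run against the full message above, `urls` would contain only the conda-forge channel (the driver log and Spark UI URLs sit under `appInfo`, not `log`), which suggests the job failed while downloading package metadata, before any package from the requirements file was installed.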

This is incredibly frustrating, especially given that it takes about 10 minutes for the Spark pool to respond each time you try!
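Since each attempt costs a ten-minute round trip, it may be worth checking the requirements file locally before uploading it. This is a minimal Python sketch, assuming the pool expects `pip freeze`-style lines pinned as `name==version` (an assumption, not something the error above confirms); `unpinned_lines` is a hypothetical helper. It would not catch a network failure like the one above; it only rules out formatting problems in the file itself.

```python
import re

# Assumed format: one "name==version" pin per line, as produced by `pip freeze`.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*==[A-Za-z0-9.*+!_-]+$")


def unpinned_lines(text: str) -> list[str]:
    """Return non-empty, non-comment lines that are not pinned as name==version."""
    bad = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if line and not PINNED.match(line):
            bad.append(line)
    return bad


if __name__ == "__main__":
    # Sample contents only; these are not the packages from the original attempt.
    sample = "numpy==1.23.3\n# a comment\nrequests>=2.0\n\n"
    print(unpinned_lines(sample))  # ['requests>=2.0']
```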

Cheers,

Matty
