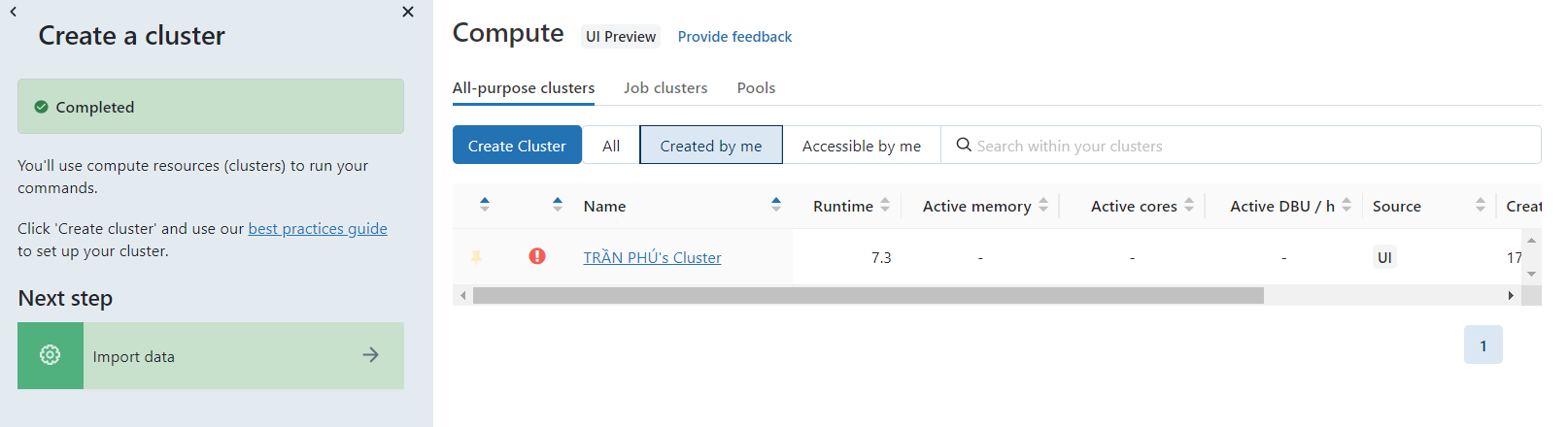

Hello @TRẦN ĐỨC PHÚ ,

Thanks for the question and for using the MS Q&A platform.

This is a known issue when you create a multi-node cluster.

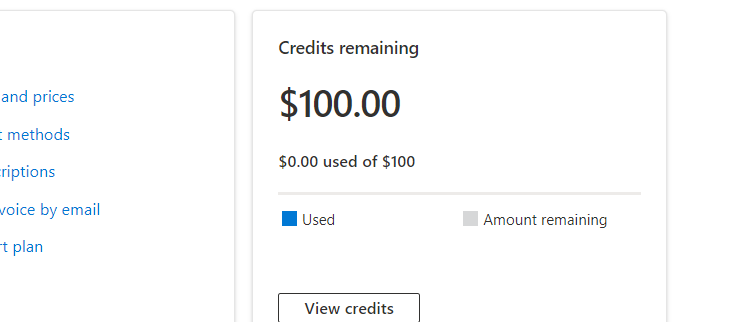

Multi-node Azure Databricks clusters are not available under the Azure free trial/Student/Pass subscription.

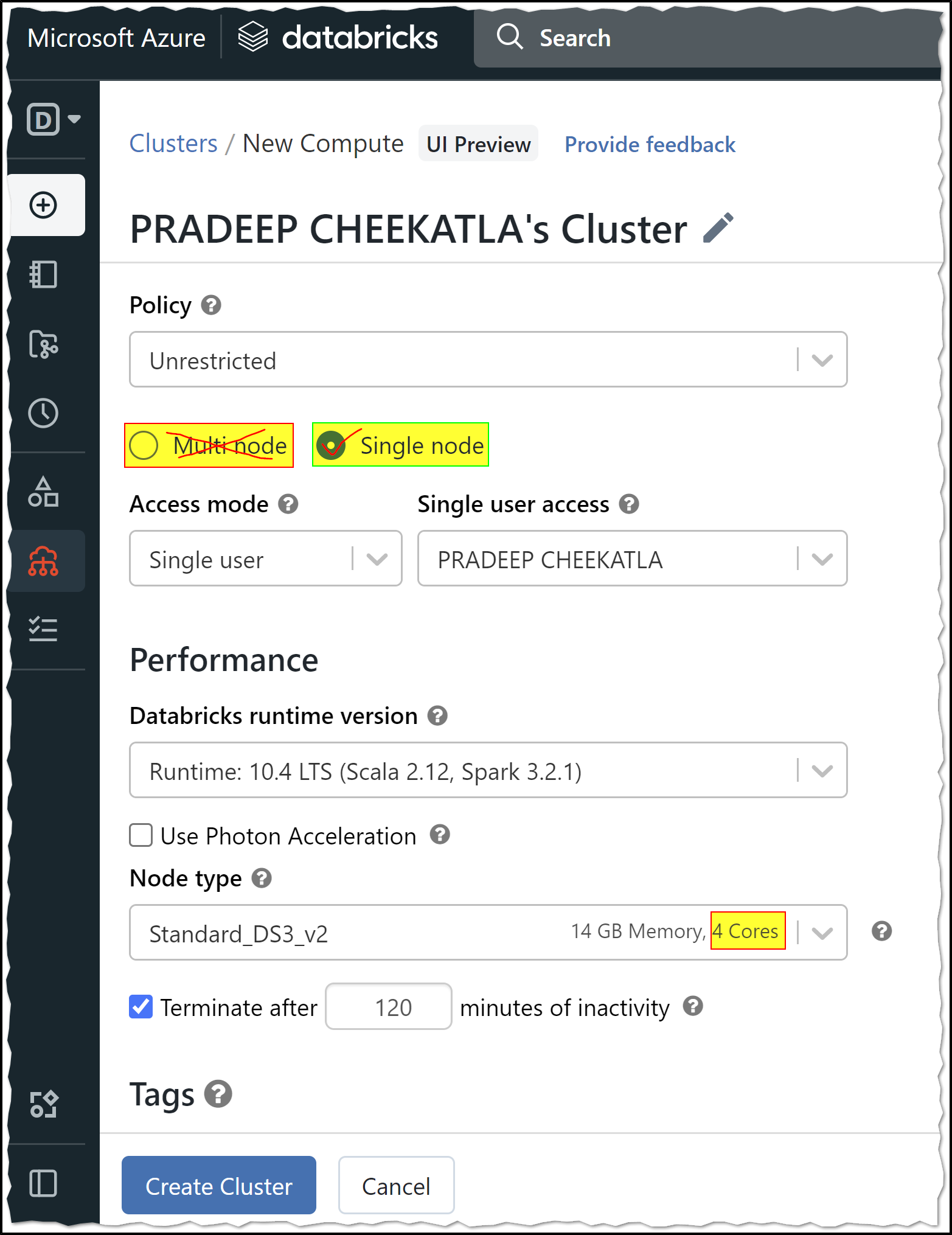

Reason: The Azure free trial/Student/Pass subscription has a limit of 4 cores, so you cannot create a multi-node Databricks cluster with a Student subscription because it requires more than 8 cores.
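To make the core arithmetic concrete, here is a minimal sketch in Python. It assumes every node (driver and workers) has 4 cores, as with a VM size such as Standard_DS3_v2; the function names are hypothetical and only for illustration, not part of any Azure or Databricks API.

```python
def cores_required(num_workers: int, cores_per_node: int = 4) -> int:
    """Total cores for one driver plus `num_workers` workers of the same size."""
    return (1 + num_workers) * cores_per_node


def fits_quota(num_workers: int, quota: int = 4, cores_per_node: int = 4) -> bool:
    """True if the cluster fits within the subscription's core limit."""
    return cores_required(num_workers, cores_per_node) <= quota


# Assumption: 4-core limit (from the answer above) and 4-core nodes.
for workers in (0, 1, 2):
    needed = cores_required(workers)
    status = "fits" if fits_quota(workers) else "exceeds the 4-core limit"
    print(f"{workers} worker(s): {needed} cores, {status}")
```

Under these assumptions, only the driver-only (zero worker) configuration stays within the 4-core limit; adding even one worker of the same size already exceeds it.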

You need to upgrade to a Pay-As-You-Go subscription to create multi-node Azure Databricks clusters.

Note: Azure Student subscriptions aren't eligible for limit or quota increases. If you have a Student subscription, you can upgrade to a Pay-As-You-Go subscription.

You can use an Azure Student subscription to create a Single Node cluster, which will have one driver node with 4 cores.

A Single Node cluster is a cluster consisting of a Spark driver and no Spark workers. Such clusters support Spark jobs and all Spark data sources, including Delta Lake. In contrast, Standard clusters require at least one Spark worker to run Spark jobs.
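If you create clusters through the Clusters API rather than the UI, a Single Node cluster is typically described with zero workers plus a single-node profile. The sketch below builds such a request body in Python. The field names follow the Databricks Clusters API as I understand it, so please verify them against the current documentation. The helper name, cluster name, runtime version placeholder, and node type are assumptions for illustration only.

```python
import json


def single_node_cluster_spec(cluster_name, spark_version, node_type_id):
    """Build a cluster spec with a Spark driver and no Spark workers."""
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": 0,  # driver only, no workers
        "spark_conf": {
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",  # run Spark locally on the driver
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
    }


# Hypothetical values: replace the runtime version with one available
# in your workspace, and pick a node type that fits your core limit.
spec = single_node_cluster_spec(
    cluster_name="single-node-demo",
    spark_version="<runtime-version>",
    node_type_id="Standard_DS3_v2",
)
print(json.dumps(spec, indent=2))
```

The printed JSON is only the request body; sending it to your workspace (for example with the Databricks CLI or a REST call) is left out here because it depends on your workspace URL and authentication setup.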

Single Node clusters are helpful in the following situations:

- Running single node machine learning workloads that need Spark to load and save data

- Lightweight exploratory data analysis (EDA)

Reference: Azure Databricks - Single Node clusters

Hope this helps. Do let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators