Update for anyone experiencing the same issue.

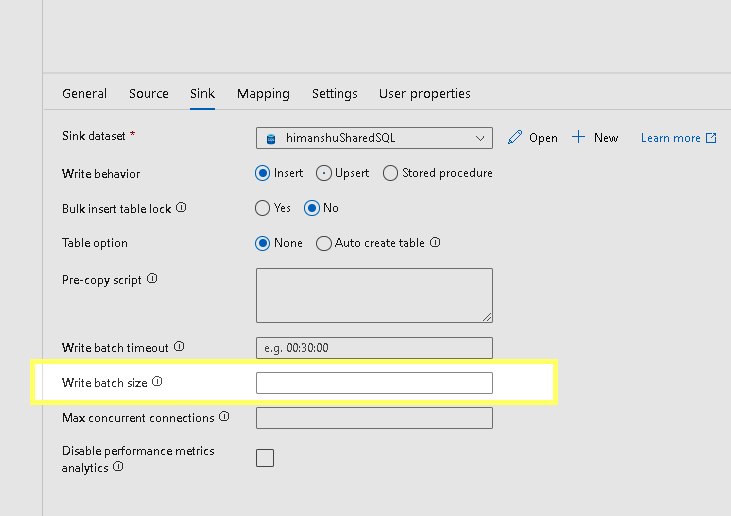

The ADF Copy Data activity is dynamically setting the "Write Batch Size" setting, so where the source file is over a certain size it is processing in batches, i.e. 75k records is processed as 8 10k batches.

What is not apparent when you run the pipeline is the fact that the Copy Data activity will pass the data to the SQL Procedure and call the procedure 8 time, this is not reflected in the activity logs or the input output logs for the activity, it feeds back as one single run, i.e. RowsRead & RowsCopied read as 75 k not 8 entries totally 75k.

This means that if the SQL procedure you are passing through has a delete or truncate step or contains logic that includes derivation on the whole dataset you will get unexpected or incorrect results.

I have altered our pipeline to copy the data to a transient table, I then call another SQL procedure that acts as a wrapper to pass the data from that table to my original load procedures so that they can operate as designed.

Regards

Nigel

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is