For some reason it's not letting me post the following as a comment, even though it's within the 1600-character limit.

Thanks for your suggestion, @PRADEEPCHEEKATLA.

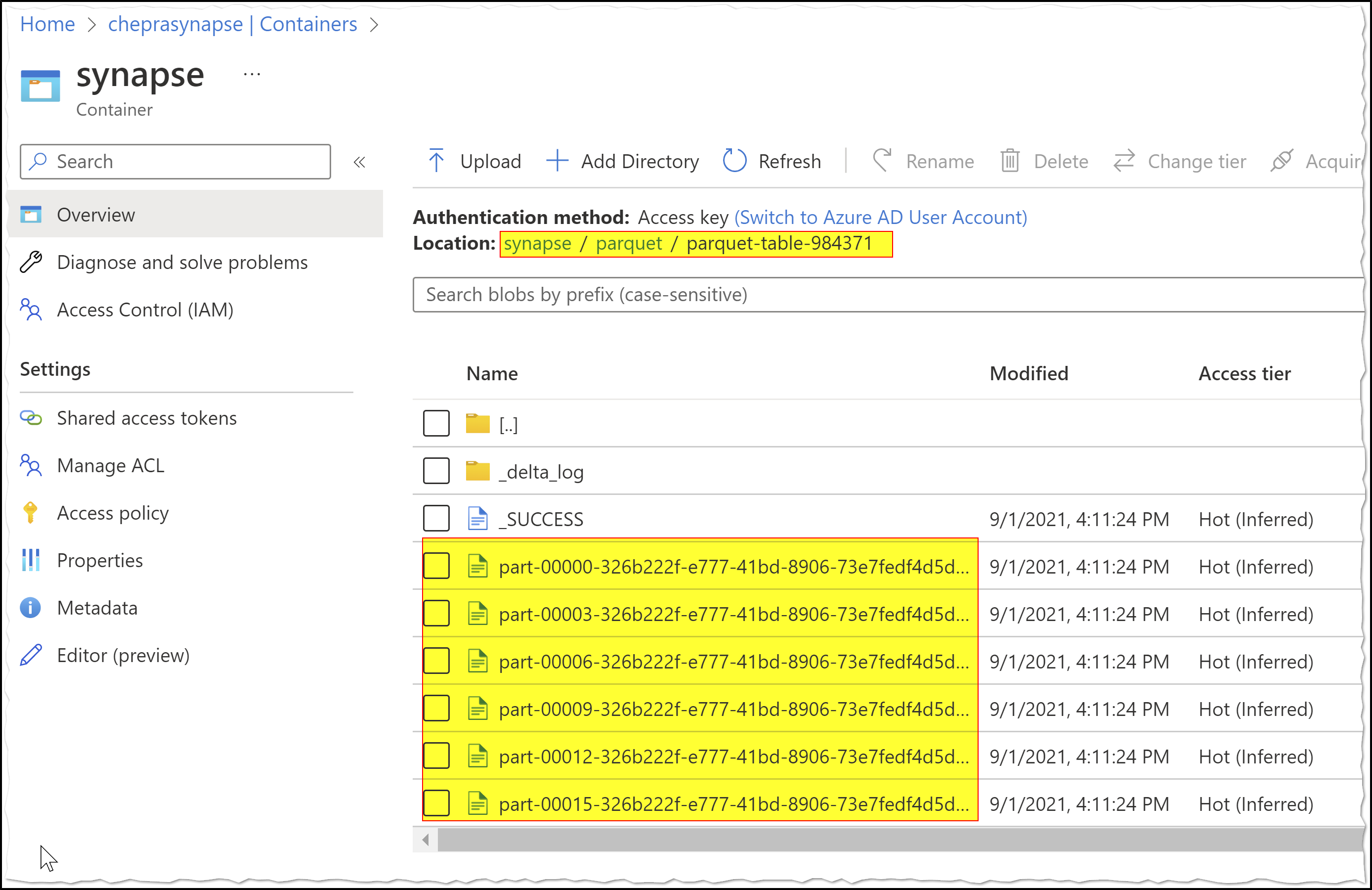

My file is located at `Container_name/Project_name/Year/Month/File.parquet/`, which is a folder containing:

- `_SUCCESS`
- `_committed_1387639002`
- `_started_1387639002`
- `part-00000-tid-3006757700744507944-2dececd1-5fa7-429a-9fbe-3b21c1415734-16-1-c000.snappy.parquet`
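For reference, the `_SUCCESS`, `_committed_…` and `_started_…` entries are marker files written by Spark when the dataset was saved (the `_committed_`/`_started_` pair is typical of Databricks). They are unlikely to be the cause here: `pyarrow.dataset.dataset()` skips files whose names begin with `.` or `_` by default (its `ignore_prefixes` parameter), so discovery should only pick up the `part-…` file. The sketch below does not call pyarrow; it just reproduces that default filtering rule in plain Python on the listing above.

```python
# Directory listing shown above (file names only).
listing = [
    "_SUCCESS",
    "_committed_1387639002",
    "_started_1387639002",
    "part-00000-tid-3006757700744507944-2dececd1-5fa7-429a-9fbe-3b21c1415734-16-1-c000.snappy.parquet",
]

# Mirrors the default ignore_prefixes=['.', '_'] of pyarrow.dataset.dataset():
# names starting with "." or "_" are skipped during file discovery.
IGNORE_PREFIXES = (".", "_")


def data_files(names):
    """Return only the names that would not be ignored by the prefix rule."""
    return [n for n in names if not n.startswith(IGNORE_PREFIXES)]


print(data_files(listing))
```

Only the `part-00000-…snappy.parquet` entry survives the filter.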

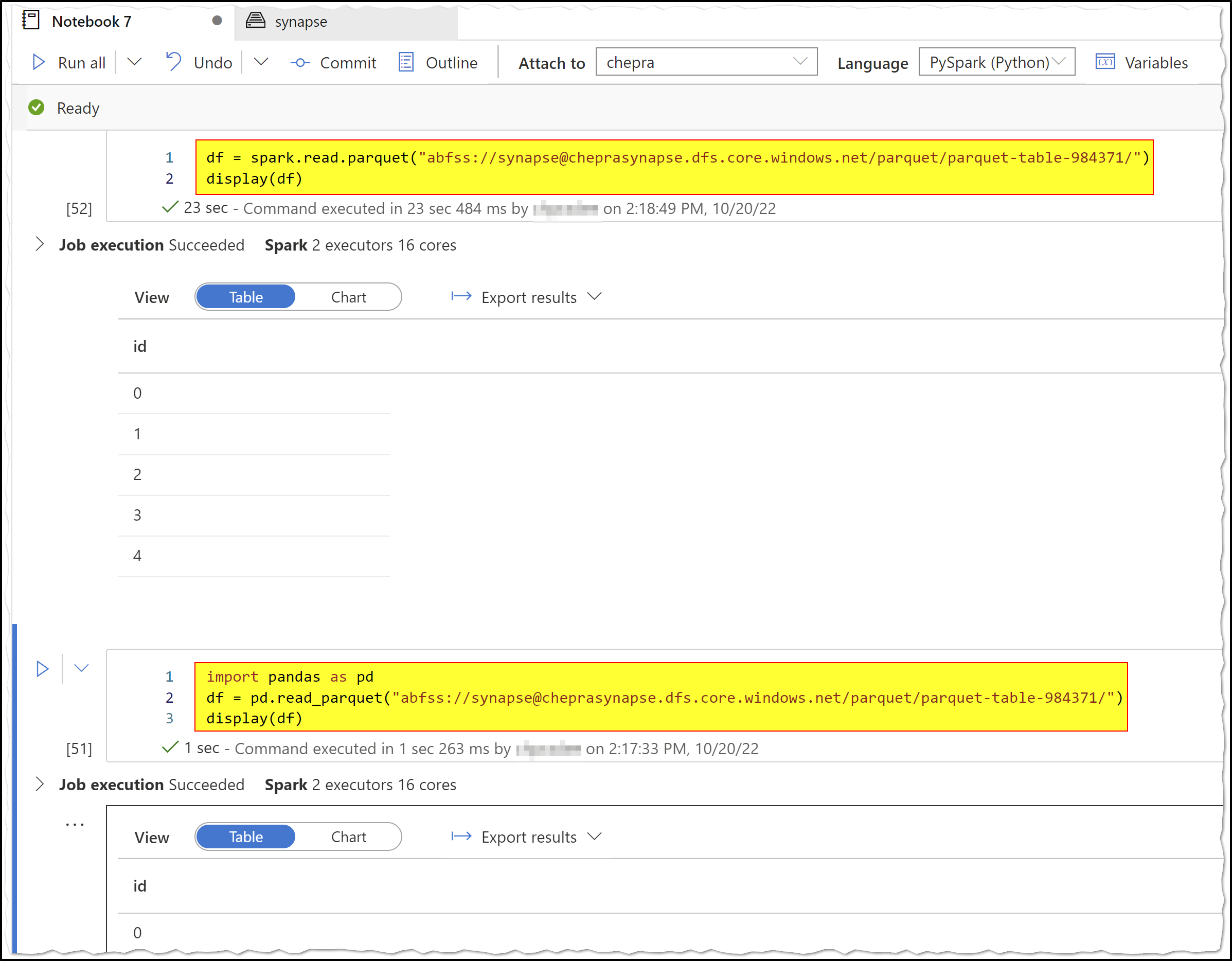

Here is the code I ran (imports included):

```python
import azure.identity
import pyarrow.dataset
import pyarrow.fs
import pyarrowfs_adlgen2

# Account-level handler authenticated with DefaultAzureCredential
handler = pyarrowfs_adlgen2.AccountHandler.from_account_name('acc_name', azure.identity.DefaultAzureCredential())
fs = pyarrow.fs.PyFileSystem(handler)

print("Path is {}".format("Container_name/Project_name/Year/Month/File.parquet/"))
ds = pyarrow.dataset.dataset("Container_name/Project_name/Year/Month/File.parquet/", filesystem=fs)
data_from_blob = ds.to_table()
```

Error message:

```
azure.core.exceptions.HttpResponseError: (InvalidResourceName) The specifed resource name contains invalid characters.
Code: InvalidResourceName
Message: The specifed resource name contains invalid characters.
```
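One possible cause worth ruling out, offered as an assumption rather than a confirmed diagnosis: Azure Storage returns `InvalidResourceName` when a container name breaks its naming rules (3 to 63 characters; only lowercase letters, digits and hyphens; must start with a letter or digit; every hyphen must be followed by a letter or digit, so no `--` and no trailing `-`). Assuming `AccountHandler` treats the first path segment as the container name, a real container name containing uppercase letters or underscores (like the placeholder `Container_name`) would produce this error no matter which file the rest of the path points to. The helpers `split_container` and `is_valid_container_name` below are hypothetical and only check a path string locally; they do not contact Azure.

```python
import re

# Container naming rules: lowercase letters, digits and hyphens; starts with a
# letter or digit; each hyphen must be followed by a letter or digit.
# Length (3-63) is checked separately in is_valid_container_name().
_CONTAINER_RE = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9]))*")


def split_container(path: str) -> tuple[str, str]:
    """Hypothetical helper: split 'container/dir/file' into its first
    segment (assumed to be the container) and the remaining blob path."""
    container, _, rest = path.strip("/").partition("/")
    return container, rest


def is_valid_container_name(name: str) -> bool:
    """Hypothetical helper: True if name satisfies the naming rules above."""
    return 3 <= len(name) <= 63 and _CONTAINER_RE.fullmatch(name) is not None


if __name__ == "__main__":
    path = "Container_name/Project_name/Year/Month/File.parquet/"
    container, blob_path = split_container(path)
    print(container, is_valid_container_name(container))
    # prints: Container_name False
```

If the real container name passes this check, the cause is more likely elsewhere in the path or in the handler setup.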

Then I tried pointing directly at the part file:

```python
ds = pyarrow.dataset.dataset("Container_name/Project_name/Year/Month/File.parquet/part-00000-tid-3006757700744507944-2dececd1-5fa7-429a-9fbe-3b21c1415734-16-1-c000.snappy.parquet", filesystem=fs)
```

I got the same error message as above.

Then I renamed the part file and tried both of these:

```python
ds = pyarrow.dataset.dataset("Container_name/Project_name/Year/Month/File.parquet/renamed.parquet", filesystem=fs)
# or
ds = pyarrow.dataset.dataset("Container_name/Project_name/Year/Month/File.parquet/renamed.snappy.parquet", filesystem=fs)
```

Same error as above.

Am I doing something wrong?
