Hi @John K,

Sorry for the delayed response.

Here are two options suggested by our internal team.

Option 1

a. Disconnect the current export job to ADLS

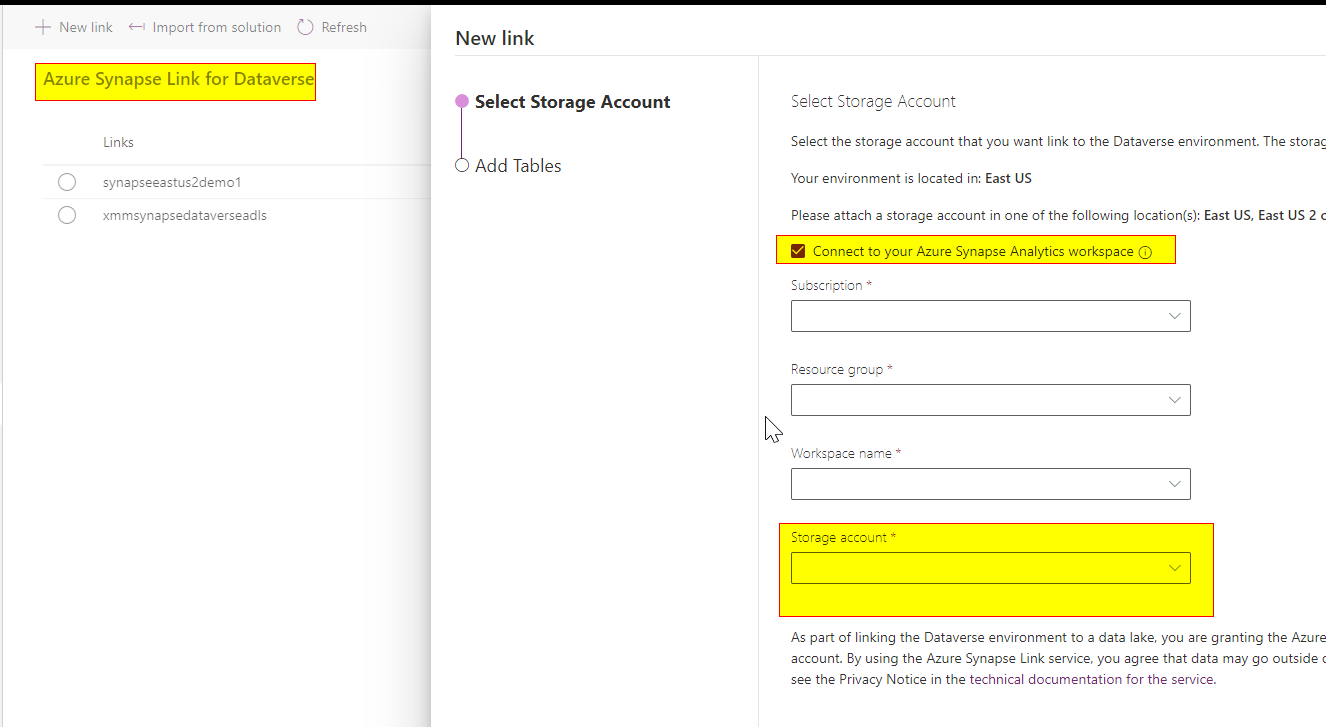

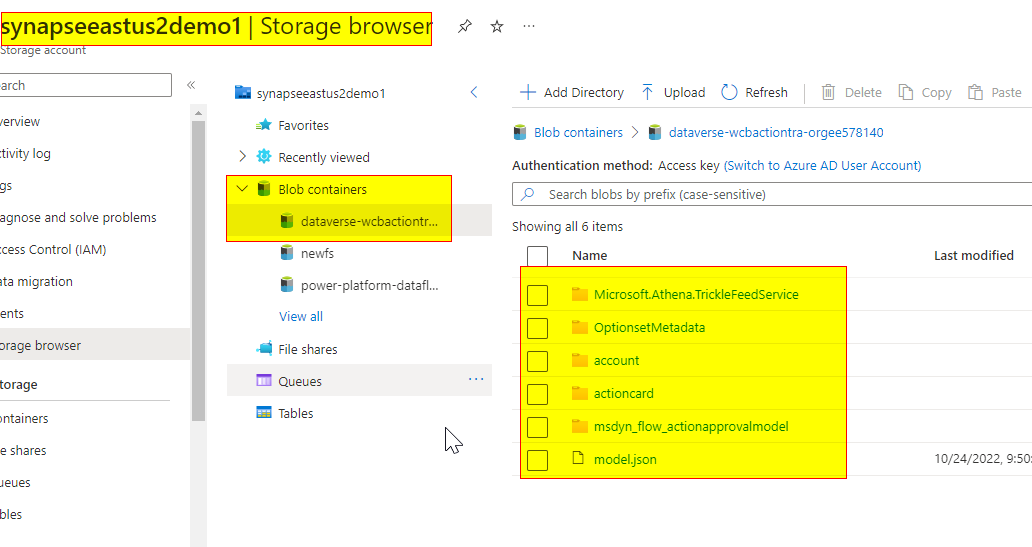

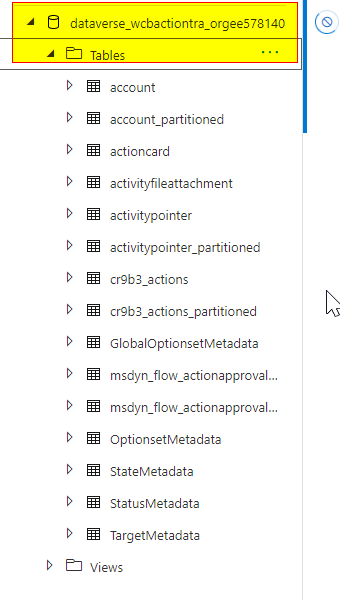

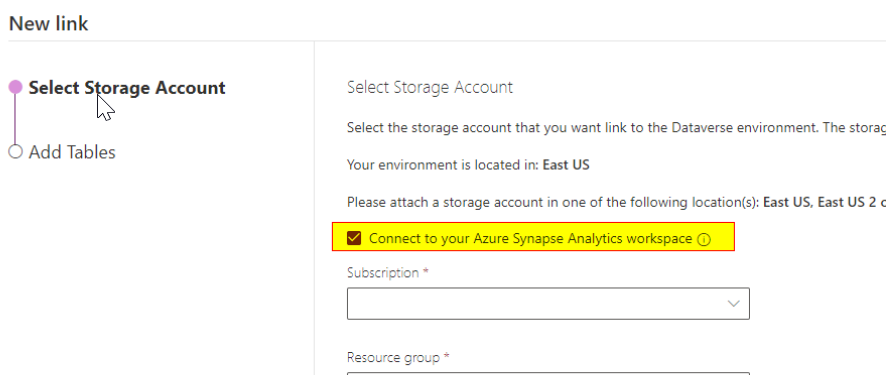

b. Set up a Synapse workspace

c. Reconnect the integration with Synapse Link and map it to the Synapse workspace (see the screenshot below)

d. Use a pipeline Copy Activity to move data from Synapse serverless SQL (the Dynamics entities) into SQL IaaS through a self-hosted integration runtime (SHIR)
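
For step d, the Copy Activity could look roughly like the sketch below. It builds the JSON definitions in Python so they can be printed and pasted into the pipeline's code view. All names here (`SynapseServerlessAccount`, `SqlVmAccount`, `MySelfHostedIR`, the `dbo.account` query, and the connection string) are placeholders made up for illustration. `SqlDWSource` and `SqlServerSink` are the source and sink types I would expect for the Azure Synapse Analytics and SQL Server connectors, so please verify them against your own linked services. Note that the SHIR is not set on the activity itself; it is referenced from the SQL Server linked service through `connectVia`.

```python
import json


def build_sql_vm_linked_service(name, integration_runtime, connection_string):
    """SQL Server linked service that connects through a self-hosted IR.

    All argument values are hypothetical placeholders.
    """
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": connection_string},
            # This reference is what routes traffic through the SHIR.
            "connectVia": {
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


def build_copy_activity(name, source_dataset, sink_dataset, query):
    """Copy Activity reading from Synapse serverless SQL, writing to SQL Server."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Assumed source type for the Azure Synapse Analytics connector.
            "source": {"type": "SqlDWSource", "sqlReaderQuery": query},
            "sink": {"type": "SqlServerSink"},
        },
    }


linked_service = build_sql_vm_linked_service(
    "SqlVmLinkedService",            # hypothetical name
    "MySelfHostedIR",                # hypothetical SHIR name
    "<your SQL Server connection string>",
)
activity = build_copy_activity(
    "CopyAccountToSqlVm",            # hypothetical name
    "SynapseServerlessAccount",      # hypothetical source dataset
    "SqlVmAccount",                  # hypothetical sink dataset
    "SELECT * FROM dbo.account",     # assumed entity table name
)

print(json.dumps(linked_service, indent=2))
print(json.dumps(activity, indent=2))
```

You would typically create one such activity per entity, or wrap a single parameterized activity in a ForEach loop over the list of entity names.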

Option 2

a. Use Data Flows to read from Dataverse and stage the data in an Azure Storage account in CSV/Parquet format

b. Use Copy Activity to read from the staged copy and send it to SQL IaaS.
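
As a rough illustration of step b in Option 2, the sketch below builds a Copy Activity definition that reads staged Parquet files and writes them to SQL Server. It assumes the staging account is ADLS Gen2 (hence `AzureBlobFSReadSettings`; a plain Blob Storage account would use `AzureBlobStorageReadSettings` instead) and that the files were staged as Parquet rather than CSV. The dataset names and the `staging/account` folder are hypothetical.

```python
import json


def build_staged_copy_activity(staged_dataset, sink_dataset, folder):
    """Copy Activity that loads staged Parquet files into SQL Server.

    Dataset names and folder path are hypothetical placeholders.
    """
    return {
        "name": "CopyStagedParquetToSqlVm",
        "type": "Copy",
        "inputs": [{"referenceName": staged_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {
                "type": "ParquetSource",
                "storeSettings": {
                    # Assumes ADLS Gen2 as the staging store.
                    "type": "AzureBlobFSReadSettings",
                    "recursive": True,
                    "wildcardFolderPath": folder,
                    "wildcardFileName": "*.parquet",
                },
            },
            "sink": {"type": "SqlServerSink"},
        },
    }


staged_copy = build_staged_copy_activity(
    "StagedAccountParquet",   # hypothetical staged dataset
    "SqlVmAccount",           # hypothetical sink dataset
    "staging/account",        # hypothetical staging folder
)
print(json.dumps(staged_copy, indent=2))
```

As in Option 1, the SQL Server linked service behind the sink dataset still needs to connect through the SHIR if the SQL VM is not publicly reachable.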

I hope this helps.
