Hello @D1SM4L, I think I found the real cause.

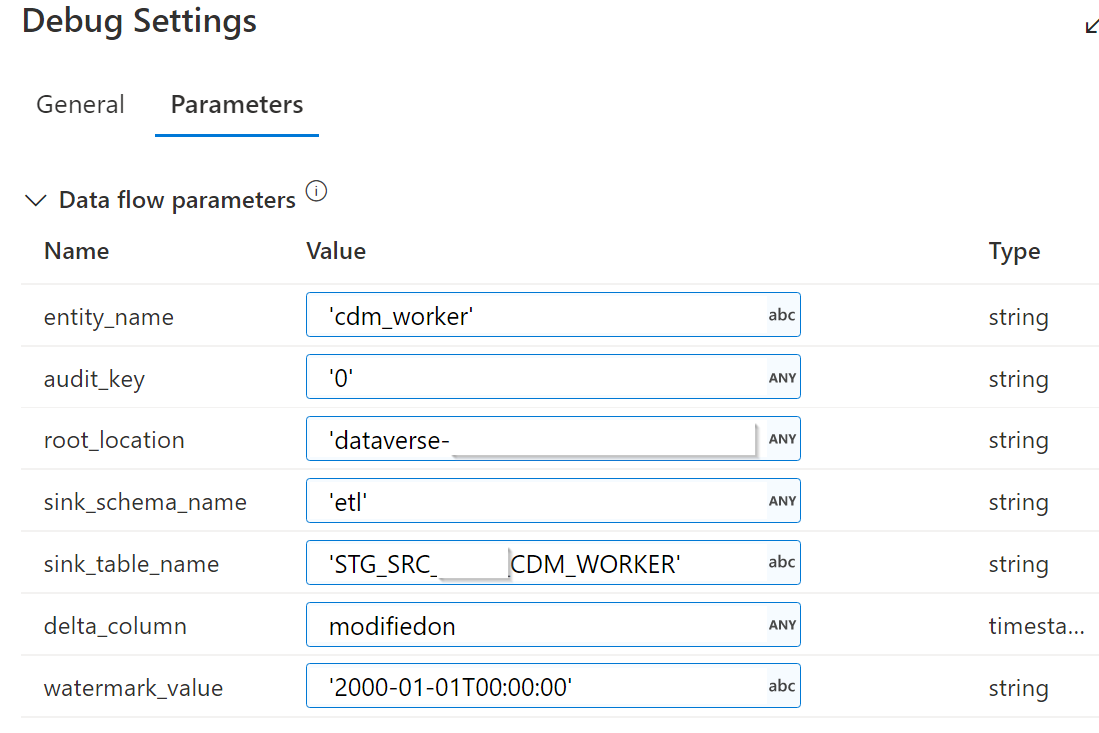

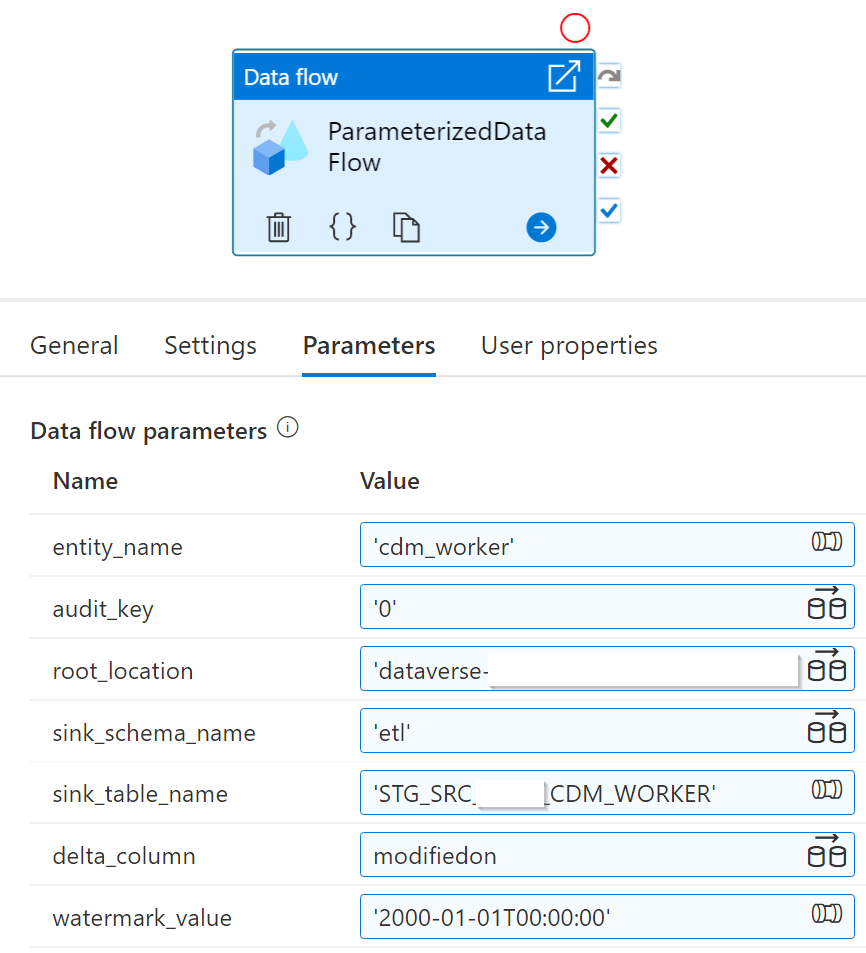

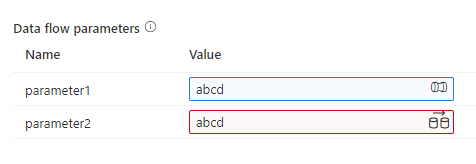

After some more consideration, I noticed that your screenshot contains both pipeline expressions and Data Flow expressions, as indicated by the two different icons to the right of each value.

This matters because the two types have different syntax, that is, different rules for how values must be written. Quoting is one example. A Data Flow expression requires single quotes around a string, while a pipeline expression does not require quotes around a string unless a function is involved (`abcd` is fine, but `@concat(ab,cd)` must be written as `@concat('ab','cd')`). The screenshots below show an example of two parameters, both strings without quotes.
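To make the two quoting rules concrete, here is a minimal toy model in Python. It is a hypothetical sketch, not Azure Data Factory's real parser: the function names `eval_dataflow_string` and `eval_pipeline_string` are made up for illustration, and only `@concat` is modelled on the pipeline side.

```python
import re

# Hypothetical, simplified model of the quoting rules described above.
# This is NOT Azure Data Factory's parser; it only shows when quotes are needed.

_LITERAL = re.compile(r"^'([^']*)'$")  # a single-quoted string literal


def eval_dataflow_string(expr: str) -> str:
    """Data Flow expression: a string must be wrapped in single quotes."""
    m = _LITERAL.match(expr.strip())
    if m is None:
        raise ValueError(f"not a quoted string literal: {expr!r}")
    return m.group(1)  # the quotes are delimiters, not part of the value


def eval_pipeline_string(expr: str) -> str:
    """Pipeline expression: plain text is taken as-is; '@' starts a function."""
    if not expr.startswith("@"):
        return expr  # no quotes needed, and any quotes typed are kept verbatim
    m = re.fullmatch(r"@concat\((.*)\)", expr)
    if m is None:
        raise ValueError(f"only @concat is modelled in this sketch: {expr!r}")
    parts = []
    for arg in m.group(1).split(","):
        lit = _LITERAL.match(arg.strip())
        if lit is None:
            # Inside a function call, string arguments must be quoted.
            raise ValueError(f"function argument must be quoted: {arg!r}")
        parts.append(lit.group(1))
    return "".join(parts)


print(eval_pipeline_string("abcd"))                # abcd
print(eval_pipeline_string("@concat('ab','cd')"))  # abcd
print(eval_dataflow_string("'abcd'"))              # abcd
```

In this model, `eval_pipeline_string("@concat(ab,cd)")` and `eval_dataflow_string("abcd")` both raise `ValueError`, mirroring the cases where quotes are required.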

Since a pipeline expression accepts strings without quotes, this likely means that `'cdm_worker'` was passed through literally: the single quotes were treated as part of the string instead of as delimiters, so the value became `'cdm_worker'` (quotes included) rather than `cdm_worker`.
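The effect can be sketched in a few lines of Python, assuming the explanation above. The helper names and the `column_value` data are hypothetical; the point is only that the same typed text yields two different strings depending on the expression type.

```python
# Hypothetical illustration: the same typed text, 'cdm_worker', interpreted
# under each expression type (simplified; not ADF's real implementation).


def as_pipeline_expression(text: str) -> str:
    # Plain text (no leading '@') is passed through verbatim, quotes included.
    return text


def as_dataflow_expression(text: str) -> str:
    # A Data Flow string literal is delimited by single quotes; strip them.
    if len(text) >= 2 and text[0] == text[-1] == "'":
        return text[1:-1]
    raise ValueError(f"not a quoted string literal: {text!r}")


typed = "'cdm_worker'"
column_value = "cdm_worker"  # hypothetical value stored in the data

pipeline_value = as_pipeline_expression(typed)
dataflow_value = as_dataflow_expression(typed)

print(repr(pipeline_value), pipeline_value == column_value)  # "'cdm_worker'" False
print(repr(dataflow_value), dataflow_value == column_value)  # 'cdm_worker' True
```

Under this model, any filter or lookup comparing against `cdm_worker` would match nothing when the parameter is set as a pipeline expression, which is consistent with the behaviour you saw.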

First, please try making all of them the same type. Using Data Flow expressions for all of them is probably best.

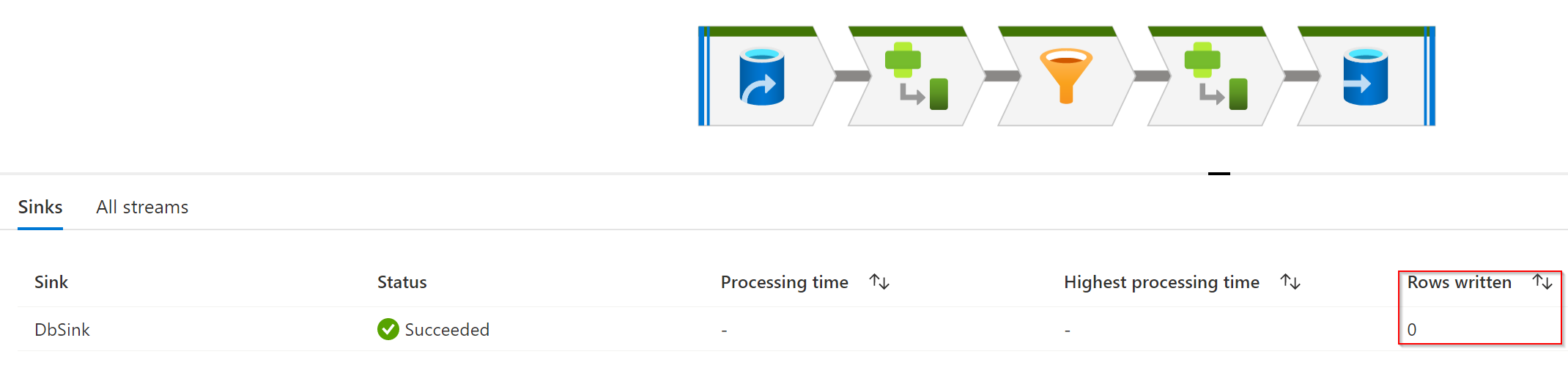

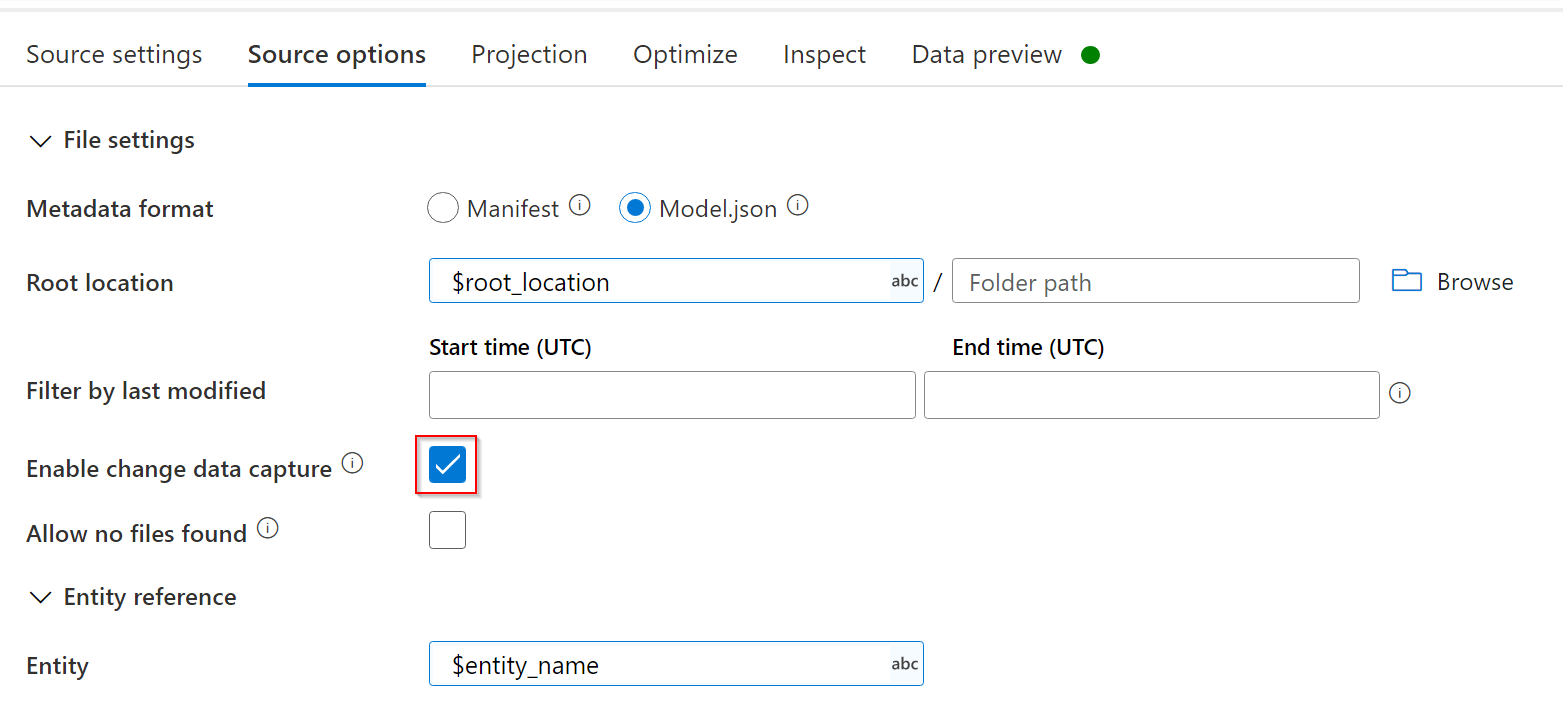

This showed up as a difference between Data Flow debug and the pipeline run because the choice between pipeline expression and Data Flow expression only exists once you put the Data Flow activity in a pipeline.

Hope this helps. Please let us know if you have any further queries.
