Hello @Devender,

Just to add to what @AnnuKumari-MSFT called out:

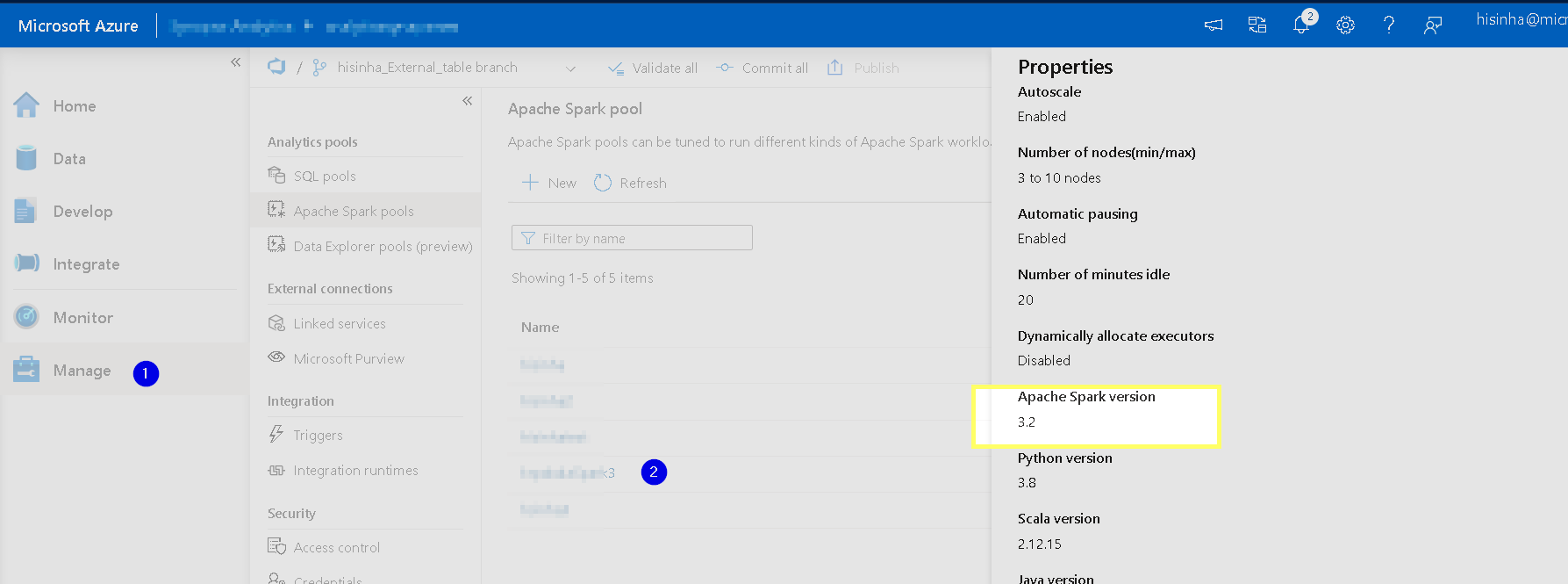

The SQL `MERGE INTO` command for Delta tables is only supported on Spark 3.0 and above, so please make sure you check your Spark version (it took me half a day to figure this out).
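One quick way to confirm this from the notebook is to look at `spark.version`. The helper below is a minimal sketch: `supports_sql_merge` is a hypothetical name, and it only checks the Spark major version, assuming the version string starts with the major number (for example `3.1.2`).

```python
def supports_sql_merge(spark_version: str) -> bool:
    """Return True if the Spark version is 3.0 or later.

    Assumes (hypothetically) a version string such as "3.1.2" or "2.4.8"
    whose first dot-separated field is the major version.
    """
    major = int(spark_version.split(".")[0])
    return major >= 3


# In a Synapse notebook you would pass the session's version:
# supports_sql_merge(spark.version)
print(supports_sql_merge("3.1.2"))  # True
print(supports_sql_merge("2.4.8"))  # False
```

If this returns `False` for your pool, switch the pool to a Spark 3.x runtime before trying the `MERGE INTO` statement.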

I tested this on a Synapse Spark pool.

This is what I tried:

```python
import pyspark
from pyspark.sql import SparkSession
from pyspark.sql.functions import expr
from delta.tables import *

# Create (or reuse) the Spark session; in a Synapse notebook `spark` already exists
spark = SparkSession.builder.getOrCreate()

# Sample data for the two tables
table1 = [(1,'100','Yes',10),(2,'','Yes',10),(3,'50','',10)]
table2 = [(1,'Good'),(2,''),(3,'bad')]

table1columns = ["STUDENTID","ATTENDANCE","PLAYSPORTS","GIVEMARKS"]
table2columns = ["COLLEGEID","PERFORMANCE"]

df1 = spark.createDataFrame(data = table1, schema = table1columns)
df2 = spark.createDataFrame(data = table2, schema = table2columns)

df1.printSchema()
df1.show(truncate=False)
df2.printSchema()
df2.show(truncate=False)

# Save both DataFrames as Delta tables so they can be used with MERGE INTO
df1.write.mode("overwrite").format("delta").saveAsTable('campaign_data')
df2.write.mode("overwrite").format("delta").saveAsTable('Institute_data')
```

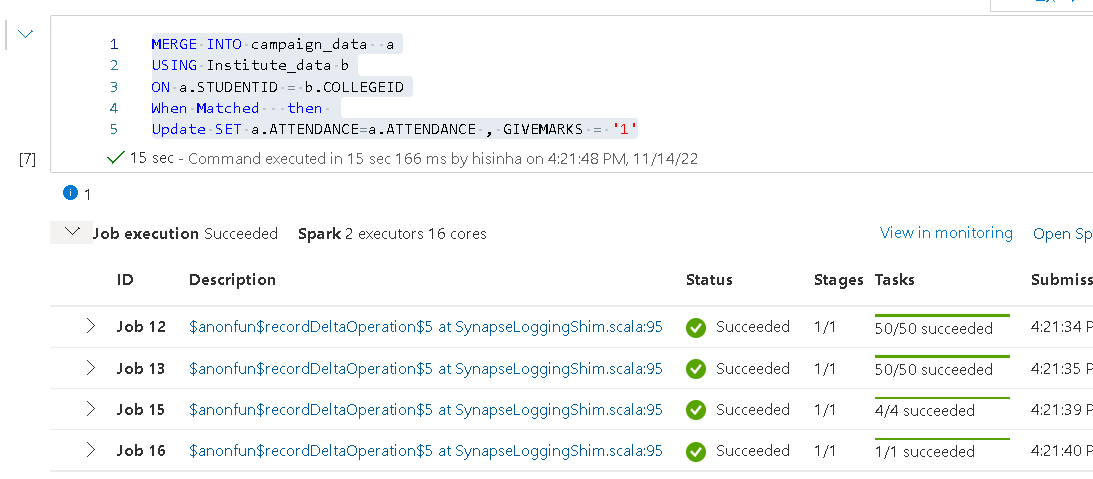

In a different notebook, I ran this Spark SQL (you can add your own logic, as you mentioned):

```sql
MERGE INTO campaign_data a
USING Institute_data b
ON a.STUDENTID = b.COLLEGEID
WHEN MATCHED THEN
  -- ATTENDANCE = ATTENDANCE is a no-op placeholder; put your own update logic here
  UPDATE SET a.ATTENDANCE = a.ATTENDANCE, a.GIVEMARKS = 1
```
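To make the effect of this MERGE on the sample rows concrete, here is a plain-Python model of it. It is not Spark code, and `merge_when_matched_update` is a hypothetical helper. It assumes the same data as the PySpark snippet: every `campaign_data` row whose `STUDENTID` matches a `COLLEGEID` gets `GIVEMARKS = 1`, `ATTENDANCE` is left as it was, and unmatched rows are untouched. It also mirrors the Delta Lake rule that a MERGE fails when more than one source row matches the same target row.

```python
from collections import Counter

# Sample rows copied from the PySpark snippet (campaign_data / Institute_data)
campaign_data = [
    {"STUDENTID": 1, "ATTENDANCE": "100", "PLAYSPORTS": "Yes", "GIVEMARKS": 10},
    {"STUDENTID": 2, "ATTENDANCE": "", "PLAYSPORTS": "Yes", "GIVEMARKS": 10},
    {"STUDENTID": 3, "ATTENDANCE": "50", "PLAYSPORTS": "", "GIVEMARKS": 10},
]
institute_data = [
    {"COLLEGEID": 1, "PERFORMANCE": "Good"},
    {"COLLEGEID": 2, "PERFORMANCE": ""},
    {"COLLEGEID": 3, "PERFORMANCE": "bad"},
]


def merge_when_matched_update(target, source):
    """Model of: MERGE INTO target a USING source b ON a.STUDENTID = b.COLLEGEID
    WHEN MATCHED THEN UPDATE SET a.ATTENDANCE = a.ATTENDANCE, a.GIVEMARKS = 1.

    Returns new row dicts; the input lists are not modified.
    """
    target_keys = {row["STUDENTID"] for row in target}
    source_counts = Counter(row["COLLEGEID"] for row in source)

    # Delta Lake rejects a MERGE where several source rows match one target row
    duplicates = [k for k, n in source_counts.items() if n > 1 and k in target_keys]
    if duplicates:
        raise ValueError(f"multiple source rows match target key(s) {duplicates}")

    merged = []
    for row in target:
        new_row = dict(row)
        if row["STUDENTID"] in source_counts:  # WHEN MATCHED
            new_row["ATTENDANCE"] = row["ATTENDANCE"]  # no-op, as in the SQL
            new_row["GIVEMARKS"] = 1
        merged.append(new_row)  # unmatched rows are kept unchanged
    return merged


for row in merge_when_matched_update(campaign_data, institute_data):
    print(row)
```

All three student IDs have a matching college ID, so after the MERGE every row should show `GIVEMARKS` = 1. You can check the real table in Spark with `spark.table("campaign_data").show()`.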

Please do let me know if you have any queries.

Thanks

Himanshu
