Hi @Anonymous ,

Thankyou for using Microsoft Q&A platform and thanks for posting your question here.

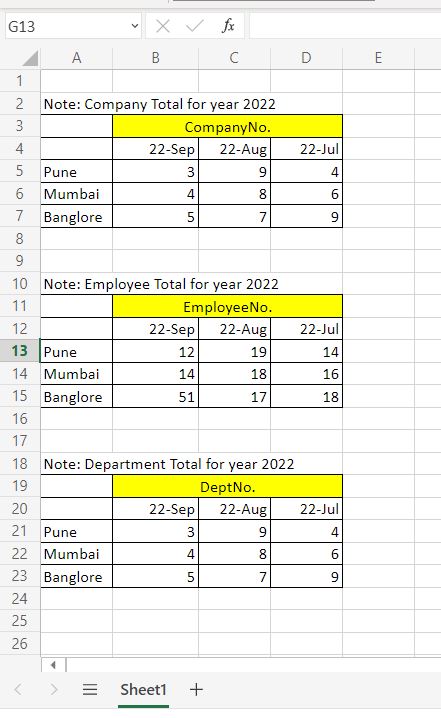

As I understand your query, you want to fetch the data from three datasets within same excel sheet based on the input dates. Please let me know if my understanding about the ask is incorrect.

To achieve the requirement, we need to use two dataflows where we need to use .csv files as intermediate storage . You can follow the below steps in mapping dataflow:

1. Add source transformation and point the dataset to the excel sheet having schema imported and preview the data.

2. Add filter transformation to filter out the rows having 'Note' values as it is not required as well as the dataset name i.e companyno, empno,deptno. Use this expression: not(like(Column_1,'Note%')) || not(isNull(toDate(Column_2)))

3. Add surrogate key transformation to create an identity column Id which will assign an incremental unique value to corresponding rows.

4. Add conditional split transformation to bifurcate the data into multiple groups based on the below conditions: Id>=1 && Id<= 4 , Id>=5 && Id<= 9 and default that will have Id>=10

5. Add select transformation and deselect Id column from all three streams.

6. Add sink transformation to each of the streams and select inline dataset so that it doesn't create too many physical datasets. Provide folderpath , filename in outputtosinglefile option and set single partition.

In second dataflow,

- Add these three sources as inline datasets. In data preview tab, click on map drifted option which will automatically create a derived column transformation to redefine the schema

- Add unpivot transformation to all three branches and ungroup by using

_c0column. Provide columnnames in unpivot key tab and unpivoted column tab. - Create a parameter in the dataflow named

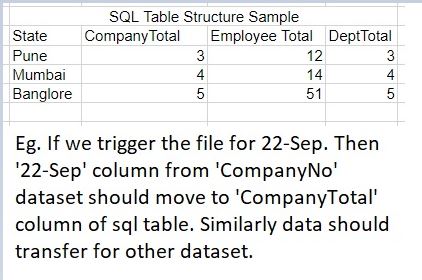

inputdatewith string datatype. Add an alter row transformation and select 'Update if' condition having this expression:dates==$inputdate - Add sink transformation having SQL dataset. In the settings tab, desect 'Allow insert' and select 'Allow update' . In key columns, select 'state' . In mapping tab, uncheck automapping and map the columns properly. For first branch, map

_c0-->state,datacol-->companytotal. Similarly, for second branch, map_c0-->state,datacol-->employeeand for third branch,_c0-->state,datacol-->depttotal

Now, create an ADF pipeline and call both the dataflows.

- Create a pipeline parameter :

Inputdatehaving string datatype. In dataflow2, parameter tab, provide value for the dataflow parameter from the pipeline parameter as@pipeline().parameters.Inputdateand check the expression checkbox. - Run the dataflow by providing the date for which you want to extract the data.

- Check the SQL table.

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators