Hi @Sowmya Thota,

Welcome to the Microsoft Q&A platform, and thanks for posting your question here.

As per my understanding, you want to copy files from specific folders of ADLS to another folder within ADLS using ADF pipelines. Please let me know if my understanding is incorrect.

You can try one of the approaches below:

Approach 1:

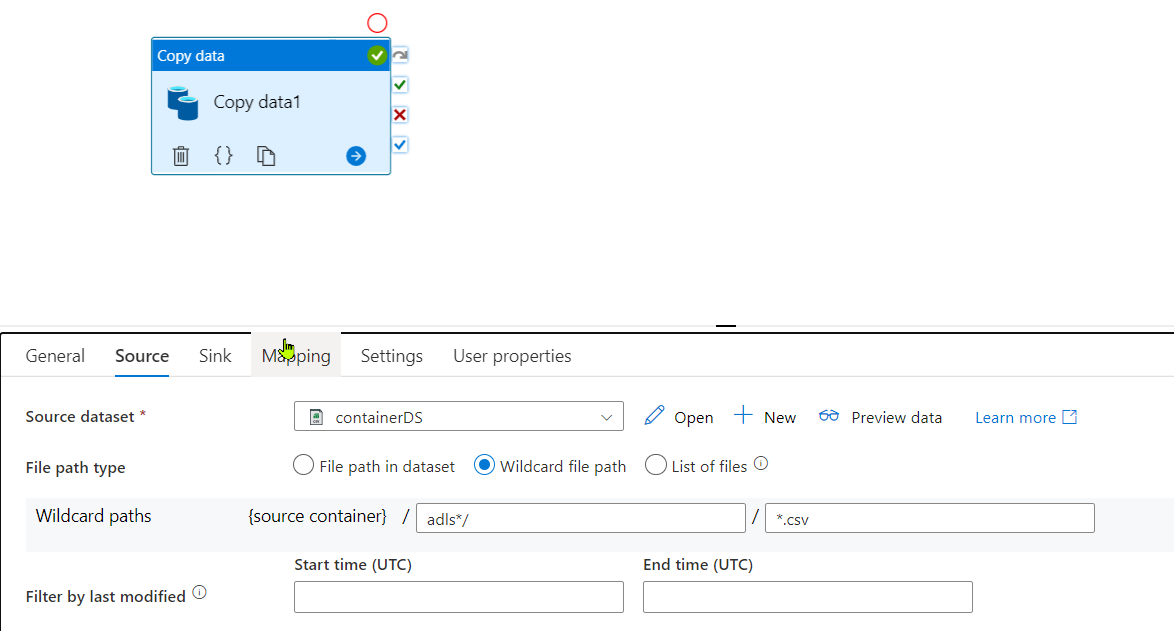

Drag a Copy activity into the ADF pipeline and, in the source settings, select 'Wildcard file path' as the 'File path type'. If the folders you want to copy from share a common prefix, such as 'adls_folder1', 'adls_folder2', etc., provide adls*/ as the wildcard folder path. If you want to copy only CSV files, provide '*.csv' as the wildcard file name; otherwise provide '*' to copy all files.

For more details, kindly see the 'Folder and file filter examples' section of the Azure Data Factory connector documentation.
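Before running the pipeline, it can help to preview which paths a pair of wildcard patterns would pick up. The snippet below is only a rough local sketch: it uses Python's `fnmatch` module as a stand-in for ADF's wildcard matching (the two are not guaranteed to behave identically in every case), and the folder and file names in `sample_paths` are made up for illustration. The folder pattern is written without the trailing slash because the sketch compares it against the folder name only.

```python
from fnmatch import fnmatchcase


def preview_matches(paths, folder_pattern, file_pattern):
    """Return the paths whose folder and file name both match the patterns.

    This only approximates the wildcard behaviour locally; it does not
    call Azure Data Factory or ADLS.
    """
    matched = []
    for path in paths:
        # Split "folder/file" into its folder part and its file name.
        folder, _, filename = path.rpartition("/")
        if fnmatchcase(folder, folder_pattern) and fnmatchcase(filename, file_pattern):
            matched.append(path)
    return matched


# Hypothetical listing of an ADLS container (names are assumptions).
sample_paths = [
    "adls_folder1/sales.csv",
    "adls_folder1/notes.txt",
    "adls_folder2/orders.csv",
    "archive/old.csv",
]

# Wildcard folder path "adls*" with wildcard file name "*.csv".
csv_only = preview_matches(sample_paths, "adls*", "*.csv")

# Wildcard folder path "adls*" with wildcard file name "*".
all_files = preview_matches(sample_paths, "adls*", "*")

print(csv_only)
print(all_files)
```

With '*.csv' only the CSV files under the 'adls' folders are selected, while '*' also picks up the text file in the same folders.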

Approach 2:

- Use a Get Metadata activity with a dataset whose file path is left blank, and select 'Child items' in the Field list. This will fetch the list of all folders at that level of ADLS.

- Use a Filter activity to keep only the folders you want to extract data from, by providing an expression such as: @contains(item().name,'adls')

- Use a ForEach activity to iterate through these folders. Inside the ForEach activity, add an Execute Pipeline activity that calls a child pipeline.

- In the child pipeline, use a Get Metadata activity with a parameterized dataset that takes the folder name from a pipeline parameter passed from the parent pipeline. Select 'Child items' in the Field list. This will fetch the list of files present in each folder.

- Use a ForEach activity to iterate through each file, and add a Copy activity inside the ForEach with parameterized source and sink datasets.

For more details, kindly check out: https://www.youtube.com/watch?v=36UrhwoOKUk&list=PLsJW07-_K61KkcLWfb7D2sM3QrM8BiiYB&index=13

You can follow the same steps explained in the video, except for the If Condition activity; you can use the Copy activity directly instead.
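To make the control flow of Approach 2 easier to follow, here is a minimal local simulation in Python. It is not ADF code and does not touch ADLS: it uses a temporary directory on the local file system, and the function name `copy_matching_folders`, the sample folder names, and the choice to keep each source folder name under the sink are all assumptions made for illustration. Each step in the comments maps to one activity from the list above.

```python
import shutil
import tempfile
from pathlib import Path


def copy_matching_folders(source_root, sink_root, name_fragment):
    """Copy every file from folders whose name contains name_fragment."""
    copied = []

    # Get Metadata (child items) on the root: list the folders.
    child_folders = [p for p in sorted(source_root.iterdir()) if p.is_dir()]

    # Filter activity: same idea as @contains(item().name,'adls').
    selected = [f for f in child_folders if name_fragment in f.name]

    # Outer ForEach + Execute Pipeline: one "child pipeline" run per folder.
    for folder in selected:
        # Child pipeline Get Metadata (child items): list the files.
        files = [p for p in sorted(folder.iterdir()) if p.is_file()]

        # Inner ForEach + Copy activity: copy each file to the sink.
        for file_path in files:
            # Assumption: the sink keeps the source folder name.
            target = sink_root / folder.name / file_path.name
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(file_path, target)
            copied.append(f"{folder.name}/{file_path.name}")

    return copied


def run_demo():
    """Build a throwaway folder tree, run the copy, and report the result."""
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "source"
        sink = Path(tmp) / "sink"
        # Hypothetical sample layout; "other" should be filtered out.
        for rel in ("adls_folder1/a.csv", "adls_folder2/b.csv", "other/c.csv"):
            sample = source / rel
            sample.parent.mkdir(parents=True, exist_ok=True)
            sample.write_text("id,value\n1,x\n")
        sink.mkdir()

        copied = copy_matching_folders(source, sink, "adls")
        sink_files = sorted(
            p.relative_to(sink).as_posix() for p in sink.rglob("*") if p.is_file()
        )
        return copied, sink_files


demo_copied, demo_sink_files = run_demo()
print(demo_copied)
print(demo_sink_files)
```

In the real pipeline the two listing steps are Get Metadata activities and the folder name is passed to the child pipeline as a parameter; the nested loops here only mirror that structure.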

Hope this helps. Please let us know if you have any further queries.
