Hi,

I am trying to access a blob in my storage account from Databricks using a SAS token.

In my Spark code I use:

```python
spark.conf.set("fs.azure.account.auth.type.<storage-account>.dfs.core.windows.net", "SAS")
spark.conf.set("fs.azure.sas.token.provider.type.<storage-account>.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider")
spark.conf.set("fs.azure.sas.fixed.token.<storage-account>.dfs.core.windows.net", "<token>")
```

and then read the file with:

```python
df = spark.read.csv("abfs://<container-name>@<storage-account>.dfs.core.windows.net/<file-path>")
```
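Because the same three settings have to be repeated for every storage account, they can be generated from the account name so the keys cannot drift apart. This is a minimal sketch, assuming a Databricks notebook where `spark` already exists. `abfs_sas_conf` and `abfs_uri` are hypothetical helper names. Stripping a leading `?` from the token is a defensive assumption, since the portal may display the token with one. The URI helper defaults to `abfss://` (ABFS over TLS, the form used in the Databricks examples), but the scheme is a parameter so the `abfs://` form above can still be used.

```python
def abfs_sas_conf(account: str, sas_token: str) -> dict:
    """Build the three Spark settings for fixed-SAS access to one ADLS Gen2 account."""
    host = f"{account}.dfs.core.windows.net"
    # Assumption: a token copied with a leading "?" should be reduced to the bare query string
    token = sas_token.lstrip("?")
    return {
        f"fs.azure.account.auth.type.{host}": "SAS",
        f"fs.azure.sas.token.provider.type.{host}": "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider",
        f"fs.azure.sas.fixed.token.{host}": token,
    }


def abfs_uri(container: str, account: str, path: str, scheme: str = "abfss") -> str:
    """Build an ABFS URI; the account host must match the one used in the config keys."""
    return f"{scheme}://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# In a Databricks notebook, where `spark` already exists:
# for key, value in abfs_sas_conf("<storage-account>", "<token>").items():
#     spark.conf.set(key, value)
# df = spark.read.csv(abfs_uri("<container-name>", "<storage-account>", "<file-path>"))
```

Keeping the account name in one place helps rule out a mismatch between the host in the config keys and the host in the read path.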

When I try to read the CSV file, I get the following error:

```
Operation failed: "Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.", 403, HEAD, https://safobapreprddwh.dfs.core.windows.net/configurations/?upn=false&action=getAccessControl&timeout=90&sv=2021-06-08&ss=b&srt=s&sp=rwdlaciytfx&se=2022-11-17T17:52:13Z&st=2022-11-17T09:52:13Z&spr=https&sig=XXXX
```
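To see exactly which SAS the driver sent, the query parameters can be pulled out of the URL in the error message. This is a minimal sketch using only Python's standard library. The function name `describe_sas` and the letter-code tables are my own illustration, based on the account SAS field codes (`ss` for signed services, `srt` for signed resource types). They are not part of any Azure SDK.

```python
from urllib.parse import urlparse, parse_qs

# URL copied from the 403 error above (the signature is already redacted as XXXX)
failed_url = (
    "https://safobapreprddwh.dfs.core.windows.net/configurations/"
    "?upn=false&action=getAccessControl&timeout=90&sv=2021-06-08&ss=b&srt=s"
    "&sp=rwdlaciytfx&se=2022-11-17T17:52:13Z&st=2022-11-17T09:52:13Z"
    "&spr=https&sig=XXXX"
)

# Account SAS letter codes for signed services and signed resource types
SERVICES = {"b": "blob", "f": "file", "q": "queue", "t": "table"}
RESOURCE_TYPES = {"s": "service", "c": "container", "o": "object"}


def describe_sas(url: str) -> dict:
    """Return the account SAS fields embedded in a request URL."""
    query = parse_qs(urlparse(url).query)
    # parse_qs returns a list per key; each SAS field appears once, so take the first value
    first = {key: values[0] for key, values in query.items()}
    return {
        "version": first.get("sv"),
        "services": [SERVICES.get(c, c) for c in first.get("ss", "")],
        "resource_types": [RESOURCE_TYPES.get(c, c) for c in first.get("srt", "")],
        "permissions": first.get("sp"),
        "start": first.get("st"),
        "expiry": first.get("se"),
        "protocol": first.get("spr"),
    }


print(describe_sas(failed_url))
```

With this URL it reports `services: ['blob']` and `resource_types: ['service']`. That does not match the `ss=bfqt` prefix of the token used in the second configuration attempt, so it may be worth checking which token the session actually picked up.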

I have seen that many people have faced a similar problem, and I have tried their solutions without success.

This is what I have done so far:

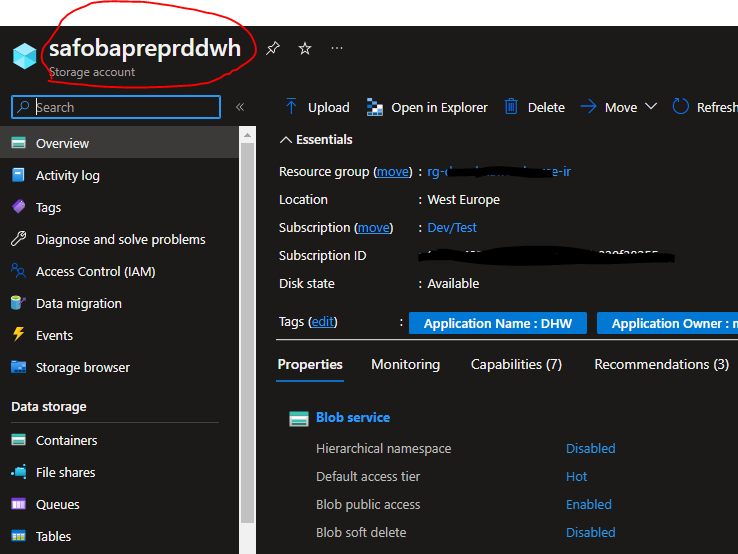

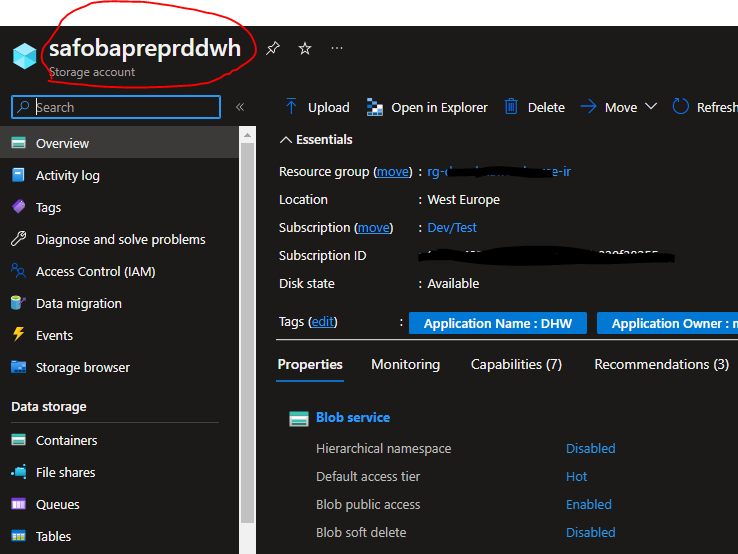

The storage account name is taken from here:

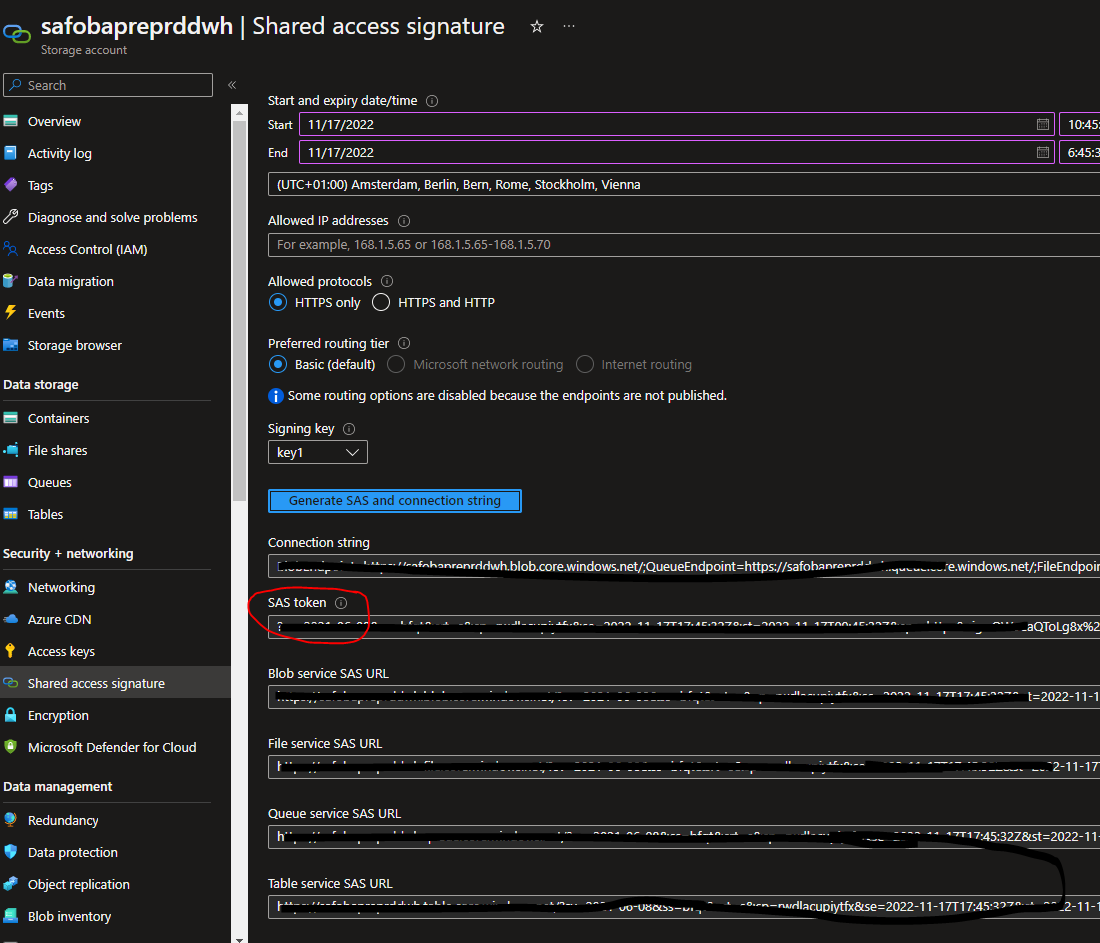

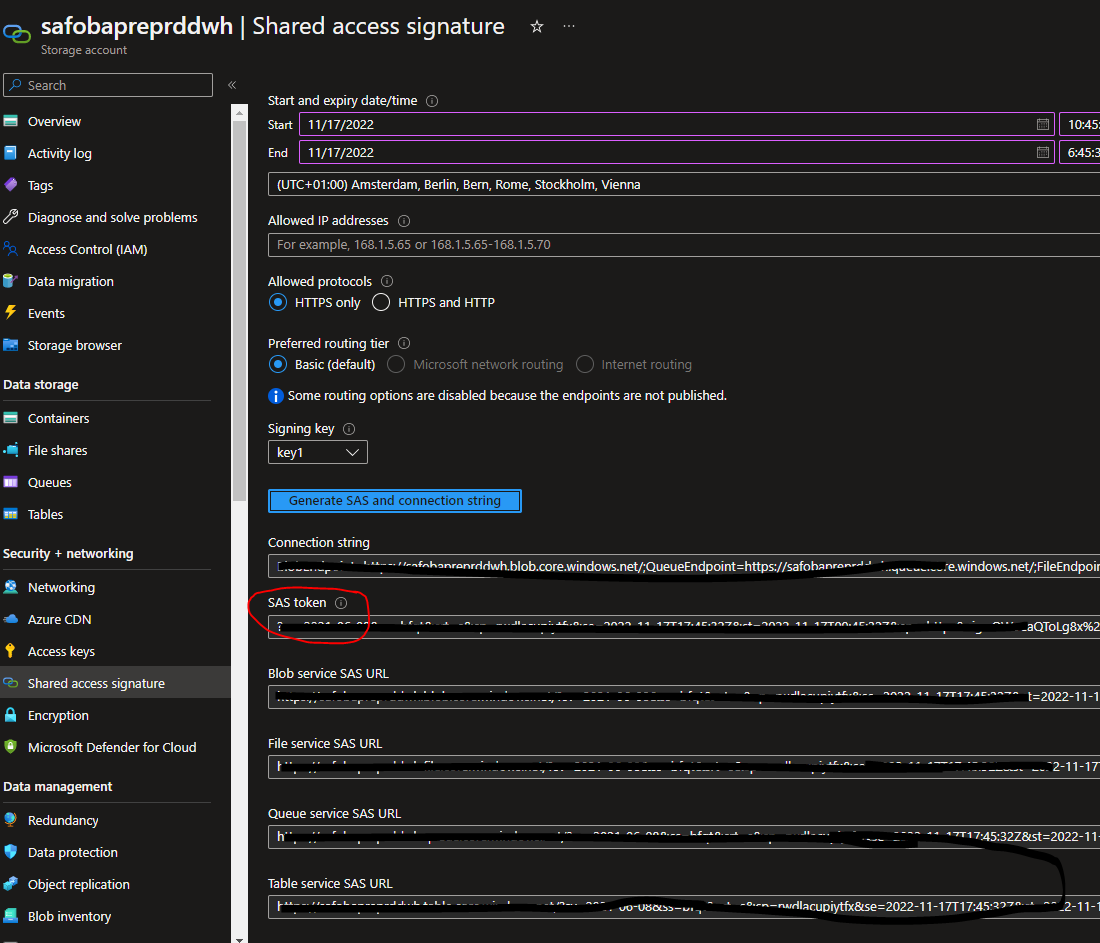

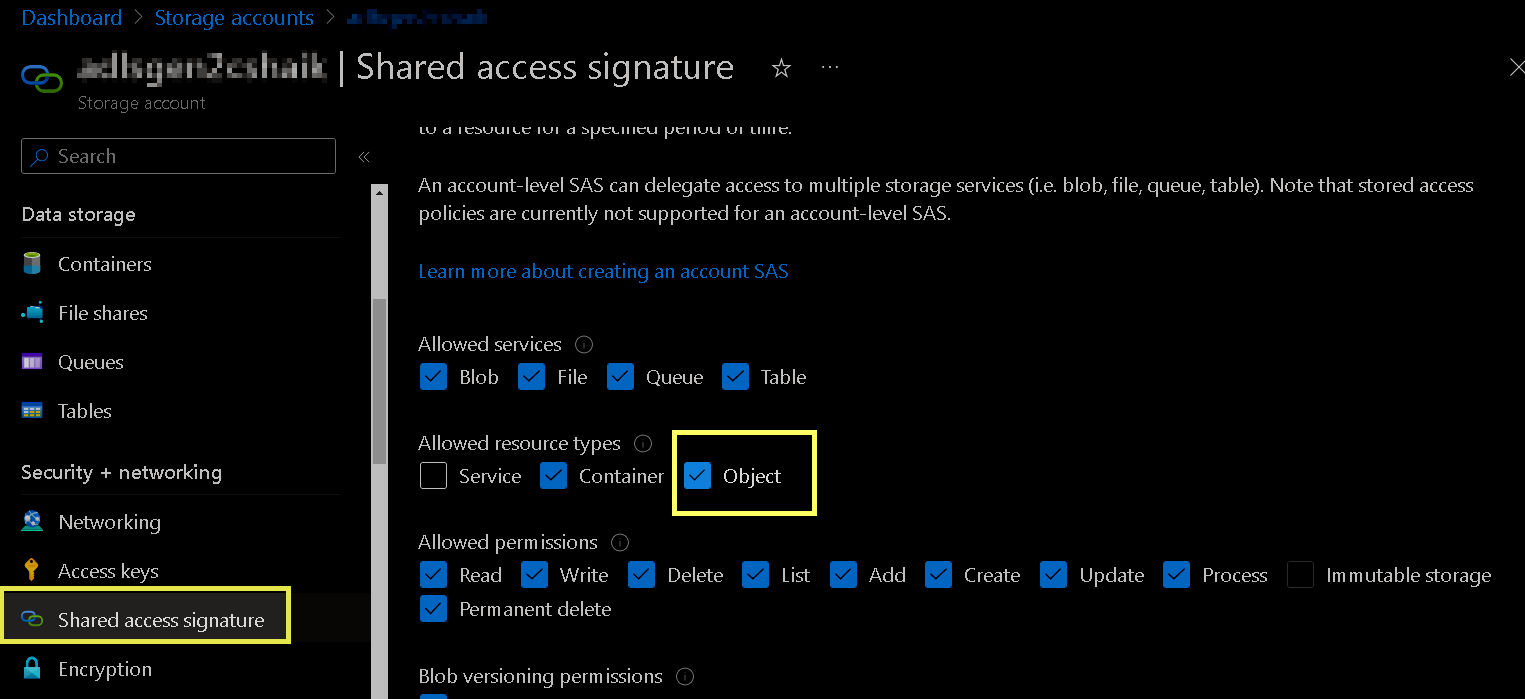

In the storage account, I go to the Security + networking section and generate a SAS token:

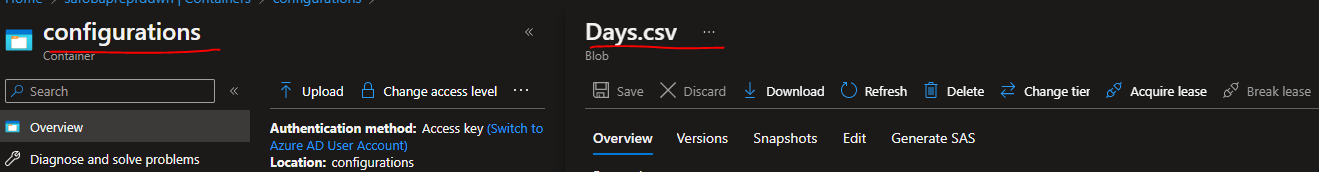

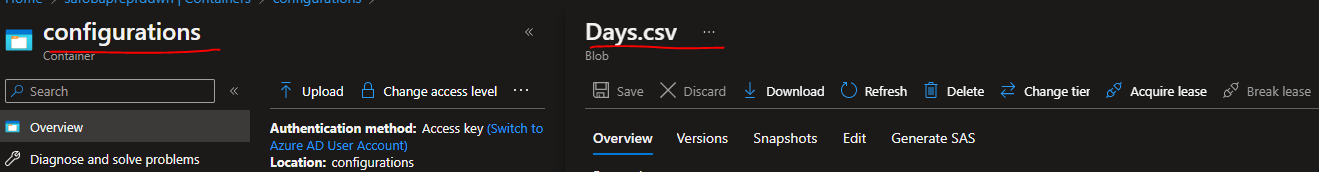

My container name and blob are:

With those values in my Spark config, I try to read my CSV:

```python
spark.conf.set("fs.azure.account.auth.type.safobapreprddwh.dfs.core.windows.net", "SAS")
spark.conf.set("fs.azure.sas.token.provider.type.safobapreprddwh.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider")
spark.conf.set("fs.azure.sas.fixed.token.safobapreprddwh.dfs.core.windows.net", "sv=2021-06-08&ss=bfqt&sr<My_SAS_Token>")

df = spark.read.csv("abfs://******@safobapreprddwh.dfs.core.windows.net/Days.csv")
```

Is there something else I need to configure?
