Hello @Amar Agnihotri ,

Thanks for the question and for using the MS Q&A platform.

Thank you for the detailed example. That really helped a lot!

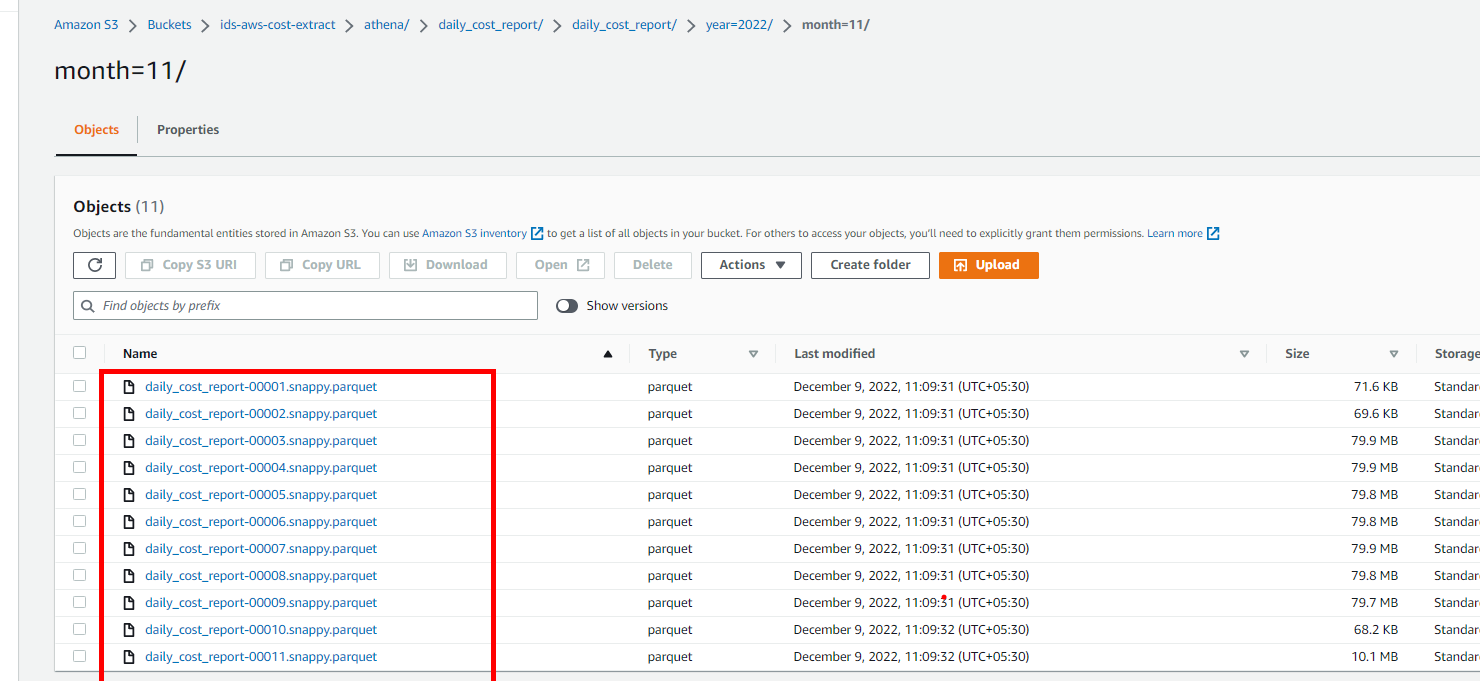

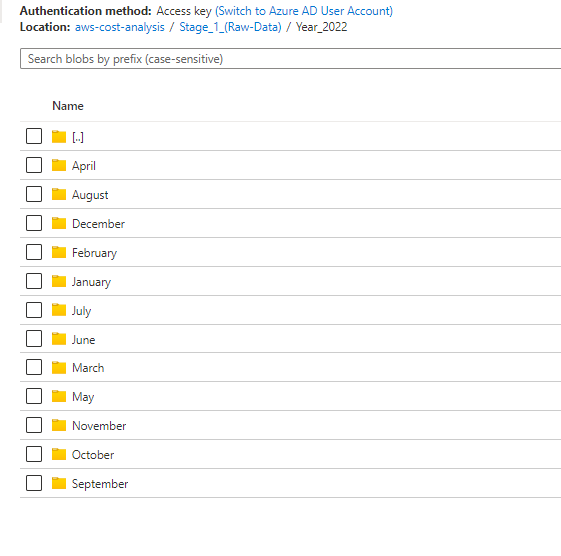

I think I get it. If current day of the month is > 8, then only pull current month's folder. Otherwise, pull past month's folder AND current month's folder.

Since the source folders all differ in only the month number, this can be done in a single parameterized source dataset. It needs 2 copy activities though.

Changing the folder names from month numbers to month words is a little trickier, but having the 2 separate copy activities makes this MUCH easier.

Actually, I had another thought: we can use only 1 copy activity if we put it in a loop. Anyway, here is the logical bit to determine the month number(s).

To determine how many months to pull

@if( less( 8 , dayOfMonth(utcnow())), 'onlyThisMonth' , 'thisAndPastMonth')
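If you want to sanity-check the cutoff outside of Data Factory, here is a minimal Python sketch of the same branch. It is only a stand-in for the expression above, not something that runs in a pipeline; the names `CUTOFF_DAY` and `months_mode` are made up for illustration.

```python
from datetime import datetime, timezone

# Cutoff taken from the rule above: after the 8th, only the current month is pulled.
CUTOFF_DAY = 8


def months_mode(now: datetime) -> str:
    """Mirror of @if(less(8, dayOfMonth(utcnow())), 'onlyThisMonth', 'thisAndPastMonth')."""
    return "onlyThisMonth" if CUTOFF_DAY < now.day else "thisAndPastMonth"


if __name__ == "__main__":
    # The 8th itself still pulls both months; the 9th is the first "current only" day.
    print(months_mode(datetime(2023, 11, 8, tzinfo=timezone.utc)))
    print(months_mode(datetime(2023, 11, 9, tzinfo=timezone.utc)))
```

Note that the boundary day (the 8th) falls on the "both months" side, because the test is strictly greater than 8.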

To get current month number

formatDateTime(utcnow(),'%M')

To get past month number

formatDateTime(GetPastTime(1,'month'),'%M')

To get current month name

formatDateTime(utcnow(),'MMMM')

To get the past month name

formatDateTime(GetPastTime(1,'month'),'MMMM')
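To see what values these four expressions should give, including the January case where the past month wraps around to December, here is a rough Python equivalent. It is a sketch for checking expectations only; the function names are hypothetical, and it assumes English month names as the 'MMMM' format would give under an English culture.

```python
import calendar
from datetime import datetime, timezone


def current_month_number(now: datetime) -> str:
    # '%M' gives the month without a leading zero, e.g. '1' for January.
    return str(now.month)


def past_month_number(now: datetime) -> str:
    # One month back, wrapping January (1) around to December (12).
    return str((now.month - 2) % 12 + 1)


def month_name(number: str) -> str:
    # 'MMMM' gives the full month name; calendar.month_name is English by default.
    return calendar.month_name[int(number)]


if __name__ == "__main__":
    now = datetime(2024, 1, 5, tzinfo=timezone.utc)
    current, past = current_month_number(now), past_month_number(now)
    print(current, month_name(current))
    print(past, month_name(past))
```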

Now with those alone, you could set up an if conditional activity, and separate copy activities as appropriate.

I mentioned another way, getting fancy. This would involve creating an array variable, and filling it with objects packaging the month number and month name together in a json object. Then in a forEach loop you could just do @item().number and @item().name and fill in the folder names in the copy activity, concatenating in the dataset.

So for this, let's try something like:

{"number":"1", "name":"January"}

{"number":"12", "name":"December"}

The expression to make this, coupled with the logic for deciding how many months, will get big and messy. The record maker looks like this:

@array(json(concat('{"number":"','11','","name":"','November','"}')))

The code:

@if( less( 8 , dayOfMonth(utcnow())),

array(json(concat('{"number":"',formatDateTime(utcnow(),'%M'),'","name":"',formatDateTime(utcnow(),'MMMM'),'"}'))),

union(

array(json(concat('{"number":"',formatDateTime(utcnow(),'%M'),'","name":"',formatDateTime(utcnow(),'MMMM'),'"}'))),

array(json(concat('{"number":"',formatDateTime(GetPastTime(1,'month'),'%M'),'","name":"',formatDateTime(GetPastTime(1,'month'),'MMMM'),'"}')))

)

)
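For reference, this is roughly the array that expression should hand to the forEach, written as a small Python sketch so the shape is easy to check. It is an illustration under my own naming (`month_record` and `months_to_copy` are hypothetical), not pipeline code.

```python
import calendar
from datetime import datetime, timezone


def month_record(month: int) -> dict:
    # Same shape as the objects above: {"number": "1", "name": "January"}.
    return {"number": str(month), "name": calendar.month_name[month]}


def months_to_copy(now: datetime) -> list:
    """Current month only after the 8th; otherwise current month plus past month."""
    current = month_record(now.month)
    if 8 < now.day:
        return [current]
    past = month_record((now.month - 2) % 12 + 1)  # January wraps to December
    return [current, past]


if __name__ == "__main__":
    # Stand-in for the forEach loop: item["number"] ~ @item().number, item["name"] ~ @item().name
    for item in months_to_copy(datetime(2024, 1, 5, tzinfo=timezone.utc)):
        print(item["number"], item["name"])
```

Inside the loop, each item carries both the source folder's month number and the month name for the renamed folder, so a single copy activity can serve both months.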

Please do let me know if you have any queries.

Thanks

Martin

- Please don't forget to click on the accept or upvote button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators