Hello @Alan Jesús Segundo Nava ,

Welcome to the MS Q&A platform.

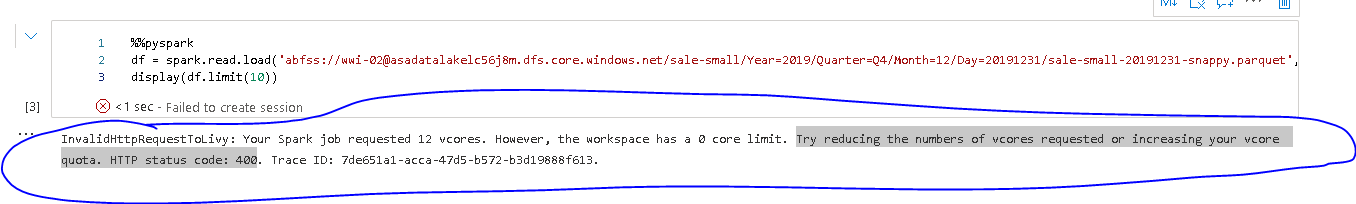

As per the error message, the vCores available to your Spark session have been exhausted. When you define a Spark pool, you are effectively defining a quota per user for that pool.

The vCore limit depends on the node size and the number of nodes. To solve this problem, reduce your usage of the pool's resources (for example, by stopping sessions you no longer need) before submitting a new resource request, such as running a notebook or a job.

Alternatively, scale up the node size and/or the number of nodes in the pool.
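To make the arithmetic concrete, here is a minimal Python sketch. It assumes the vCores per node listed in `NODE_VCORES` (check the node size configured on your own pool) and assumes a session requests its driver cores plus all of its executor cores. The function names are hypothetical helpers, not part of any Azure SDK, and pool constraints such as a minimum node count or autoscale are not modeled.

```python
import math

# vCores per node for each node size (assumed values; confirm the node size
# configured on your pool in the Azure portal).
NODE_VCORES = {"Small": 4, "Medium": 8, "Large": 16, "XLarge": 32, "XXLarge": 64}


def pool_vcore_limit(node_size: str, max_nodes: int) -> int:
    """vCore limit of the pool: vCores per node times the maximum node count."""
    return NODE_VCORES[node_size] * max_nodes


def session_vcores(driver_cores: int, executor_cores: int, num_executors: int) -> int:
    """vCores one session requests (assumed model): driver plus all executors."""
    return driver_cores + executor_cores * num_executors


def fits(node_size: str, max_nodes: int, vcores_in_use: int, requested: int) -> bool:
    """True if a new request fits into the vCores not already in use."""
    free = pool_vcore_limit(node_size, max_nodes) - vcores_in_use
    return requested <= free


def min_nodes(node_size: str, required_vcores: int) -> int:
    """Smallest maximum node count whose vCore limit covers required_vcores."""
    return math.ceil(required_vcores / NODE_VCORES[node_size])


# Hypothetical pool: at most 3 Medium nodes, another session already holds 16 vCores.
request = session_vcores(driver_cores=4, executor_cores=4, num_executors=2)
print(pool_vcore_limit("Medium", 3))       # 24
print(request)                             # 12
print(fits("Medium", 3, 16, request))      # False: only 8 vCores are free
print(fits("Medium", 3, 0, request))       # True: after the other session stops
print(min_nodes("Medium", 16 + request))   # 4
print(min_nodes("Large", 16 + request))    # 2
```

Both fixes from the answer show up in this example: freeing the 16 vCores held by the other session lets the 12-vCore request fit, and raising the pool's maximum to 4 Medium nodes (or 2 Large nodes) does the same without stopping anything.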

This error message has been discussed on this similar thread.

I hope this helps. Please let me know if you have any further questions.

- Please don't forget to click on "Accept Answer" and upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators