Hi,

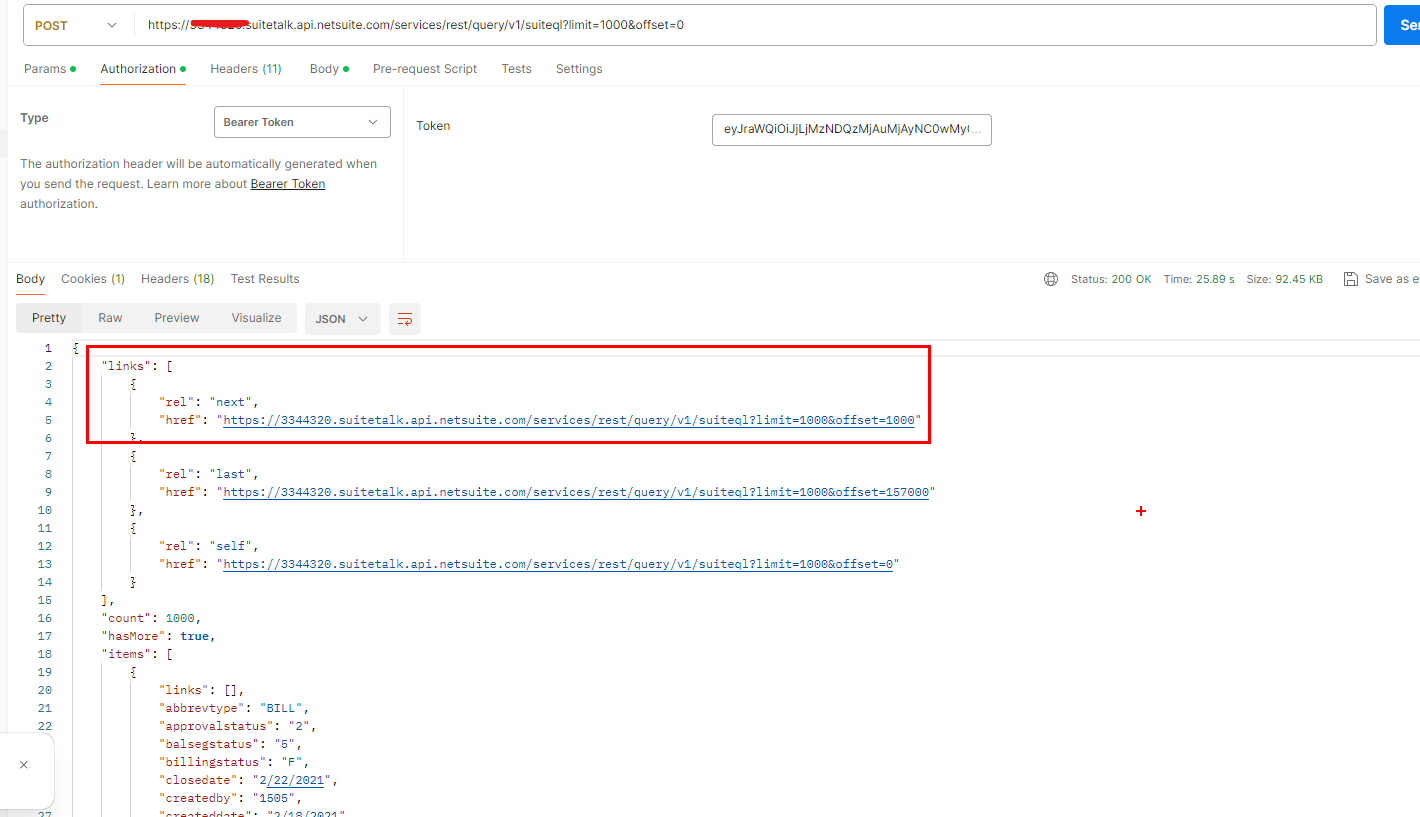

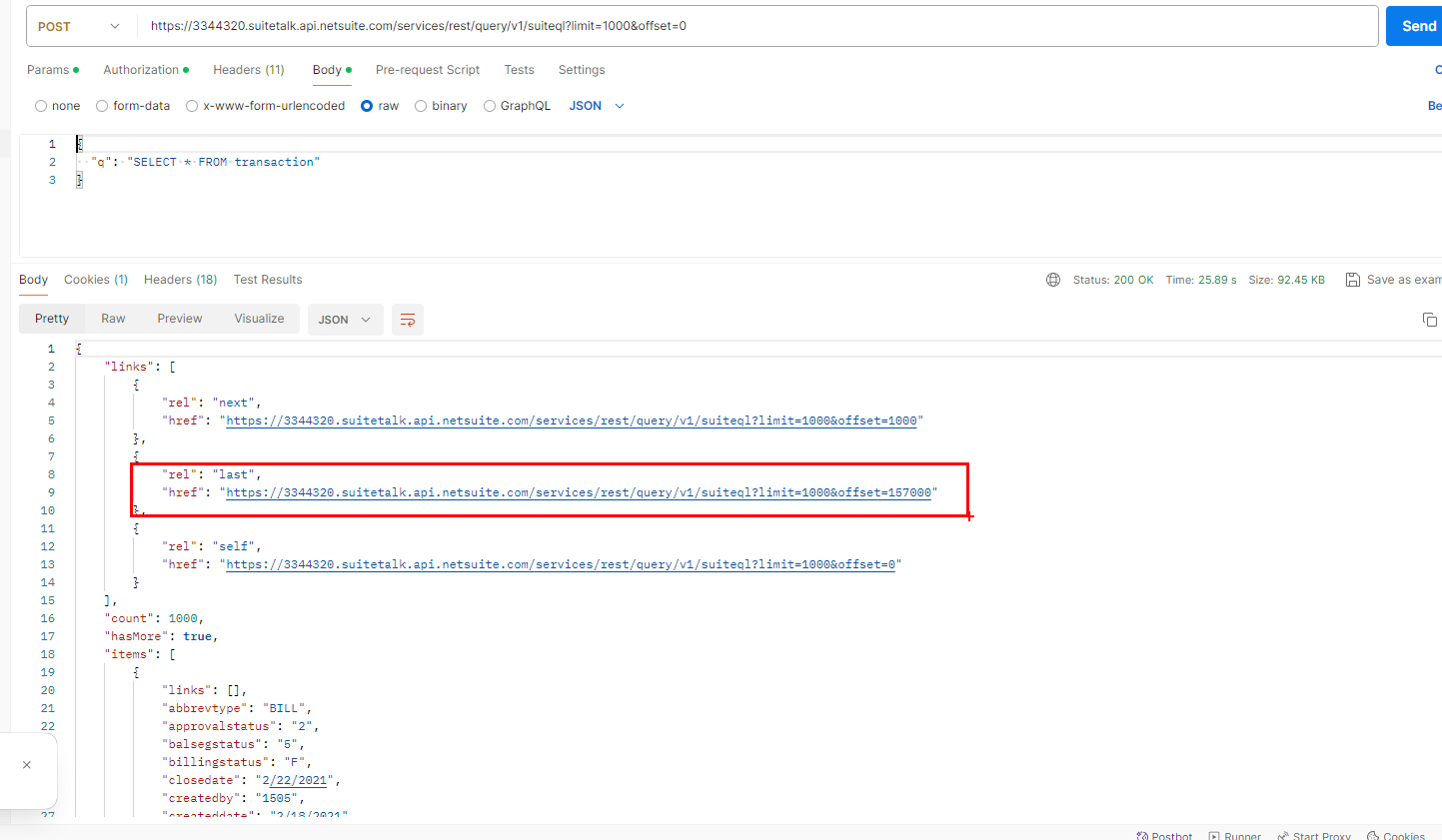

I am trying to build an ADF pipeline to pull data from NetSuite. In Postman, the result of the NetSuite API call looks like this:

As we can see, the response returns a next link along with the data, and we have to use this next link for pagination in ADF.

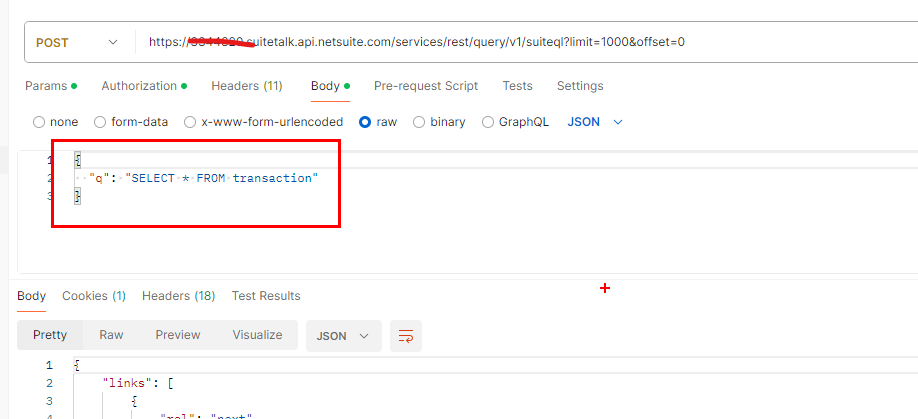
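The screenshots are not reproduced here, so the sketch below assumes the response follows NetSuite's SuiteQL collection format: a `links` array of `{"rel", "href"}` objects alongside `items` and `hasMore`. Adjust the key names if your payload differs. It shows how the next link could be picked out of a page, which is the value the pipeline has to carry from one call to the next. The URLs are placeholders.

```python
from typing import Any, Optional


def get_next_link(page: dict[str, Any]) -> Optional[str]:
    """Return the href of the link whose rel is "next", or None if there is none.

    Assumes a SuiteQL-style page: {"links": [{"rel": ..., "href": ...}], "items": [...], ...}.
    """
    for link in page.get("links", []):
        if link.get("rel") == "next":
            return link.get("href")
    return None  # no "next" entry: this was the last page


# Hypothetical pages; the URLs are placeholders, not real NetSuite endpoints.
first_page = {
    "links": [
        {"rel": "next", "href": "https://example.invalid/suiteql?limit=1000&offset=1000"},
        {"rel": "self", "href": "https://example.invalid/suiteql?limit=1000&offset=0"},
    ],
    "hasMore": True,
    "items": [],
}
last_page = {
    "links": [{"rel": "self", "href": "https://example.invalid/suiteql?limit=1000&offset=9000"}],
    "hasMore": False,
    "items": [],
}

print(get_next_link(first_page))  # https://example.invalid/suiteql?limit=1000&offset=1000
print(get_next_link(last_page))   # None
```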

Now the issue is that in the NetSuite call we have to pass a query in the body section, like this:

So I can't use the Copy activity directly with the REST source dataset, as it doesn't give me the option to pass this query.

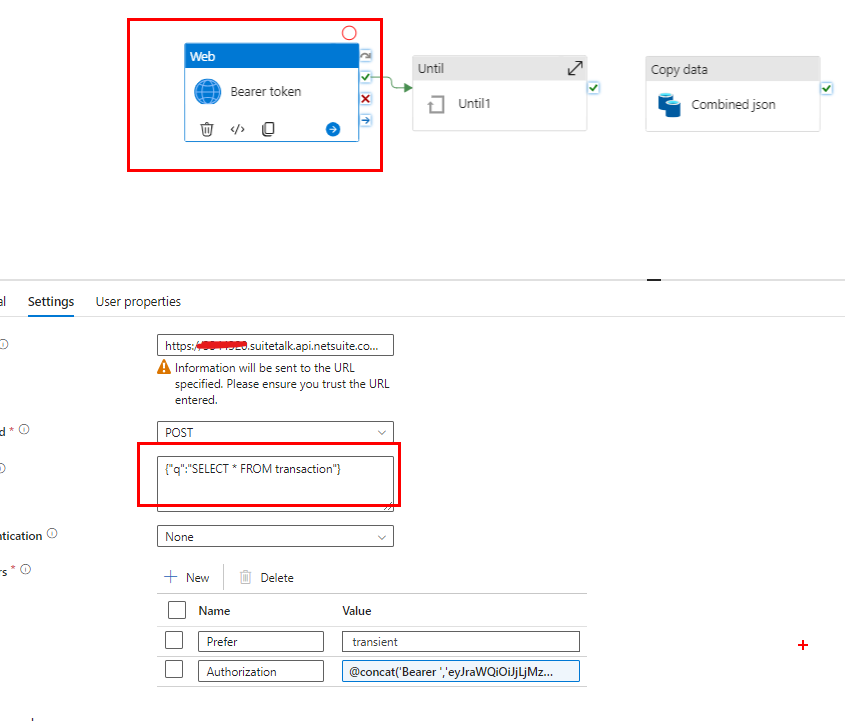

So I am using a Web activity in ADF and passing this query to get the data:

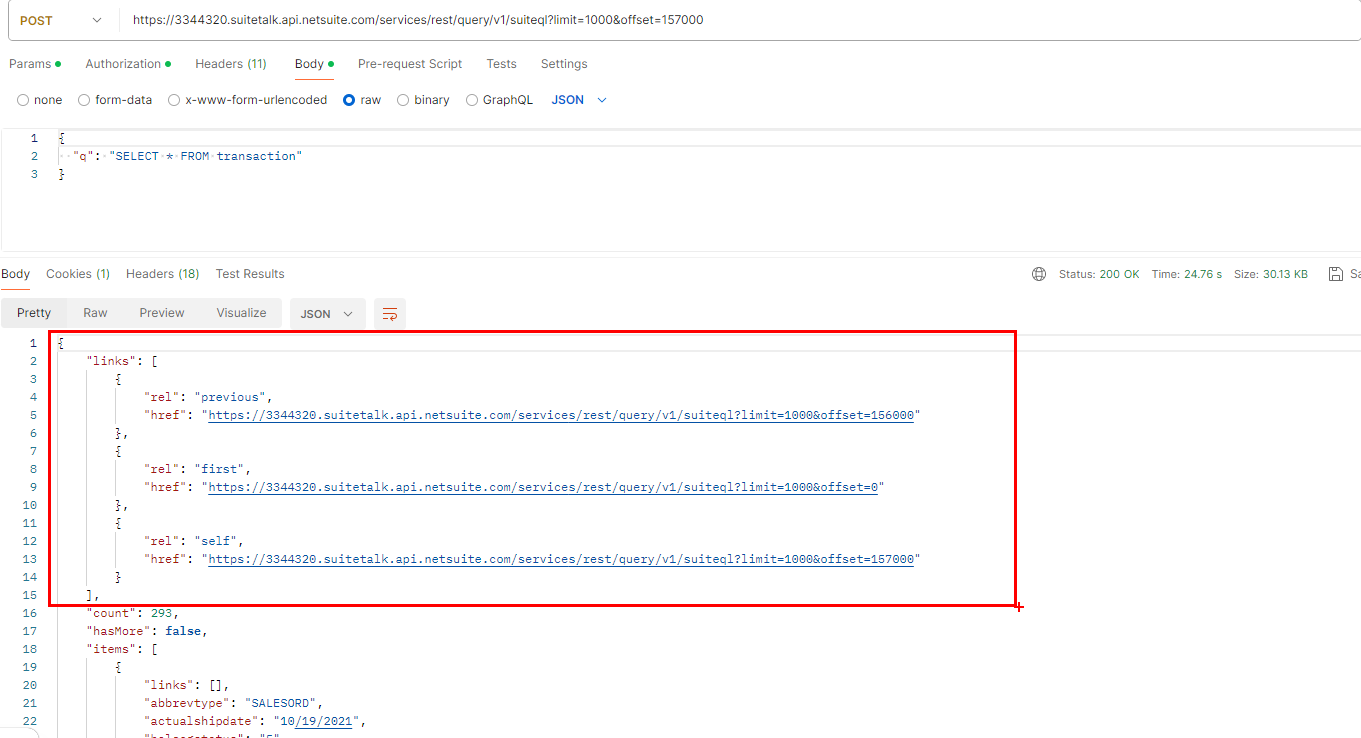
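A minimal sketch of the request the Web activity sends, written in Python only to make the pieces explicit. It assumes the SuiteQL endpoint, a `{"q": ...}` body, and a `Prefer: transient` header; the account ID, query, and authorization value are hypothetical placeholders, and NetSuite authentication itself is not shown. In ADF, the same method, headers, and body go into the Web activity settings, and the same body would presumably be re-sent with each next link.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your account ID, query, and auth header.
SUITEQL_URL = "https://ACCOUNT_ID.suitetalk.api.netsuite.com/services/rest/query/v1/suiteql"
QUERY = "SELECT id FROM customer"
AUTH_HEADER = "<authorization header value>"


def build_suiteql_request(url: str, query: str, auth_header: str) -> urllib.request.Request:
    """Build (but do not send) the POST request a Web activity would make."""
    body = json.dumps({"q": query}).encode("utf-8")  # the query travels in the body
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Prefer": "transient",  # assumed to be required by the SuiteQL endpoint
            "Authorization": auth_header,
        },
    )


first_call = build_suiteql_request(SUITEQL_URL, QUERY, AUTH_HEADER)
# Later calls would reuse the same body against each next link URL.
print(first_call.get_method(), first_call.full_url)
```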

Since the API returns only the first 1,000 records per call, I have to call the next link again and again until no next link is returned. For example, here is the call I made using the last link:

and this is the result:

There is no next link in this response.
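The loop described here maps naturally onto an Until activity: keep a next-link variable, call it, overwrite the variable with the next link from the response, and stop once no next link comes back. The Python sketch below models only that control flow, using a hypothetical `fetch` function and a fake in-memory API in place of the Web activity and NetSuite, so it runs offline.

```python
from typing import Any, Callable, Iterator, Optional

Page = dict[str, Any]


def iterate_pages(first_url: str, fetch: Callable[[str], Page]) -> Iterator[Page]:
    """Follow "next" links until a response no longer contains one.

    Mirrors an Until loop: next_link plays the pipeline variable, fetch plays
    the Web activity, and the reassignment plays the Set Variable activity.
    """
    next_link: Optional[str] = first_url
    while next_link is not None:  # Until condition: stop once the variable is empty
        page = fetch(next_link)
        yield page
        next_link = next(
            (link["href"] for link in page.get("links", []) if link.get("rel") == "next"),
            None,
        )


# Hypothetical stand-in for the API: three pages, the last without a "next" link.
fake_api: dict[str, Page] = {
    "page/0": {"items": [1, 2], "links": [{"rel": "next", "href": "page/1"}]},
    "page/1": {"items": [3, 4], "links": [{"rel": "next", "href": "page/2"}]},
    "page/2": {"items": [5], "links": [{"rel": "self", "href": "page/2"}]},
}

pages = list(iterate_pages("page/0", fake_api.__getitem__))
print(len(pages))  # 3
```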

What I want to do is iterate through each next link and save each output to a separate JSON file. For example, if there are 10,000 records in total, I will perform 10,000 / 1,000 = 10 iterations (since each call returns 1,000 records). The output of each iteration will be copied to a JSON file in the data lake as File1.json, File2.json, and so on.
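The iteration count is the total divided by the page size, rounded up, so a total that is not a multiple of 1,000 still gets a final partial page. The sketch below computes that count with integer ceiling division and writes each page to `File1.json`, `File2.json`, and so on. A local temporary directory stands in for the data lake folder, and `expected_iterations` and `save_pages` are hypothetical helpers.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Iterable


def expected_iterations(total_records: int, page_size: int = 1000) -> int:
    """Number of calls needed; rounds up so a final partial page is counted."""
    return (total_records + page_size - 1) // page_size  # ceiling division without floats


def save_pages(pages: Iterable[dict[str, Any]], out_dir: Path) -> list[Path]:
    """Write each page to File1.json, File2.json, ... in out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []
    for n, page in enumerate(pages, start=1):
        path = out_dir / f"File{n}.json"
        path.write_text(json.dumps(page), encoding="utf-8")
        written.append(path)
    return written


print(expected_iterations(10_000))  # 10
print(expected_iterations(10_500))  # 11

with tempfile.TemporaryDirectory() as tmp:
    names = [p.name for p in save_pages([{"items": [1]}, {"items": [2]}], Path(tmp))]
    print(names)  # ['File1.json', 'File2.json']
```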

Once the output of every iteration is saved, I will use a Copy activity to combine all the outputs into a single JSON file.
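In the pipeline this step would be done by the Copy activity; the sketch below only illustrates the intended combined result and one ordering pitfall: a plain string sort puts `File10.json` before `File2.json`. It assumes each file holds one page with its records under `items`, sorts the files by their numeric suffix, and writes all records into one JSON array. `page_number` and `combine_pages` are hypothetical names.

```python
import json
import re
import tempfile
from pathlib import Path


def page_number(path: Path) -> int:
    # File10.json -> 10, so File10 sorts after File9 (a string sort would put it after File1)
    match = re.fullmatch(r"File(\d+)\.json", path.name)
    if match is None:
        raise ValueError(f"unexpected file name: {path.name}")
    return int(match.group(1))


def combine_pages(in_dir: Path, out_file: Path) -> int:
    """Concatenate the "items" of File1.json, File2.json, ... into one JSON array."""
    records = []
    for path in sorted(in_dir.glob("File*.json"), key=page_number):
        page = json.loads(path.read_text(encoding="utf-8"))
        records.extend(page.get("items", []))  # assumes records live under "items"
    out_file.write_text(json.dumps(records), encoding="utf-8")
    return len(records)


with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for n in (1, 2, 10):
        (folder / f"File{n}.json").write_text(json.dumps({"items": [n]}), encoding="utf-8")
    combined = folder / "combined.json"
    count = combine_pages(folder, combined)
    merged = json.loads(combined.read_text(encoding="utf-8"))
    print(count, merged)  # 3 [1, 2, 10]
```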

I know how to save the output of a Web activity to a JSON file in Blob Storage. However, I am not sure whether I should use an Until loop or a ForEach activity for the iterations. Also, how can I store the next link in a variable dynamically, so that each iteration passes it directly to the Web activity for the next call?
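On Until versus ForEach: a ForEach iterates over an array that has to be known when the loop starts, while an Until re-checks its condition after each pass, which fits following next links whose count is not known in advance. If the first response reports the total row count (assumed here to be a `totalResults` field) and the endpoint accepts `limit` and `offset` query parameters (also an assumption), the page URLs could instead be precomputed and handed to a ForEach, as in this sketch. `page_urls` is a hypothetical helper and the base URL is a placeholder.

```python
from urllib.parse import urlencode


def page_urls(base_url: str, total_results: int, page_size: int = 1000) -> list[str]:
    """Precompute one URL per page so a ForEach can iterate over a known array.

    Assumes the endpoint accepts limit/offset query parameters and that
    total_results was read from the first response.
    """
    return [
        f"{base_url}?{urlencode({'limit': page_size, 'offset': offset})}"
        for offset in range(0, total_results, page_size)
    ]


# Placeholder base URL; 2,500 rows at 1,000 per page gives three pages.
for url in page_urls("https://example.invalid/query/v1/suiteql", 2_500):
    print(url)
```

If you keep the Until loop instead, note that an ADF Set Variable activity cannot reference the variable it is setting, so incrementing a file counter usually takes a second, temporary variable: set the temporary variable to the counter plus one, then copy it back into the counter.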

I am looking for help here. Can anyone suggest the best way to achieve this, along with the workflow of activities to use?

Thanks