I am running a sweep job to perform hyperparameter tuning in Azure ML using the Python SDK v2. Even though the job runs successfully, it does not show me the best trial and instead shows this error:

> something went wrong when calculating the best trial for this sweep job. Please see the trials tab for accurate best trial reporting.

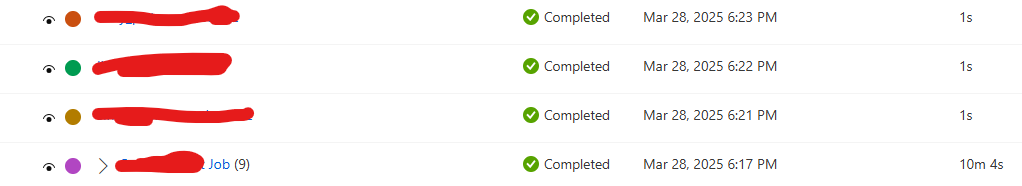

The search space does get explored, though: trials run for the different combinations of parameter values, and the completed job looks like this:

The individual trials should be listed under the ... job in the fourth line, and the sweep job should then tell me which trial was best.

I used the same code (shown below) for another run, and that job worked fine:

Can someone please tell me where I am going wrong?

The code is as below:

Command:

```python
from azure.ai.ml import command, Input

# env and training_folder are defined elsewhere (not shown in the question)
training_job = command(
    name='credit_default_train8',
    display_name='Credit Default Job',
    description='Credit default training job',
    environment=env,
    code=training_folder,
    inputs={
        'train_data': Input(type='uri_folder', path='azureml://datastores/workspaceblobstore/paths/train_data'),
        'test_data': Input(type='uri_folder', path='azureml://datastores/workspaceblobstore/paths/test_data'),
        'n_estimators': 100,
        'learning_rate': 0.001,
    },
    # outputs={
    #     'model': Output(type='uri_folder', mode='rw_mount')
    # },
    command='''python train.py \
        --train_data ${{inputs.train_data}} --test_data ${{inputs.test_data}} \
        --n_estimators ${{inputs.n_estimators}} --learning_rate ${{inputs.learning_rate}}''',
)  # --model ${{outputs.model}}
```
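The sweep can only rank trials if train.py accepts these flags and logs a metric whose name matches `primary_metric` exactly (with MLflow, for example `mlflow.log_metric('f1_score', f1)`). The contents of train.py are not included in the question, so the block below is only a hypothetical sketch of its argument parsing with `argparse`; the function name `parse_args` and the defaults (copied from the job's `inputs`) are assumptions.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Hypothetical train.py argument parser matching the job's command string."""
    parser = argparse.ArgumentParser(description='Credit default training (sketch)')
    # Paths to the mounted uri_folder inputs
    parser.add_argument('--train_data', type=str, required=True)
    parser.add_argument('--test_data', type=str, required=True)
    # Hyperparameters that the sweep overrides for each trial
    parser.add_argument('--n_estimators', type=int, default=100)
    parser.add_argument('--learning_rate', type=float, default=0.001)
    return parser.parse_args(argv)

# After training and scoring on the test set, train.py would log, for example:
#   mlflow.log_metric('f1_score', f1)   # name must equal primary_metric
```

For example, `parse_args(['--train_data', 'tr', '--test_data', 'te', '--n_estimators', '150', '--learning_rate', '0.1'])` yields `n_estimators=150` and `learning_rate=0.1`, which is how each trial's `Choice` values reach the script.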

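```python
from azure.ai.ml.entities import AmlCompute
from azure.core.exceptions import ResourceNotFoundError

cluster_name = 'cpu-cluster'

try:
    # Reuse the cluster if it already exists in the workspace
    ml_client.compute.get(name=cluster_name)
    print(f'You already have a cluster with name {cluster_name}')
except ResourceNotFoundError:
    compute_cluster = AmlCompute(
        name=cluster_name,
        description='Compute Cluster to run sweep job',
        min_instances=0,
        max_instances=4,
        idle_time_before_scale_down=60,
        size='Standard_E16s_v3',
    )
    print(f'Creating a new cluster with name {cluster_name}')
    # begin_create_or_update returns a poller; .result() waits until provisioning finishes
    ml_client.compute.begin_create_or_update(compute_cluster).result()
```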

```python
from azure.ai.ml.sweep import MedianStoppingPolicy

# Delay the first evaluation by 5 intervals, then evaluate at every interval
stop_policy = MedianStoppingPolicy(delay_evaluation=5, evaluation_interval=1)
```
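`MedianStoppingPolicy` is based on the median stopping rule: a trial is cancelled when its best primary-metric value so far is worse than the median of the running averages across trials. The function below is a simplified, hypothetical re-implementation of that rule for a 'Maximize' goal, not Azure ML's code; `median_stop` and its arguments are made up for illustration, and counting `delay_evaluation` in reported metric values is an assumption. Under these assumptions, a trial that logs `f1_score` only once is never evaluated with `delay_evaluation=5`.

```python
from statistics import median
from typing import List


def median_stop(current: List[float], histories: List[List[float]],
                delay_evaluation: int = 5, evaluation_interval: int = 1) -> bool:
    """Simplified median stopping rule for goal='Maximize' (hypothetical sketch).

    current: values the trial has reported so far, one per interval.
    histories: reported values of all trials, including the current one.
    """
    n = len(current)
    # Assumption: no check until delay_evaluation values have been reported,
    # then a check every evaluation_interval reports.
    if n == 0 or n < delay_evaluation or (n - delay_evaluation) % evaluation_interval != 0:
        return False
    # Running average of each trial over (at most) its first n reports
    averages = [sum(h[:n]) / len(h[:n]) for h in histories if h]
    # Stop if the trial's best value so far is worse than the median of those averages
    return max(current) < median(averages)
```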

```python
from azure.ai.ml.sweep import Choice

# Replace the fixed inputs with discrete search spaces for the sweep
command_job_for_sweep = training_job(
    n_estimators=Choice([100, 150, 200]),
    learning_rate=Choice([0.1, 0.001, 1]),
)
```
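Because `sampling_algorithm='grid'` tries every combination of the `Choice` values, the number of trials is the product of the choice counts. A quick standard-library check, with the lists copied from the search space above:

```python
from itertools import product

n_estimators = [100, 150, 200]
learning_rate = [0.1, 0.001, 1]

# Grid sampling runs every combination of the discrete choices
grid = list(product(n_estimators, learning_rate))
print(len(grid))  # 9, which fits under max_total_trials=10
for n, lr in grid:
    print(f'n_estimators={n}, learning_rate={lr}')
```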

```python
sweep_job = command_job_for_sweep.sweep(
    primary_metric='f1_score',  # must match the metric name logged by train.py
    goal='Maximize',
    early_termination_policy=stop_policy,
    compute=cluster_name,
    sampling_algorithm='grid',
)

sweep_job.experiment_name = 'Sweep-job'
sweep_job.set_limits(max_concurrent_trials=4, timeout=1800, max_total_trials=10)

returned_sweep_job = ml_client.jobs.create_or_update(sweep_job)
```
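Conceptually, reporting the best trial means picking the trial with the highest `primary_metric` value for `goal='Maximize'` (lowest for 'Minimize') among the trials that actually reported it. This is a hypothetical sketch, not Azure ML's internal logic; `best_trial` and the trial names and scores are made up for illustration, and trials with a missing or NaN metric are simply skipped here.

```python
import math
from typing import Dict, Optional


def best_trial(metrics: Dict[str, Optional[float]], goal: str = 'Maximize') -> Optional[str]:
    """Return the name of the best trial, ignoring trials with no usable metric."""
    valid = {name: value for name, value in metrics.items()
             if value is not None and not math.isnan(value)}
    if not valid:
        return None  # nothing to rank: no trial logged the primary metric
    pick = max if goal.lower() == 'maximize' else min
    return pick(valid, key=valid.get)


# Made-up f1_score values per trial, for illustration only
example = {'trial_1': 0.71, 'trial_2': None, 'trial_3': 0.78, 'trial_4': float('nan')}
print(best_trial(example))  # trial_3
```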