Hi @Mahesh Madhusanka ,

Thank you for asking this question.

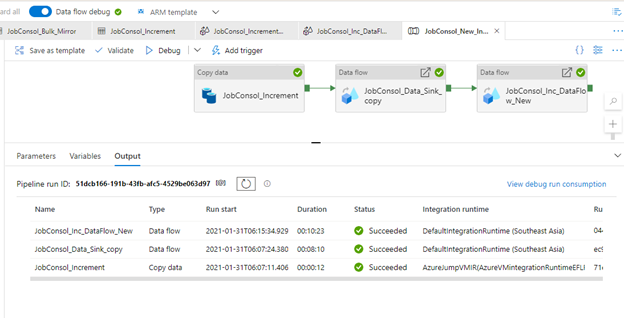

- According to the description, I see that you have 1 copy activity, and 2 DataFlows, all are executing in sequence. As the DataFlow activities you need to execute in sequence, ADF will spins up separate Spark clusters for each DataFlow . Each cluster will takes 3-5 minutes start-time. You can reduce the runtime by setting a TTL in the Azure IR configuration. ADF will reuse the compute resources resulting in a faster cluster start up time. Details would be found here, https://learn.microsoft.com/en-us/azure/data-factory/concepts-data-flow-performance

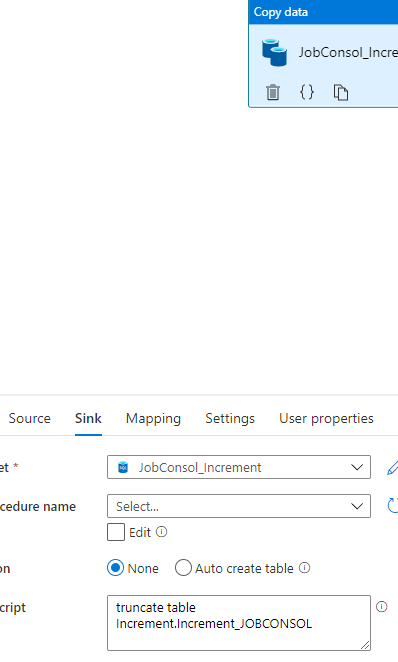

- I see you have a 1#-DataFlow without any transformation, just source and sink in it. You can reduce the cluster start-up time + execution time by using CopyActivity instead.

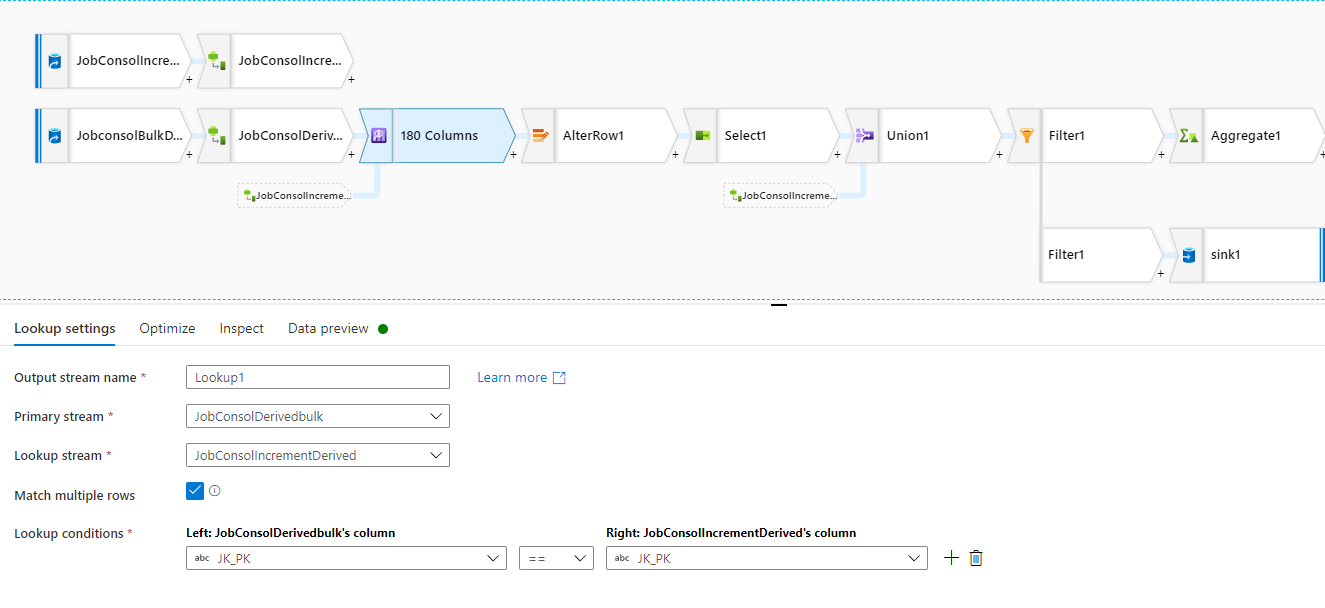

- 2#DataFlow does not make much sense to me (I could be wrong, as it's hard to tell what's the requirement was just by seeing the boxes), If the requirement was simply to UPSERT latest records from two tables i.e., incremental-DataTable and 2nd-StageStagingTable into the Bulk-DB, then I would do the followings: In the DataFlow, I would avoid the Lookup activity specially with "Match multiple rows" option, as it will create second set of same columns (i.e., 90+90 = 180) + multiple rows. What I would do something like: Source-incremental-DataTable --> DerivedColumns1

Source-2nd-StageStagingTable --> DerivedColumns2 --> Union (stream: DerivedColumns1) --> Filter1 (I see you have filter in your DF, you can have Filter1 here, or after Filter2)-->Window Activity (Over: PK, Sort: StatusDateTime/CreateDateTime DESC, WindowColumns: Rank = rowNumber() --> Filter2 (Filter on: Rank = 1) -->AlterRow --> Sink (Settings: upsert)

Hope this helps! Thanks! :)

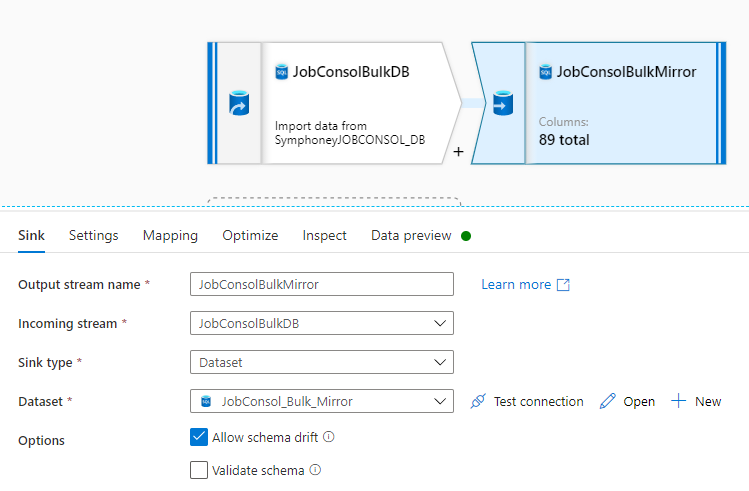

. Dataflow 1-

. Dataflow 1-