Hey,

Could you please share some sample data and an example of the output you expect? That would help us suggest a better approach.

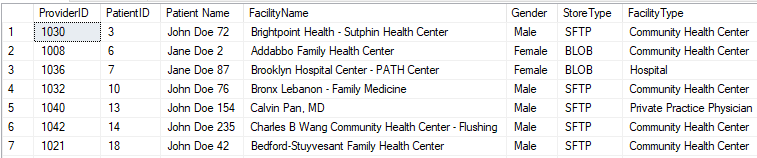

Based on my understanding, there is a config table that indicates which data needs to be copied to which destination:

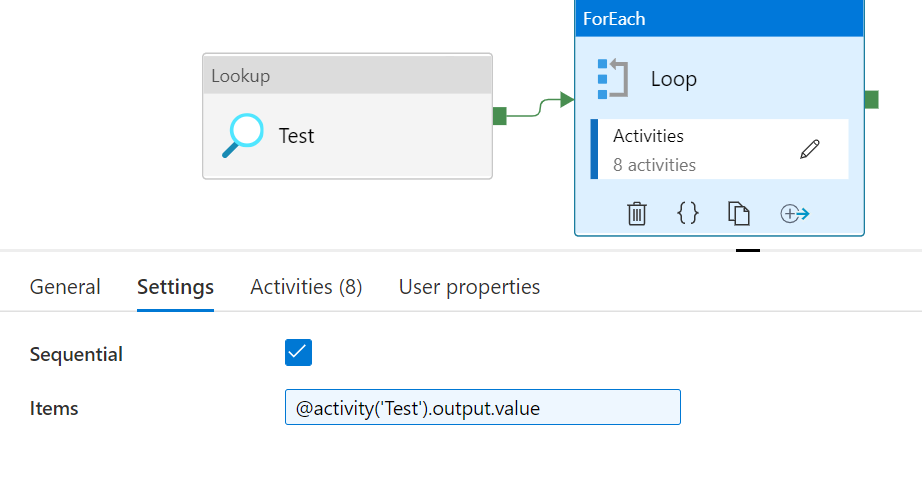

You can use a combination of the following activities in a single pipeline:

A Lookup activity to get the provider ID and destination from the config table.
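As a rough illustration, the Lookup step could look like the sketch below, which builds the activity's JSON definition in Python so it can be pasted into the pipeline JSON. The table `dbo.CopyConfig`, its `ProviderId`/`Destination` columns, the `ProviderId` pipeline parameter and the dataset name `ConfigTableDataset` are all hypothetical placeholders; replace them with your own objects.

```python
import json


def lookup_activity(name: str, config_dataset: str) -> dict:
    """Build a minimal ADF Lookup activity that reads one row from the config table.

    Hypothetical names: dbo.CopyConfig, its ProviderId/Destination columns,
    and the ProviderId pipeline parameter used in the query.
    """
    # ADF string interpolation (@{...}) injects the pipeline parameter at run time.
    query = (
        "SELECT ProviderId, Destination FROM dbo.CopyConfig "
        "WHERE ProviderId = '@{pipeline().parameters.ProviderId}'"
    )
    return {
        "name": name,
        "type": "Lookup",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                "sqlReaderQuery": {"value": query, "type": "Expression"},
            },
            "dataset": {"referenceName": config_dataset, "type": "DatasetReference"},
            # Only one config row per provider is expected, so read the first row.
            "firstRowOnly": True,
        },
    }


if __name__ == "__main__":
    print(json.dumps(lookup_activity("LookupConfig", "ConfigTableDataset"), indent=2))
```

With `firstRowOnly` set to true, later activities can read the row through `@activity('LookupConfig').output.firstRow`. If one run has to handle several providers, you would instead set it to false and loop over `output.value` with a ForEach activity.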

The output of the Lookup activity can then be passed to an If Condition activity or a Switch activity, with each case containing a Copy activity that uses the SQL database as the source and the corresponding destination as the sink.
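A minimal sketch of the Switch variant follows, again generated as pipeline JSON from Python. It assumes a Lookup activity named `LookupConfig` that returns a `Destination` column. The case values (`Blob`, `AzureSql`) and the dataset names are hypothetical. Sink settings are deliberately trimmed: file-based sinks such as `DelimitedTextSink` also need `storeSettings`/`formatSettings` before the pipeline will validate.

```python
import json

LOOKUP_NAME = "LookupConfig"  # hypothetical name of the Lookup activity


def copy_activity(name: str, source_dataset: str, sink_dataset: str, sink_type: str) -> dict:
    """Copy from the SQL source dataset to one destination dataset."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "AzureSqlSource"},
            # Trimmed sink: add storeSettings/formatSettings etc. as your sink requires.
            "sink": {"type": sink_type},
        },
    }


def switch_activity(destinations: dict) -> dict:
    """Build a Switch that routes on the Lookup's Destination value.

    destinations maps a case value to (sink dataset name, sink type).
    """
    cases = [
        {
            "value": case,
            "activities": [
                copy_activity(f"CopyTo{case}", "SqlSourceDataset", dataset, sink_type)
            ],
        }
        for case, (dataset, sink_type) in destinations.items()
    ]
    return {
        "name": "RouteByDestination",
        "type": "Switch",
        # Run only after the Lookup has succeeded.
        "dependsOn": [{"activity": LOOKUP_NAME, "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            "on": {
                "value": f"@activity('{LOOKUP_NAME}').output.firstRow.Destination",
                "type": "Expression",
            },
            "cases": cases,
            "defaultActivities": [],
        },
    }


if __name__ == "__main__":
    routes = {
        "Blob": ("BlobSinkDataset", "DelimitedTextSink"),
        "AzureSql": ("AzureSqlSinkDataset", "AzureSqlSink"),
    }
    print(json.dumps(switch_activity(routes), indent=2))
```

With exactly two destinations, an If Condition activity with an expression such as `@equals(activity('LookupConfig').output.firstRow.Destination, 'Blob')` and one Copy activity in each branch would do the same job. A Switch scales more cleanly if further destinations are added later.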