Hello everyone,

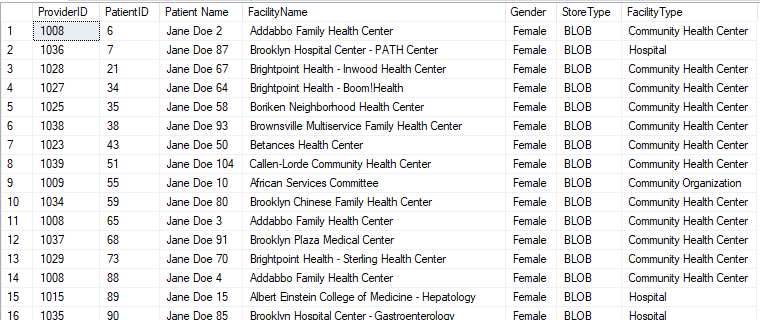

I'm in the process of planning a demo for one of our clients. They are a health care provider with many providers, but for the demo's sake this will be 3 - 4 records per store type. The store types are BLOB storage, Azure Data Lake and SFTP.

Based on the provider ID and store type in the source data, which resides in Azure SQL Database, the data will land in one of three destinations (ADL, BLOB Storage or SFTP Gateway).
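
To make the intended routing concrete, here is a rough sketch in plain Python (not ADF code). The column names `ProviderID` and `StoreType`, the sample rows, and the function name are assumptions for illustration only; the real table may look different.

```python
from collections import defaultdict

# Hypothetical sample rows standing in for the Azure SQL source table.
# The column names ProviderID and StoreType are assumptions.
rows = [
    {"ProviderID": 101, "StoreType": "BLOB"},
    {"ProviderID": 102, "StoreType": "BLOB"},
    {"ProviderID": 201, "StoreType": "ADL"},
    {"ProviderID": 301, "StoreType": "SFTP"},
]


def route_by_store_type(records):
    """Group records by the destination named in their StoreType value."""
    routed = defaultdict(list)
    for record in records:
        routed[record["StoreType"]].append(record)
    return dict(routed)


routed = route_by_store_type(rows)
for store_type, records in routed.items():
    # Each group would be handed to the matching destination (BLOB, ADL or SFTP).
    print(store_type, [r["ProviderID"] for r in records])
```

The goal is to get this same branching inside a single pipeline rather than one pipeline per destination.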

I'm not sure how I would go about developing this without separate pipelines.

Any information would be greatly appreciated.

Thank you

Hi,

This message comes up when I enter that string in the Items field of the ForEach activity:

"The output of activity 'Test' can't be referenced since it is either not an ancestor to the current activity or does not exist"

@Nandan Hegde That works, thank you very much! The only thing left is for the process to create a new container for each ProviderID listed in the table: one container per ProviderID in the BLOB destination, and the same for ADL and SFTP.

Currently it creates a container only for the first ProviderID and copies all the records whose store type is BLOB into it, instead of creating a new container for each ProviderID.

I'm sure an adjustment needs to be made on the dataset side.
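
As a rough illustration of the behavior I'm after (plain Python, not ADF code), each distinct combination of store type and ProviderID should get its own container. The column names, the sample rows, and the `provider-<id>` naming scheme are all hypothetical. In ADF I assume this means the container name on the dataset has to come from the current ProviderID rather than a fixed value.

```python
from collections import defaultdict

# Hypothetical sample rows; the column names are assumptions.
rows = [
    {"ProviderID": 101, "StoreType": "BLOB", "Record": "a"},
    {"ProviderID": 101, "StoreType": "BLOB", "Record": "b"},
    {"ProviderID": 102, "StoreType": "BLOB", "Record": "c"},
    {"ProviderID": 201, "StoreType": "ADL", "Record": "d"},
]


def plan_containers(records):
    """Map each (StoreType, container name) pair to the records it should receive."""
    plan = defaultdict(list)
    for record in records:
        # Hypothetical naming scheme: one container per ProviderID.
        container = f"provider-{record['ProviderID']}"
        plan[(record["StoreType"], container)].append(record)
    return dict(plan)


plan = plan_containers(rows)
for (store_type, container), records in plan.items():
    print(store_type, container, len(records))
```

With the sample rows above, BLOB ends up with two containers (one for each ProviderID) instead of a single container holding every BLOB record.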

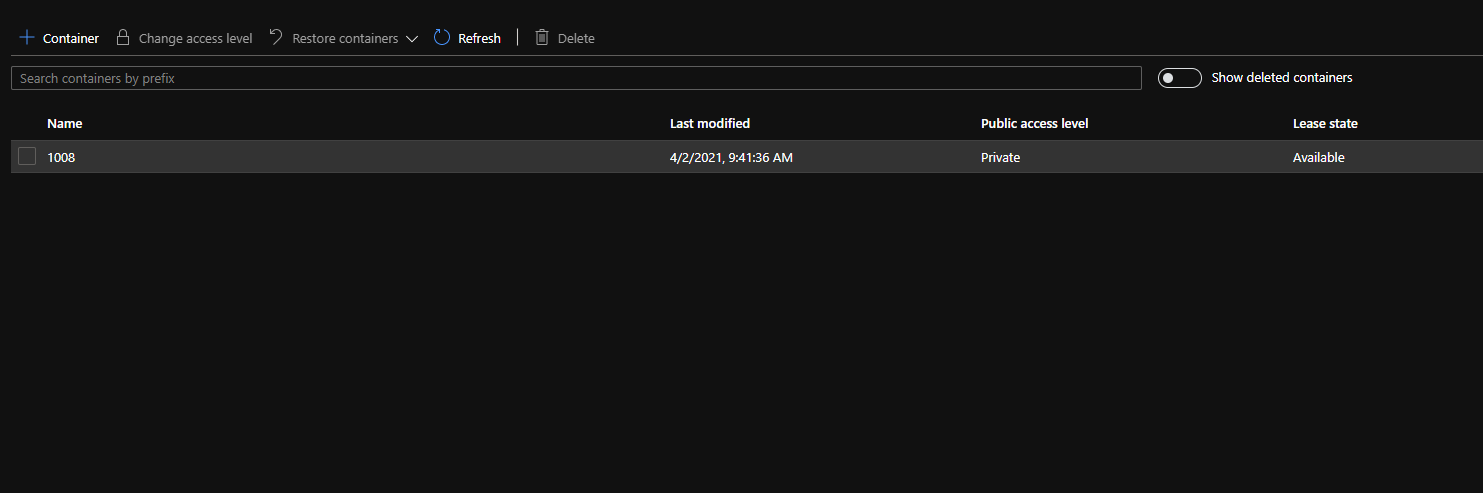

Here is a screenshot of how the containers should look, with one container for each unique ProviderID.

Any help would be greatly appreciated.

Thanks again