Hi @Raja,

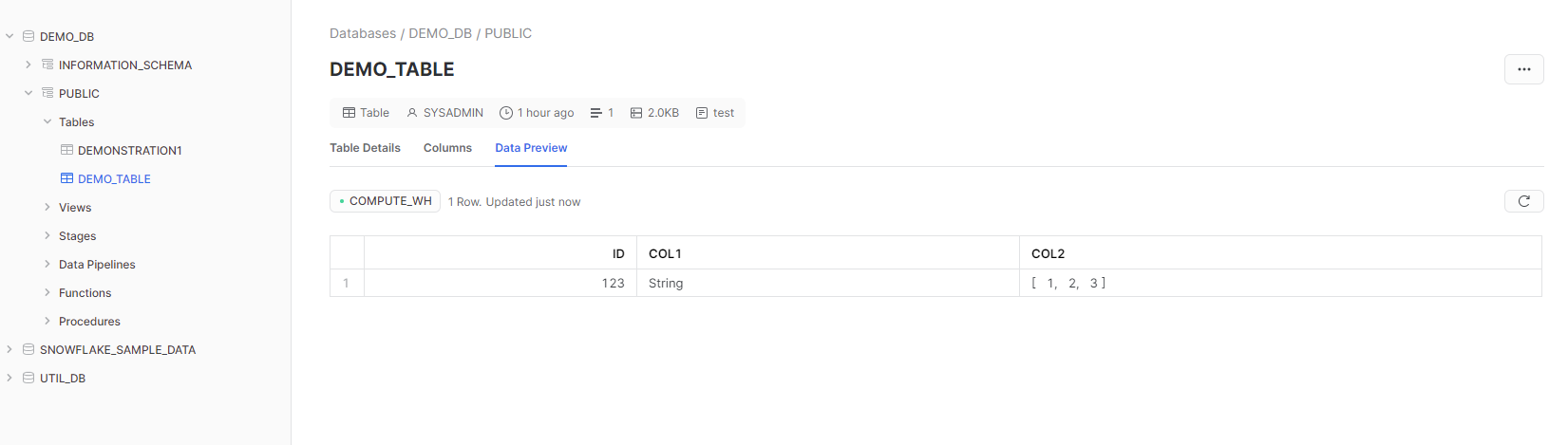

Question 1: Is the copying from Snowflake to Cosmos DB not supported? Question 2: Is my above understanding correct?

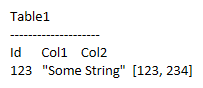

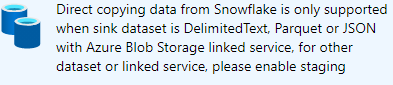

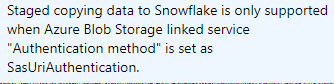

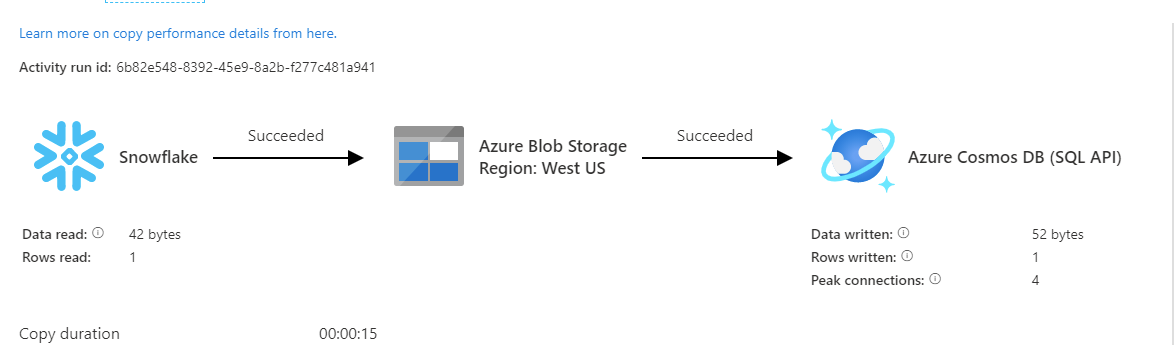

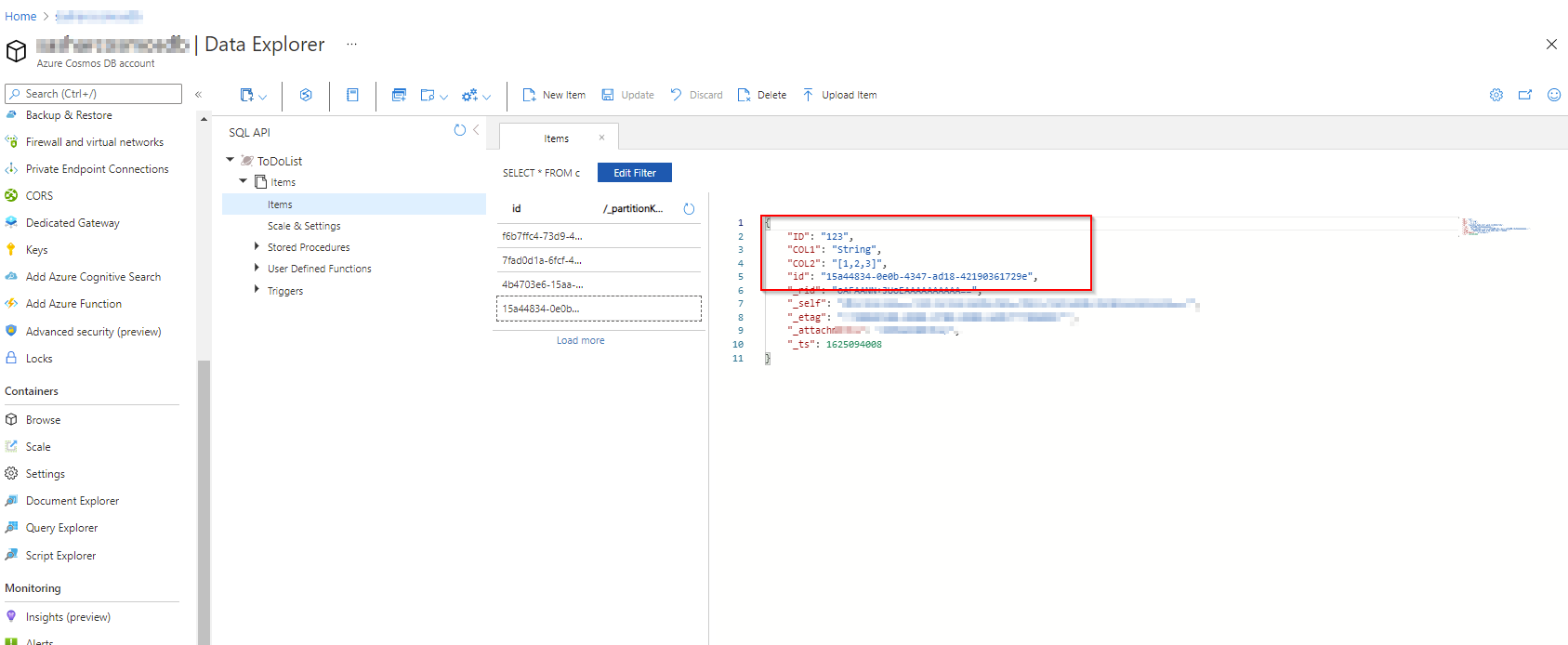

No, direct copy from Snowflake to Cosmos DB is not currently supported. Direct copy from Snowflake is supported only if the sink is Azure Blob Storage (with shared access signature authentication) and the sink dataset format is Parquet, delimited text, or JSON. Please refer to the documentation.

Question 3: I really do not understand what we should do now. There's no concrete example of how to retrieve the SAS URL for the blob. Does this URL need to point to an existing file location?

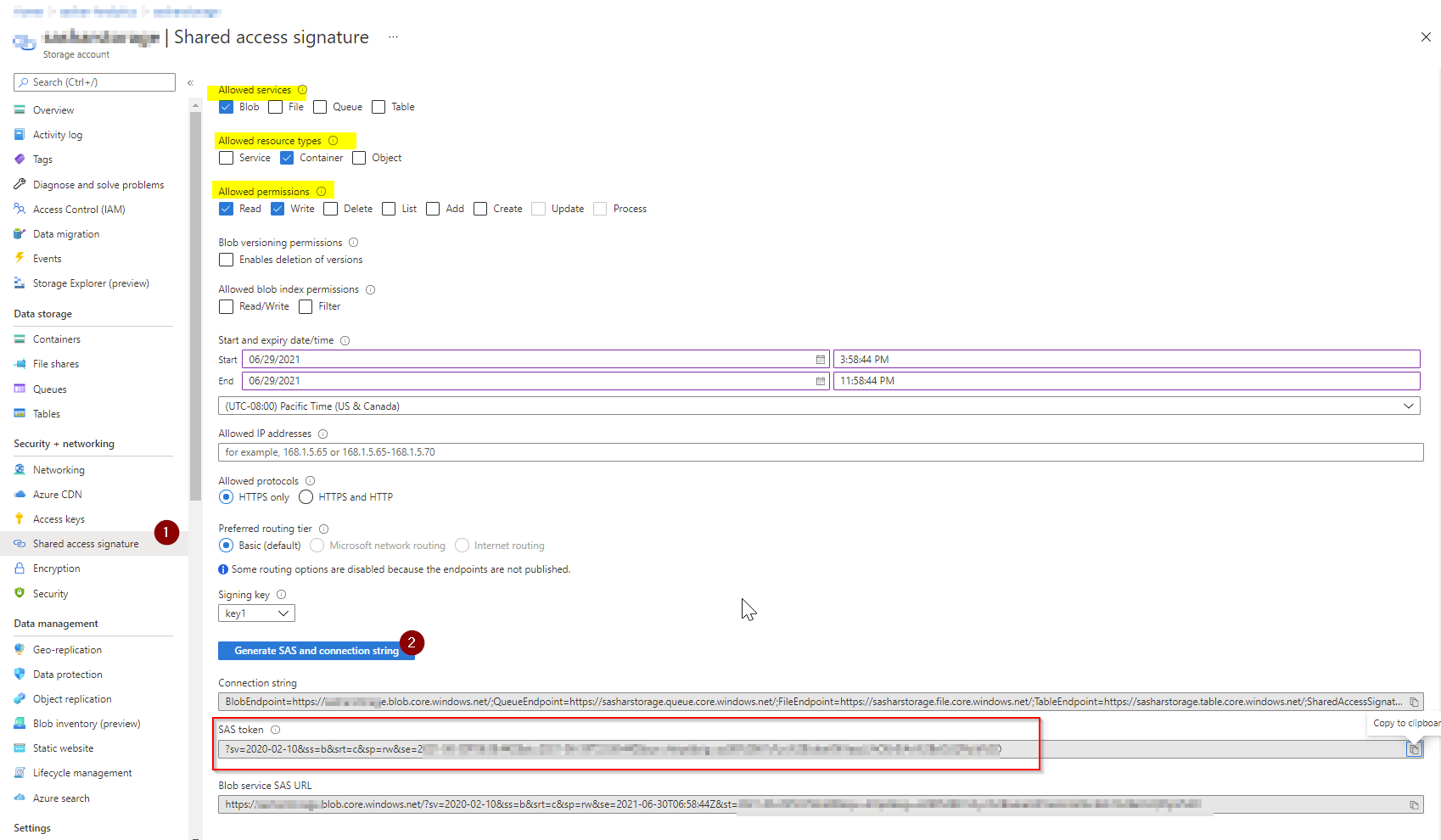

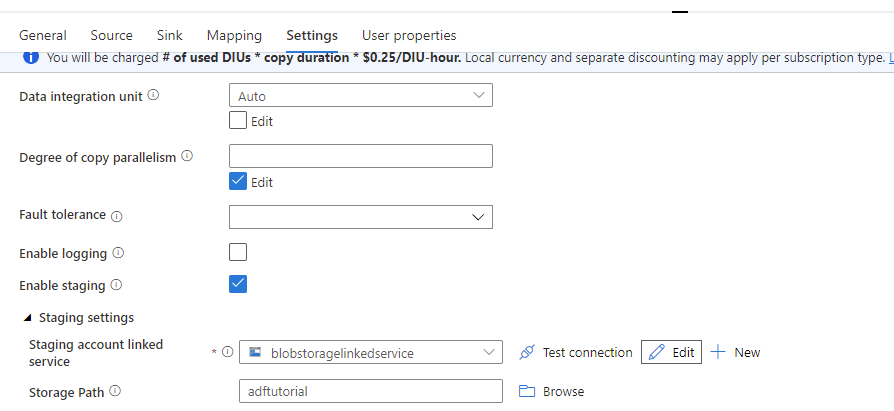

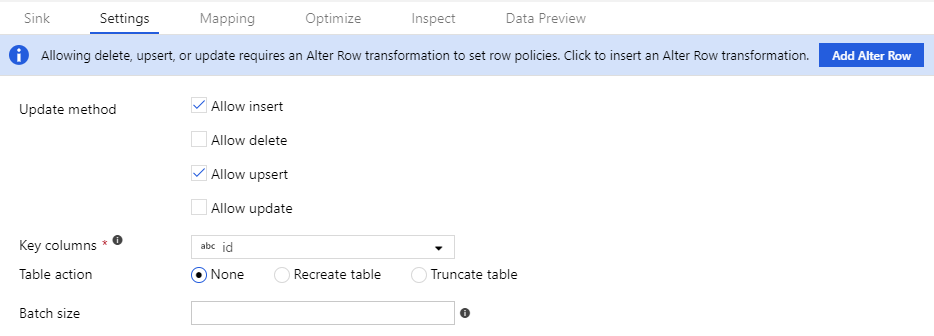

You need to enable staging, as stated in the error message. To get the SAS URL for the blob, go to your storage account in the Azure portal and open the "Shared access signature" blade. There you can configure the permissions the SAS token will carry. Under "Allowed services", choose from Blob, File, Queue, and Table; in your case, select Blob, since that is the service used for staging.

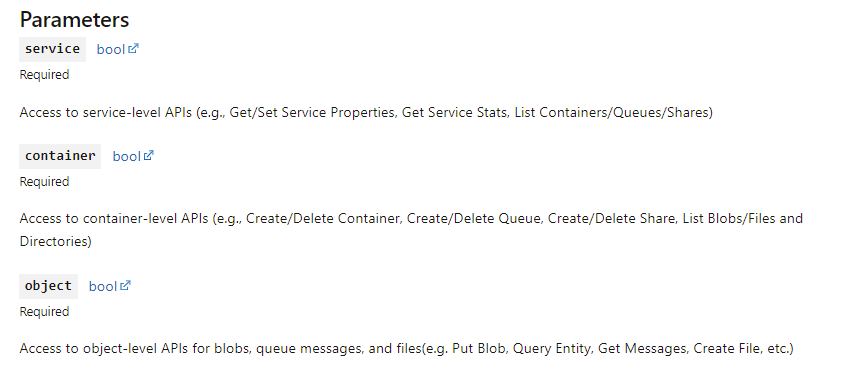

You can also choose the allowed resource types: Service, Container, or Object. See the shared access signature documentation for details.

Under "Allowed permissions", select from Read, Write, Delete, List, and so on. You can select multiple options in each of these settings as required.

For staging, Read and Write should be enough.
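If you want to double-check a generated token before using it, here is a minimal sketch that reads the account SAS query parameters and reports anything missing for the settings described above. It assumes the standard account SAS fields `ss` (allowed services, `b` for Blob) and `sp` (permissions, `r` for Read and `w` for Write). The function name `check_staging_sas` and the sample token are made up for illustration; the signature in the sample is not a real one.

```python
from urllib.parse import parse_qs


def check_staging_sas(sas_token: str) -> list:
    """Return a list of problems found in an account SAS token (empty if none)."""
    # The portal shows the token with a leading "?", so strip it before parsing.
    params = parse_qs(sas_token.lstrip("?"))
    services = params.get("ss", [""])[0]     # allowed services, e.g. "b" = Blob
    permissions = params.get("sp", [""])[0]  # allowed permissions, e.g. "rw"

    problems = []
    if "b" not in services:
        problems.append("Blob service is not allowed (ss)")
    for flag, name in (("r", "Read"), ("w", "Write")):
        if flag not in permissions:
            problems.append(f"{name} permission is missing (sp)")
    return problems


# Hypothetical token, for illustration only.
sample = "?sv=2022-11-02&ss=b&srt=co&sp=rw&se=2030-01-01T00:00:00Z&sig=fake"
print(check_staging_sas(sample))
```

An empty list means the token at least allows the Blob service with Read and Write. It does not check the expiry time or whether the signature is valid.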

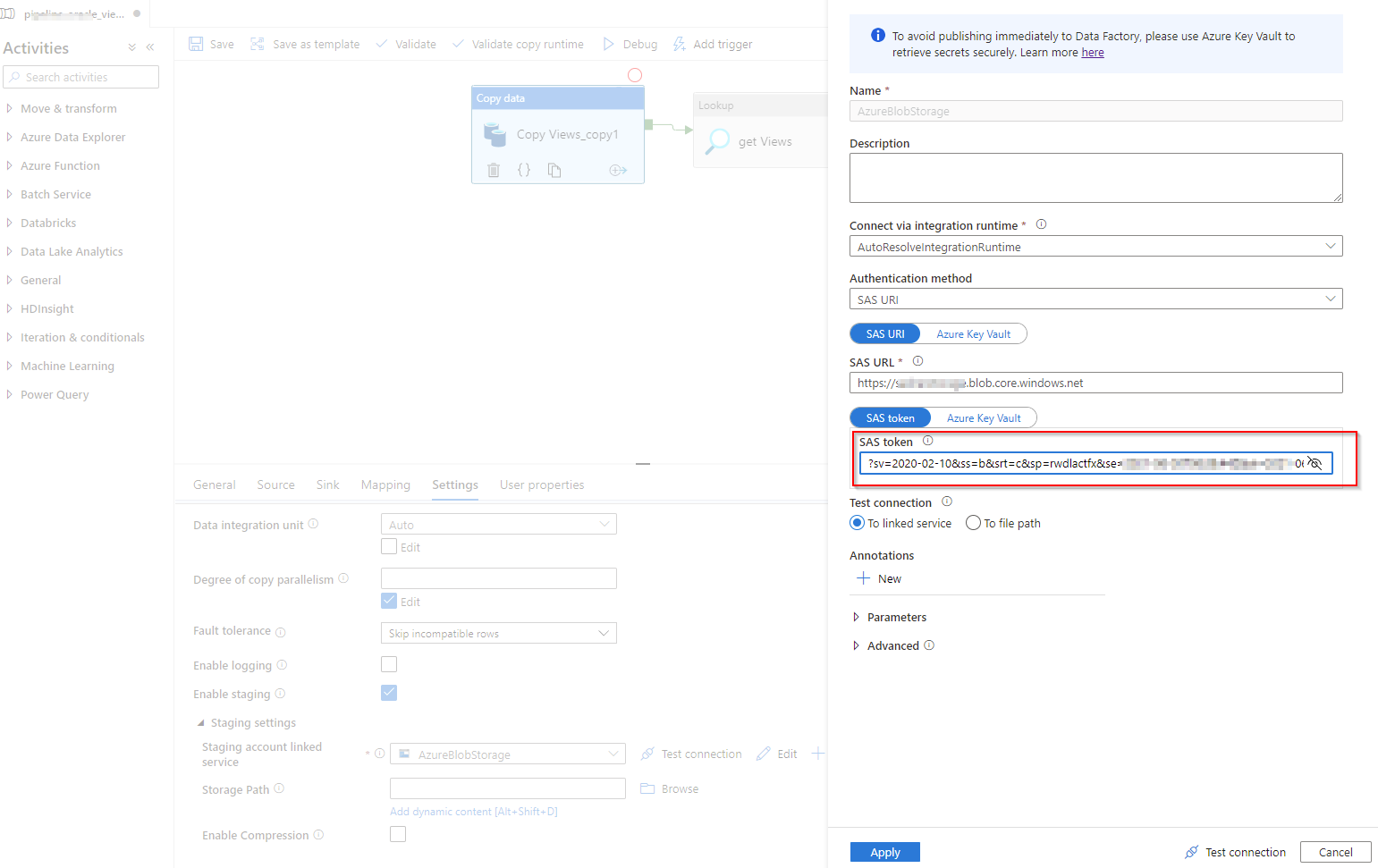

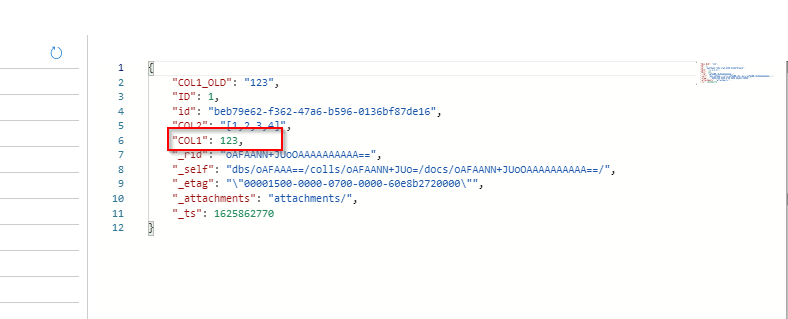

You can then generate the SAS token and use the "SAS token" value from the blade, as highlighted above, in your linked service.
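As a rough sketch of what the linked service definition could look like, the snippet below builds the JSON for an Azure Blob Storage linked service that authenticates with a SAS URI. The property names (`AzureBlobStorage`, `sasUri`, `SecureString`) follow the Blob Storage connector's SAS authentication format as I understand it, so please verify them against the connector documentation. The helper name, the linked service name `StagingBlobStorage`, and the placeholder values are assumptions for illustration.

```python
import json


def blob_sas_linked_service(name: str, sas_uri: str) -> dict:
    """Build a linked service definition that uses SAS URI authentication."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                # The SAS URI is the blob endpoint followed by the SAS token.
                "sasUri": {"type": "SecureString", "value": sas_uri}
            },
        },
    }


# Placeholders: replace with your storage account name and the token from the blade.
account = "<accountname>"
sas_token = "<SAS token from the portal>"
sas_uri = f"https://{account}.blob.core.windows.net/?{sas_token.lstrip('?')}"

print(json.dumps(blob_sas_linked_service("StagingBlobStorage", sas_uri), indent=2))
```

The printed JSON can be pasted into the linked service's code view. In a real setup, keep the SAS token out of source control, for example by storing it in Azure Key Vault.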

Hope this helps. Please let me know if you have any questions.

For any additional details, please refer to the documentation: Staged copy from Snowflake.
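To give an idea of what enabling staging looks like in the pipeline JSON, here is a minimal sketch of the staging fragment that goes into the copy activity's `typeProperties`, next to your existing `source` and `sink`. The `enableStaging` and `stagingSettings` properties are the ones I believe the copy activity uses. The linked service name `StagingBlobStorage` and the path `staging-container/snowflake` are hypothetical placeholders.

```python
import json


def staged_copy_settings(staging_linked_service: str, path: str) -> dict:
    """Build the staging fragment of a copy activity's typeProperties."""
    return {
        "enableStaging": True,
        "stagingSettings": {
            # Reference to the Blob Storage linked service that uses SAS authentication.
            "linkedServiceName": {
                "referenceName": staging_linked_service,
                "type": "LinkedServiceReference",
            },
            # Container (and optional folder) used for the interim data.
            "path": path,
        },
    }


print(json.dumps(staged_copy_settings("StagingBlobStorage", "staging-container/snowflake"), indent=2))
```

You can get the same result in the UI by turning on "Enable staging" in the copy activity's Settings tab and selecting the staging linked service there.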

Thanks

Saurabh

Please do not forget to "Accept the answer" wherever the information provided helps you to help others in the community.

Shared access signature overview: https://learn.microsoft.com/en-us/azure/storage/common/storage-sas-overview

Staged copy from Snowflake: https://learn.microsoft.com/en-us/azure/data-factory/connector-snowflake#staged-copy-from-snowflake
