Hi @Jeff vG ,

Thanks for the question and for using the MS Q&A platform.

If you want to write a pandas DataFrame to Azure SQL Server using Spark, first convert the pandas DataFrame to a Spark DataFrame (using the spark.createDataFrame method). After that, there should not be any particular problem in writing it to an Azure SQL Server table.

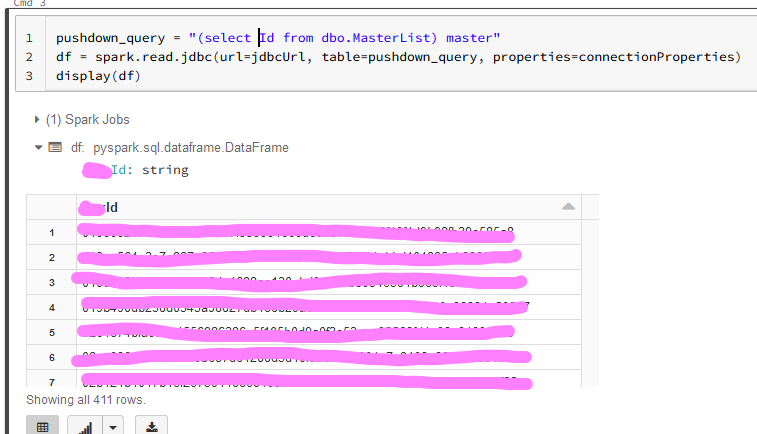
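As a rough sketch of that conversion and write, assuming a Databricks notebook where a `spark` session already exists and the Microsoft SQL Server JDBC driver is available on the cluster: the helper names, the server and database names, and the connection-string options below are illustrative assumptions, not values taken from your setup.

```python
def azure_sql_jdbc_url(server: str, database: str) -> str:
    """Build a JDBC URL for an Azure SQL database (hypothetical helper)."""
    return (
        f"jdbc:sqlserver://{server}.database.windows.net:1433;"
        f"database={database};encrypt=true;"
        "trustServerCertificate=false;loginTimeout=30"
    )


def write_pandas_via_spark(spark, pdf, url, table, user, password, mode="append"):
    """Convert a pandas DataFrame to a Spark DataFrame and write it over JDBC.

    `spark` is an existing SparkSession; `pdf` is the pandas DataFrame.
    """
    # The conversion step: pandas DataFrame -> Spark DataFrame.
    sdf = spark.createDataFrame(pdf)

    # Standard Spark JDBC writer; the driver class is the Microsoft JDBC driver.
    (
        sdf.write.format("jdbc")
        .option("url", url)
        .option("dbtable", table)
        .option("user", user)
        .option("password", password)
        .option("driver", "com.microsoft.sqlserver.jdbc.SQLServerDriver")
        .mode(mode)
        .save()
    )


# Example call (placeholders only, not run here):
# url = azure_sql_jdbc_url("myserver", "mydb")
# write_pandas_via_spark(spark, pandas_df, url, "dbo.my_table", user, password)
```

In a real workspace you would normally read the user name and password from a secret scope rather than hard-coding them.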

The following document shows how you can read from and write to SQL databases using JDBC: SQL databases using JDBC - Azure Databricks - Workspace | Microsoft Learn. It uses Spark DataFrames, however. I would argue that doing the same with pandas DataFrames is not a core use case of Databricks. If you run pandas, your code will only run on the driver node and will not be distributed across the cluster. If you really wish to use pandas, you can use sqlalchemy to create a connection and then call .to_sql() on your pandas DataFrame.
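If you do go the pandas route, a minimal sketch could look like the following. It assumes the `pyodbc` package and an ODBC driver for SQL Server are installed on the driver node. The helper names, the "ODBC Driver 18 for SQL Server" driver name, and the server, database, and table names are placeholders I picked for illustration.

```python
import pandas as pd
from sqlalchemy import create_engine
from sqlalchemy.engine import URL


def azure_sql_url(server: str, database: str, user: str, password: str) -> URL:
    """Build a SQLAlchemy URL for Azure SQL via pyodbc (hypothetical helper)."""
    return URL.create(
        "mssql+pyodbc",
        username=user,
        password=password,
        host=f"{server}.database.windows.net",
        port=1433,
        database=database,
        # Assumed driver name; use whichever ODBC driver is actually installed.
        query={"driver": "ODBC Driver 18 for SQL Server"},
    )


def write_frame(pdf: pd.DataFrame, engine, table: str, chunksize: int = 1000) -> None:
    """Append a pandas DataFrame to a SQL table in batches of `chunksize` rows."""
    pdf.to_sql(table, con=engine, if_exists="append", index=False, chunksize=chunksize)


# Example call (placeholders only, not run here):
# engine = create_engine(azure_sql_url("myserver", "mydb", user, password))
# write_frame(pandas_df, engine, "my_table")
```

Keep in mind that this runs entirely on the driver, so it is only reasonable for data that fits comfortably in the driver's memory.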

This is not a criticism of pandas; it is simply not the right tool for every task. pandas lacks built-in multiprocessing support, and other libraries are better at handling big data.

For more details, refer to Loading large datasets in Pandas.

Hope this helps. Do let us know if you have any further queries.

---------------------------------------------------------------------------

Please "Accept the answer" if the information helped you. This will help us and others in the community as well.