Hello @Anonymous,

Thanks for the question and for using the Microsoft Q&A platform.

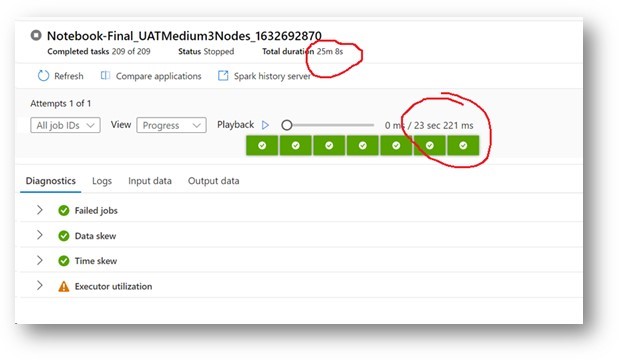

I would start by looking at the workload you are trying to process and at whether the data Spark is consuming is partitioned. For example, if you are processing a 100 GB CSV file on a small cluster without partitioning, adding executors will not help, because the extra cores have no additional partitions to work on. I would also go ahead and turn autoscale on in production. Beyond that, you will need to look into the internal details of how the data is actually processed (stages, tasks, and how much data each task reads). Have you gone through this link?

https://learn.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-history-server
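To make the partitioning point concrete, here is a minimal sketch of why more executors do not help when the data is not partitioned. It assumes a simplified model: Spark runs one task per partition, and each task occupies `spark.task.cpus` cores (default 1). The model ignores data skew, dynamic allocation, and scheduling overhead. The function names `max_parallel_tasks` and `stage_waves` are hypothetical helpers for illustration, not part of any Spark API.

```python
import math


def _task_slots(num_executors: int, cores_per_executor: int, cpus_per_task: int = 1) -> int:
    """Total number of task slots the cluster offers (simplified model)."""
    slots = num_executors * (cores_per_executor // cpus_per_task)
    if slots < 1:
        raise ValueError("cluster has no task slots; check cores and spark.task.cpus")
    return slots


def max_parallel_tasks(num_partitions: int, num_executors: int,
                       cores_per_executor: int, cpus_per_task: int = 1) -> int:
    """Upper bound on tasks running at the same time in one stage.

    One task per partition, so concurrency is capped by whichever is smaller:
    the number of partitions or the number of task slots.
    """
    return min(num_partitions, _task_slots(num_executors, cores_per_executor, cpus_per_task))


def stage_waves(num_partitions: int, num_executors: int,
                cores_per_executor: int, cpus_per_task: int = 1) -> int:
    """How many 'waves' of tasks are needed to finish the stage (ignores skew)."""
    return math.ceil(num_partitions / _task_slots(num_executors, cores_per_executor, cpus_per_task))


if __name__ == "__main__":
    # Hypothetical cluster: 4 cores per executor, comparing 2 vs 10 executors.
    for executors in (2, 10):
        unpartitioned = max_parallel_tasks(1, executors, 4)  # whole file in one partition
        partitioned = max_parallel_tasks(800, executors, 4)  # e.g. ~100 GB split into ~128 MB pieces
        print(executors, unpartitioned, partitioned)
    # 2 1 8
    # 10 1 40
```

With a single partition, going from 2 to 10 executors leaves concurrency at 1 task; with 800 partitions it grows from 8 to 40. In PySpark you can check the actual count with `df.rdd.getNumPartitions()` and raise it with `df.repartition(n)`. Keep in mind that a gzip-compressed CSV is not splittable, so each such file is read as a single partition.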

Please do let me know how it goes.

Thanks

Himanshu
