@Shreyas Arani, thank you for the question.

Here is one automation approach that might interest you. With this approach we shall:

- Create a bash script that taints and labels the last node in the list of available nodes from inside a Kubernetes Pod.

- Create a docker image to run this script.

- Push the image to a container registry.

- Create a Namespace, Service Account, ClusterRole and ClusterRoleBinding before we deploy the solution. The ClusterRole grants permission to get, list and patch nodes (a cluster-scoped resource), and the ClusterRoleBinding grants that ClusterRole to the Service Account. In the next step we will mount this Service Account on the Pods of our Deployment so that they can call the required Kubernetes APIs.

- Create a Deployment in the AKS cluster with the aforementioned docker image, using the Service Account created in the previous step.

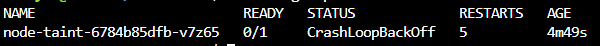

[Note: I will be creating a Deployment whose Pod runs the script once and then stays idle. After the script finishes, the container runs `tail -f /dev/null`, so the Deployment does not keep restarting it. I chose a Deployment because its desired state is persisted when the cluster is stopped, so the Deployment controller in kube-controller-manager should recreate the Pod, and therefore re-run the script, every time the cluster starts. You might choose to use Jobs or CronJobs, depending on your needs.]

Creating the bash script

- Create a fresh directory on your client machine and change the present working directory to this newly created directory:

```bash
mkdir directory-name
cd directory-name
```

- Create a `node-taint-script.sh` file with the following content:

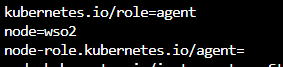

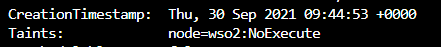

```bash
#!/bin/bash
# Point to the internal API server hostname
APISERVER=https://kubernetes.default.svc
# Path to ServiceAccount token
SERVICEACCOUNT=/var/run/secrets/kubernetes.io/serviceaccount
# Read this Pod's namespace
NAMESPACE=$(cat ${SERVICEACCOUNT}/namespace)
# Read the ServiceAccount bearer token
TOKEN=$(cat ${SERVICEACCOUNT}/token)
# Reference the internal certificate authority (CA)
CACERT=${SERVICEACCOUNT}/ca.crt

# Get the number of nodes
n=$(curl -s --cacert "${CACERT}" --header "Authorization: Bearer ${TOKEN}" -X GET "${APISERVER}/api/v1/nodes/" | jq '.items | length')

# Get the name of the last node (jq -r prints it without the surrounding quotes)
nodename=$(curl -s --cacert "${CACERT}" --header "Authorization: Bearer ${TOKEN}" -X GET "${APISERVER}/api/v1/nodes/" | jq -r ".items[$((n-1))].metadata.name")

# Taint the node
curl -s --cacert "${CACERT}" -g \
  -d '{"spec":{"taints":[{"effect":"<TAINT_EFFECT>","key":"<TAINT_KEY>","value":"<TAINT_VALUE>"}]}}' \
  -H "Accept: application/json, */*" \
  -H "Content-Type: application/strategic-merge-patch+json" \
  --header "Authorization: Bearer ${TOKEN}" \
  -X PATCH "${APISERVER}/api/v1/nodes/${nodename}?fieldManager=kubectl-taint"

# Label the node
curl -s --cacert "${CACERT}" -g \
  -d '{"metadata":{"labels":{"<LABEL_KEY>":"<LABEL_VALUE>"}}}' \
  -H "Accept: application/json, */*" \
  -H "Content-Type: application/merge-patch+json" \
  --header "Authorization: Bearer ${TOKEN}" \
  -X PATCH "${APISERVER}/api/v1/nodes/${nodename}?fieldManager=kubectl-label"
```

Please replace `<TAINT_EFFECT>`, `<TAINT_KEY>` and `<TAINT_VALUE>` with your taint effect, key and value respectively, and `<LABEL_KEY>` and `<LABEL_VALUE>` with the label key and value.

Create a docker image
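The script is copied into the image as-is, so its placeholders have to be filled in before the image is built. If you prefer to script that step rather than edit the file by hand, here is a minimal sketch using a hypothetical helper, `fill_placeholders`. It prints the script with the placeholders substituted and rejects anything other than the three taint effects Kubernetes accepts (`NoSchedule`, `PreferNoSchedule`, `NoExecute`). It assumes none of your values contain the `|` character, which it uses as the `sed` delimiter so that prefixed label keys such as `example.com/role` work.

```bash
#!/bin/bash
# Hypothetical helper:
#   fill_placeholders FILE EFFECT KEY VALUE LABEL_KEY LABEL_VALUE
# Prints FILE to stdout with <TAINT_*> and <LABEL_*> replaced.
# Assumption: no argument contains '|' (used as the sed delimiter).
fill_placeholders() {
  local file=$1 effect=$2 key=$3 value=$4 label_key=$5 label_value=$6

  # Kubernetes only accepts these three taint effects.
  case "$effect" in
    NoSchedule|PreferNoSchedule|NoExecute) ;;
    *) echo "invalid taint effect: $effect" >&2; return 1 ;;
  esac

  # Substitute every placeholder; the original file is left untouched.
  sed -e "s|<TAINT_EFFECT>|${effect}|g" \
      -e "s|<TAINT_KEY>|${key}|g" \
      -e "s|<TAINT_VALUE>|${value}|g" \
      -e "s|<LABEL_KEY>|${label_key}|g" \
      -e "s|<LABEL_VALUE>|${label_value}|g" \
      "$file"
}
```

For example, `fill_placeholders node-taint-script.sh NoSchedule dedicated myapp nodetype myapp > script.tmp && mv script.tmp node-taint-script.sh` (with hypothetical values) rewrites the file in place. Writing to stdout instead of using `sed -i` avoids the differing `-i` syntax of GNU and BSD `sed`.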

- Create a file named `Dockerfile` in the same working directory with the following contents:

```dockerfile
FROM centos:7
RUN yum install epel-release -y
RUN yum update -y
RUN yum install jq -y
COPY ./node-taint-script.sh /node-taint-script.sh
RUN chmod +x /node-taint-script.sh
# Run the script once, then keep the container running so the Deployment does not restart it
CMD ["/bin/bash", "-c", "/node-taint-script.sh && tail -f /dev/null"]
```

- Build the docker image using:

```bash
docker build -t <your-registry-server>/<your-repository-name>:<your-tag> .
```

Push the image to a container registry

- Log in to your container registry. [Reference]

- Push the docker image to your container registry using:

```bash
docker push <your-registry-server>/<your-repository-name>:<your-tag>
```
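The build and push commands must use the same image reference, and the Deployment will point to that reference later. A minimal sketch that derives it once is shown below. It assumes an Azure Container Registry (ACR), where `az acr login --name` takes the registry name without the `.azurecr.io` suffix; for another registry, swap that line for its own login command. `build_and_push`, `run` and `DRY_RUN` are hypothetical names; with `DRY_RUN=1` the commands are only printed.

```bash
#!/bin/bash
# Execute a command, or just print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: build_and_push REGISTRY_SERVER REPOSITORY TAG
# Assumption: REGISTRY_SERVER is an ACR login server such as myregistry.azurecr.io.
build_and_push() {
  local registry=$1 repository=$2 tag=$3
  local image="${registry}/${repository}:${tag}"

  # ACR login expects the registry name, i.e. the server name up to the first dot.
  run az acr login --name "${registry%%.*}"
  # Build from the Dockerfile in the current directory, then push the same tag.
  run docker build -t "$image" .
  run docker push "$image"
}
```

For example, `DRY_RUN=1 build_and_push myregistry.azurecr.io node-taint v1` prints the three commands for review; dropping `DRY_RUN=1` runs them from the directory containing the `Dockerfile`.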

Create a Namespace, Service Account, ClusterRole and ClusterRoleBinding
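If you would rather keep these objects in a file (for example, in version control) than create them with the imperative `kubectl create` commands in this section, the same four objects can be declared in one manifest. This is a sketch of the equivalent YAML, wrapped in a hypothetical shell function `rbac_manifests`; the object names match the `kubectl create` commands in this section.

```bash
#!/bin/bash
# Hypothetical helper: prints a multi-document manifest equivalent to the
# namespace, service account, clusterrole and clusterrolebinding commands.
rbac_manifests() {
  cat <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: node-taint
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: node-taint
  namespace: node-taint
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-taint-clusterrole
rules:
- apiGroups: [""]          # nodes live in the core API group
  resources: ["nodes"]
  verbs: ["get", "list", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-taint-clusterrolebinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: node-taint-clusterrole
subjects:
- kind: ServiceAccount
  name: node-taint
  namespace: node-taint
EOF
}
```

`rbac_manifests | kubectl apply -f -` would then create or update all four objects in one step, and re-running it is harmless because `kubectl apply` is declarative.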


In the AKS cluster,

- Create a namespace like:

```bash
kubectl create ns node-taint
```

- Create a Service Account in the namespace like:

```bash
kubectl create sa node-taint -n node-taint
```

- Create a ClusterRole like:

```bash
kubectl create clusterrole node-taint-clusterrole --resource=nodes --verb=get,list,patch
```

- Create a ClusterRoleBinding like:

```bash
kubectl create clusterrolebinding node-taint-clusterrolebinding --clusterrole node-taint-clusterrole --serviceaccount node-taint:node-taint
```

Create Deployment

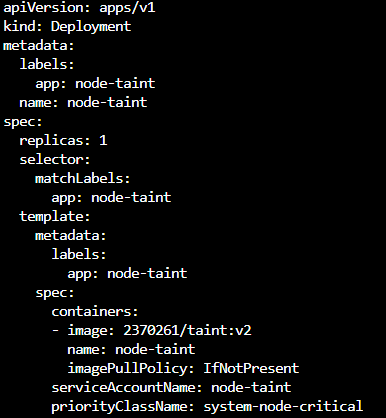

Create the Deployment on the AKS cluster like:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: node-taint
  name: node-taint
  # Must be the namespace that contains the node-taint Service Account
  namespace: node-taint
spec:
  replicas: 1
  selector:
    matchLabels:
      app: node-taint
  template:
    metadata:
      labels:
        app: node-taint
    spec:
      containers:
      - image: <your-registry-server>/<your-repository-name>:<your-tag>
        name: node-taint
      serviceAccountName: node-taint
      priorityClassName: system-node-critical
EOF
```

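We have used `priorityClassName: system-node-critical` so that this Pod is placed ahead of lower-priority Pods in the scheduling queue (and can preempt them if no node has room), which makes it likely to be scheduled and start its work before your application Pods are scheduled. Priority only affects scheduling order; it does not guarantee that the taint is applied before an application Pod lands on the last node. Reference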

When you create the Deployment, you might already have a tainted node (for example, one you tainted manually). The Deployment will still taint and label the last node in the list, which may be a different node. You can manually remove the taint and/or label from whichever node should no longer have them. This is a one-time action, required because, to keep things simple, the script does not check whether a node is already tainted.
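The one-time cleanup can be done with `kubectl`, where a trailing `-` removes a taint or label instead of adding it. The sketch below wraps both commands in a hypothetical helper, `untaint_node`; the node name, key, value and effect are placeholders for your own values, and `DRY_RUN=1` (also hypothetical) only prints the commands.

```bash
#!/bin/bash
# Execute a command, or just print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: untaint_node NODE TAINT_KEY TAINT_VALUE TAINT_EFFECT LABEL_KEY
untaint_node() {
  local node=$1 key=$2 value=$3 effect=$4 label_key=$5
  # "key=value:effect-" removes exactly that taint from the node.
  run kubectl taint nodes "$node" "${key}=${value}:${effect}-"
  # "key-" removes the label with that key from the node.
  run kubectl label nodes "$node" "${label_key}-"
}
```

To find which nodes currently carry taints before running it, `kubectl get nodes -o custom-columns=NAME:.metadata.name,TAINTS:.spec.taints` lists each node next to its taints.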

Hope this helps.

Please "Accept as Answer" if it helped, so that it can help others in the community looking for help on similar topics.