Hi @Kman,

Thanks for using the Microsoft Q&A forum and posting your query.

Yes, you can copy data from Oracle to Parquet format using Azure Data Factory.

Parquet format is supported for the following ADF connectors: Amazon S3, Amazon S3 Compatible Storage, Azure Blob, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, Azure Files, File System, FTP, Google Cloud Storage, HDFS, HTTP, Oracle Cloud Storage and SFTP.
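As a rough illustration, a Copy activity that reads from Oracle and writes Parquet could be described along these lines. This is only a sketch that builds the activity JSON as a Python dictionary: the dataset names, the activity name and the query are hypothetical, and it assumes the sink is Azure Data Lake Storage Gen2 (hence `AzureBlobFSWriteSettings`). Please check the property names against the connector docs before using it.

```python
import json


def oracle_to_parquet_copy_activity(source_dataset, sink_dataset, query):
    """Build a Copy activity definition (as a dict) that reads Oracle and writes Parquet."""
    return {
        "name": "CopyOracleToParquet",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Oracle connector as the source, with an optional custom query.
            "source": {"type": "OracleSource", "oracleReaderQuery": query},
            # Parquet sink; the store settings assume ADLS Gen2 as the target store.
            "sink": {
                "type": "ParquetSink",
                "storeSettings": {"type": "AzureBlobFSWriteSettings"},
                "formatSettings": {"type": "ParquetWriteSettings"},
            },
        },
    }


# Hypothetical dataset names and query, for illustration only.
activity = oracle_to_parquet_copy_activity(
    "OracleSalesTable", "AdlsParquetFolder", "SELECT * FROM SALES"
)
print(json.dumps(activity, indent=2))
```

The printed JSON is the shape you would expect to see in the pipeline's code view for such an activity; the datasets themselves (an Oracle table dataset and a Parquet dataset on the file store) still need to be defined separately.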

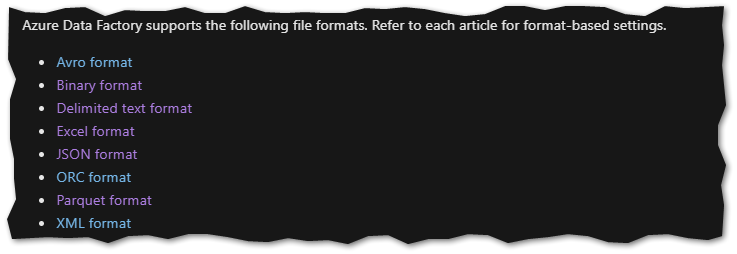

Azure Data Factory supports the below file formats:

Please note that for a copy running on a Self-hosted Integration Runtime (e.g. between on-premises and cloud data stores), if you are not copying Parquet files as-is, you need to install the 64-bit JRE 8 (Java Runtime Environment) or OpenJDK and Microsoft Visual C++ 2010 Redistributable Package on your IR machine.

For a copy running on a Self-hosted IR with Parquet file serialization/deserialization, the service locates the Java runtime by first checking the registry (`SOFTWARE\JavaSoft\Java Runtime Environment\{Current Version}\JavaHome`) for JRE and, if that is not found, then checking the system variable `JAVA_HOME` for OpenJDK.
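If you want to check what the IR machine would resolve, the lookup order described above can be mimicked roughly as follows. This is a sketch of the same order, not the service's actual implementation. It assumes the registry key lives under `HKEY_LOCAL_MACHINE` and that the version is stored in a value named `CurrentVersion`; the function names are made up for this example.

```python
import os

JRE_KEY = r"SOFTWARE\JavaSoft\Java Runtime Environment"


def read_jre_home_from_registry():
    """Return JavaHome from the Windows registry, or None if it cannot be read."""
    try:
        import winreg  # standard library, available on Windows only
    except ImportError:
        return None
    try:
        # Assumption: the key is under HKEY_LOCAL_MACHINE and the current
        # version is stored in a value named "CurrentVersion".
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, JRE_KEY) as key:
            version, _ = winreg.QueryValueEx(key, "CurrentVersion")
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, JRE_KEY + "\\" + version) as key:
            java_home, _ = winreg.QueryValueEx(key, "JavaHome")
        return java_home
    except OSError:
        return None


def find_java_home(registry_lookup=read_jre_home_from_registry, env=None):
    """Check the registry (JRE) first, then fall back to JAVA_HOME (OpenJDK)."""
    env = os.environ if env is None else env
    return registry_lookup() or env.get("JAVA_HOME")


print(find_java_home())
```

Running this on the IR machine prints the path that was found, or `None` if neither location is set, which usually means the Java runtime still needs to be installed or `JAVA_HOME` still needs to be configured.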

Limitation Note: Parquet complex data types (e.g. MAP, LIST, STRUCT) are currently supported only in Data Flows, not in Copy Activity. To use complex types in data flows, do not import the file schema in the dataset; leave the schema blank in the dataset. Then, in the Source transformation, import the projection.

For more info about the Parquet format in ADF, please refer to this doc: Parquet format in Azure Data Factory and Azure Synapse Analytics.

I'm not sure about the requirement to copy data from Oracle to parquet format and then from Parquet to Azure SQL, but you can also copy directly from Oracle to Azure SQL.
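For comparison, a direct Oracle to Azure SQL copy would only need a different sink in the Copy activity. A minimal sketch, again with hypothetical activity and dataset names:

```python
import json

# Copy activity that skips the intermediate Parquet files and writes
# straight into an Azure SQL Database table.
direct_copy = {
    "name": "CopyOracleToAzureSql",  # hypothetical activity name
    "type": "Copy",
    "inputs": [{"referenceName": "OracleSalesTable", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "AzureSqlSalesTable", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "OracleSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

print(json.dumps(direct_copy, indent=2))
```

Whether the extra Parquet step is worth it depends on your requirement, for example if you also want to keep the files in a data lake.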
