Hi @arkiboys ,

Thank you for posting your query on the Microsoft Q&A platform.

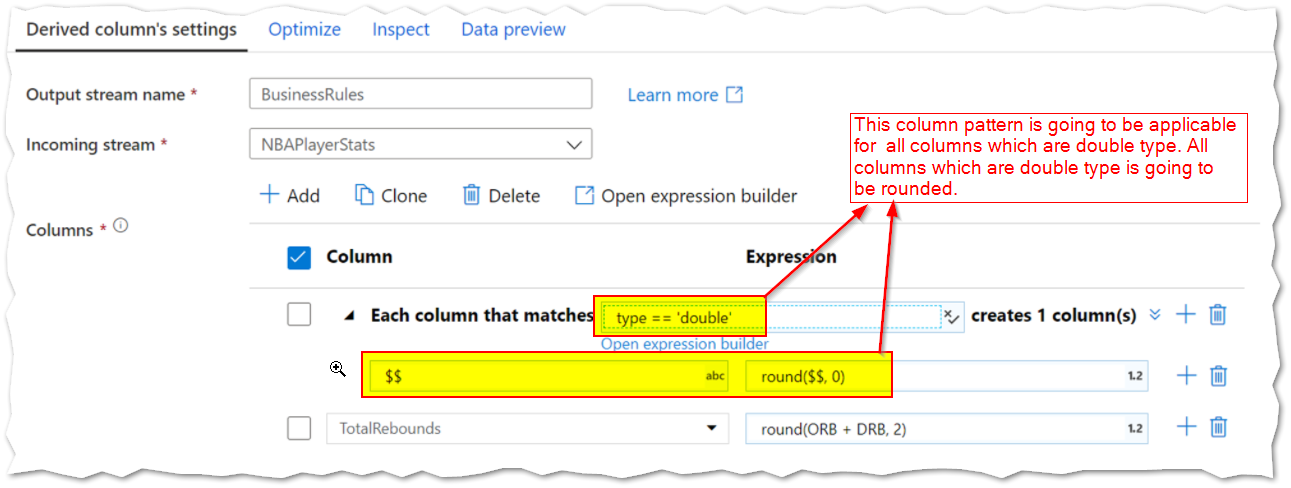

Column patterns let you define pattern-based conditions. Instead of requiring exact field names, you can match columns based on name, data type, stream, origin, or position.

Below are the two main scenarios where column patterns are used:

- Incoming source fields change often, for example changing columns in text files or NoSQL databases. This scenario is known as schema drift.

- You want to perform a common operation on a large group of columns, for example casting every column that has 'total' in its name to a double.

Below is a sample column pattern.
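To make the idea concrete, here is a rough sketch in plain Python. It is not data flow expression syntax, only an illustration of what a column pattern does: a match condition picks columns by name, and one transformation is applied to every match. The names `apply_column_pattern` and `name_contains_total` and the sample rows are hypothetical, made up for this example. In the data flow itself, the matched column is referred to as `$$` and the condition is written against `name` or `type`.

```python
from typing import Any, Callable, Dict


def apply_column_pattern(
    row: Dict[str, Any],
    matches: Callable[[str, Any], bool],
    transform: Callable[[Any], Any],
) -> Dict[str, Any]:
    """Return a copy of the row with transform applied to every matching column."""
    return {
        name: transform(value) if matches(name, value) else value
        for name, value in row.items()
    }


def name_contains_total(name: str, value: Any) -> bool:
    """Match condition: the column name contains 'total' (case-insensitive)."""
    return "total" in name.lower()


# Hypothetical input row: every value arrives as text.
row = {"id": "7", "order_total": "19.99", "tax_total": "1.60", "note": "rush"}
converted = apply_column_pattern(row, name_contains_total, float)

# Schema drift: a column that did not exist before is picked up by the
# same pattern, with no change to the rule.
drifted_row = {"id": "8", "shipping_total": "4.50"}
drifted = apply_column_pattern(drifted_row, name_contains_total, float)

print(converted)
print(drifted)
```

Because the rule is keyed on a condition rather than on fixed column names, the same definition covers both scenarios above: many columns at once, and columns that appear later.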

So, I guess column patterns are the right way to proceed in your case.

If you're looking to save the derived column expressions in a file and pass the expression itself dynamically, that is not possible. Expressions must be written in the expression builder; they cannot be dynamic. If you pass an expression as a parameter value, it is treated as plain text, not as an expression.

Hope this helps. Please let us know if you have any further queries.
