Ok I asked this question weeks ago and havent had a solid answer below is a summary of what I have found to work and perhaps someone can tell me if there is a better way of approach it. The first reply was very useful in terms of giving me a clue about the issue but didnt explain how to handle type conversion.

Copy Activity

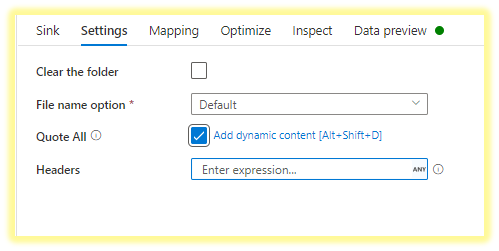

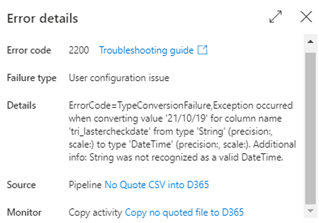

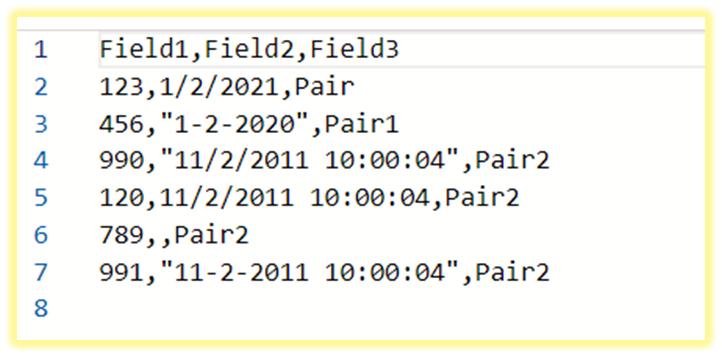

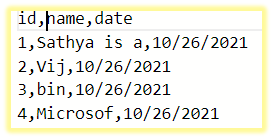

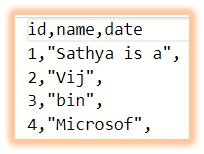

In a nutshell for a Copy Activity when the source dataset is processing a text quoted source (e.g. CSV) and the non-text field types are surrounded with quotes this will cause type conversion errors. For example “19/10/21” would generate the following error in the copy activity even if a format of dd/MM/yy was specified as a format in the copy activity:

ErrorCode=TypeConversionFailure,Exception occurred when converting value '19/10/21' for column name 'xxx_yourdate' from type 'String' (precision:, scale:) to type 'DateTime' (precision:, scale:). Additional info: String was not recognized as a valid DateTime.

The Sink type doesn’t matter as this error is raised as part of ADF conversion of datasets to its interim types before the sink operation. To help avoid type conversion errors ensure that all non-text data type fields (e.g. Date, Integer, Decimal) are not text-quoted. In addition a clear plan for handling non-text data types and formats needs to be in place. The Copy Activity has some very basic options for handling dates and number formats but have limited use-cases.

Scenario A: You don’t use data flow and don’t need to specify a date format in copy activity settings.

This applies only when you know that the source dates in all source date fields will be unquoted and of the literal format yyyy-MM-dd or yyyy-MM-dd hh:mm:ss. All other date formats will fail.

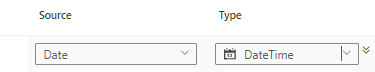

Scenario B: You don’t use data flow and know all dates are unquoted. You know the values in the date fields will always be dd/MM/yy or dd/MM/yy HH:mm:ss across all date fields

In this scenario because the date format contains a “/” and not a “-“ and is not the expected format you will need to specify a format in the copy activity. So long as when a date value is provided it follows either of these formats and of course is a valid date it will not error.

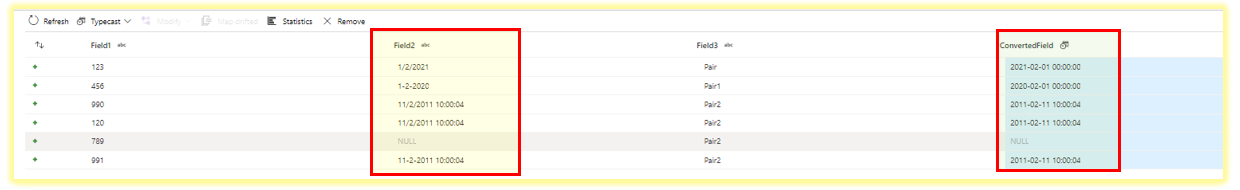

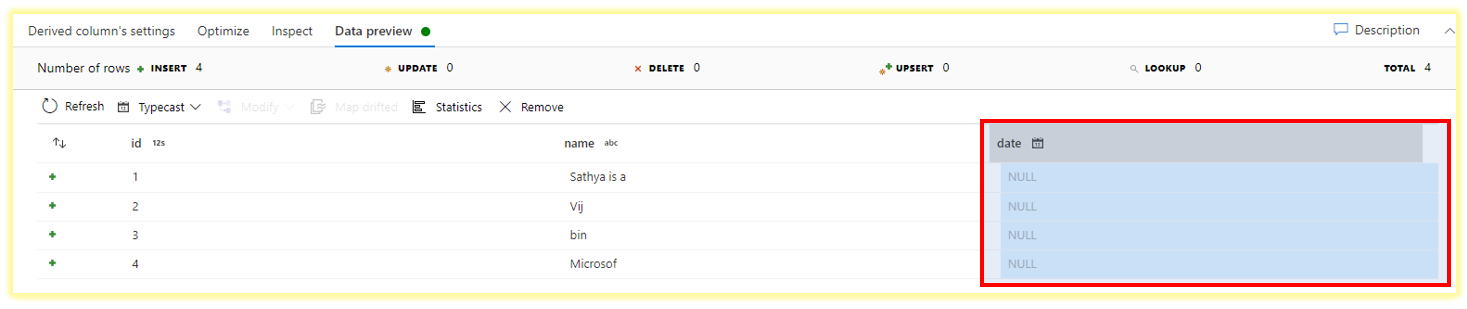

Scenario C: Date fields may or may not be text quoted and can vary in format

In this scenario the only option is to use data flows and derived date field columns. It should be an assumed (normal) requirement that if any source fields are non-text then a data flow and transformation will most likely be needed in real world migration or integration scenarios.

Summary

Based on my tests it is highly unlikely that the type conversion settings in a copy activity alone will be sufficient to handle most real-world scenarios. This means the use of data flows and derived columns should be an assumed requirement. This is especially true when the source is text quoted on (date, integer, decimal) and other non-text fields.

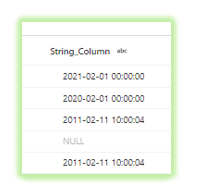

The supported sink types directly within a data flow at this time are very limited (E.g. D365, Azure Table) are not supported sink types. If the intended target is not supported, then you have to sink to an interim location such as a SQL table or BLOB first which has quotes removed and formats enforced. Then reference the interim location as the source within a Copy Activity with itself then has access to more supported sink types. The section below outlines the transformation expressions that were tested and used in the scenario where fields were quoted and the incoming date values were not supported.

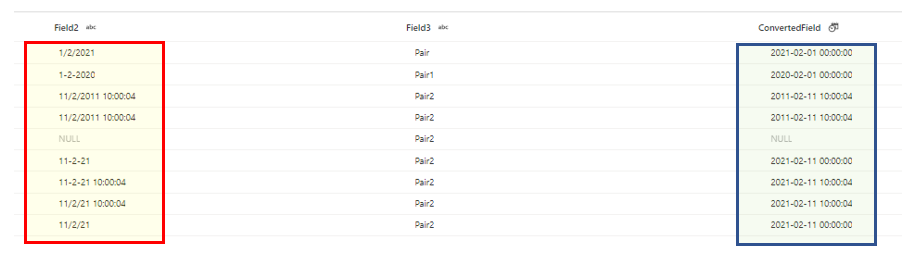

DATES

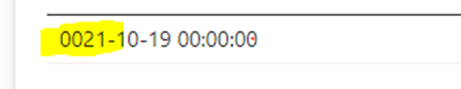

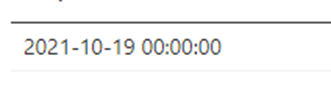

Input: 19/10/21 or 2021-10-19

The replace function was required because the date functions were returning NULL values just because the date contained a “/” and not a “-“.

toString(

coalesce(

toTimestamp(replace(exp_date_of_validation,"/","-"),'dd-MM-yy HH:mm:ss'),

toTimestamp(replace(exp_date_of_validation,"/","-"),'dd-MM-yy'),

toTimestamp(replace(exp_date_of_validation,"/","-"),'dd-MM-yyyy HH:mm:ss'),

toTimestamp(replace(exp_date_of_validation,"/","-"),'dd-MM-yyyy'),

toDate(date_of_validation),

null()

)

)

Input: 2021-10-19 17:32:00

INTEGERS

For example an optionset value in D365.

toInteger(

coalesce(

toInteger(

case

(

os_type == "1",167410000,

os_type == "2",167410001,

os_type == "3",167410002

)

)

, null()

)

)

DECIMALS

Things like currency/money fields or any other kind of fraction.

toDecimal(

coalesce(

toDecimal

(

estimated_tenure

)

, null()

)

)

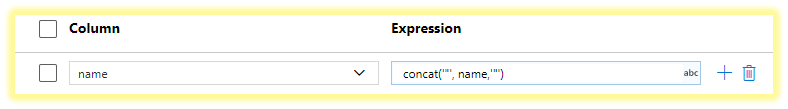

STRINGS

An example of string handling, although this isn’t do much at the moment you can build this to use case statements and return different values so would support basic inline business logic.

toString(

coalesce(

toString

(

polling_key

)

, null()

)

)

I hope this helps others and if there is a better way of validating dates or other non-text values in ADF I would like know about it.

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how