Hello @Sandz,

Thanks for the question and for using the MS Q&A platform.

There are a couple of methods to connect to Azure SQL Database from Azure Databricks.

Method 1: Connect to SQL databases using JDBC

The following Python example connects over JDBC and reads data from Azure SQL Database:

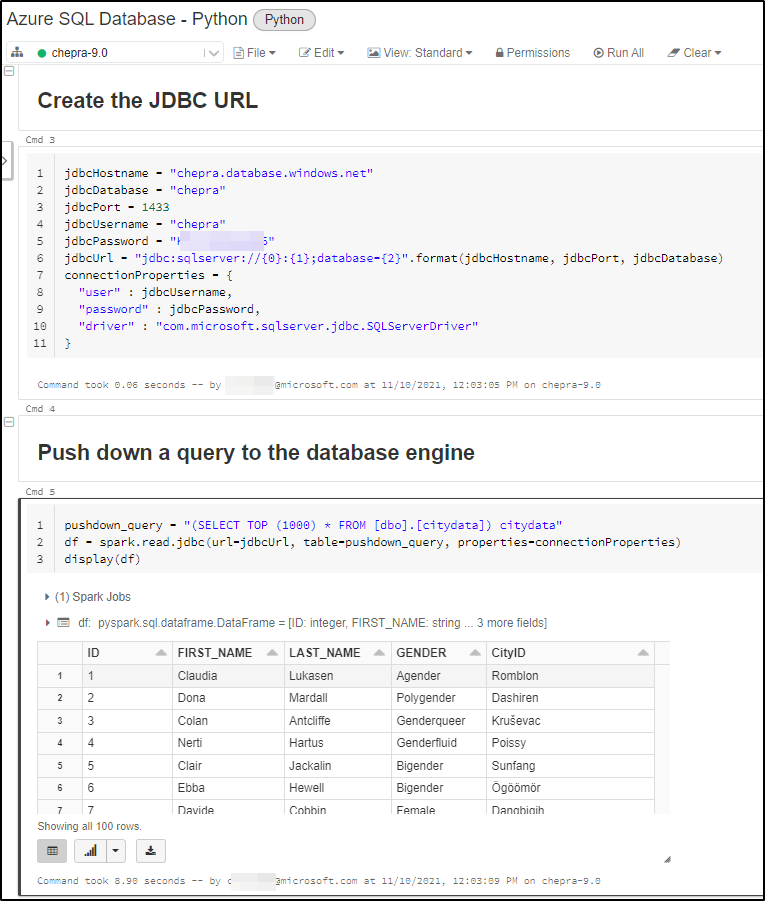

#Create the JDBC URL
jdbcHostname = "<ServerName>.database.windows.net"
jdbcDatabase = "<DatabaseName>"
jdbcPort = 1433
jdbcUsername = "<UserName>"
jdbcPassword = "<Password>"
jdbcUrl = "jdbc:sqlserver://{0}:{1};database={2}".format(jdbcHostname, jdbcPort, jdbcDatabase)
connectionProperties = {
    "user" : jdbcUsername,
    "password" : jdbcPassword,
    "driver" : "com.microsoft.sqlserver.jdbc.SQLServerDriver"
}
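The URL and connection properties above can be wrapped in a small reusable helper. This is a minimal sketch, assuming you also want the encryption settings that appear in the JDBC connection string the Azure portal shows for Azure SQL Database (`encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=30`). The function name `build_jdbc_config` and the sample server, database, and user names are hypothetical.

```python
def build_jdbc_config(hostname, database, username, password, port=1433):
    """Return (jdbc_url, connection_properties) for Azure SQL Database.

    Hypothetical helper: mirrors the jdbcUrl/connectionProperties setup above
    and appends the encryption settings from the Azure portal's JDBC string.
    """
    jdbc_url = (
        f"jdbc:sqlserver://{hostname}:{port};"
        f"database={database};"
        "encrypt=true;"
        "trustServerCertificate=false;"
        "hostNameInCertificate=*.database.windows.net;"
        "loginTimeout=30"
    )
    connection_properties = {
        "user": username,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }
    return jdbc_url, connection_properties


# Placeholder values; replace them with your own server and credentials.
url, props = build_jdbc_config(
    "myserver.database.windows.net", "mydb", "myuser", "secret"
)
print(url)
```

The returned pair can be passed straight to `spark.read.jdbc(url=url, table=..., properties=props)`.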

#Push down a query to the database engine
pushdown_query = "(select * from employees where emp_no < 10008) emp_alias"
df = spark.read.jdbc(url=jdbcUrl, table=pushdown_query, properties=connectionProperties)
display(df)

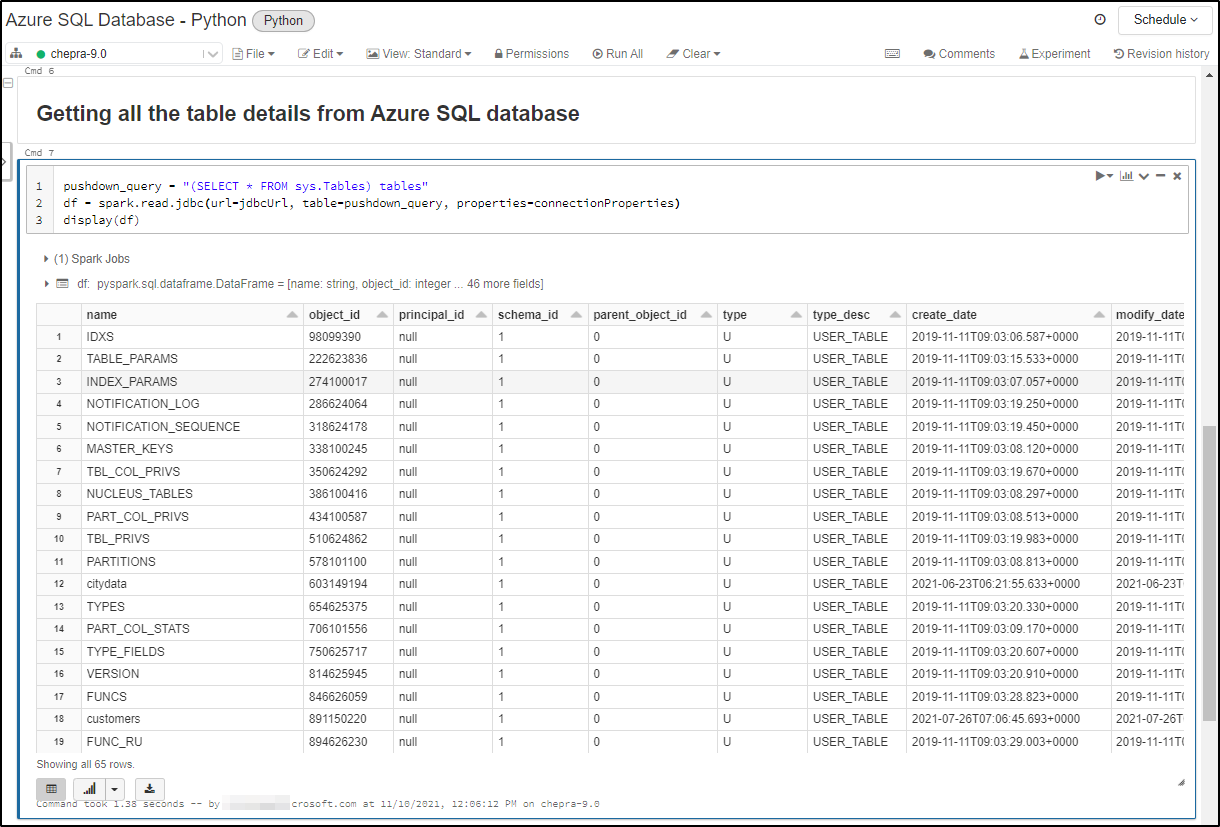
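The pushdown works because `spark.read.jdbc` places the `table` argument after `FROM`, so a parenthesised subquery with an alias is accepted: SQL Server evaluates the filter and Spark only receives the matching rows. A minimal sketch that builds that derived-table string for you; the helper names `as_pushdown_table` and `read_pushdown` are hypothetical, and `spark` is assumed to be the notebook's SparkSession.

```python
def as_pushdown_table(query, alias="q"):
    """Wrap a SQL query as a derived table usable as the `table` argument."""
    # SQL Server requires an alias on a derived table in the FROM clause,
    # and a trailing semicolon would break the surrounding parentheses.
    return f"({query.strip().rstrip(';')}) {alias}"


def read_pushdown(spark, jdbc_url, properties, query, alias="q"):
    """Run `query` on the database engine and return the result as a DataFrame."""
    return spark.read.jdbc(
        url=jdbc_url,
        table=as_pushdown_table(query, alias),
        properties=properties,
    )


# Builds the same string used in the example above.
print(as_pushdown_table("select * from employees where emp_no < 10008", "emp_alias"))
```

On Spark 2.4 and later, the JDBC source's `query` option (`spark.read.format("jdbc").option("query", ...)`) is an alternative that does not need the alias; it cannot be combined with `dbtable`.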

Push down a query to list the tables in the Azure SQL database:

pushdown_query = "(SELECT * FROM sys.tables) tables"
df = spark.read.jdbc(url=jdbcUrl, table=pushdown_query, properties=connectionProperties)
display(df)

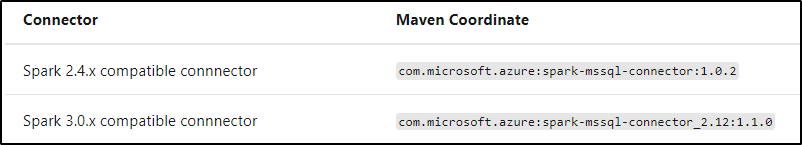
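`sys.tables` returns each table's `name` and a numeric `schema_id`, not the schema name. A minimal sketch of a pushdown query that resolves the schema name with the T-SQL `SCHEMA_NAME()` function, plus a helper that turns collected rows into `schema.table` strings. The names `LIST_TABLES_QUERY` and `qualified_table_names` are hypothetical, and the sample rows are made-up illustration data; rows are read by column name, which works for both PySpark `Row` objects and plain dicts.

```python
# Pushdown query that returns schema and table names side by side.
LIST_TABLES_QUERY = (
    "(SELECT SCHEMA_NAME(schema_id) AS schema_name, name AS table_name "
    "FROM sys.tables) tables"
)


def qualified_table_names(rows):
    """Return sorted 'schema.table' names from collected rows."""
    return sorted(f"{row['schema_name']}.{row['table_name']}" for row in rows)


# In a Databricks notebook you would pass the collected DataFrame rows:
# df = spark.read.jdbc(url=jdbcUrl, table=LIST_TABLES_QUERY, properties=connectionProperties)
# qualified_table_names(df.collect())
sample_rows = [
    {"schema_name": "dbo", "table_name": "employees"},
    {"schema_name": "SalesLT", "table_name": "Customer"},
]
print(qualified_table_names(sample_rows))
```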

Method 2: Apache Spark connector for SQL Server and Azure SQL

The connector is available through Maven in two versions: one compatible with Spark 2.4.x and one compatible with Spark 3.0.x. Both can be imported using the coordinates below:

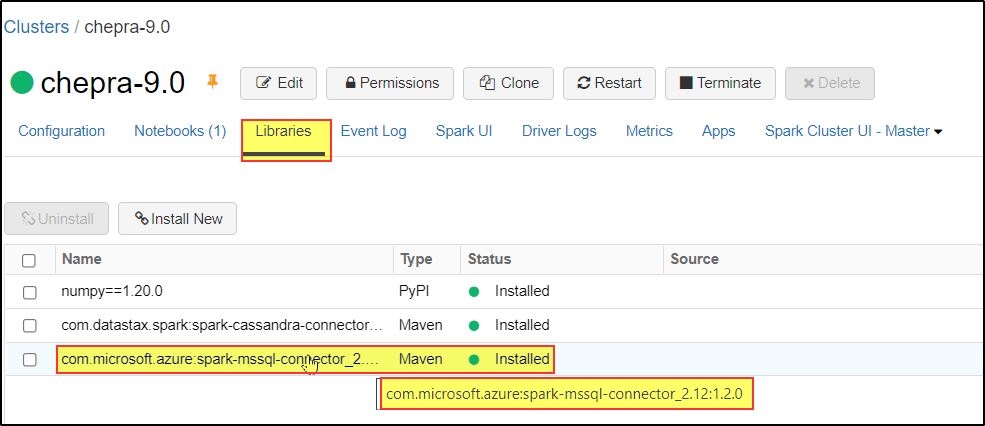
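Because the connector build has to match the cluster's Spark version, a small lookup can pick the right Maven coordinate. This is a minimal sketch, assuming the coordinates published in the connector's GitHub README at the time of writing (`com.microsoft.azure:spark-mssql-connector:1.0.2` for Spark 2.4.x and `com.microsoft.azure:spark-mssql-connector_2.12:1.1.0` for Spark 3.0.x); check them against the coordinates listed above before use. The names `CONNECTOR_COORDINATES` and `connector_coordinate` are hypothetical.

```python
# Assumed coordinates from the connector's README; verify before use.
CONNECTOR_COORDINATES = {
    "2.4": "com.microsoft.azure:spark-mssql-connector:1.0.2",
    "3.0": "com.microsoft.azure:spark-mssql-connector_2.12:1.1.0",
}


def connector_coordinate(spark_version):
    """Map a Spark version string such as '3.0.1' to a connector coordinate."""
    major_minor = ".".join(spark_version.split(".")[:2])
    try:
        return CONNECTOR_COORDINATES[major_minor]
    except KeyError:
        raise ValueError(
            f"No connector coordinate listed for Spark {spark_version}"
        ) from None


# In a notebook you would pass spark.version; a literal is used here.
print(connector_coordinate("3.0.1"))
```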

Prerequisite: install the Apache Spark connector library on your Databricks cluster.

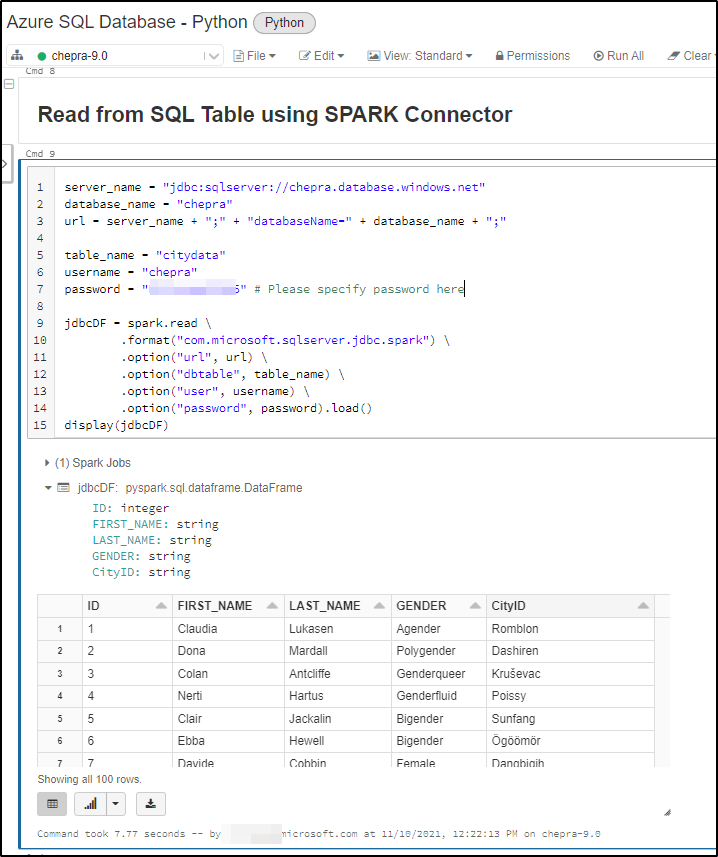
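Besides the cluster's Libraries UI, a Maven library can be installed from a script through the Databricks Libraries REST API (`POST /api/2.0/libraries/install`). A minimal sketch that only builds the request, assuming your own workspace URL, cluster ID, and personal access token; the function name `build_install_request` and the workspace URL are hypothetical, and nothing is sent until you pass the result to `urllib.request.urlopen`.

```python
import json
import urllib.request


def build_install_request(workspace_url, token, cluster_id, maven_coordinate):
    """Build (but do not send) a Libraries API request installing a Maven library."""
    body = {
        "cluster_id": cluster_id,
        "libraries": [{"maven": {"coordinates": maven_coordinate}}],
    }
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/libraries/install",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_install_request(
    "https://adb-1234567890123456.7.azuredatabricks.net",  # hypothetical workspace
    "<personal-access-token>",
    "<cluster-id>",
    "com.microsoft.azure:spark-mssql-connector_2.12:1.1.0",
)
# urllib.request.urlopen(req)  # uncomment to actually send the request
```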

Python code to read from a SQL table using the Apache Spark connector:

server_name = "jdbc:sqlserver://<ServerName>.database.windows.net"
database_name = "<DatabaseName>"
url = server_name + ";" + "databaseName=" + database_name + ";"
table_name = "<TableName>"
username = "<UserName>"
password = "<Password>" # Replace with your password
jdbcDF = (spark.read
    .format("com.microsoft.sqlserver.jdbc.spark")
    .option("url", url)
    .option("dbtable", table_name)
    .option("user", username)
    .option("password", password)
    .load())
display(jdbcDF)
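The same read can be expressed with an options dictionary, which keeps the connection settings in one place and makes it easy to replace the hard-coded password with a Databricks secret (`dbutils.secrets.get(scope=..., key=...)`). A minimal sketch; the helper names `connector_options` and `read_table`, the secret scope and key, and the sample server, database, and user names are hypothetical, and `spark` is assumed to be the notebook's SparkSession.

```python
def connector_options(server, database, table, user, password):
    """Options for the Apache Spark connector for SQL Server and Azure SQL."""
    return {
        "url": f"jdbc:sqlserver://{server}.database.windows.net;databaseName={database};",
        "dbtable": table,
        "user": user,
        "password": password,
    }


def read_table(spark, options):
    """Read a table through the connector's data source name."""
    return (
        spark.read.format("com.microsoft.sqlserver.jdbc.spark")
        .options(**options)
        .load()
    )


# In a Databricks notebook (hypothetical secret scope and key names):
# password = dbutils.secrets.get(scope="sql-creds", key="sql-password")
# display(read_table(spark, connector_options("myserver", "mydb", "dbo.employees", "myuser", password)))
opts = connector_options("myserver", "mydb", "dbo.employees", "myuser", "secret")
print(opts["url"])
```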

Hope this helps. Please let us know if you have any further queries.
