Hello,

We are having some problems every now and then with the daily processing of one of our SSAS tabular models, more exactly we are getting the following error sometimes:

Memory error: Memory Allocation failure . Try simplifying or reducing the number of queries. The process operation ended because the number of errors encountered during processing reached the defined limit of allowable errors for the operation. The current operation was cancelled because another operation in the transaction failed. Execution of DDL failed.

Some more information about the setup where it fails:

- We have an SQL instance and an SSAS instance on the same server (both SQL 2019), which has a total of 80GB RAM

- The SQL instance has a maximum server memory limit of 16GB

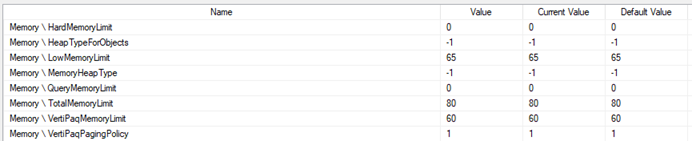

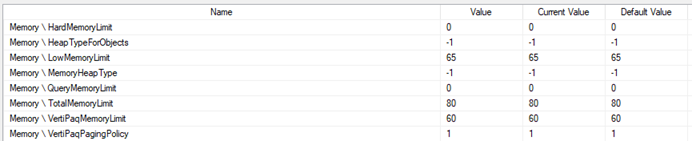

- The SSAS instance has following memory settings configured:

Some more information about our SSAS-model:

- It’s a Tabular model with compatibility level 1103 (we know this is an older version, but currently we can’t upgrade yet, we have this model installed with several customers, and not all of them have upgraded their servers yet and we want to avoid having to maintain several versions of the same model)

- On disk its around 20GB

- We process the model once a day, during the night:

- Monday to Saturday we do a “partial processing” where we only refresh the partitions that contain the most recent data (we have split partitions per year)

- We do this by building a dynamic XMLA script that we then execute through an SSIS “Analysis Services Execute DDL task”

- Sunday we do a “full processing” where we refresh the whole model

- We do this by using an SSIS “Analysis Services Processing Task”.

The fact we are getting the above error is a bit weird to us, considering:

- We think the server / SSAS instance should have enough memory looking at the numbers

- The processing does succeed without problems most of the time, so it doesn’t look like a structural issue

- When it fails, its during a “partial processing”, and not during the “full processing” job, which is strange, since we would expect that the “full processing” job generates a bigger impact on the resources.

Judging by the error message, we think the problem has to do with the “Analysis Services Execute DDL task”, and that there are maybe too many partitions being processed at once (we did set the “maxparallel” parameter on 0, so the server can decide how many It can handle).

We have been thinking about trying to split the xmla script up into smaller scripts with less partitions each and then execute those in separate “Analysis Services Execute DDL tasks”, in the hope that we can avoid the above error, but maybe someone here has a better suggestion/explanation why this error concurs sometimes, and how to avoid it?