Hello @CJ Hillbrand,

Thanks for the question and for using the MS Q&A platform.

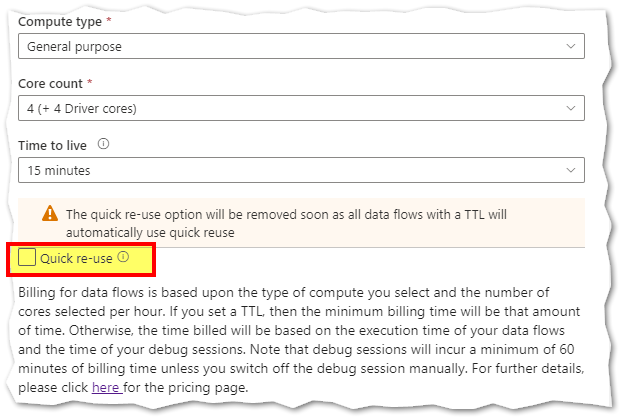

As per the description, my understanding is that you have an Azure IR with TTL set (and the Quick re-use feature unchecked/disabled), and you would like to know why the Azure IR still tries to acquire compute resources even though TTL is enabled. Please correct me if my understanding is incorrect.

By default, every data flow activity spins up a new Spark cluster based on the Azure IR configuration. A cold cluster start-up (that is, spinning up a new cluster) takes a few minutes, and data processing can't start until it completes. If your pipelines contain multiple sequential data flows, you can enable a time to live (TTL) value. Specifying a TTL keeps the cluster alive (only the cluster stays alive; its resources/VMs are paused) for a certain period after the last data flow job finishes executing. If a new job starts using the IR during the TTL window, it reuses the existing cluster, and the start-up time (here, the time needed to restart the paused resources of the existing cluster) is greatly reduced. After the second job completes, the cluster again stays alive for the TTL period.
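To make the TTL window concrete, here is a small Python sketch of the behaviour described above. It is only a simplified mental model, not how the service is implemented: the function name `start_type`, its parameters, and the choice to treat a job starting exactly at the TTL boundary as warm are all assumptions made for illustration.

```python
def start_type(prev_job_end_min, next_job_start_min, ttl_min):
    """Classify a data flow start as 'warm' or 'cold' in a simplified TTL model.

    All times are in minutes on the same clock. This helper is hypothetical
    and only illustrates the idea of the TTL window.
    """
    if prev_job_end_min is None:
        return "cold"  # no earlier job on this IR, so there is no cluster to reuse
    idle_min = next_job_start_min - prev_job_end_min
    # Assumption: a job that starts exactly at the TTL boundary still counts as warm.
    return "warm" if 0 <= idle_min <= ttl_min else "cold"


print(start_type(None, 0, ttl_min=10))  # cold: first job on the IR
print(start_type(5, 12, ttl_min=10))    # warm: 7 idle minutes, within the TTL
print(start_type(5, 30, ttl_min=10))    # cold: 25 idle minutes, TTL has expired
```

In this model, a job that arrives after the TTL has expired pays the full cold start again, which is one reason you can still see a long cluster acquisition even with TTL configured.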

You can further minimize the start-up time of warm clusters (clusters that are already spun up) by setting the Quick re-use option in the Azure integration runtime under Data Flow Properties. Enabling this option tells the service not to tear down the existing cluster after each job and to re-use it instead, essentially keeping the compute environment you've set in your Azure IR alive for up to the period of time specified in your TTL. This option gives the shortest start-up time for your data flow activities when they are executed from a pipeline.
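For reference, the two settings discussed above might look roughly like this in the integration runtime's JSON definition. This is a hedged sketch written as a Python dictionary: the IR name `DataFlowAzureIR` and the values are made up, and the property names (`timeToLive` in minutes, and `cleanup` set to `false` corresponding to Quick re-use being enabled) reflect my understanding of the definition, so please verify them against the JSON view of your own IR.

```python
import json

# Hypothetical Azure IR definition; names and values are illustrative only.
ir_definition = {
    "name": "DataFlowAzureIR",  # made-up IR name
    "properties": {
        "type": "Managed",
        "typeProperties": {
            "computeProperties": {
                "location": "AutoResolve",
                "dataFlowProperties": {
                    "computeType": "General",
                    "coreCount": 8,
                    "timeToLive": 10,  # TTL, assumed to be in minutes
                    # Assumption: cleanup=False means the cluster is not torn
                    # down after each job, i.e. Quick re-use is enabled.
                    "cleanup": False,
                },
            }
        },
    },
}

# Print the definition as JSON so it can be compared with the IR's JSON view.
print(json.dumps(ir_definition, indent=2))
```

Comparing this output with the JSON of your IR is a quick way to confirm whether TTL and Quick re-use are actually set the way you expect.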

Hope this info helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators