Hello @ankit kumar,

Thanks for the question and for using the Microsoft Q&A platform.

As I understand it, you have the asks below. Let me know if that's not accurate.

- How to insert data into the table using PySpark.

- How to add this table to the pipeline.

Just to let you know, Lake house is still under "preview" and we are actively working on it at this time.

I was able to use the code snippet below to insert data into the table.

%%sql
INSERT INTO db2.table_1 VALUES (1,'Product','Prodycclass');
INSERT INTO db2.table_1 VALUES (2,'Product2','Prodycclass2');

%%pyspark
df = spark.sql("SELECT * FROM db2.table_1")
df.show(10)

+---+--------+------------+
| ID|    Name|       Class|
+---+--------+------------+
|  2|Product2|Prodycclass2|
|  1| Product| Prodycclass|
+---+--------+------------+

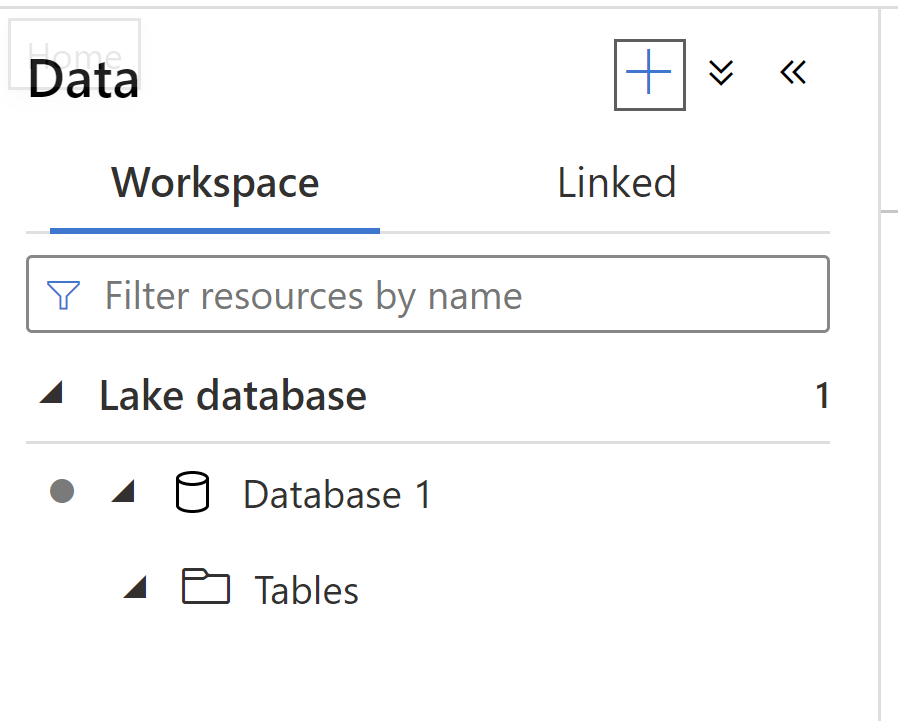
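If you would rather do the insert from PySpark itself instead of a %%sql cell, something along these lines should work. This is only a minimal sketch: the helper name `append_rows` is hypothetical, and it assumes the table `db2.table_1` already exists with columns in the order (ID, Name, Class) and with types compatible with the values passed in.

```python
def append_rows(spark, table_name, rows, columns):
    """Append rows to an existing table using the DataFrame API.

    `spark` is the SparkSession (already defined in a Synapse notebook).
    `rows` is a list of tuples, `columns` a list of column names.
    """
    # Build a DataFrame from plain Python tuples; column types are inferred.
    df = spark.createDataFrame(rows, schema=columns)
    # insertInto matches columns by position, not by name, so the tuple
    # order must follow the table's column order.
    df.write.mode("append").insertInto(table_name)
    return df


# Example call from a notebook cell (illustrative values only):
# append_rows(spark, "db2.table_1",
#             [(3, "Product3", "Prodycclass3")],
#             ["ID", "Name", "Class"])
```

After the call you can rerun the SELECT from the snippet above to confirm that the new row is there.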

Note: Once you create the lake database and table, make sure that you commit all the changes and publish them. Only after you do this will they be discovered by the Spark clusters.

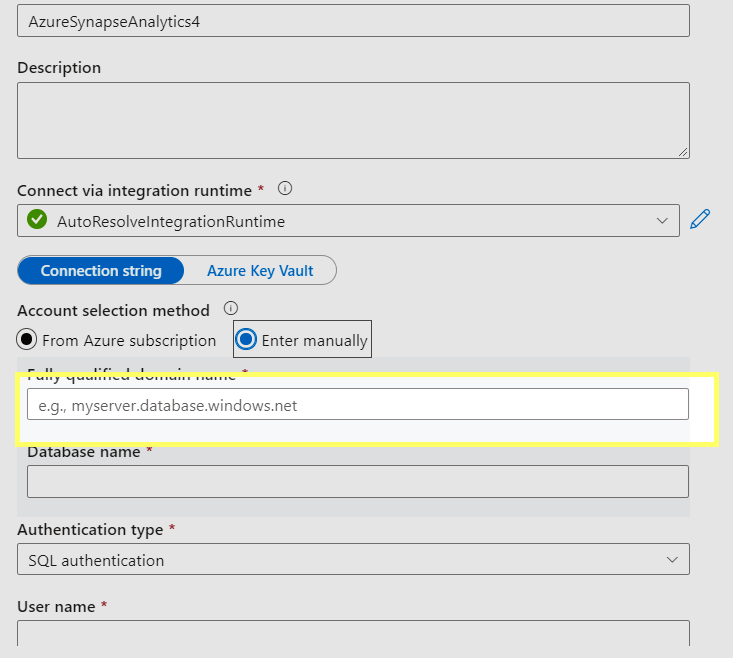
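To confirm from a notebook that the published table is visible to the Spark pool, a quick check like the one below may help. It is a rough sketch: the helper name `table_is_visible` is hypothetical, and `db2` / `table_1` are simply the names used in this example.

```python
def table_is_visible(spark, database, table):
    """Return True if `table` is listed in `database` in the Spark catalog."""
    # spark.catalog.listTables returns one entry per table in the database;
    # names are compared case-insensitively.
    names = [t.name.lower() for t in spark.catalog.listTables(database)]
    return table.lower() in names


# Example call from a notebook cell:
# print(table_is_visible(spark, "db2", "table_1"))
```

If this returns False right after creating the table, check that the changes were actually published.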

To view this db/table from the pipeline, please create a linked service for Synapse, select the manual option, and pass the URL of the serverless instance along with the other details.

Please do let me know if you have any queries.

Thanks

Himanshu
