Hello @Anonymous,

Thanks for the question and for using the MS Q&A platform.

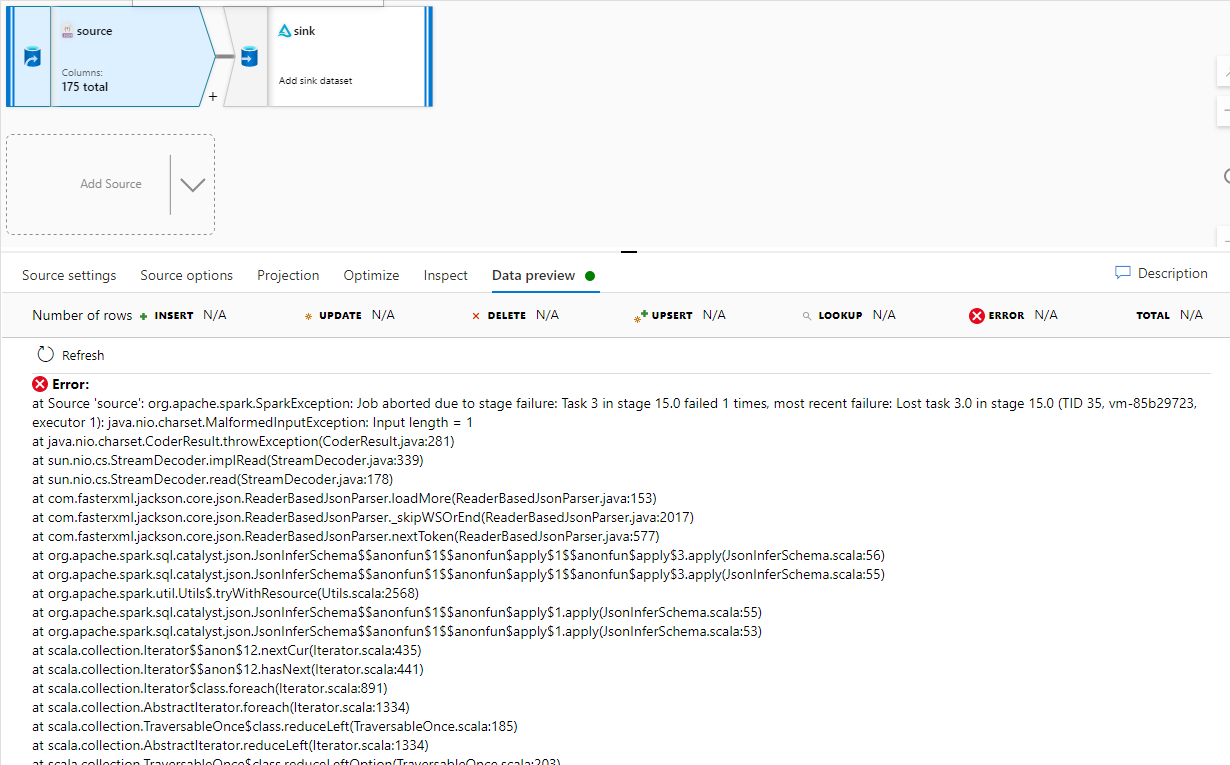

My understanding is that you are receiving the above error while trying to preview data from a JSON file used as the source in a Mapping Data Flow. However, when you use the same file as the source in a Copy activity, there is no issue, which confirms that there is nothing wrong with the data itself. Please correct me if I have misunderstood the ask.

If the above understanding is correct, the problem most likely lies in the source settings of your Mapping Data Flow rather than in the data.

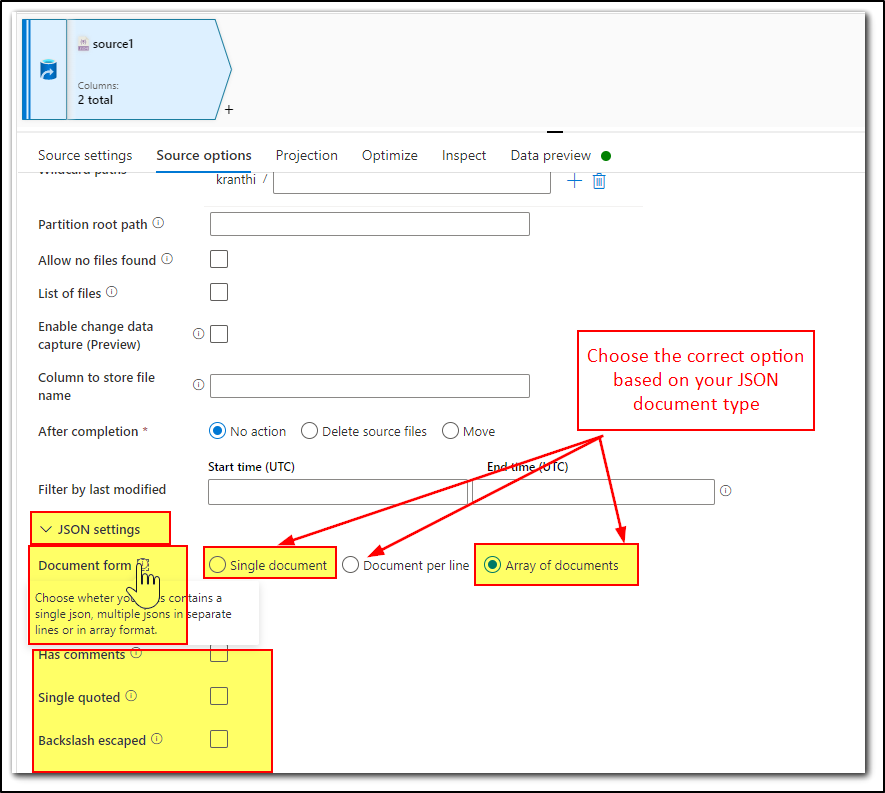

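We recommend making sure that the JSON settings under the Source options tab are configured to match the structure of your file (for example, whether it contains a single JSON document, one document per line, or an array of documents).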

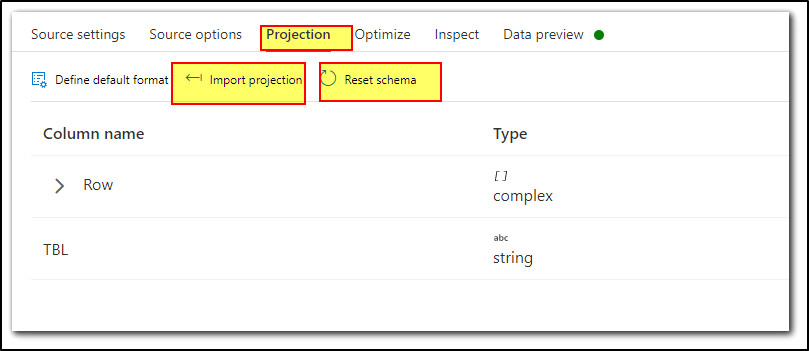
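If you are not sure which JSON setting matches your file, a quick local check may help before changing the source options. The sketch below is a hypothetical Python helper (it is not part of Azure Data Factory) that assumes the file is standard JSON and guesses which Document form option applies: a top-level array suggests Array of documents, one JSON value per line suggests Document per line, and one value spanning the whole file suggests Single document. The option labels reflect the Mapping Data Flow JSON settings as we understand them, so please verify them against the UI in your factory. If the helper returns `None`, the file may use non-standard syntax (such as comments or single-quoted strings) that needs the other JSON settings enabled, or it may be malformed.

```python
import json
from typing import Optional


def guess_document_form(text: str) -> Optional[str]:
    """Guess which JSON 'Document form' option a file's contents match.

    Hypothetical helper for illustration only; the returned labels mirror
    the Mapping Data Flow UI options but are plain strings here.
    """
    stripped = text.strip()
    if not stripped:
        return None

    # Case 1: the whole file parses as one JSON value.
    try:
        value = json.loads(stripped)
    except json.JSONDecodeError:
        pass
    else:
        # A top-level array (typically of objects) -> "Array of documents".
        if isinstance(value, list):
            return "Array of documents"
        return "Single document"

    # Case 2: every non-empty line is its own JSON value (JSON Lines style).
    lines = [line for line in stripped.splitlines() if line.strip()]
    try:
        for line in lines:
            json.loads(line)
    except json.JSONDecodeError:
        # Not strict JSON in either form: the file may rely on comments,
        # single quotes, or unquoted names, or it may simply be malformed.
        return None
    return "Document per line"


if __name__ == "__main__":
    print(guess_document_form('[{"id": 1}, {"id": 2}]'))  # Array of documents
    print(guess_document_form('{"id": 1}\n{"id": 2}'))     # Document per line
    print(guess_document_form('{"id": 1, "tags": ["a"]}'))  # Single document
```

To check your own file, pass its contents to the helper, for example `guess_document_form(open("sample.json", encoding="utf-8").read())`, where `sample.json` is a placeholder name.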

Before previewing the data, configure the JSON settings under the Source options tab. Then, on the Projection tab, check whether you can see the schema; if not, try Reset schema or Import projection. After that, try previewing the data again.

I tried to reproduce the issue but was unable to. If the above steps don't resolve your issue, please share a sample JSON file in your response (note: save the file with a .txt extension so it can be attached here), along with screenshots of your Source settings, Source options (with JSON settings expanded), Projection, and Inspect tabs. This will help us reproduce the issue on our end.

Hope this helps. Please let us know if you have any further queries.
