i am also facing same issue please anyone have solution for this?

wrong datetime format from parquet with external table on synapse serverless

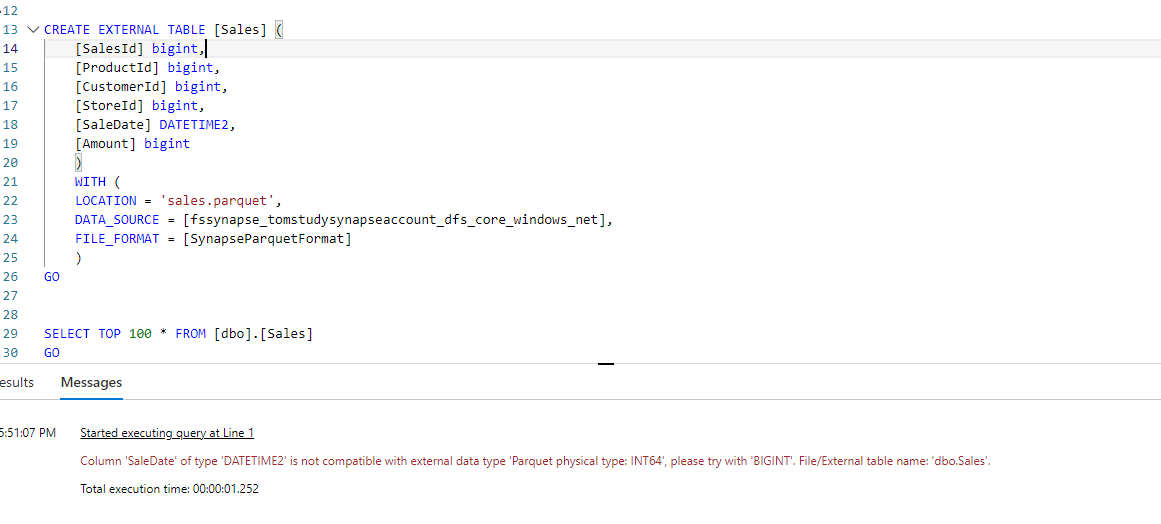

Hello everyone! I'm facing a problem while loading a parquet file(171118-salesparquet.txt rename removing txt externsion) in an external table in a Synapse serveless instance. In the file there's a column formatted as a datetime(Saledate). If I try to load it in an external table the datetime is always seen as a bigint and there's no way to cast it otherwise.

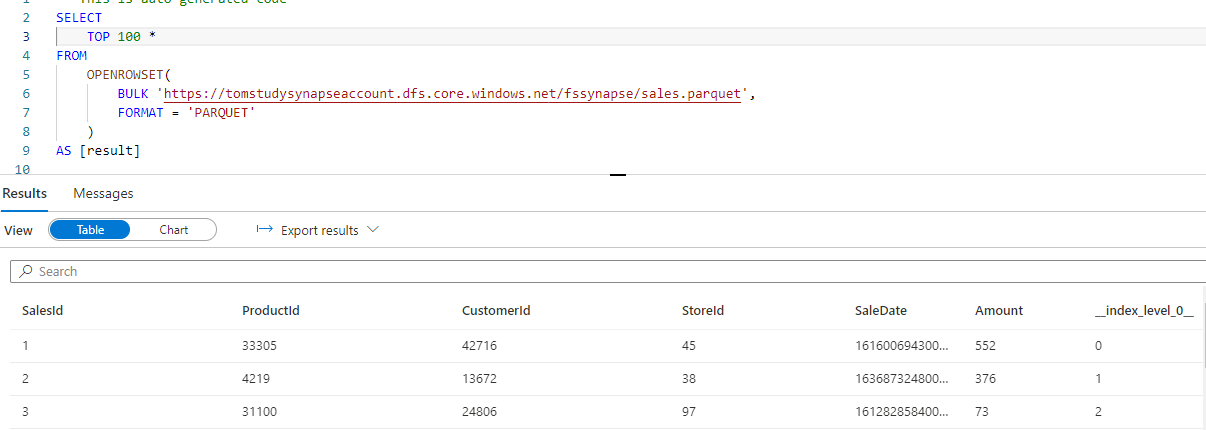

If I try to look at the data using CETA the column id recognized as int:

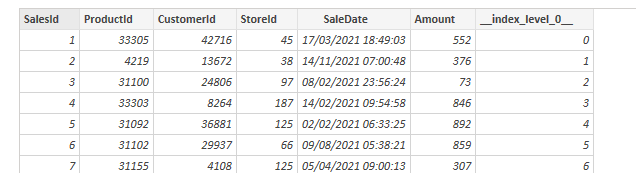

To check things out I tried to open the same file with Power Query in Power BI and the columns is correctly recognized as a datetime:

Do you have any advice/tip/solution for this?

2 answers

Sort by: Most helpful

-

-

AnnuKumari-MSFT 32,161 Reputation points Microsoft Employee

AnnuKumari-MSFT 32,161 Reputation points Microsoft Employee2022-02-15T12:18:49.947+00:00 Hi @Tommaso Capelli ,

Welcome to Microsoft Q&A platform and thanks for posting your query. Apologies for delay in response.

As I understand your requirement , Currently you have dates that appear to be not valid datetime2 values in the parquet and it is stopping the querying of the file.It might be different version of parquet read using to read parquet file. Say, you use spark 2.4 to write the file, it will use Julian calendar. However, when serverless reads it, it will use Gregorian calendar which causes the problem.

One possible solution in Synapse architecture might be,

- In spark 2.4 pool, read the parquet file and write as json.

- In spark 3.0 pool, set spark.sql.legacy.parquet.int96RebaseModeInWrite to CORRECTED, and read staging json file and write to ADLS again as parquet. With that parquet file, the value is correct

Doc reference that can be considered:

Column is not compatible with external data type

Inserting value to batch for column type DATETIME2 failedHope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators