Hello @Joseph Chen,

Thanks for the question and for using the Microsoft Q&A platform.

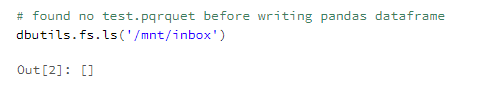

As I understand it, you are asking why you get an error when running the command:

df.to_parquet('/dbfs/mnt/inbox/test.parquet')

I am assuming that you are getting the error below:

FileNotFoundError: [Errno 2] No such file or directory: '/dbfs/mnt/inbox/test.parquet'

Please let me know if that is not accurate.

When I tried to reproduce the issue, I got the above error. When I checked DBFS, I did not have the path "/dbfs/mnt/inbox/test.parquet", but I did have the path "/dbfs/mnt/test.parquet".

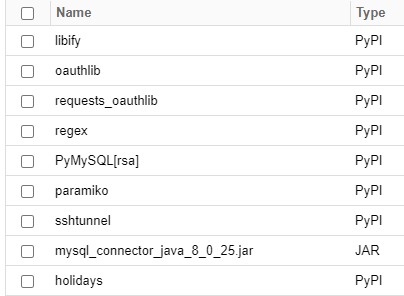

So the code below works fine for me:

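    # Databricks notebook source

    # Import pandas library
    import pandas as pd

    # Initialize list of lists
    data = [['tom', 10], ['nick', 15], ['juli', 14]]

    # Build the DataFrame from the list
    df = pd.DataFrame(data, columns=['Name', 'Age'])

    # Display the DataFrame in the notebook
    df

    # Write to a path that exists on DBFS
    df.to_parquet('/dbfs/mnt/test.parquet')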

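I am confident that the path you are referring to does not exist. pandas does not create missing parent directories, so the write fails if the "/dbfs/mnt/inbox" directory is not there.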
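If it helps, here is a minimal sketch of how you could check for the parent directory and create it before writing. The helper name `ensure_parent_dir` is my own and not part of pandas or Databricks. Please also note that this only creates a plain directory. If "inbox" is supposed to be a mount of external storage, I assume it would need to be mounted first instead of created this way.

```python
import os


def ensure_parent_dir(file_path: str) -> bool:
    """Create the parent directory of file_path if it is missing.

    Returns True if the directory had to be created,
    False if it already existed (or the path has no parent part).
    Hypothetical helper, shown for illustration only.
    """
    parent = os.path.dirname(file_path)
    if parent and not os.path.isdir(parent):
        os.makedirs(parent, exist_ok=True)
        return True
    return False


# Usage in the notebook (path taken from the question; df is the
# DataFrame you already have):
# target = '/dbfs/mnt/inbox/test.parquet'
# ensure_parent_dir(target)
# df.to_parquet(target)
```

If the function returns True, the directory was missing, which would explain the FileNotFoundError.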

Please let me know if you have any queries.

Thanks

Himanshu
