Hi @arkiboys,

Thank you for using the Microsoft Q&A platform and posting your query.

From your query, it seems that you are selecting data from a view inside a notebook and want to load that data into a Parquet file.

For this requirement, you can first create a DataFrame in the notebook and then use it to write the data out in Parquet format with the following commands:

df = spark.sql("select * from viewName")

df.write.parquet("abfss://<file_system>@<account_name>.dfs.core.windows.net/<path>/<file_name>")

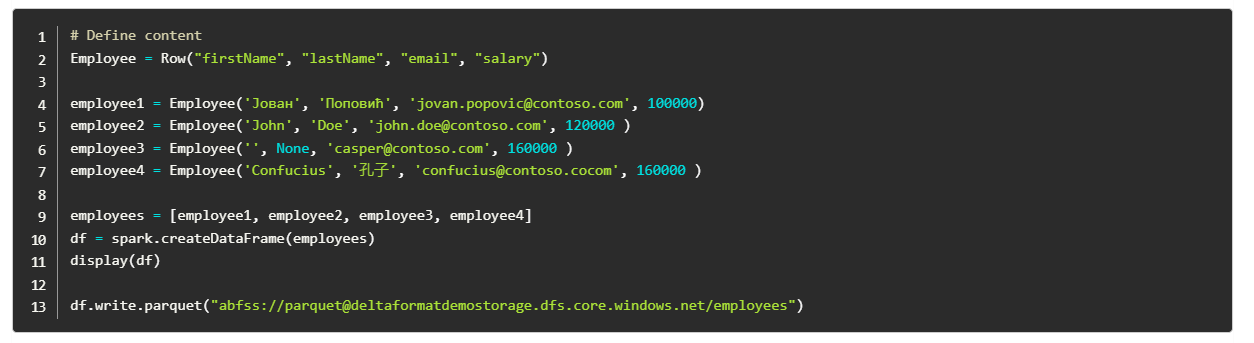

Please refer to the following code snippet for the same:
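If it helps, here is a slightly fuller sketch of the same two steps wrapped in helper functions. The names `abfss_path` and `write_view_to_parquet` and the sample values in the usage comment are hypothetical, and it assumes the notebook already provides a `spark` session. Note that Spark writes Parquet output as a folder of part files at the target path rather than as a single file, and that the default save mode raises an error if the path already exists, so the sketch sets the mode to `overwrite` explicitly.

```python
def abfss_path(file_system, account_name, path):
    """Build a URI of the form abfss://<file_system>@<account_name>.dfs.core.windows.net/<path>."""
    # Strip a leading slash so the URI does not end up with a double slash.
    return f"abfss://{file_system}@{account_name}.dfs.core.windows.net/{path.lstrip('/')}"


def write_view_to_parquet(spark, view_name, target, mode="overwrite"):
    """Select all rows from a view and write them to `target` in Parquet format."""
    df = spark.sql(f"select * from {view_name}")
    # "overwrite" replaces any existing output at `target`;
    # without an explicit mode, Spark fails if the path already exists.
    df.write.mode(mode).parquet(target)
    return df


# Usage inside a notebook, where `spark` is assumed to be predefined
# (placeholder values, replace with your own):
# target = abfss_path("<file_system>", "<account_name>", "<path>/<file_name>")
# write_view_to_parquet(spark, "viewName", target)
```

If you would rather add to existing output than replace it, pass `mode="append"` instead.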

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to click on Accept Answer or the upvote button whenever the information provided helps you.

- Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators