Hello @Venkatesh Srinivasan ,

Thanks for the question and for using the MS Q&A platform.

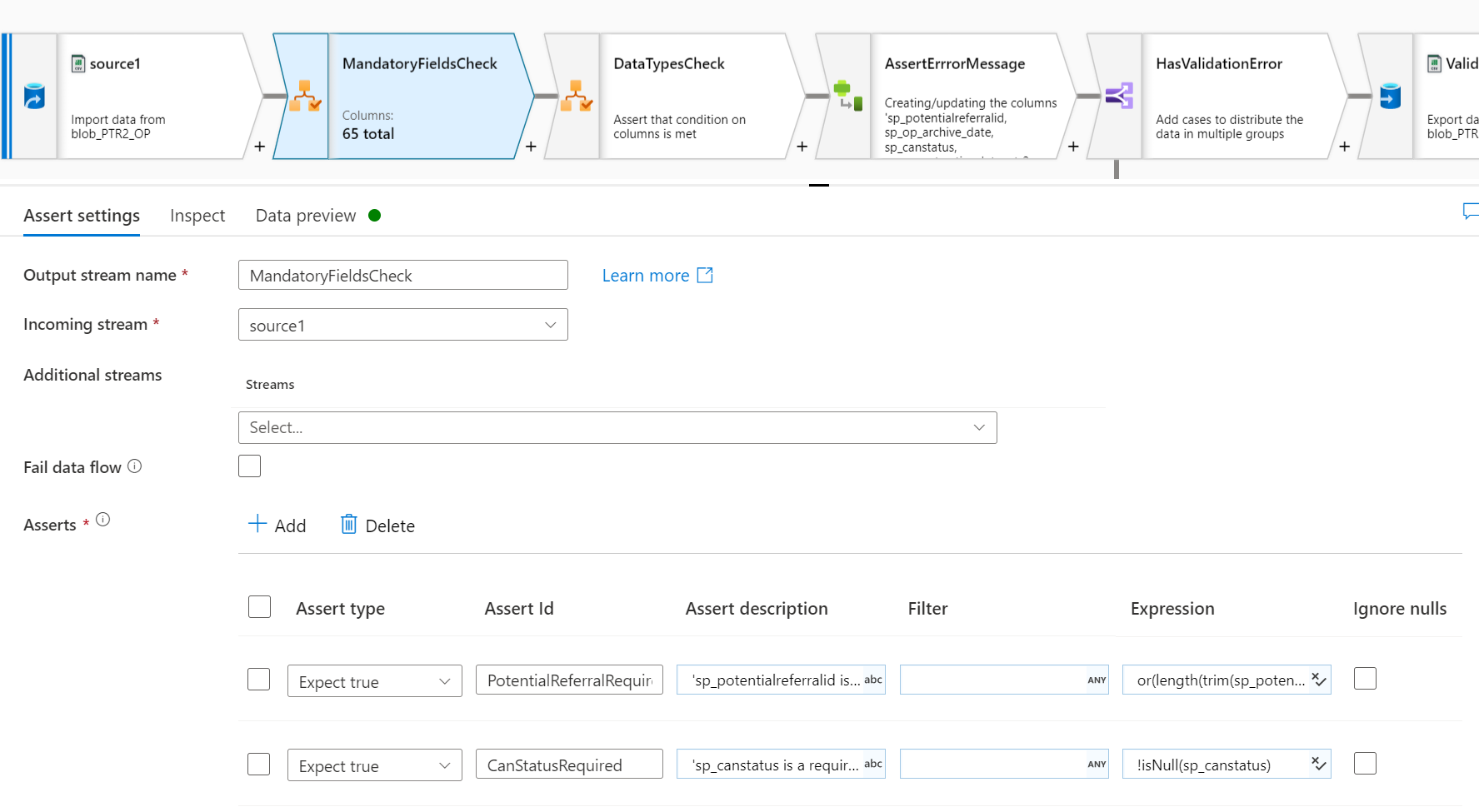

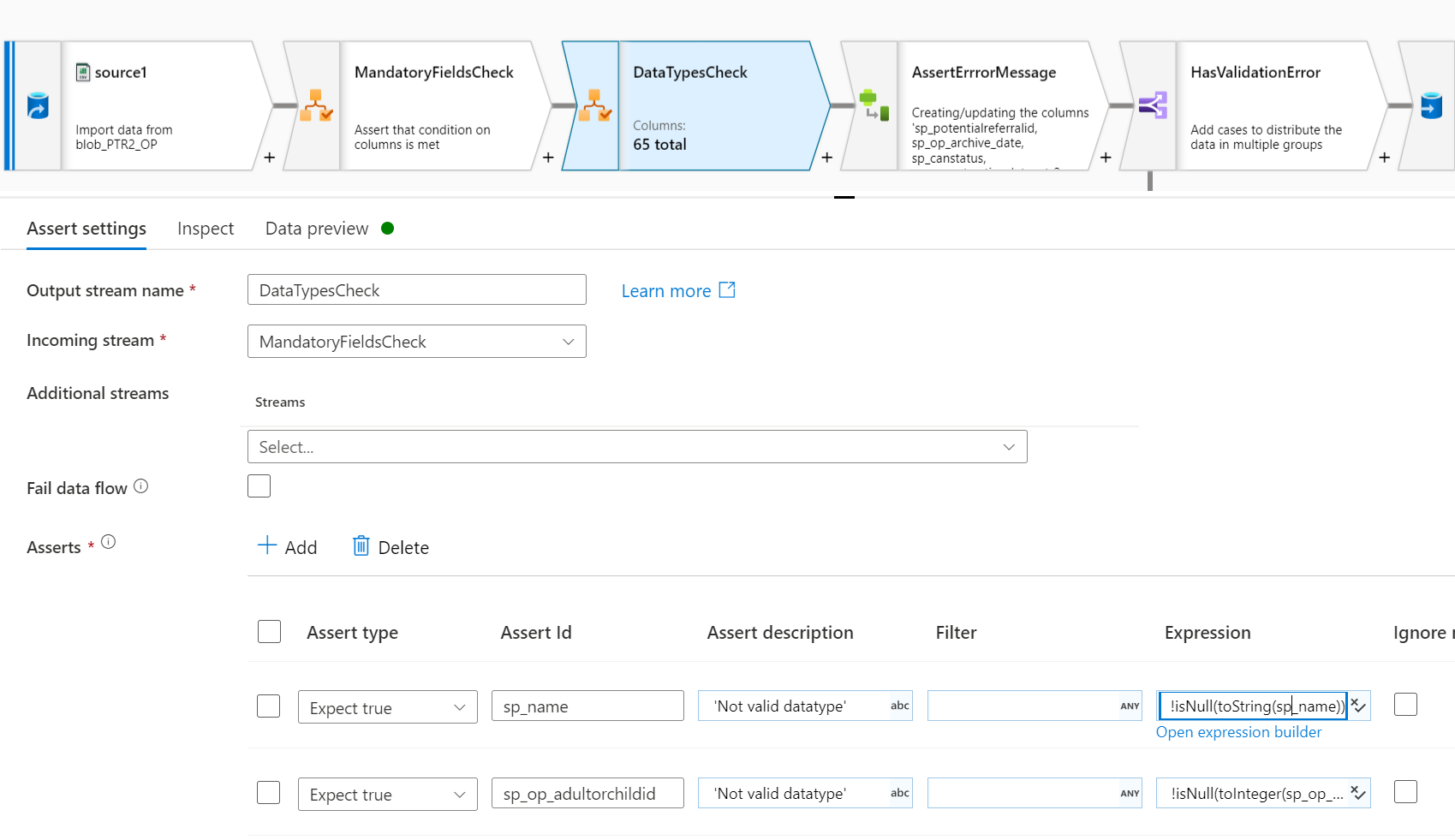

As we understand it, the ask here is to ensure that the incoming data has the correct types?

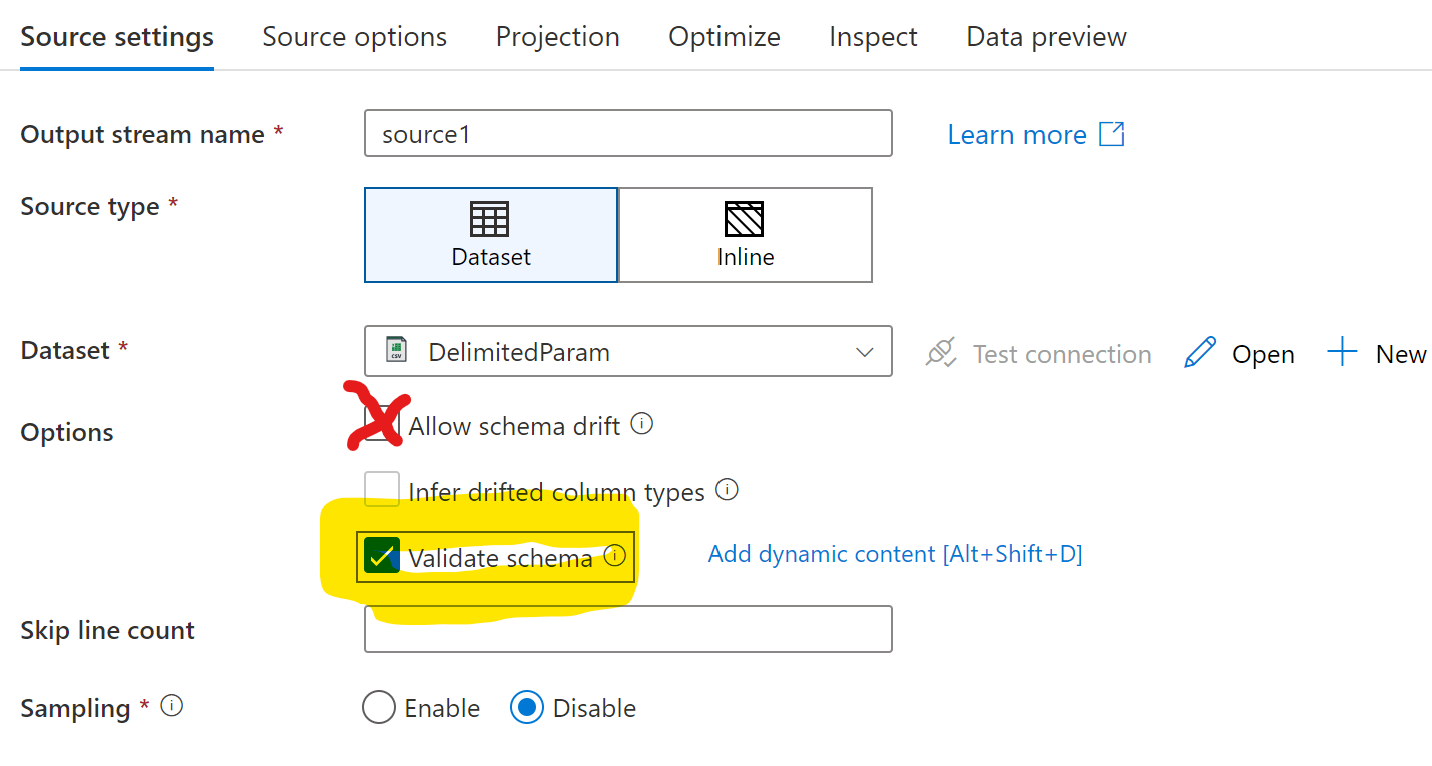

Instead of using Assertions, why not leverage the Validate schema option in the source settings? This option is available for dataset sources but not for inline sources. Setting Validate schema to true causes the Data Flow to fail whenever the schema defined in the projection does not match the schema read in from the source.

You will also want to turn off Allow schema drift, so that columns not defined in the projection are not simply passed through.
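To make the combined effect of these two settings concrete, here is a small Python sketch. It is only an illustration, not how Data Factory implements the check: it assumes the projection and the schema read in are both available as column-name-to-type mappings, and the names `validate_schema`, `SchemaDiff`, `SchemaMismatchError` and `allow_schema_drift` are hypothetical. It also assumes that allowing drift is best modeled as tolerating extra columns, while missing columns and type changes always fail.

```python
from dataclasses import dataclass, field


class SchemaMismatchError(Exception):
    """Raised when the incoming schema does not match the declared projection."""


@dataclass
class SchemaDiff:
    missing: list[str] = field(default_factory=list)  # declared in the projection, absent in the source
    extra: list[str] = field(default_factory=list)  # present in the source but not declared (drifted columns)
    wrong_type: dict[str, tuple[str, str]] = field(default_factory=dict)  # column -> (expected, actual)


def validate_schema(projection: dict[str, str], incoming: dict[str, str],
                    allow_schema_drift: bool = False) -> SchemaDiff:
    """Fail fast if the schema read in differs from the projection.

    Hypothetical model: with drift allowed, extra columns are tolerated;
    missing columns and type changes are always errors.
    """
    diff = SchemaDiff()
    for column, expected_type in projection.items():
        if column not in incoming:
            diff.missing.append(column)
        elif incoming[column] != expected_type:
            diff.wrong_type[column] = (expected_type, incoming[column])
    diff.extra = [c for c in incoming if c not in projection]

    failed = bool(diff.missing or diff.wrong_type) or (bool(diff.extra) and not allow_schema_drift)
    if failed:
        raise SchemaMismatchError(
            f"missing={diff.missing} extra={diff.extra} wrong_type={diff.wrong_type}"
        )
    return diff


if __name__ == "__main__":
    projection = {"id": "integer", "name": "string", "amount": "decimal"}

    # Exact match: passes.
    validate_schema(projection, {"id": "integer", "name": "string", "amount": "decimal"})

    # 'amount' arrived as a string: the run fails instead of loading bad data.
    try:
        validate_schema(projection, {"id": "integer", "name": "string", "amount": "string"})
    except SchemaMismatchError as err:
        print("pipeline would fail:", err)
```

Note how, in this sketch, an unexpected extra column only causes a failure when `allow_schema_drift` is `False`, which is why both settings matter together.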

Please do let me know if you have any queries.

Thanks

Martin

- Please don't forget to click on "Accept Answer" or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification
- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators