Hello @Anushree Agarwal,

Thanks for the question and for using the MS Q&A platform.

As we understand it, the ask here is to either troubleshoot your line of code or explain why it works in Synapse but not locally.

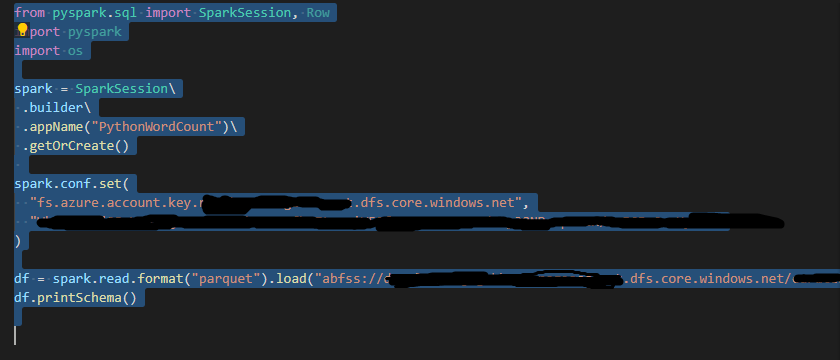

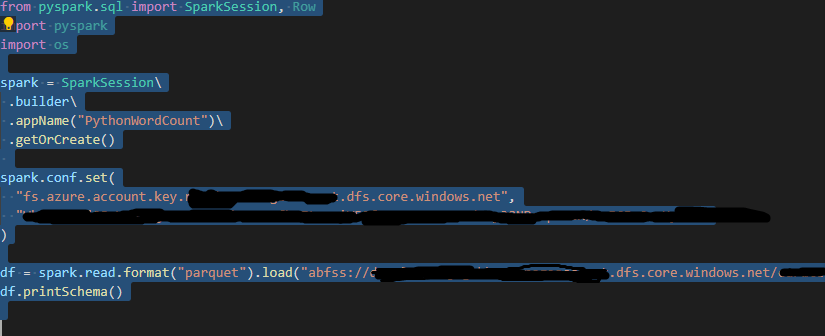

The line in question being:

df = spark.read.format("parquet").load("abfss://{v_containerName}@{v_accountName}.dfs.core.windows.net/<Path to parquet file>")

There are several things of note here. First, possible typos or substitutions. I see you have put {curly braces} around v_containerName and v_accountName. I assume this is either for substitution or for redaction, and that the real values are correct on your end. You also have <Path to parquet file>. This placeholder is written differently and looks like it was copied from somewhere else, so I suspect it is an error and you forgot to replace it with the actual path.
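If the curly braces are meant for substitution, note that Python only fills them in when the string is an f-string (or is passed through `.format()`). Here is a minimal sketch of building the path that way. The helper name `build_abfss_uri` and the container, account, and file path values are hypothetical and only there for illustration.

```python
def build_abfss_uri(container: str, account: str, path: str) -> str:
    """Return an abfss:// URI for a path inside an ADLS Gen2 container."""
    # The leading "f" makes Python substitute the {placeholders}.
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# Hypothetical values, replace them with your own.
v_containerName = "mycontainer"
v_accountName = "mystorageaccount"

uri = build_abfss_uri(v_containerName, v_accountName, "data/sample.parquet")
print(uri)

# The resulting string can then be passed to Spark:
# df = spark.read.format("parquet").load(uri)
```

Without the `f` prefix, the literal text `{v_containerName}` would be sent to Spark as part of the path.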

Second, I do not see any place where you provide credentials or authentication. Unless your Data Lake Gen2 container has been set to public access, some form of authentication is required. In Synapse, credential passthrough is built in and uses Azure Active Directory to handle authentication in the background, so you don't have to code it explicitly. This feature is not available when you run on your local machine, because your local session is not integrated with Azure Active Directory in that way, so you have to supply credentials yourself.
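As one possible way to supply credentials locally, the sketch below passes a storage account key to Spark through the Hadoop property `fs.azure.account.key.<account>.dfs.core.windows.net`. This is an assumption about your setup, not the only option (a service principal with OAuth is another). The helper names, the app name, and the environment variable `AZURE_STORAGE_KEY` are hypothetical.

```python
import os


def account_key_conf(account: str, account_key: str) -> dict:
    """Build the Spark config entry that hands an account key to the ABFS driver."""
    # The "spark.hadoop." prefix tells Spark to copy the property
    # into the underlying Hadoop configuration.
    key = f"spark.hadoop.fs.azure.account.key.{account}.dfs.core.windows.net"
    return {key: account_key}


def build_session(account: str, account_key: str):
    """Create a local SparkSession configured with the account key."""
    # Imported here so the helper above can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("adls-local-read")
    for name, value in account_key_conf(account, account_key).items():
        builder = builder.config(name, value)
    return builder.getOrCreate()


# Hypothetical usage: read the key from an environment variable
# rather than hard-coding it in the script.
# spark = build_session("mystorageaccount", os.environ["AZURE_STORAGE_KEY"])
```

Keeping the key out of the source file avoids accidentally committing it to version control.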

Third, the error message includes:

java.io.IOException: No FileSystem for scheme: abfss

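This means your local Spark installation does not know how to handle the abfss scheme. At a minimum, you will need to install the driver for it (the ABFS connector ships in the Hadoop hadoop-azure module). Also keep in mind that plain Python is not PySpark: the Synapse runtime comes with Spark and these connectors already set up, while a local Python environment does not.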
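One common way to make the driver available to a local PySpark session is to let Spark download the `hadoop-azure` package through `spark.jars.packages`. The sketch below assumes that approach. The helper names and app name are hypothetical, and the version shown in the usage comment is only an example: it has to match the Hadoop version your local Spark build uses.

```python
def hadoop_azure_package(hadoop_version: str) -> str:
    """Return the Maven coordinate of the hadoop-azure module for a Hadoop version."""
    return f"org.apache.hadoop:hadoop-azure:{hadoop_version}"


def build_session_with_driver(hadoop_version: str):
    """Create a SparkSession that pulls in the ABFS driver at startup."""
    # Imported here so the helper above can be used without pyspark installed.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .appName("adls-local-read")
        # Spark resolves this coordinate from Maven and adds the jars to the classpath.
        .config("spark.jars.packages", hadoop_azure_package(hadoop_version))
        .getOrCreate()
    )


# Hypothetical usage, assuming a Spark build based on Hadoop 3.3.4:
# spark = build_session_with_driver("3.3.4")
```

The package has to be configured before the session is created; setting it on an already running session has no effect.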

Please do let me know if you have any queries.

Thanks

Martin

- Please don't forget to accept the answer or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators