Hello @Arindam Pandit,

Thanks for the question and for using the MS Q&A platform.

As we understand it, the ask here is to read the records from Cosmos DB and write them to JSON. Please let us know if that is not accurate.

To write the data to cloud storage (Azure Data Lake Storage Gen2), you can follow this document: https://learn.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-directory-file-acl-python
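The upload code in the linked document expects an existing `service_client`, i.e. a `DataLakeServiceClient` for the storage account. A minimal sketch of creating one with an account key, assuming a Data Lake Storage Gen2 account (hierarchical namespace enabled); the helper names `dfs_account_url` and `get_service_client` are hypothetical, and the account name and key are placeholders you supply:

```python
def dfs_account_url(account_name: str) -> str:
    """Return the Data Lake Storage Gen2 (DFS) endpoint for a storage account."""
    return f"https://{account_name}.dfs.core.windows.net"


def get_service_client(account_name: str, account_key: str):
    """Create a DataLakeServiceClient authenticated with the account key.

    Hypothetical helper; requires the azure-storage-file-datalake package.
    """
    # Imported inside the function so dfs_account_url works without the SDK
    from azure.storage.filedatalake import DataLakeServiceClient

    return DataLakeServiceClient(
        account_url=dfs_account_url(account_name),
        credential=account_key,
    )
```

An account key keeps the sketch short; in production, Microsoft Entra ID credentials (for example from `azure-identity`) are usually preferred over embedding keys.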

Extract from the linked document:

Upload a file to a directory

First, create a file reference in the target directory by creating an instance of the DataLakeFileClient class. Upload a file by calling the DataLakeFileClient.append_data method. Make sure to complete the upload by calling the DataLakeFileClient.flush_data method.

This example uploads a text file to a directory named my-directory.

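```python
from azure.storage.filedatalake import DataLakeServiceClient


def upload_file_to_directory(service_client: DataLakeServiceClient):
    try:
        # Get clients for the target file system (container) and directory
        file_system_client = service_client.get_file_system_client(file_system="my-file-system")
        directory_client = file_system_client.get_directory_client("my-directory")

        # Create the file reference in the target directory
        file_client = directory_client.create_file("uploaded-file.txt")

        # Read as bytes so len() is a byte count; the with-block closes the file
        with open("C:\\file-to-upload.txt", "rb") as local_file:
            file_contents = local_file.read()

        # Stage the data, then commit it by flushing at the final byte position
        file_client.append_data(data=file_contents, offset=0, length=len(file_contents))
        file_client.flush_data(len(file_contents))
    except Exception as e:
        print(e)
```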
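The extract uploads the whole file with one `append_data` call and commits it with `flush_data`. For files too large to read in one go, the same pattern can be applied chunk by chunk: each `append_data` call starts at the byte offset where the previous one ended, and a single `flush_data` at the total length commits everything. A minimal sketch, assuming `file_client` is a `DataLakeFileClient` (only its `append_data` and `flush_data` methods are used); the helper name `upload_in_chunks` and the 4 MiB chunk size are hypothetical, arbitrary choices:

```python
from typing import BinaryIO


def upload_in_chunks(file_client, stream: BinaryIO, chunk_size: int = 4 * 1024 * 1024) -> int:
    """Append a binary stream to a Data Lake file in chunks, then commit once.

    Returns the total number of bytes written.
    """
    offset = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        # Each append must start where the previous append ended
        file_client.append_data(data=chunk, offset=offset, length=len(chunk))
        offset += len(chunk)
    # Commit all appended data; flush_data takes the total length of the file
    file_client.flush_data(offset)
    return offset
```

For example, `with open("C:\\file-to-upload.txt", "rb") as f: upload_in_chunks(directory_client.create_file("uploaded-file.txt"), f)`. Flushing once at the end, rather than after every chunk, keeps the number of commit calls to one.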

Please do let me know if you have any further questions.

For the benefit of others in the community, below is the code I used to enumerate the records in a container; it worked for me.

```python
from azure.cosmos import CosmosClient

url = "https://yourcosmosdbcoreurl.documents.azure.com:443/"
key = "accesskey"  # Cosmos DB account key (primary or secondary)

database_name = 'himashu'
container_name = 'container1'

# Authenticate with the account key and get clients for the database and container
client = CosmosClient(url, credential=key)
database = client.get_database_client(database_name)
container = database.get_container_client(container_name)

# Read every item; cross-partition queries must be enabled explicitly
for item in container.query_items(
        query='SELECT * FROM c',
        enable_cross_partition_query=True):
    print(item['id'], item)
```

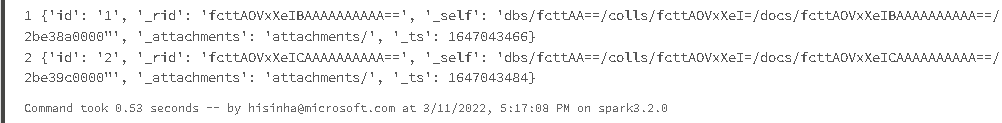

Output
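Since the original ask is to write the records to JSON, the query results can be serialized with the standard `json` module and uploaded with the same append/flush pattern as the extract. A minimal sketch; the helper names `items_to_json` and `upload_json` are hypothetical, `file_client` is assumed to be a `DataLakeFileClient`, and dropping the Cosmos DB system properties (`_rid`, `_self`, `_etag`, `_attachments`, `_ts`) is an optional choice:

```python
import json
from typing import Any, Dict, Iterable, List

# System properties Cosmos DB adds to every item (optional to keep)
SYSTEM_PROPERTIES = {"_rid", "_self", "_etag", "_attachments", "_ts"}


def items_to_json(items: Iterable[Dict[str, Any]], drop_system: bool = True) -> str:
    """Serialize Cosmos DB query results to a JSON array string."""
    records: List[Dict[str, Any]] = []
    for item in items:
        if drop_system:
            item = {k: v for k, v in item.items() if k not in SYSTEM_PROPERTIES}
        records.append(item)
    return json.dumps(records, indent=2)


def upload_json(file_client, payload: str) -> int:
    """Upload a JSON string as UTF-8 bytes to a Data Lake file and commit it."""
    data = payload.encode("utf-8")  # length must be in bytes, not characters
    file_client.append_data(data=data, offset=0, length=len(data))
    file_client.flush_data(len(data))
    return len(data)
```

For example, with the `container` from the script above and a `directory_client` obtained as in the extract, `upload_json(directory_client.create_file("cosmos-export.json"), items_to_json(container.query_items(query='SELECT * FROM c', enable_cross_partition_query=True)))` would write all items to `cosmos-export.json` (a placeholder file name). Note that this builds the whole export in memory before uploading.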

Please do let me know if you have any queries.

Thanks

Himanshu
