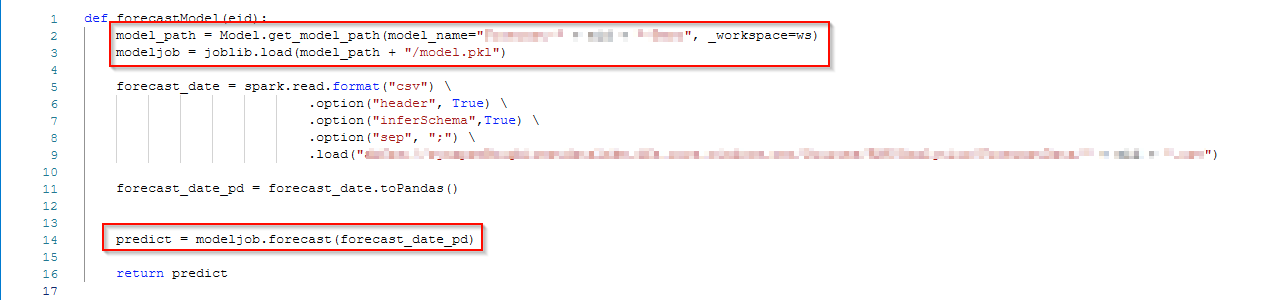

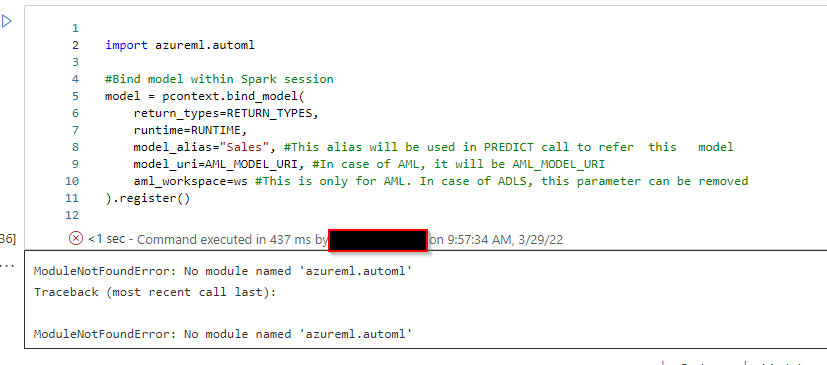

I get the following error with Apache Spark version 3.1: ModuleNotFoundError: No module named 'azureml.automl'

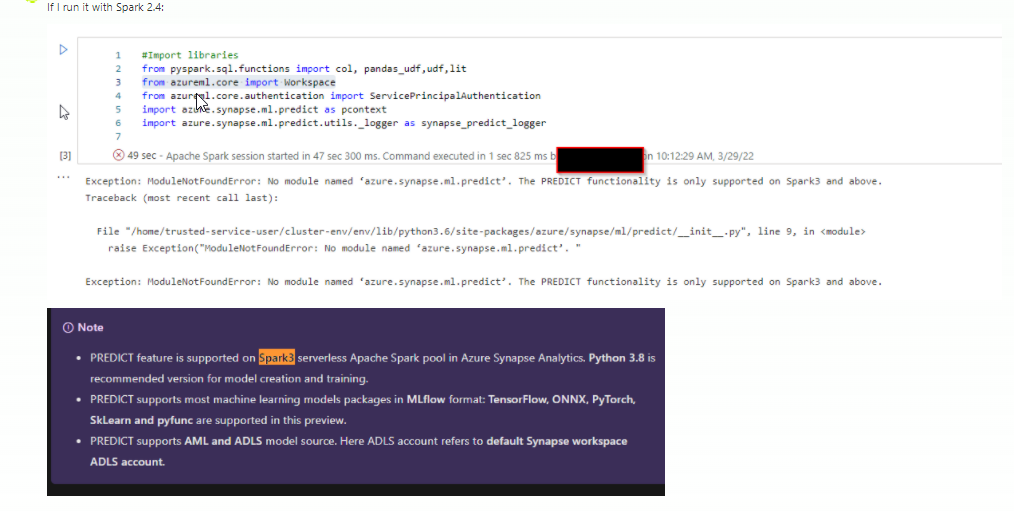

with version 2.4

I solved it. In my case it works best like this:

Hello @Anonymous,

Thanks for the question and for using the MS Q&A platform.

If you run import azureml.automl on the Apache Spark 3.1 runtime, it fails with the error No module named 'azureml.automl'.

As mentioned in the official documentation, please try using the following import instead:

from notebookutils.mssparkutils import azureML

It should then work as expected.

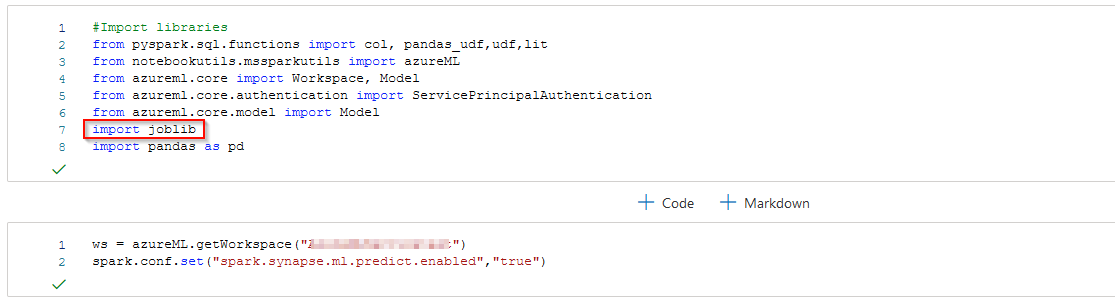

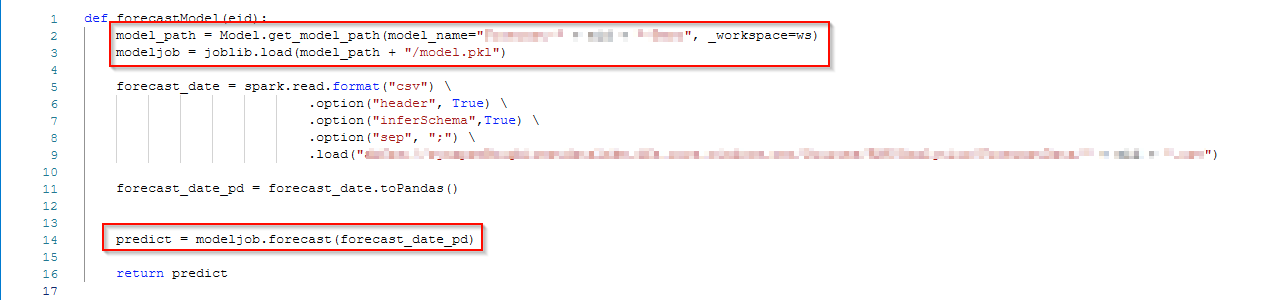
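Before running the notebook, it can help to confirm which of the two modules the current runtime actually provides. The following is a minimal sketch using only the Python standard library; the helper name `module_available` is hypothetical and not part of any Azure package.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if `name` can be located in the current Python environment."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # find_spec raises ModuleNotFoundError (an ImportError) when a parent
        # package such as `azureml` is itself missing.
        return False


# Module names taken from the question and the suggested fix.
for name in ("azureml.automl", "notebookutils.mssparkutils"):
    status = "available" if module_available(name) else "missing"
    print(f"{name}: {status}")
```

If `azureml.automl` is reported as missing while `notebookutils.mssparkutils` is available, the import from `notebookutils.mssparkutils` is the one to use.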

Here is the sample notebook from "Score machine learning models with PREDICT in serverless Apache Spark pools":

#!/usr/bin/env python

# coding: utf-8

# ## Azure_Synapse_ML_predict

# In[Cell-1]:

from notebookutils.mssparkutils import azureML

# In[Cell-2]:

ws = azureML.getWorkspace("AzureMLService")

# In[Cell-3]:

from azureml.core import Workspace, Model

model = Model(ws, id="linear_regression:1")

model.download('./')

# In[Cell-4]:

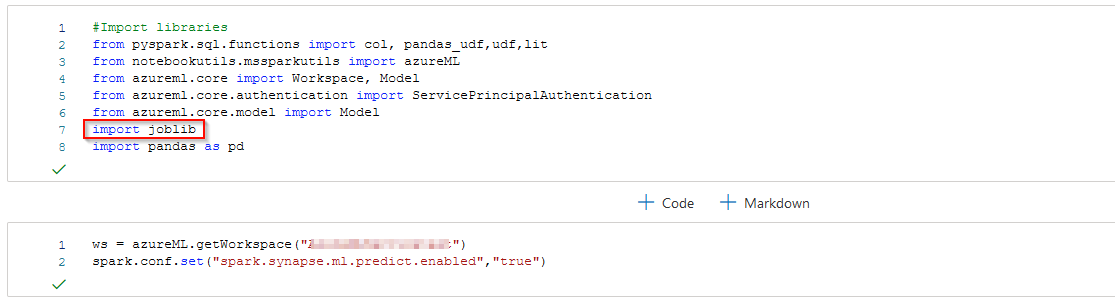

from pyspark.sql.functions import col, pandas_udf, udf, lit

from notebookutils.mssparkutils import azureML

from azureml.core import Workspace, Model

from azureml.core.authentication import ServicePrincipalAuthentication

import azure.synapse.ml.predict as pcontext

import azure.synapse.ml.predict.utils._logger as synapse_predict_logger

spark.conf.set("spark.synapse.ml.predict.enabled", "true")

# In[Cell-5]:

AML_MODEL_URI_SKLEARN = "aml://linear_regression:1"

# In[Cell-6]:

model = pcontext.bind_model(

return_types="Array<float>",

runtime="mlflow",

model_alias="linear_regression:1",

model_uri=AML_MODEL_URI_SKLEARN,

aml_workspace=ws

).register()

# In[Cell-7]:

DATA_FILE = "abfss://******@cheprasynapse.dfs.core.windows.net/AML/LengthOfStay_cooked_small.csv"

df = spark.read.format("csv").option("header", "true").csv(DATA_FILE, inferSchema=True)

df.createOrReplaceTempView('data')

df.show(10)

# In[Cell-8]:

# Call PREDICT using the Spark SQL API

predictions = spark.sql(

"""

SELECT PREDICT('linear_regression:1',

hematocrit,neutrophils,sodium,glucose,bloodureanitro,creatinine,bmi,pulse,respiration)

AS predict FROM data

"""

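)

# show() returns None, so keep the DataFrame in `predictions` and display it separately

predictions.show()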
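When the feature list changes often, the PREDICT query string can be assembled from a list of column names instead of being typed by hand. This is only a sketch: `build_predict_query` is a hypothetical helper, and it assumes the model alias and view name are trusted values, since they are interpolated directly into the SQL text.

```python
from typing import Sequence


def build_predict_query(model_alias: str, columns: Sequence[str], view: str) -> str:
    """Build a Spark SQL PREDICT query string (hypothetical helper, not an Azure API)."""
    if not columns:
        raise ValueError("at least one feature column is required")
    column_list = ", ".join(columns)
    return f"SELECT PREDICT('{model_alias}', {column_list}) AS predict FROM {view}"


# Feature columns and alias copied from the notebook above.
FEATURES = [
    "hematocrit", "neutrophils", "sodium", "glucose", "bloodureanitro",
    "creatinine", "bmi", "pulse", "respiration",
]

query = build_predict_query("linear_regression:1", FEATURES, "data")
print(query)
```

In the notebook, the resulting string would be passed to `spark.sql(query)` in place of the literal SQL text.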

Hope this helps. Please let us know if you have any further queries.
