Hello @AHMAD Muhammad ,

Welcome to the MS Q&A platform.

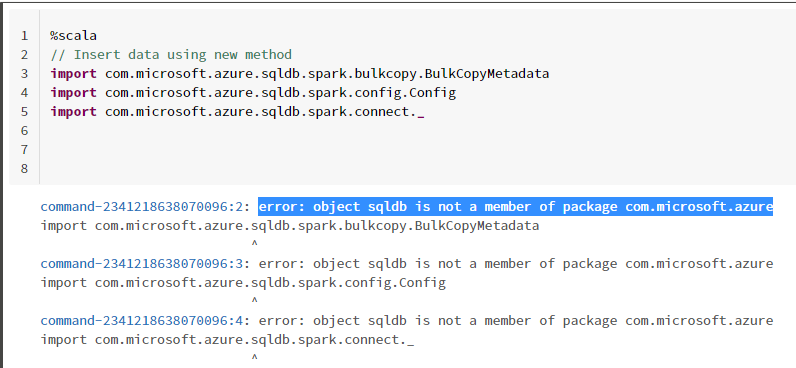

(UPDATE:5/4/2022): If you are getting the

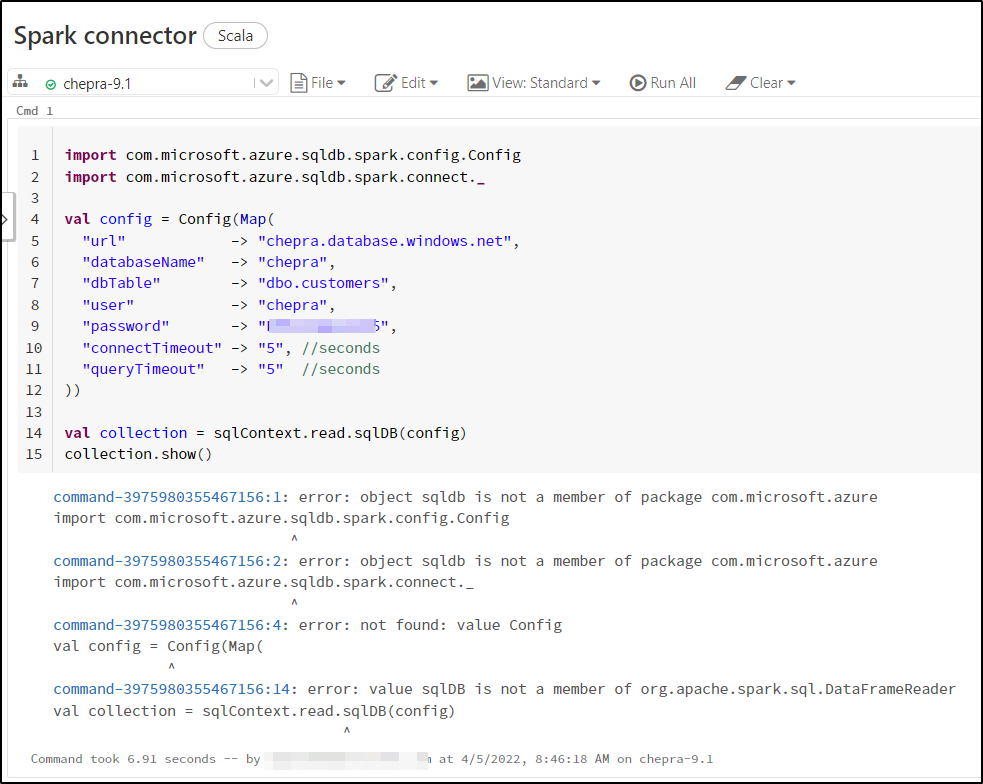

sqldberror means all other support libraries already imported to your notebook and only the latest JAR with dependencies are missing.

As per the repro, I got the same error message as shown above:

After bit of research, I had found that you will experience this error due to missing JAR with dependencies.

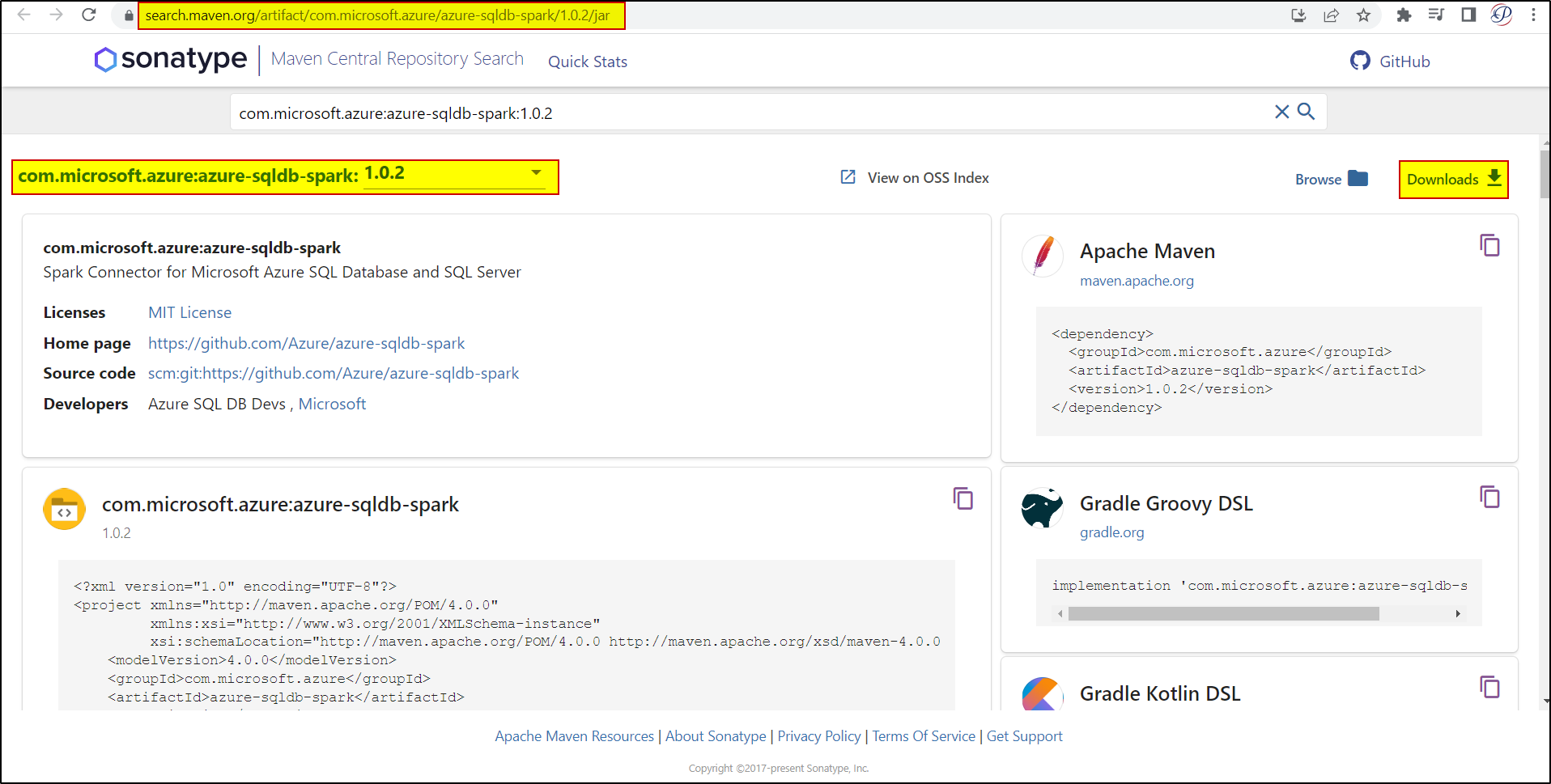

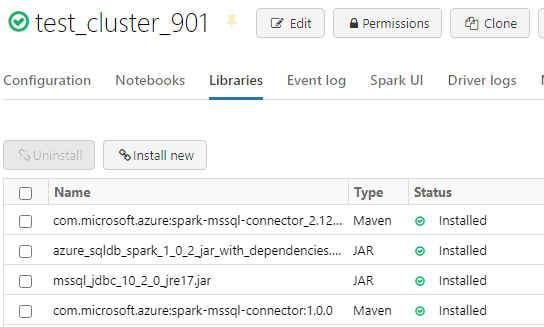

To resolve this issue, you need to download the JAR file from here: https://search.maven.org/artifact/com.microsoft.azure/azure-sqldb-spark/1.0.2/jar

After downloaded the jar file, upload the JAR library into the cluster and install it.

Note: After installing both the libraries, make sure to restart the cluster.

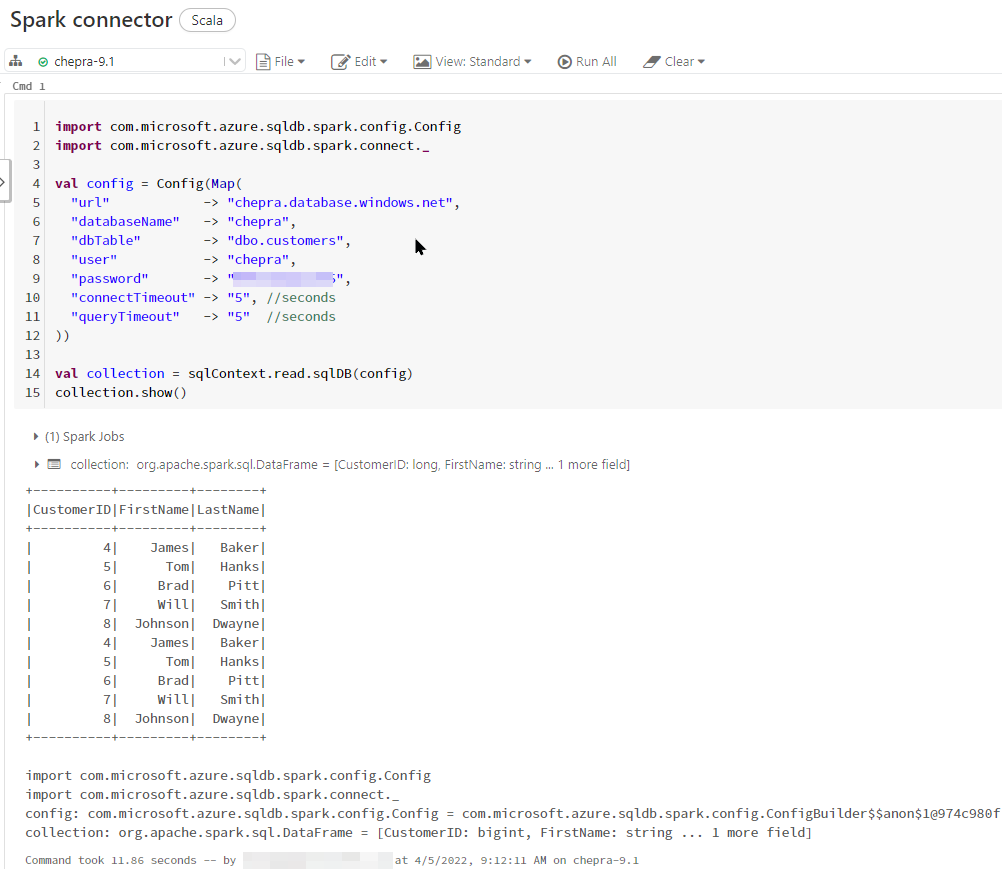

Now, you will be able to run the command successfully.

---------------------------------------

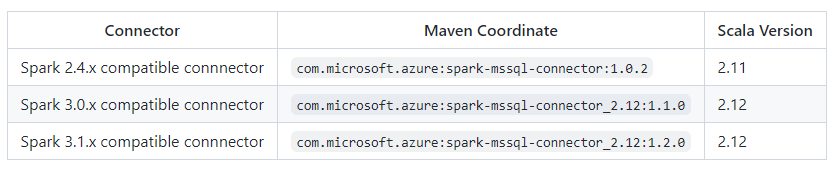

The GitHub repo for the old connector previously linked to from this page is not actively maintained. Instead, we strongly encourage you to evaluate and use the new connector.

There are three version sets of the connector available through Maven, a 2.4.x, a 3.0.x and a 3.1.x compatible version. All versions can be found here and can be imported using the coordinates below:

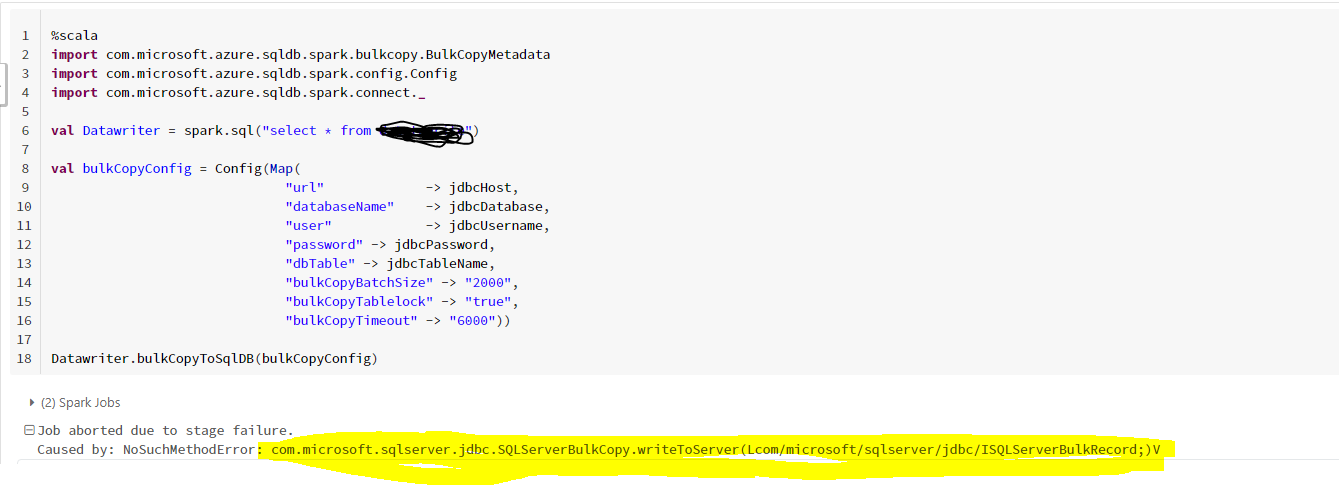

The traditional jdbc connector writes data into your database using row-by-row insertion. You can use the Spark connector to write data to Azure SQL and SQL Server using bulk insert. It significantly improves the write performance when loading large data sets or loading data into tables where a column store index is used.

import com.microsoft.azure.sqldb.spark.bulkcopy.BulkCopyMetadata

import com.microsoft.azure.sqldb.spark.config.Config

import com.microsoft.azure.sqldb.spark.connect._

/**

Add column Metadata.

If not specified, metadata is automatically added

from the destination table, which may suffer performance.

*/

var bulkCopyMetadata = new BulkCopyMetadata

bulkCopyMetadata.addColumnMetadata(1, "Title", java.sql.Types.NVARCHAR, 128, 0)

bulkCopyMetadata.addColumnMetadata(2, "FirstName", java.sql.Types.NVARCHAR, 50, 0)

bulkCopyMetadata.addColumnMetadata(3, "LastName", java.sql.Types.NVARCHAR, 50, 0)

val bulkCopyConfig = Config(Map(

"url" -> "mysqlserver.database.windows.net",

"databaseName" -> "MyDatabase",

"user" -> "username",

"password" -> "*********",

"dbTable" -> "dbo.Clients",

"bulkCopyBatchSize" -> "2500",

"bulkCopyTableLock" -> "true",

"bulkCopyTimeout" -> "600"

))

df.bulkCopyToSqlDB(bulkCopyConfig, bulkCopyMetadata)

//df.bulkCopyToSqlDB(bulkCopyConfig) if no metadata is specified.

For more details, refer to Accelerate real-time big data analytics using the Spark connector.

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators

3: /api/attachments/189984-image.png?platform=QnA 5: https://github.com/microsoft/sql-spark-connector

). This can be beneficial to other community members. Thank you.

). This can be beneficial to other community members. Thank you.